Linux Block IO: Introducing Multi-queue SSD Access on Multi-core Systems

Matias Bjørling*†, Jens Axboe†, David Nellans†, Philippe Bonnet*

*IT University of Copenhagen, {mabj,phbo}@itu.dk

†Fusion-io, {jaxboe,dnellans}@fusionio.com

ABSTRACT

The IO performance of storage devices has accelerated from hundreds of IOPS five years ago, to hundreds of thousands of IOPS today, and tens of millions of IOPS projected in five years. This sharp evolution is primarily due to the introduction of NAND-flash devices and their data parallel design. In this work, we demonstrate that the block layer within the operating system, originally designed to handle thousands of IOPS, has become a bottleneck to overall storage system performance, especially on the high NUMA-factor processor systems that are becoming commonplace. We describe the design of a next generation block layer that is capable of handling tens of millions of IOPS on a multi-core system equipped with a single storage device. Our experiments show that our design scales gracefully with the number of cores, even on NUMA systems with multiple sockets.

Categories and Subject Descriptors

D.4.2 [Operating System]: Storage Management—Sec-

ondary storage; D.4.8 [Operating System]: Performance—

measurements

General Terms

Design, Experimentation, Measurement, Performance.

Keywords

Linux, Block Layer, Solid State Drives, Non-volatile Memory, Latency, Throughput.

1 Introduction

As long as secondary storage has been synonymous with hard disk drives (HDD), IO latency and throughput have been shaped by the physical characteristics of rotational devices: random accesses that require disk head movement are slow, and sequential accesses that only require rotation of the disk platter are fast. Generations of IO-intensive algorithms and systems have been designed based on these two fundamental characteristics. Today, the advent of solid state disks (SSD) based on non-volatile memories (NVM)


SYSTOR ’13 June 30 - July 02 2013, Haifa, Israel

Copyright 2013 ACM 978-1-4503-2116-7/13/06 ...$15.00.

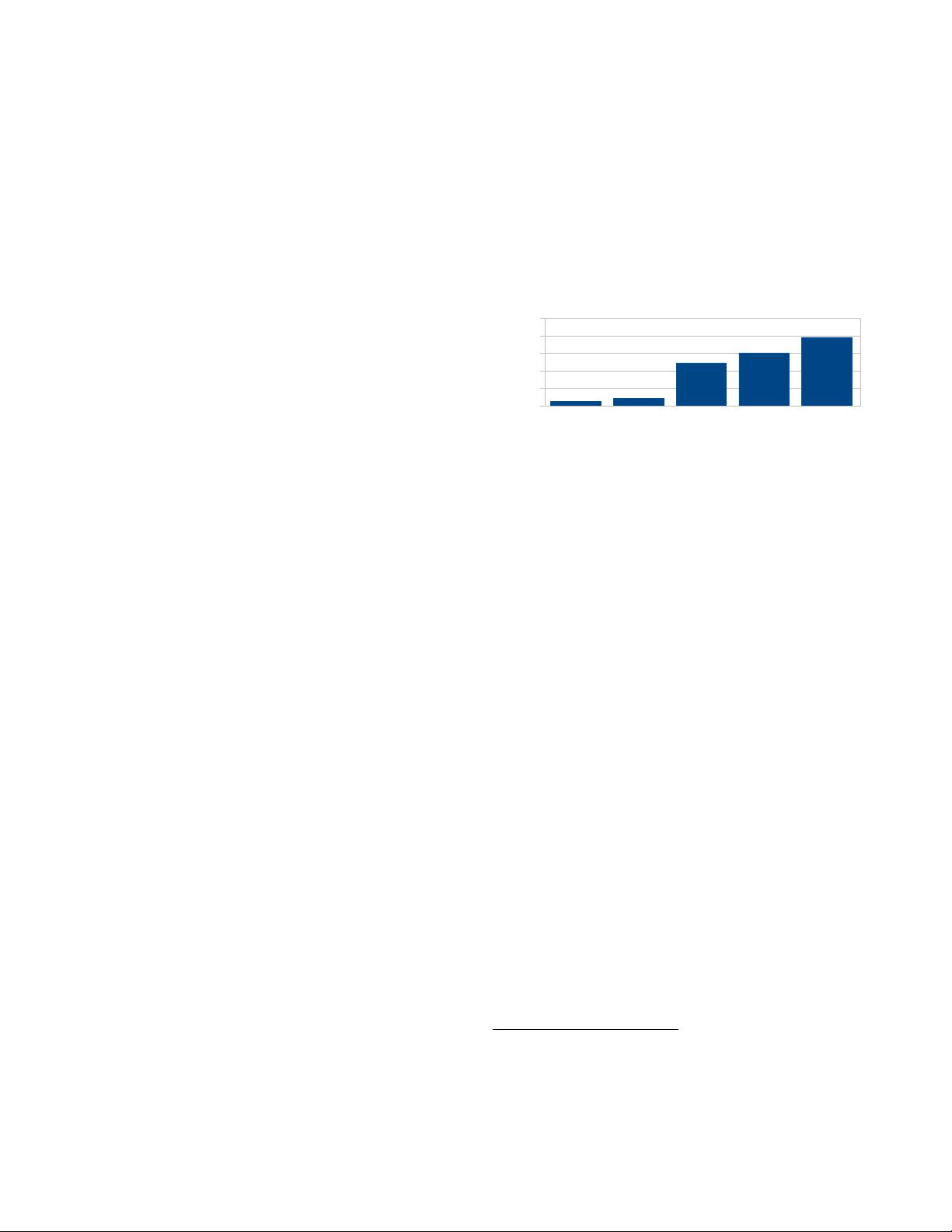

[Figure 1 plot: bar chart of 4K read IOPS (y-axis, 0 to 1M) for SSD 1 through SSD 5; legend: 2010, 2011, 2012; bar labels: 60000, 90000, 498000, 608000, 785000.]

Figure 1: IOPS for 4K random read for five SSD devices.

(e.g., flash or phase-change memory [11, 6]) is transforming the performance characteristics of secondary storage. SSDs often exhibit little latency difference between sequential and random IOs [16]. IO latency for SSDs is on the order of tens of microseconds, as opposed to tens of milliseconds for HDDs. Large internal data parallelism in SSDs enables many concurrent IO operations which, in turn, allows single devices to achieve close to a million IOs per second (IOPS) for random accesses, as opposed to just hundreds on traditional magnetic hard drives. In Figure 1, we illustrate the evolution of SSD performance over the last couple of years.
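The relation between latency, concurrency, and IOPS described above can be illustrated with Little's law (mean IOs in flight = throughput × mean latency). The following is a minimal sketch, not a measurement: the function names are hypothetical, and the 50 µs and 10 ms latencies are assumed round numbers chosen to match "tens of microseconds" and "tens of milliseconds".

```python
# Back-of-the-envelope sketch based on Little's law.
# All latencies below are assumed illustrative values, not measured data.

def required_outstanding_ios(target_iops: float, latency_s: float) -> float:
    """Mean number of IOs that must be in flight to sustain target_iops."""
    return target_iops * latency_s


def achievable_iops(outstanding_ios: float, latency_s: float) -> float:
    """Throughput reachable with a given number of IOs in flight."""
    return outstanding_ios / latency_s


SSD_LATENCY_S = 50e-6  # assumption: 50 microseconds per IO
HDD_LATENCY_S = 10e-3  # assumption: 10 milliseconds per IO

# Concurrency an SSD needs to reach one million IOPS at the assumed latency.
ssd_depth = required_outstanding_ios(1_000_000, SSD_LATENCY_S)

# Throughput of an HDD that serves one random IO at a time.
hdd_iops = achievable_iops(1, HDD_LATENCY_S)

print(f"SSD needs about {ssd_depth:.0f} IOs in flight for 1M IOPS")
print(f"HDD with one IO in flight reaches about {hdd_iops:.0f} IOPS")
```

Under these assumptions, a device can only approach a million IOPS if tens of IOs are serviced concurrently, which is why internal parallelism, rather than per-IO latency alone, determines SSD throughput.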

A similar, albeit slower, performance transformation has already been witnessed for network systems. Ethernet speed evolved steadily from 10 Mb/s in the early 1990s to 100 Gb/s in 2010. Such a regular evolution over a 20-year period has allowed for a smooth transition between lab prototypes and mainstream deployments over time. For storage, the rate of change is much faster. We have seen a 10,000x improvement over just a few years. The throughput of modern storage devices is now often limited by their hardware (i.e., SATA/SAS or PCI-E) and software interfaces [28, 26]. Such rapid leaps in hardware performance have exposed previously unnoticed bottlenecks at the software level, both in the operating system and application layers. Today, with Linux, a single CPU core can sustain an IO submission rate of around 800 thousand IOPS. Regardless of how many cores are used to submit IOs, the operating system block layer cannot scale up to over one million IOPS. This may be fast enough for today's SSDs - but not for tomorrow's.
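To put these rates in perspective, the arithmetic below converts them into a per-IO CPU budget. It is a rough sketch under stated assumptions: the function names are hypothetical, and the 2 GHz clock and the 8-core count are assumed example values. Only the 800 thousand IOPS per core and the one million IOPS ceiling come from the text above.

```python
# Rough per-IO budget arithmetic; clock rate and core count are assumptions.

def per_io_budget_us(iops_per_core: float) -> float:
    """CPU time available per IO, in microseconds, on one core."""
    return 1e6 / iops_per_core


def cycles_per_io(iops_per_core: float, clock_hz: float) -> float:
    """CPU cycles available per IO on one core at the given clock rate."""
    return clock_hz / iops_per_core


def per_core_share(total_iops_ceiling: float, cores: int) -> float:
    """IOPS each core contributes if the total is capped at a fixed ceiling."""
    return total_iops_ceiling / cores


SINGLE_CORE_IOPS = 800_000       # from the text: about 800 thousand IOPS per core
BLOCK_LAYER_CEILING = 1_000_000  # from the text: no scaling past one million IOPS
ASSUMED_CLOCK_HZ = 2e9           # assumption: 2 GHz core
ASSUMED_CORES = 8                # assumption: 8 submitting cores

budget_us = per_io_budget_us(SINGLE_CORE_IOPS)
budget_cycles = cycles_per_io(SINGLE_CORE_IOPS, ASSUMED_CLOCK_HZ)
share = per_core_share(BLOCK_LAYER_CEILING, ASSUMED_CORES)

print(f"Per-IO budget on one core: {budget_us:.2f} us ({budget_cycles:.0f} cycles)")
print(f"Per-core share at the ceiling with {ASSUMED_CORES} cores: {share:.0f} IOPS")
```

With these assumed values, a single core has little more than a microsecond per IO, and at the stated ceiling each of the eight cores would contribute far less than the roughly 800 thousand IOPS one core can sustain alone.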

We can expect that (a) SSDs are going to get faster, by increasing their internal parallelism¹ [9, 8] and (b) CPU

¹ If we look at the performance of individual NAND-flash chips, access times are getting slower, not faster [17]. Access time for individual flash chips increases with shrinking feature size and an increasing number of dies per package. The decrease in individual chip performance is compensated by improved parallelism within and across chips.