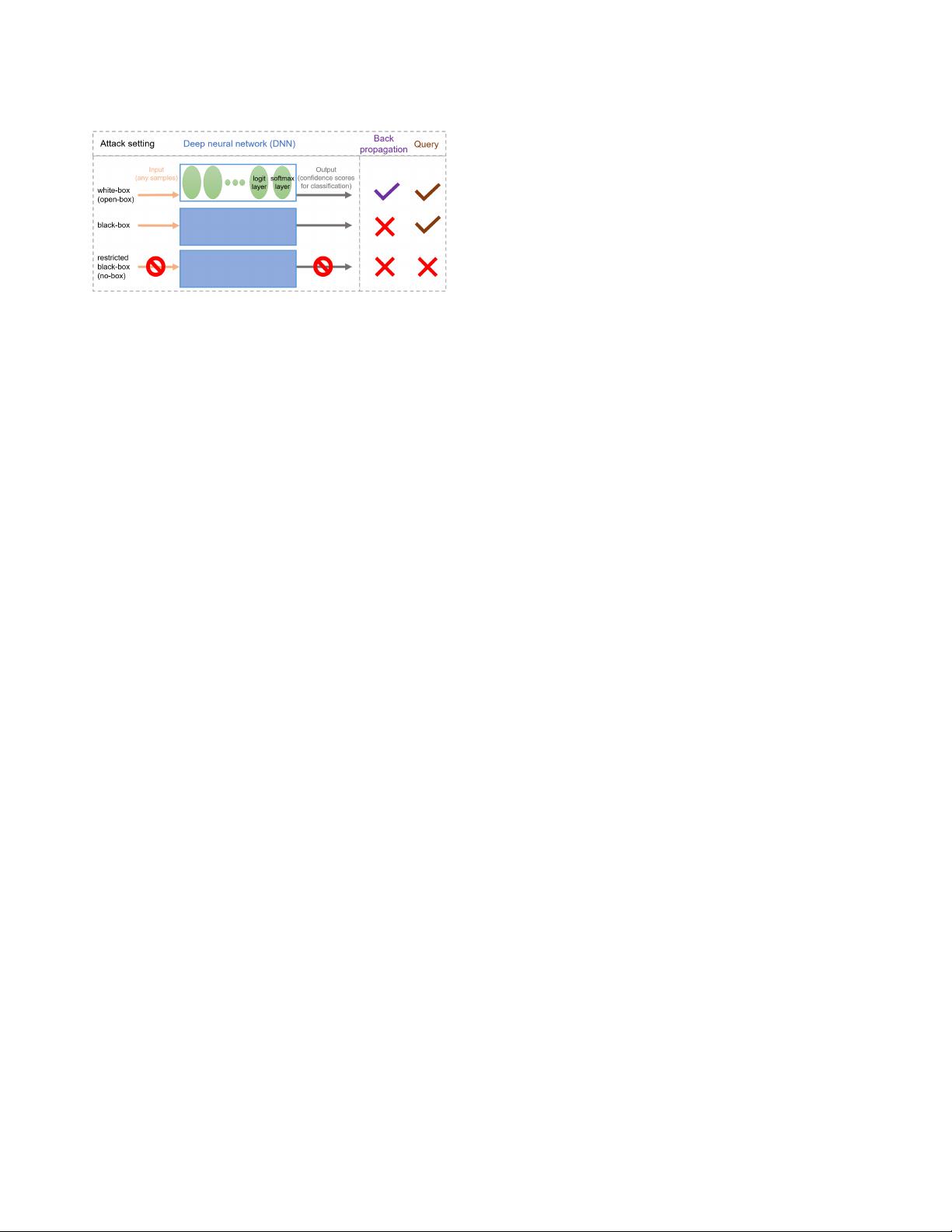

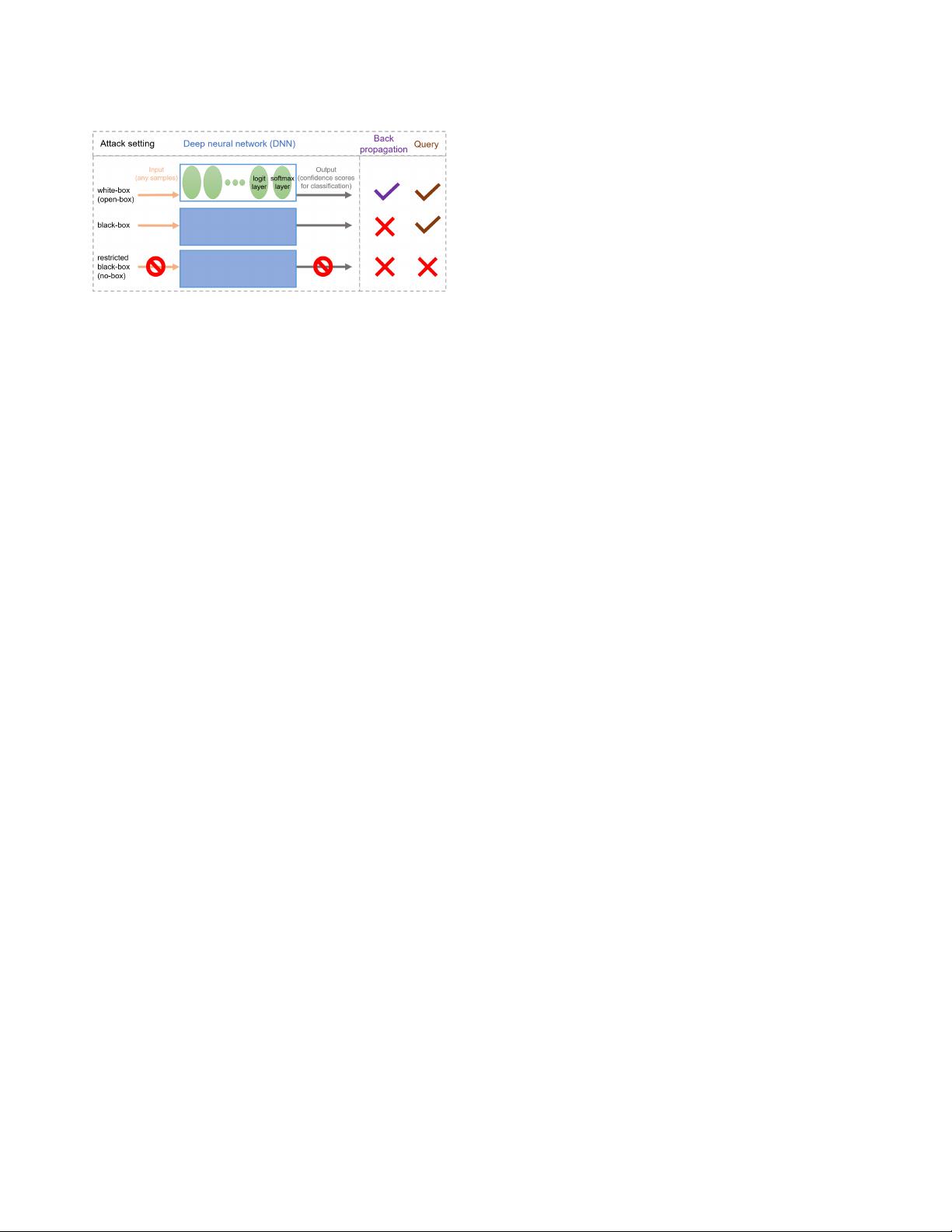

Figure 2: Taxonomy of adversarial attacks to deep neural networks (DNNs). “Back propagation” means an attacker can access the internal configurations in DNNs (e.g., performing gradient descent), and “Query” means an attacker can input any sample and observe the corresponding output.

successfully bypass 10 different detection methods designed for detecting adversarial examples [7].

• Transferability: In the context of adversarial attacks, transferability means that the adversarial examples generated from one model are also very likely to be misclassified by another model. In particular, the aforementioned adversarial attacks have demonstrated that their adversarial examples are highly transferable from one DNN at hand to the targeted DNN. One possible explanation of inherent attack transferability for DNNs lies in the findings that DNNs commonly have overwhelming generalization power and local linearity for feature extraction [40]. Notably, the transferability of adversarial attacks brings about security concerns for machine learning applications based on DNNs, as malicious examples may be easily crafted even when the exact parameters of a targeted DNN are unknown. More interestingly, the authors in [29] have shown that a carefully crafted universal perturbation to a set of natural images can lead to misclassification of all considered images with high probability, suggesting the possibility of attack transferability from one image to another. Further analysis and justification of universal perturbations are given in [30].

1.2 Black-box attacks and substitute models

While the definition of an open-box (white-box) attack on DNNs is clear and precise (the attacker has complete knowledge of, and full access to, a targeted DNN), the definition of a “black-box” attack on DNNs may vary with the capability of the attacker. From an attacker’s perspective, a black-box attack may refer to the most challenging case where only benign images and their class labels are given, the targeted DNN is completely unknown, and one is prohibited from querying any information from the targeted classifier for adversarial attacks. This restricted setting, which we call a “no-box” attack setting, excludes the principal adversarial attacks introduced in Section 1.1, as they all require certain knowledge of and back propagation through the targeted DNN. Consequently, under this no-box setting the research focus is mainly on the attack transferability from one self-trained DNN to a targeted but completely access-prohibited DNN.

On the other hand, in many scenarios an attacker does have the privilege to query a targeted DNN in order to obtain useful information for crafting adversarial examples. For instance, a mobile app or a piece of computer software featuring image classification (most likely based on DNNs) allows an attacker to input any image at will and acquire classification results, such as the confidence scores or ranking for classification. An attacker can then leverage the acquired classification results to design more effective adversarial examples to fool the targeted classifier. In this setting, back propagation for gradient computation of the targeted DNN is still prohibited, as back propagation requires knowledge of the internal configurations of a DNN, which are not available in the black-box setting. However, the adversarial query process can be iterated multiple times until an attacker finds a satisfactory adversarial example. For instance, the authors in [26] have demonstrated a successful black-box adversarial attack on Clarifai.com, which is a black-box image classification system.
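As a rough illustration of the iterated query process described above, the following minimal sketch runs a random-search attack that uses only the confidence scores returned by a classifier. It is not the method of [26] or of any cited work; the two-class linear target and the names `make_black_box`, `query_attack`, `eps`, and `step` are hypothetical choices made for this example.

```python
import math
import random


def make_black_box(weights, biases):
    """Build a query-only oracle that maps an input vector to confidence scores.

    The parameters live only inside the closure, standing in for a deployed
    classifier whose internals the attacker cannot inspect.
    """
    def query(x):
        logits = [sum(w * v for w, v in zip(row, x)) + b
                  for row, b in zip(weights, biases)]
        top = max(logits)
        exps = [math.exp(z - top) for z in logits]
        total = sum(exps)
        return [e / total for e in exps]
    return query


def predicted_label(scores):
    """Index of the highest confidence score (ties go to the lowest index)."""
    return max(range(len(scores)), key=lambda k: scores[k])


def query_attack(query, x, true_label, eps=0.5, step=0.05,
                 max_queries=2000, seed=0):
    """Random-search attack that uses only input/output queries.

    A candidate is kept when it lowers the confidence of the true class, and
    every coordinate stays within eps of the original input.
    Returns (adversarial_input, queries_used, success_flag).
    """
    rng = random.Random(seed)
    best = list(x)
    best_score = query(best)[true_label]
    used = 1
    while used < max_queries:
        cand = [min(max(b + rng.choice((-step, step)), x0 - eps), x0 + eps)
                for b, x0 in zip(best, x)]
        scores = query(cand)
        used += 1
        if scores[true_label] < best_score:
            best, best_score = cand, scores[true_label]
            if predicted_label(scores) != true_label:
                return best, used, True
    return best, used, False


# Hypothetical two-class target; the attacker only ever calls `oracle`.
oracle = make_black_box(weights=[[1.0, 0.0], [0.0, 1.0]], biases=[0.0, 0.0])
x_benign = [1.0, 0.5]
x_adv, n_queries, success = query_attack(oracle, x_benign, true_label=0)
```

No gradient of the target is ever computed here: each iteration costs one query, which is why the number of queries is the natural cost measure in this setting.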

Due to its feasibility, the case where an attacker can have free access to the input and output of a targeted DNN while still being prohibited from performing back propagation on the targeted DNN has been called a practical black-box attack setting for DNNs [8, 16, 17, 26, 34, 35]. For the rest of this paper, we also use the term black-box adversarial attack to refer to this setting. For illustration, the attack settings and their limitations are summarized in Figure 2. It is worth noting that under this black-box setting, existing attacking approaches tend to make use of the power of free query to train a substitute model [17, 34, 35], which is a representative substitute of the targeted DNN. The substitute model can then be attacked using any white-box attack technique, and the generated adversarial images are used to attack the targeted DNN. The primary advantage of training a substitute model is its total transparency to an attacker, and hence essential attack procedures for DNNs, such as back propagation for gradient computation, can be implemented on the substitute model for crafting adversarial examples. Moreover, since the substitute model is representative of a targeted DNN in terms of its classification rules, adversarial attacks on a substitute model are expected to be similar to attacking the corresponding targeted DNN. In other words, adversarial examples crafted from a substitute model can be highly transferable to the targeted DNN given the ability of querying the targeted DNN at will.
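The substitute-model pipeline described above can be sketched in a few lines. This is a toy illustration under strong assumptions, not the procedure of [17, 34, 35]: the target is a hypothetical hidden linear rule, the substitute is a logistic regression, and the white-box step is a fast-gradient-sign-style perturbation chosen only for brevity. All function names and parameter values are invented for this example.

```python
import math
import random


def black_box_label(x):
    """Query-only target: returns a class label, never its parameters.

    The hidden linear rule is a hypothetical stand-in for a deployed DNN.
    """
    return 1 if 2.0 * x[0] - 1.0 * x[1] + 0.1 > 0 else 0


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_substitute(inputs, labels, epochs=300, lr=0.5):
    """Fit a logistic-regression substitute to the labels returned by queries."""
    w, b = [0.0, 0.0], 0.0
    n = len(inputs)
    for _ in range(epochs):
        gw, gb = [0.0, 0.0], 0.0
        for x, y in zip(inputs, labels):
            err = sigmoid(w[0] * x[0] + w[1] * x[1] + b) - y
            gw[0] += err * x[0]
            gw[1] += err * x[1]
            gb += err
        w = [w[0] - lr * gw[0] / n, w[1] - lr * gw[1] / n]
        b -= lr * gb / n
    return w, b


def sign(v):
    return (v > 0) - (v < 0)


def gradient_sign_attack(w, b, x, y, eps):
    """White-box step on the substitute: move x along the sign of the loss gradient."""
    err = sigmoid(w[0] * x[0] + w[1] * x[1] + b) - y  # d(loss)/d(logit)
    return [xi + eps * sign(err * wi) for xi, wi in zip(x, w)]


# Step 1: spend free queries on the target to label attacker-chosen inputs.
rng = random.Random(0)
queries = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(500)]
answers = [black_box_label(q) for q in queries]

# Step 2: train a fully transparent substitute on the query results.
w_sub, b_sub = train_substitute(queries, answers)

# Step 3: attack the substitute with gradients, then test transfer to the target.
x_benign = [0.3, 0.2]
y_benign = black_box_label(x_benign)
x_adv = gradient_sign_attack(w_sub, b_sub, x_benign, y_benign, eps=0.3)
transferred = black_box_label(x_adv) != y_benign
```

The gradient is taken only through `w_sub` and `b_sub`, which the attacker owns; the target is touched only through `black_box_label`. Whether the perturbation transfers depends on how closely the substitute's decision rule matches the target's, which in this toy case is easy to achieve because both are linear.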

1.3 Defending adversarial attacks

One common observation from the development of security-related research is that attack and defense often come hand in hand, and one’s improvement depends on the other’s progress. Similarly, in the context of robustness of DNNs, more effective adversarial attacks are often driven by improved defenses, and vice versa. There is a vast literature on enhancing the robustness of DNNs. Here we focus on the defense methods that have been shown to be effective in tackling (a subset of) the adversarial attacks introduced in Section 1.1 while maintaining similar classification performance on benign examples. Based on the defense techniques, we categorize the defense methods proposed for enhancing the robustness of DNNs to adversarial examples as follows.

• Detection-based defense: Detection-based approaches aim to differentiate an adversarial example from a set of benign examples using statistical tests or out-of-sample analysis. Interested readers can refer to recent works in [10, 13, 18, 28, 42, 43] and references therein for details. In particular, feature squeezing is