of them has analyzed the SR performance of different chan-

nels, and the necessity of recovering all three channels.

2.2 Convolutional Neural Networks (CNN)

Convolutional neural networks date back decades [26], and
deep CNNs have recently shown explosive popularity,
partially due to their success in image classification [18], [25].
They have also been successfully applied to other computer
vision fields, such as object detection [33], [50], face recognition
[38], and pedestrian detection [34]. Several factors are
of central importance in this progress: (i) efficient training
implementations on modern powerful GPUs [25], (ii) the
proposal of the rectified linear unit (ReLU) [32], which
makes convergence much faster while still presenting good
quality [25], and (iii) easy access to an abundance of
data (like ImageNet [9]) for training larger models. Our
method also benefits from this progress.

2.3 Deep Learning for Image Restoration

There have been a few studies using deep learning techniques
for image restoration. The multi-layer perceptron
(MLP), whose layers are all fully connected (in contrast to
convolutional), has been applied to natural image denoising [3]
and post-deblurring denoising [35]. More closely related to
our work, the convolutional neural network has been applied to
natural image denoising [21] and to removing noisy patterns
(dirt/rain) [12]. These restoration problems are more or less
denoising-driven. Cui et al. [5] propose to embed auto-encoder
networks in their super-resolution pipeline under
the notion of the internal example-based approach [16]. The deep
model is not specifically designed to be an end-to-end solution,
since each layer of the cascade requires independent
optimization of the self-similarity search process and the
auto-encoder. In contrast, the proposed SRCNN optimizes
an end-to-end mapping. Further, the SRCNN is faster.
It is not only a quantitatively superior method, but
also a practically useful one.

3 CONVOLUTIONAL NEURAL NETWORKS FOR SUPER-RESOLUTION

3.1 Formulation

Given a single low-resolution image, we first upscale it to
the desired size using bicubic interpolation, which is the
only pre-processing we perform (see footnote 3). Let us denote
the interpolated image as Y. Our goal is to recover from Y an image
F(Y) that is as similar as possible to the ground truth
high-resolution image X. For ease of presentation, we still call
Y a “low-resolution” image, although it has the same size as
X. We wish to learn a mapping F, which conceptually
consists of three operations:

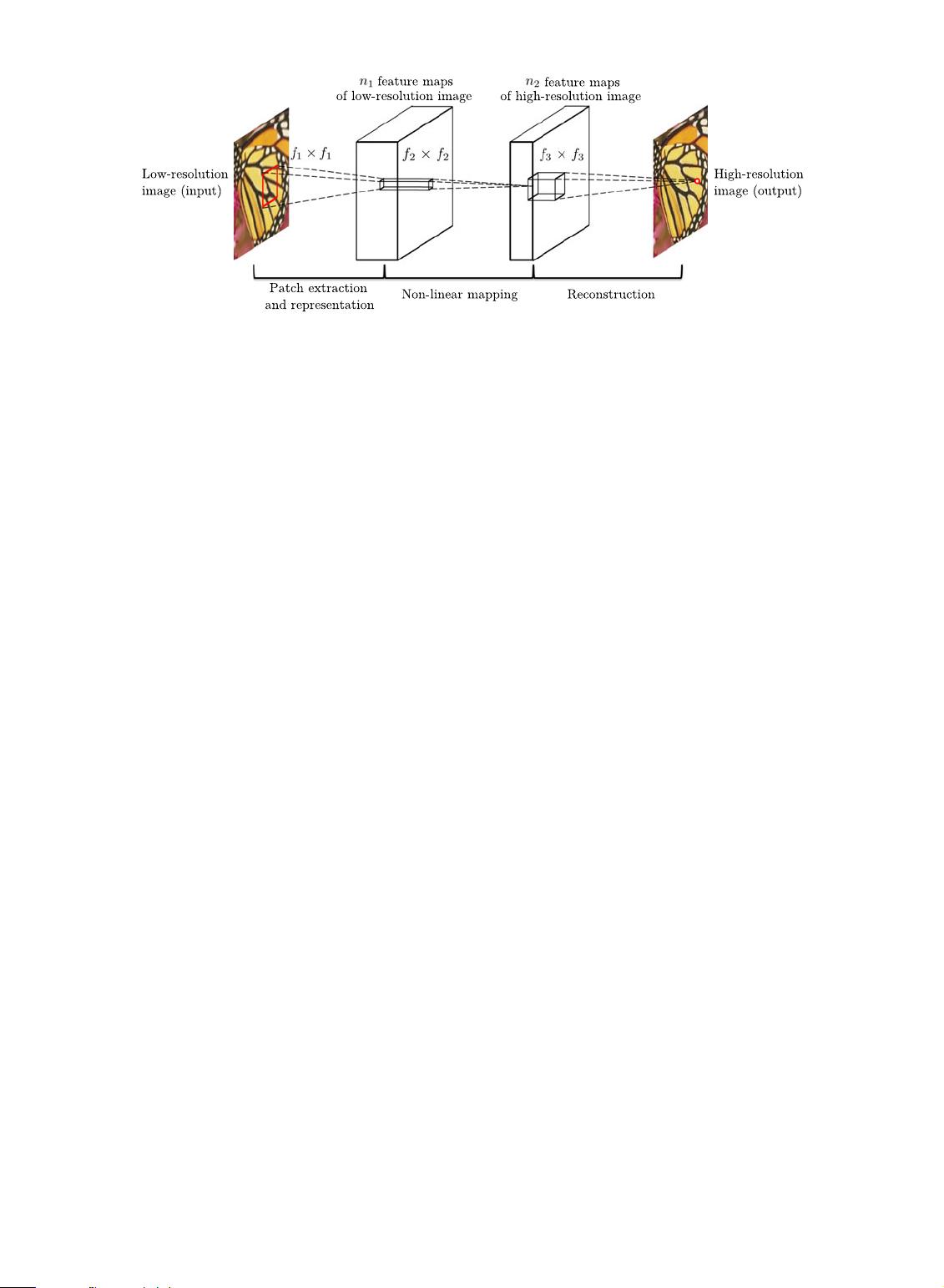

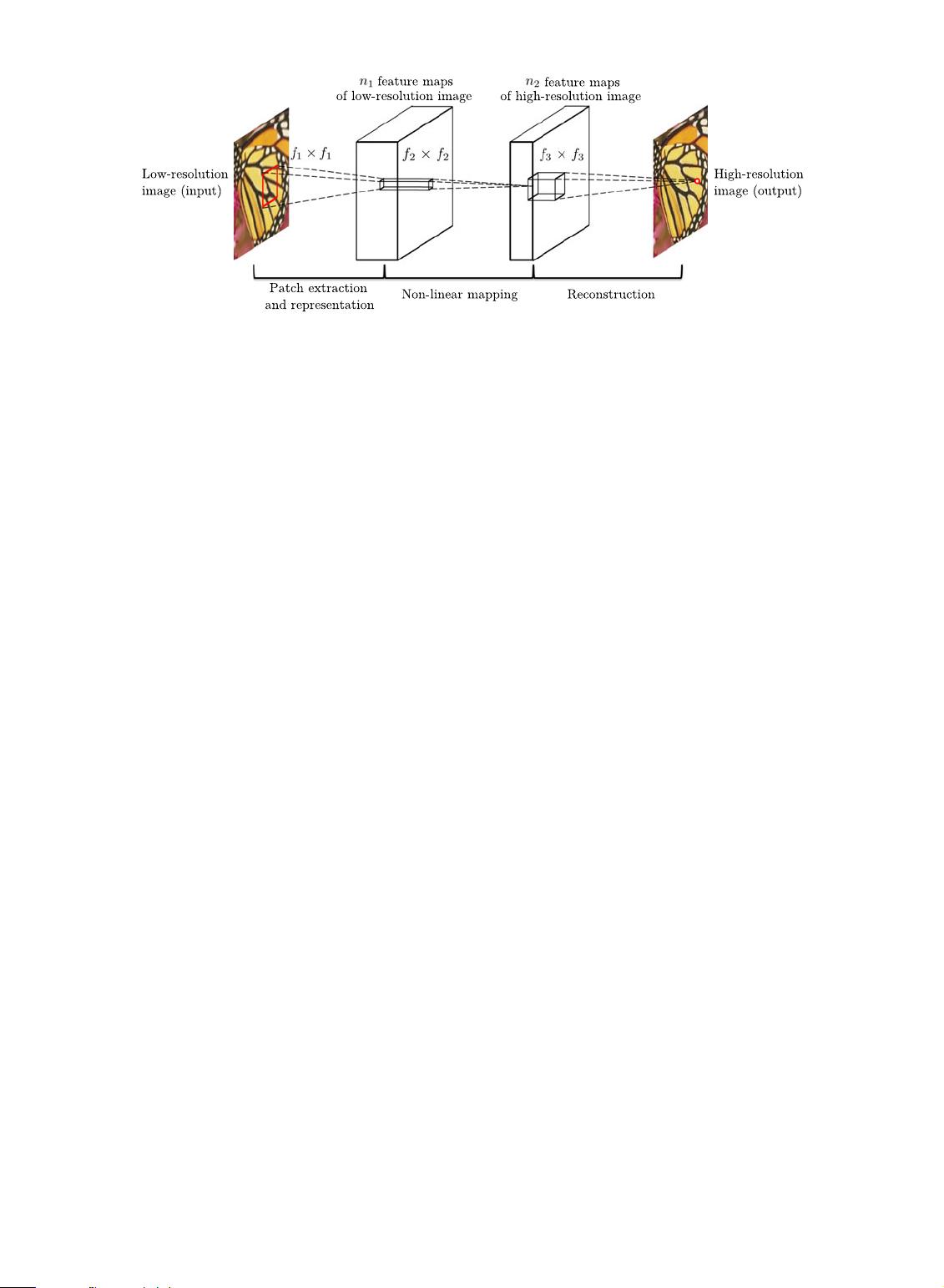

1) Patch extraction and representation. This operation
extracts (overlapping) patches from the low-resolution
image Y and represents each patch as a high-dimensional
vector. These vectors comprise a set of
feature maps, whose number equals the
dimensionality of the vectors.

2) Non-linear mapping. This operation nonlinearly maps
each high-dimensional vector onto another high-dimensional
vector. Each mapped vector is conceptually
the representation of a high-resolution patch.
These vectors comprise another set of feature maps.

3) Reconstruction. This operation aggregates the above
high-resolution patch-wise representations to generate
the final high-resolution image. This image is
expected to be similar to the ground truth X.

We will show that all these operations form a convolutional
neural network. An overview of the network is
depicted in Fig. 2. Next we detail our definition of each
operation.
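The three operations listed above can be sketched as a small forward pass. This is only an illustrative sketch under stated assumptions, not the authors' implementation: the filter counts and sizes (4 filters of 3 x 3, then 2 of 1 x 1, then 1 of 3 x 3) are hypothetical toy values, the weights are random rather than trained, filtering is done without padding, and applying a ReLU after the second stage while keeping the last stage linear is an assumption about how the stages are chained. Plain Python lists are used to keep the sketch dependency-free; the helper names `conv2d`, `relu`, `init_layer`, and `srcnn_forward` are made up for this example.

```python
import random


def conv2d(maps, filters, biases):
    """Valid multi-channel filtering (cross-correlation, as CNN code usually does).

    maps:    list of C input maps, each an H x W nested list
    filters: list of N filters, each a list of C kernels of size f x f
    biases:  list of N floats
    Returns N output maps of size (H - f + 1) x (W - f + 1).
    """
    height, width = len(maps[0]), len(maps[0][0])
    f = len(filters[0][0])
    out_h, out_w = height - f + 1, width - f + 1
    outputs = []
    for kernels, bias in zip(filters, biases):
        out = [[bias] * out_w for _ in range(out_h)]
        for channel, kernel in zip(maps, kernels):
            for i in range(out_h):
                for j in range(out_w):
                    out[i][j] += sum(
                        channel[i + a][j + b] * kernel[a][b]
                        for a in range(f)
                        for b in range(f)
                    )
        outputs.append(out)
    return outputs


def relu(maps):
    """Element-wise max(0, x) on every feature map."""
    return [[[max(0.0, v) for v in row] for row in m] for m in maps]


def init_layer(n_out, n_in, f, rng):
    """Random (untrained) filters and zero biases; placeholders for learned weights."""
    filters = [
        [[[rng.gauss(0.0, 0.1) for _ in range(f)] for _ in range(f)] for _ in range(n_in)]
        for _ in range(n_out)
    ]
    return filters, [0.0] * n_out


def srcnn_forward(y, layers):
    """Map an interpolated image Y (H x W nested list) to an estimate F(Y)."""
    (w1, b1), (w2, b2), (w3, b3) = layers
    features = relu(conv2d([y], w1, b1))     # 1) patch extraction and representation
    mapped = relu(conv2d(features, w2, b2))  # 2) non-linear mapping (ReLU assumed)
    return conv2d(mapped, w3, b3)[0]         # 3) reconstruction (linear, assumed)


rng = random.Random(0)
layers = [init_layer(4, 1, 3, rng), init_layer(2, 4, 1, rng), init_layer(1, 2, 3, rng)]
y = [[rng.random() for _ in range(8)] for _ in range(8)]
out = srcnn_forward(y, layers)
print(len(out), len(out[0]))  # 4 4: filtering without padding trims the border
```

Each stage is the same filtering primitive with different filter counts and sizes, which is why the whole pipeline can be treated as a single convolutional network and optimized jointly.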

3.1.1 Patch Extraction and Representation

A popular strategy in image restoration (e.g., [1]) is to
densely extract patches and then represent them by a set of
pre-trained bases such as PCA, DCT, Haar, etc. This is
equivalent to convolving the image with a set of filters, each
of which is a basis. In our formulation, we fold the optimization
of these bases into the optimization of the network.

Formally, our first layer is expressed as an operation $F_1$:

$$F_1(Y) = \max(0, W_1 * Y + B_1), \tag{1}$$

Fig. 2. Given a low-resolution image Y, the first convolutional layer of the SRCNN extracts a set of feature maps. The second layer maps these
feature maps nonlinearly to high-resolution patch representations. The last layer combines the predictions within a spatial neighbourhood to produce
the final high-resolution image F(Y).

3. Bicubic interpolation is also a convolutional operation, so it can be

formulated as a convolutional layer. However, the output size of this

layer is larger than the input size, so there is a fractional stride. To take

advantage of the popular well-optimized implementations such as

cuda-convnet [25], we exclude this “layer” from learning.

DONG ET AL.: IMAGE SUPER-RESOLUTION USING DEEP CONVOLUTIONAL NETWORKS 297