
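Mahout Practical Guide: Recommender Systems and Clustering Algorithms Explained

"Mahout in Action, complete edition"

Mahout in Action (complete edition) is a detailed guide to Apache Mahout, covering recommendation algorithms, data mining, personalized recommendation, and related topics.

**Recommendation algorithms**

Recommendation algorithms are one of Mahout's core components, and the book covers their basic concepts and implementation in detail. The main goal of a recommendation algorithm is to recommend items or services that a user is likely to be interested in, based on that user's behavior and preferences. Mahout provides several recommendation algorithms, including user-based collaborative filtering, item-based collaborative filtering, and matrix factorization.

**Data mining**

Data mining is the process of extracting valuable information and patterns from large amounts of data. The book introduces the basic concepts and techniques of data mining, including data preprocessing, data transformation, and data mining algorithms.

**Personalized recommendation**

Personalized recommendation means providing recommendations tailored to each user's behavior and preferences. The book covers the concepts and implementation of personalized recommendation in detail, including user-based collaborative filtering, item-based collaborative filtering, and matrix factorization.

**Mahout clustering**

Mahout clustering is an important Mahout component used for cluster analysis of data. The book covers its basic concepts and implementation in detail, including k-means clustering, hierarchical clustering, DBSCAN clustering, and others.

**Mahout recommendations**

Mahout recommendations is a core Mahout component that provides personalized recommendations. The book covers its basic concepts and implementation in detail, including user-based collaborative filtering, item-based collaborative filtering, and matrix factorization.

**Data representation**

Data representation means converting data into a format that machine learning algorithms can use. The book introduces the basic concepts and techniques of data representation, including the vector space model and matrix factorization.

**Distributed computing**

Distributed computing means spreading computational tasks across multiple nodes to improve speed and scalability. The book introduces the basic concepts and technologies of distributed computing, including Hadoop and MapReduce.

Mahout in Action (complete edition) is a detailed guide to all aspects of Mahout, including recommendation algorithms, data mining, personalized recommendation, Mahout clustering, Mahout recommendations, data representation, and distributed computing.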

©Manning Publications Co. Please post comments or corrections to the Author Online forum:

http://www.manning-sandbox.com/forum.jspa?forumID=623

Before discussing each of these components in more detail in the next two chapters, we can summarize the role of each component now. A DataModel implementation stores and provides access to all the preference, user and item data needed in the computation. A UserSimilarity implementation provides some notion of how similar two users are; this could be based on one of many possible metrics or calculations. A UserNeighborhood implementation defines a notion of a group of users that are most similar to a given user. Finally, a Recommender implementation pulls all these components together to recommend items to users and to provide related functionality.
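The following plain-Java sketch makes the similarity and neighborhood roles concrete and has no Mahout dependency. It implements one possible metric, Pearson correlation over co-rated items (the idea behind the PearsonCorrelationSimilarity class used in the listings below), together with a nearest-N neighborhood. The class name, the method names and the map-of-maps data layout are hypothetical. Mahout's own implementations handle details that this sketch ignores.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;

/**
 * Plain-Java illustration of two recommender roles (hypothetical names, not Mahout code):
 * a user-similarity metric and a nearest-N user neighborhood.
 */
final class UserSimilaritySketch {

  /** Pearson correlation over the items both users rated; NaN when it is undefined. */
  static double pearson(Map<Long, Double> a, Map<Long, Double> b) {
    List<double[]> pairs = new ArrayList<>();
    for (Map.Entry<Long, Double> e : a.entrySet()) {
      Double other = b.get(e.getKey());
      if (other != null) {
        pairs.add(new double[] {e.getValue(), other});
      }
    }
    int n = pairs.size();
    if (n < 2) {
      return Double.NaN; // too few co-rated items to correlate
    }
    double meanA = 0, meanB = 0;
    for (double[] p : pairs) {
      meanA += p[0];
      meanB += p[1];
    }
    meanA /= n;
    meanB /= n;
    double cov = 0, varA = 0, varB = 0;
    for (double[] p : pairs) {
      double da = p[0] - meanA, db = p[1] - meanB;
      cov += da * db;
      varA += da * da;
      varB += db * db;
    }
    if (varA == 0 || varB == 0) {
      return Double.NaN; // constant ratings: correlation is undefined
    }
    return cov / Math.sqrt(varA * varB);
  }

  /** The n users most similar to userId, most similar first; undefined similarities are skipped. */
  static List<Long> nearestN(long userId, int n, Map<Long, Map<Long, Double>> ratings) {
    Map<Long, Double> target = ratings.get(userId);
    List<Map.Entry<Long, Double>> scored = new ArrayList<>();
    for (Map.Entry<Long, Map<Long, Double>> e : ratings.entrySet()) {
      if (e.getKey() == userId) {
        continue; // a user is not their own neighbor
      }
      double sim = pearson(target, e.getValue());
      if (!Double.isNaN(sim)) {
        scored.add(Map.entry(e.getKey(), sim));
      }
    }
    scored.sort(Map.Entry.<Long, Double>comparingByValue(Comparator.reverseOrder()));
    List<Long> neighbors = new ArrayList<>();
    for (int i = 0; i < Math.min(n, scored.size()); i++) {
      neighbors.add(scored.get(i).getKey());
    }
    return neighbors;
  }

  public static void main(String[] args) {
    // Hypothetical ratings: user 3 agrees with user 1, user 2 disagrees.
    Map<Long, Map<Long, Double>> ratings = Map.of(
        1L, Map.of(101L, 5.0, 102L, 3.0, 103L, 2.5),
        2L, Map.of(101L, 2.0, 102L, 2.5, 103L, 5.0),
        3L, Map.of(101L, 4.5, 102L, 3.5, 103L, 2.0));
    System.out.println(nearestN(1L, 1, ratings)); // [3]
  }
}
```

A recommender would then combine the neighbors' ratings to estimate preferences. That combination is the Recommender's job in the component breakdown above.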

2.2.3 Analyzing the output

Compile and run this using your favorite IDE. The output of running the program in your terminal or IDE should be:

```
RecommendedItem[item:104, value:4.257081]
```

The request asked for one top recommendation, and got one. The recommender engine recommended book 104 to user 1. Further, it says that the recommender engine did so because it estimated user 1’s preference for book 104 to be about 4.3, and that was the highest among all the items eligible for recommendation.

That’s not bad. Item 107 did not appear; it was also recommendable, but it is associated only with a user who has different tastes. The recommender picked 104 over 106, which makes sense once you note that 104 is rated a bit more highly overall. Further, the output contained a reasonable estimate of how much user 1 likes item 104: something between the 4.0 and 4.5 that users 4 and 5 expressed.
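One common way for a user-based recommender to turn a neighborhood into an estimate is a similarity-weighted average of the neighbors' ratings for the item. This explains why an estimate lands between the neighbors' values. The sketch below assumes that scheme and positive similarity weights. It illustrates the idea and is not a transcription of Mahout's GenericUserBasedRecommender. The class name and the weights are hypothetical.

```java
/** Sketch: estimate a preference as the similarity-weighted average of neighbors' ratings. */
final class WeightedEstimateSketch {

  /**
   * @param neighborRatings the ratings the neighbors gave the item
   * @param similarities    each neighbor's similarity to the target user (same order), assumed positive
   * @return the weighted average, or NaN if the total weight is zero
   */
  static double estimate(double[] neighborRatings, double[] similarities) {
    if (neighborRatings.length != similarities.length) {
      throw new IllegalArgumentException("arrays must have the same length");
    }
    double weightedSum = 0, totalWeight = 0;
    for (int i = 0; i < neighborRatings.length; i++) {
      weightedSum += similarities[i] * neighborRatings[i];
      totalWeight += similarities[i];
    }
    return totalWeight == 0 ? Double.NaN : weightedSum / totalWeight;
  }

  public static void main(String[] args) {
    // Hypothetical neighbors: ratings 4.0 and 4.5 with equal similarity to the target user.
    System.out.println(estimate(new double[] {4.0, 4.5}, new double[] {0.5, 0.5})); // 4.25
  }
}
```

With unequal weights, the estimate moves toward the more similar neighbor, but it stays between the two ratings as long as both weights are positive.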

The right answer isn't obvious from looking at the data, but the recommender engine made some decent sense of it and returned a defensible answer. If you got a pleasant tingle out of seeing this simple program give a useful and non-obvious result from a small pile of data, then the world of machine learning is for you!

For clear, small data sets, producing recommendations is as trivial as it appears above. In real life, data sets are huge, and they are noisy. For example, imagine a popular news site recommending news articles to readers. Preferences are inferred from article clicks. But many of these “preferences” may be bogus: maybe a reader clicked an article but didn't like it, or clicked the wrong story. Perhaps many of the clicks occurred while readers were not logged in, so they can’t be associated with a user. And imagine the size of the data set: perhaps billions of clicks in a month.

Producing the right recommendations from this data, and producing them quickly, is not trivial. Later we will present, by way of case studies, the tools Mahout provides to attack a range of such problems. They will show how standard approaches can produce poor recommendations or take a great deal of memory and CPU time, and how to configure and customize Mahout to improve performance.

2.3 Evaluating a Recommender

A recommender engine is a tool, a means to answer the question, “What are the best recommendations for a user?” Before investigating the answers, it’s best to investigate the question. What exactly is a good recommendation? And how does one know when a recommender is producing them? The remainder of this chapter pauses to explore evaluation of a recommender, because evaluation is a tool that will be useful when looking at specific recommender systems.

The best possible recommender would be a sort of psychic that could somehow know, before you do, exactly how much you would like every possible item that you've not yet seen or expressed any preference for. A recommender that could predict all your preferences exactly would merely present all other items ranked by your future preference and be done. These would be the best possible recommendations.

And indeed most recommender engines operate by trying to do just this, estimating ratings for some or all other items. So one way of evaluating a recommender's recommendations is to evaluate the quality of its estimated preference values, that is, how closely the estimated preferences match the actual preferences.


Licensed to Duan Jienan <jnduan@gmail.com>


2.3.1 Training data and scoring

Those “actual preferences” don't exist, though. Nobody knows for sure how you'll like some new item in the future (including you). This can be simulated for a recommender engine by setting aside a small part of the real data set as test data. These test preferences are not present in the training data fed into the recommender engine under evaluation; the training data is all the data except the test data. Instead, the recommender is asked to estimate preferences for the missing test data, and the estimates are compared to the actual values.
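Here is a minimal sketch of the hold-out idea. It assumes that preferences are simple (user, item, value) records and that each record goes to the training set independently with probability trainingPercentage. Mahout's evaluator performs its own split internally. The types and method names here are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

/** Illustrative hold-out split of preference records (hypothetical types, not Mahout's API). */
final class HoldOutSplitSketch {

  /** One user's preference value for one item. */
  static final class Pref {
    final long user;
    final long item;
    final double value;

    Pref(long user, long item, double value) {
      this.user = user;
      this.item = item;
      this.value = value;
    }
  }

  /** The two disjoint parts of a split. */
  static final class Split {
    final List<Pref> training = new ArrayList<>();
    final List<Pref> test = new ArrayList<>();
  }

  /** Sends each preference to training with probability trainingPercentage, otherwise to test. */
  static Split split(List<Pref> all, double trainingPercentage, long seed) {
    Random random = new Random(seed); // a fixed seed makes the split repeatable
    Split s = new Split();
    for (Pref p : all) {
      if (random.nextDouble() < trainingPercentage) {
        s.training.add(p);
      } else {
        s.test.add(p); // held out: the recommender never sees these values
      }
    }
    return s;
  }

  public static void main(String[] args) {
    List<Pref> prefs = new ArrayList<>();
    for (long item = 101; item <= 110; item++) {
      prefs.add(new Pref(1, item, 3.0)); // hypothetical data
    }
    Split s = split(prefs, 0.7, 42L);
    System.out.println(s.training.size() + " training, " + s.test.size() + " test");
  }
}
```

Because each record is assigned independently, a 0.7 setting yields roughly, not exactly, 70% training data.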

From there, it is fairly simple to produce a kind of “score” for the recommender. For example, it’s possible to compute the average absolute difference between estimated and actual preferences. With a score of this type, lower is better, because a lower score means the estimates differed less from the actual preference values. A score of 0.0 would mean perfect estimation: no difference at all between estimates and actual values.

Sometimes the root-mean-square of the differences is used: this is the square root of the average of the squares of the differences between actual and estimated preference values. Again, lower is better.

|            | Item 1 | Item 2 | Item 3 |
|------------|--------|--------|--------|
| Actual     | 3.0    | 5.0    | 4.0    |
| Estimate   | 3.5    | 2.0    | 5.0    |
| Difference | 0.5    | 3.0    | 1.0    |

Average difference: $(0.5 + 3.0 + 1.0) / 3 = 1.5$

Root mean square: $\sqrt{(0.5^2 + 3.0^2 + 1.0^2) / 3} \approx 1.8484$

Table 2.1 An illustration of the average difference, and root mean square calculation

The table above shows the difference between a set of actual and estimated preferences, and how those differences are translated into scores. Root-mean-square penalizes estimates that are way off more heavily, as with item 2 here, and some consider that desirable. For example, an estimate that’s off by 2 whole stars is probably more than twice as “bad” as one that’s off by just 1 star. Because the simple average of differences is perhaps more intuitive and easier to understand, upcoming examples will use it.
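The arithmetic in Table 2.1 can be reproduced with a short plain-Java sketch. The class and method names are hypothetical. In Mahout, the corresponding scores come from AverageAbsoluteDifferenceRecommenderEvaluator and RMSRecommenderEvaluator, which also take care of the training and test split.

```java
import java.util.Locale;

/** Computes the two scores from Table 2.1: average absolute difference and root-mean-square. */
final class ErrorScores {

  static double averageAbsoluteDifference(double[] actual, double[] estimate) {
    checkLengths(actual, estimate);
    double sum = 0;
    for (int i = 0; i < actual.length; i++) {
      sum += Math.abs(actual[i] - estimate[i]);
    }
    return sum / actual.length;
  }

  static double rootMeanSquare(double[] actual, double[] estimate) {
    checkLengths(actual, estimate);
    double sumOfSquares = 0;
    for (int i = 0; i < actual.length; i++) {
      double d = actual[i] - estimate[i];
      sumOfSquares += d * d; // squaring makes large misses count disproportionately
    }
    return Math.sqrt(sumOfSquares / actual.length);
  }

  private static void checkLengths(double[] a, double[] b) {
    if (a.length == 0 || a.length != b.length) {
      throw new IllegalArgumentException("need two non-empty arrays of equal length");
    }
  }

  public static void main(String[] args) {
    double[] actual = {3.0, 5.0, 4.0};
    double[] estimate = {3.5, 2.0, 5.0};
    System.out.println(averageAbsoluteDifference(actual, estimate)); // 1.5
    System.out.println(String.format(Locale.ROOT, "%.4f", rootMeanSquare(actual, estimate))); // 1.8484
  }
}
```

Item 2's miss of 3.0 contributes 9.0 of the 10.25 sum of squares. This is why the root-mean-square score (about 1.85) exceeds the simple average (1.5).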

2.3.2 Running RecommenderEvaluator

Let's revisit the example code and instead evaluate the simple recommender on this simple data set:

Listing 2.3 Configuring and running an evaluation of a Recommender

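```java
import java.io.File;

import org.apache.mahout.cf.taste.common.TasteException;
import org.apache.mahout.cf.taste.eval.RecommenderBuilder;
import org.apache.mahout.cf.taste.eval.RecommenderEvaluator;
import org.apache.mahout.cf.taste.impl.eval.AverageAbsoluteDifferenceRecommenderEvaluator;
import org.apache.mahout.cf.taste.impl.model.file.FileDataModel;
import org.apache.mahout.cf.taste.impl.neighborhood.NearestNUserNeighborhood;
import org.apache.mahout.cf.taste.impl.recommender.GenericUserBasedRecommender;
import org.apache.mahout.cf.taste.impl.similarity.PearsonCorrelationSimilarity;
import org.apache.mahout.cf.taste.model.DataModel;
import org.apache.mahout.cf.taste.neighborhood.UserNeighborhood;
import org.apache.mahout.cf.taste.recommender.Recommender;
import org.apache.mahout.cf.taste.similarity.UserSimilarity;
import org.apache.mahout.common.RandomUtils;

public class EvaluatorIntro {
  public static void main(String[] args) throws Exception {
    RandomUtils.useTestSeed(); // A
    DataModel model = new FileDataModel(new File("intro.csv"));
    RecommenderEvaluator evaluator =
        new AverageAbsoluteDifferenceRecommenderEvaluator();
    RecommenderBuilder builder = new RecommenderBuilder() { // B
      @Override
      public Recommender buildRecommender(DataModel model)
          throws TasteException {
        UserSimilarity similarity = new PearsonCorrelationSimilarity(model);
        UserNeighborhood neighborhood =
            new NearestNUserNeighborhood(2, similarity, model);
        return
            new GenericUserBasedRecommender(model, neighborhood, similarity);
      }
    };
    double score = evaluator.evaluate(
        builder, null, model, 0.7, 1.0); // C
    System.out.println(score);
  }
}
```

A Used only in examples for repeatable results

B Builds the same Recommender as above

C Use 70% of data to train; test with the other 30%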

Most of the action happens in evaluate(). Inside, the RecommenderEvaluator handles splitting the data into a training and test set, builds a new training DataModel and Recommender to test, and compares its estimated preferences to the actual test data.

Note that there is no Recommender passed to this method. This is because, inside, the method will need to build a Recommender around a newly created training DataModel. So the caller must provide an object that can build a Recommender from a DataModel: a RecommenderBuilder. Here, it builds the same implementation that was tried earlier in this chapter.
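The reason for passing a builder instead of a ready-made Recommender can be shown without Mahout. The evaluator creates the training data itself, so it needs a factory it can call afterward. The sketch below is plain Java with hypothetical types: a java.util.function.Function stands in for RecommenderBuilder, and a "recommender" is reduced to one user's item-to-estimate function. It is not Mahout's API.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

/** Why an evaluator takes a builder: it builds the recommender on data it splits itself. */
final class BuilderPatternSketch {

  /** Hypothetical minimal "recommender" for one user: estimates a preference for an item. */
  interface Estimator {
    double estimate(long item);
  }

  /**
   * Scores one user's held-out ratings by average absolute difference. The caller passes a
   * builder, because the training data exists only after this method removes the test items.
   */
  static double evaluate(Map<Long, Double> allRatings, Map<Long, Double> testRatings,
                         Function<Map<Long, Double>, Estimator> builder) {
    Map<Long, Double> training = new HashMap<>(allRatings);
    training.keySet().removeAll(testRatings.keySet()); // training = everything except test data
    Estimator estimator = builder.apply(training);      // built now, on training data only
    double sum = 0;
    for (Map.Entry<Long, Double> e : testRatings.entrySet()) {
      sum += Math.abs(estimator.estimate(e.getKey()) - e.getValue());
    }
    return sum / testRatings.size();
  }

  public static void main(String[] args) {
    Map<Long, Double> all = Map.of(101L, 4.0, 102L, 3.0, 103L, 2.0, 104L, 4.0);
    Map<Long, Double> test = Map.of(103L, 2.0);
    // Hypothetical builder: always predicts the mean of the training ratings it receives.
    Function<Map<Long, Double>, Estimator> meanBuilder = training -> {
      double mean = training.values().stream()
          .mapToDouble(Double::doubleValue).average().orElse(Double.NaN);
      return item -> mean;
    };
    System.out.println(evaluate(all, test, meanBuilder));
  }
}
```

Handing over an already-built estimator would have let it see the test ratings, which defeats the purpose of holding them out.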

2.3.3 Assessing the result

This program prints the result of the evaluation: a score indicating how well the Recommender performed. In this case you should simply see 1.0. Even though a lot of randomness is used inside the evaluator to choose test data, the result should be consistent because of the call to RandomUtils.useTestSeed(), which forces the same random choices each time. This is only used in such examples, and unit tests, to guarantee repeatable results. Don’t use it in your real code.

What this value means depends on the implementation used, here AverageAbsoluteDifferenceRecommenderEvaluator. A result of 1.0 from this implementation means that, on average, the recommender estimates a preference that deviates from the actual preference by 1.0.

A value of 1.0 is not great on a scale of 1 to 5, but there is very little data here to begin with. Without the fixed test seed, your results may differ, because the data set is split randomly and the training and test sets may therefore differ with each run.

This technique can be applied to any Recommender and DataModel. To use root-mean-square scoring, replace AverageAbsoluteDifferenceRecommenderEvaluator with the implementation RMSRecommenderEvaluator.

Also, the null parameter to evaluate() could instead be an instance of DataModelBuilder, which can be used to control how the training DataModel is created from training data. Normally the default is fine; it may not be if you are using a specialized implementation of DataModel in your deployment. A DataModelBuilder is how you would inject it into the evaluation process.

The 1.0 parameter at the end controls how much of the overall input data is used. Here it means “100%.” This can be used to produce a quicker, if less accurate, evaluation by using only a little of a potentially huge data set. For example, 0.1 would mean 10% of the data is used and 90% is ignored. This is quite useful when rapidly testing small changes to a Recommender.
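The following sketch shows how an evaluation-percentage parameter can cut work. It assumes that each user is included independently with that probability. The text above describes the parameter only as a fraction of the data, so the per-user sampling and all names here are assumptions.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

/** Sketch: evaluate only a random fraction of users, trading accuracy for speed. */
final class EvaluationSampleSketch {

  /** Keeps each user independently with probability evaluationPercentage (1.0 keeps all). */
  static List<Long> sampleUsers(List<Long> userIds, double evaluationPercentage, Random random) {
    List<Long> kept = new ArrayList<>();
    for (long id : userIds) {
      if (random.nextDouble() < evaluationPercentage) {
        kept.add(id);
      }
    }
    return kept;
  }

  public static void main(String[] args) {
    List<Long> users = new ArrayList<>();
    for (long id = 1; id <= 1000; id++) {
      users.add(id);
    }
    // Roughly 10% of the users are evaluated; the exact count depends on the seed.
    System.out.println(sampleUsers(users, 0.1, new Random(7)).size());
  }
}
```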

2.4 Evaluating precision and recall

We could also take a broader view of the recommender problem: it’s not strictly necessary to estimate preference values in order to produce recommendations. It’s not always essential to present estimated preference values to users. In many cases, just an ordered list of recommendations, from best to worst, is sufficient. In fact, in some cases the exact ordering of the list doesn’t matter much: a set of a few good recommendations is fine.

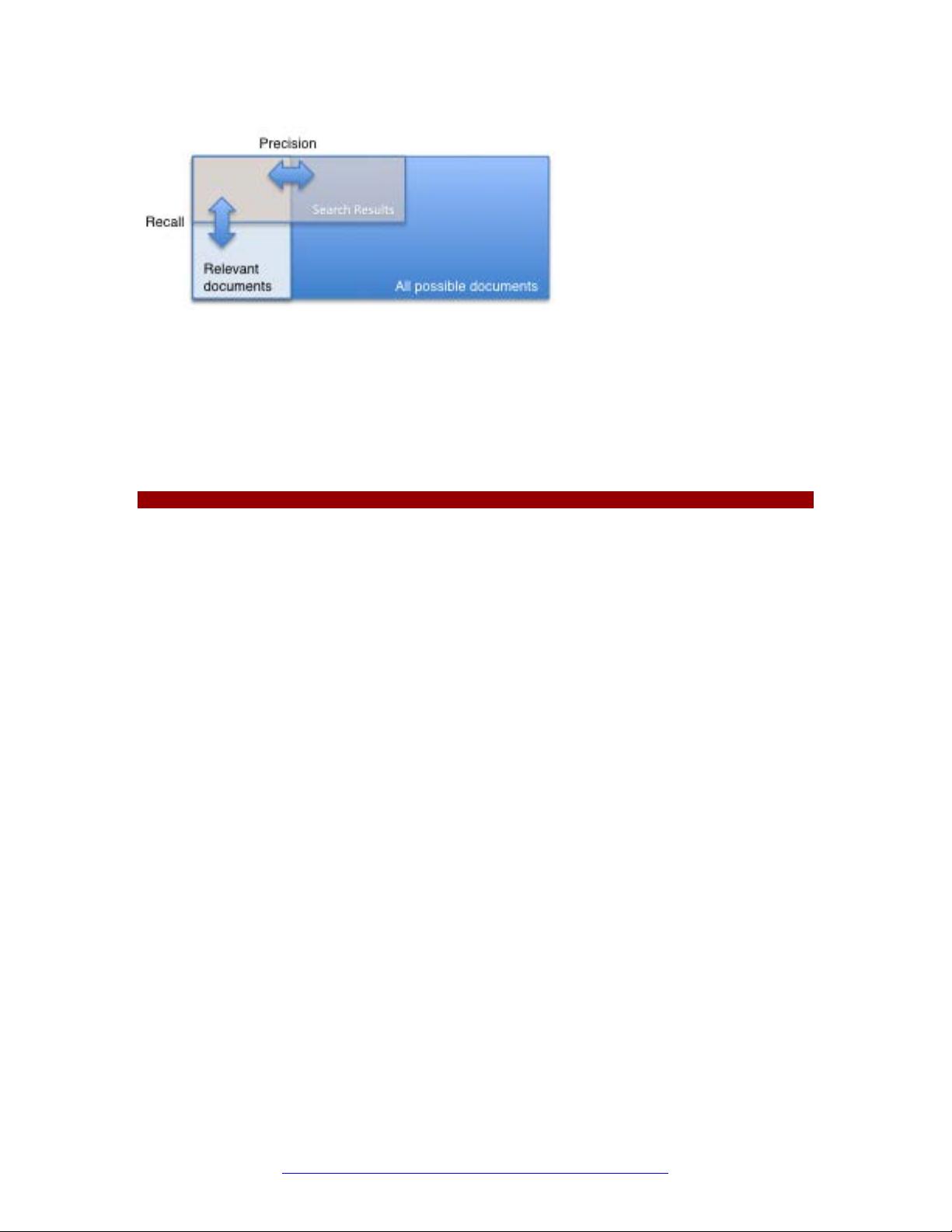

Taking this more general view, we could also apply classic information retrieval metrics to evaluate recommenders: precision and recall. These terms are typically applied to things like search engines, which return some set of best results for a query out of many possible results.

A search engine should not return irrelevant results in the top results, although it should strive to return as many relevant results as possible. “Precision” is the proportion of top results that are relevant, for some definition of relevant. “Precision at 10” would be this proportion judged from the top 10 results. “Recall” is the proportion of all relevant results included in the top results. See figure 2.3 for a visualization of these ideas.


Figure 2.3 An illustration of precision and recall in the context of search results

These terms can easily be adapted to recommenders: precision is the proportion of top recommendations that are good recommendations, and recall is the proportion of good recommendations that appear in top recommendations. The next section will define “good”.
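For a single user, both definitions reduce to counting hits in the top n. The plain-Java sketch below uses hypothetical names and item IDs. One choice is an assumption: when fewer than n items are recommended, precision divides by the number actually returned.

```java
import java.util.List;
import java.util.Set;

/** Sketch of precision and recall at n for one user's ranked recommendations. */
final class PrecisionRecallSketch {

  /** Share of the top-n recommendations that are "good"; NaN if nothing was recommended. */
  static double precisionAt(int n, List<Long> ranked, Set<Long> good) {
    int top = Math.min(n, ranked.size());
    if (top == 0) {
      return Double.NaN;
    }
    return (double) hits(top, ranked, good) / top;
  }

  /** Share of all "good" items that appear in the top-n recommendations; NaN if none are good. */
  static double recallAt(int n, List<Long> ranked, Set<Long> good) {
    if (good.isEmpty()) {
      return Double.NaN;
    }
    return (double) hits(Math.min(n, ranked.size()), ranked, good) / good.size();
  }

  /** Counts how many of the first `top` recommendations are in the good set. */
  private static int hits(int top, List<Long> ranked, Set<Long> good) {
    int count = 0;
    for (int i = 0; i < top; i++) {
      if (good.contains(ranked.get(i))) {
        count++;
      }
    }
    return count;
  }

  public static void main(String[] args) {
    List<Long> ranked = List.of(104L, 106L, 101L); // hypothetical recommendations, best first
    Set<Long> good = Set.of(104L, 101L);           // hypothetical "good" items for this user
    System.out.println(precisionAt(2, ranked, good)); // 0.5
    System.out.println(recallAt(2, ranked, good));    // 0.5
  }
}
```

An evaluator that reports a single precision or recall figure for a whole data set would typically average such per-user values over the users it evaluates.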

2.4.1 Running RecommenderIRStatsEvaluator

Again, Mahout provides a fairly simple way to compute these values for a Recommender:

Listing 2.4 Configuring and running a precision and recall evaluation

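```java
import java.io.File;

import org.apache.mahout.cf.taste.common.TasteException;
import org.apache.mahout.cf.taste.eval.IRStatistics;
import org.apache.mahout.cf.taste.eval.RecommenderBuilder;
import org.apache.mahout.cf.taste.eval.RecommenderIRStatsEvaluator;
import org.apache.mahout.cf.taste.impl.eval.GenericRecommenderIRStatsEvaluator;
import org.apache.mahout.cf.taste.impl.model.file.FileDataModel;
import org.apache.mahout.cf.taste.impl.neighborhood.NearestNUserNeighborhood;
import org.apache.mahout.cf.taste.impl.recommender.GenericUserBasedRecommender;
import org.apache.mahout.cf.taste.impl.similarity.PearsonCorrelationSimilarity;
import org.apache.mahout.cf.taste.model.DataModel;
import org.apache.mahout.cf.taste.neighborhood.UserNeighborhood;
import org.apache.mahout.cf.taste.recommender.Recommender;
import org.apache.mahout.cf.taste.similarity.UserSimilarity;
import org.apache.mahout.common.RandomUtils;

public class IRStatsEvaluatorIntro {
  public static void main(String[] args) throws Exception {
    RandomUtils.useTestSeed();
    DataModel model = new FileDataModel(new File("intro.csv"));
    RecommenderIRStatsEvaluator evaluator =
        new GenericRecommenderIRStatsEvaluator();
    RecommenderBuilder recommenderBuilder = new RecommenderBuilder() {
      @Override
      public Recommender buildRecommender(DataModel model)
          throws TasteException {
        UserSimilarity similarity = new PearsonCorrelationSimilarity(model);
        UserNeighborhood neighborhood =
            new NearestNUserNeighborhood(2, similarity, model);
        return
            new GenericUserBasedRecommender(model, neighborhood, similarity);
      }
    };
    IRStatistics stats = evaluator.evaluate(
        recommenderBuilder, null, model, null, 2, // A
        GenericRecommenderIRStatsEvaluator.CHOOSE_THRESHOLD,
        1.0);
    System.out.println(stats.getPrecision());
    System.out.println(stats.getRecall());
  }
}
```

A Evaluate precision and recall at 2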

Without the call to RandomUtils.useTestSeed(), the result you see would vary significantly, both because of the random selection of training and test data and because the data set is so small. But with the call, the result ought to be:

```
0.75
1.0
```

Precision at 2 is 0.75; on average, about three-quarters of recommendations were “good.” Recall at 2 is 1.0; all good recommendations are among those recommended.

But what exactly is a “good” recommendation here? The framework was asked to decide; it didn’t receive a definition. Intuitively, the most highly preferred items in the test set are the good recommendations, and the rest aren’t.


Listing 2.5 User 5’s preference in test data set

```
5,101,4.0
5,102,3.0
5,103,2.0
5,104,4.0
5,105,3.5
5,106,4.0
```

Look at user 5 in this simple data set again. Let’s imagine the preferences for items 101, 102 and 103 were withheld as test data. The preference values for these are 4.0, 3.0 and 2.0. With these values missing from the training data, a recommender engine ought to recommend 101 before 102, and 102 before 103, because this is the order in which user 5 prefers these items. But would it be a good idea to recommend 103? It’s last on the list; user 5 doesn’t seem to like it much. Book 102 is just average. Book 101 looks reasonable, as its preference value is well above average. Maybe 101 is a good recommendation; 102 and 103 are valid, but not good, recommendations.

This is the thinking that the RecommenderIRStatsEvaluator employs. When it is not given an explicit threshold that divides good recommendations from bad, the framework picks a threshold for each user, equal to that user's average preference value $\mu$ plus one standard deviation $\sigma$:

$$\text{threshold} = \mu + \sigma$$

If you’ve forgotten your statistics, don’t worry. This takes items whose preference value is not merely a little more than average ($\mu$), but above average by a significant amount ($\sigma$). In practice, if a user's preference values are roughly normally distributed, this means that about the 16% most highly preferred items are considered “good” recommendations to make back to the user. The other arguments to this method are similar to those discussed before and are more fully documented in the project javadoc.
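The default rule can be sketched in plain Java under two assumptions. The sketch uses the sample standard deviation (dividing by n − 1), and it counts values at or above the threshold as good. Mahout's exact choices on both points are not stated here. The names and the toy data are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Sketch of a per-user "good recommendation" threshold: mean plus one standard deviation. */
final class ThresholdSketch {

  /** Mean plus sample standard deviation (n - 1) of one user's preference values. */
  static double threshold(double[] prefs) {
    int n = prefs.length;
    if (n < 2) {
      return Double.NaN; // a standard deviation needs at least two values
    }
    double mean = 0;
    for (double p : prefs) {
      mean += p;
    }
    mean /= n;
    double sumOfSquares = 0;
    for (double p : prefs) {
      sumOfSquares += (p - mean) * (p - mean);
    }
    return mean + Math.sqrt(sumOfSquares / (n - 1));
  }

  /** Items (in ascending ID order) whose preference is at or above the user's threshold. */
  static List<Long> goodItems(Map<Long, Double> prefs) {
    double[] values = prefs.values().stream().mapToDouble(Double::doubleValue).toArray();
    double t = threshold(values);
    List<Long> good = new ArrayList<>();
    for (Map.Entry<Long, Double> e : new TreeMap<>(prefs).entrySet()) {
      if (e.getValue() >= t) {
        good.add(e.getKey());
      }
    }
    return good;
  }

  public static void main(String[] args) {
    // Hypothetical user: ratings 1..5, so the threshold is 3 + sqrt(2.5), about 4.58.
    Map<Long, Double> prefs = Map.of(1L, 1.0, 2L, 2.0, 3L, 3.0, 4L, 4.0, 5L, 5.0);
    System.out.println(goodItems(prefs)); // [5]
  }
}
```

Because the threshold is computed per user, a habitually generous rater and a harsh rater each get a cutoff relative to their own scale.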

2.4.2 Problems with precision and recall

The usefulness of precision and recall tests in the context of recommenders depends entirely on how well a “good” recommendation can be defined. Above, the framework chose the threshold; it could also have been given explicitly. A poor choice will hurt the usefulness of the resulting score.

There’s a subtler problem with these tests, though. Here, they necessarily pick the set of good recommendations from among items for which the user has already expressed some preference. But the best recommendations are of course not necessarily among those the user already knows about!

Imagine running such a test for a user who would absolutely love the little-known French cult film “My Brother The Armoire”. Let’s say it’s objectively a great recommendation for this user. But the user has never heard of this movie. If a recommender actually returned this film when recommending movies, it would be penalized; the test framework can only pick good recommendations from among those already in the user’s set of preferences.

The issue is further complicated when the preferences are ‘boolean’ and contain no preference value. There is not even a notion of relative preference on which to select a subset of good items. The best the test can do is to randomly select some preferred items as the good ones.
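With no values to rank by, random selection amounts to drawing a subset of the user's items. The sketch below assumes a uniform draw of up to k items. Both the name k and the other names are hypothetical, and the text does not say how many items the framework picks.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

/** Sketch: with boolean preferences there is no value to rank by, so "good" items are drawn at random. */
final class RandomRelevantSketch {

  /** Picks up to k distinct items from the user's preferred items, uniformly at random. */
  static List<Long> pickGood(List<Long> preferredItems, int k, Random random) {
    List<Long> shuffled = new ArrayList<>(preferredItems);
    Collections.shuffle(shuffled, random); // uniform random permutation
    return new ArrayList<>(shuffled.subList(0, Math.min(k, shuffled.size())));
  }

  public static void main(String[] args) {
    List<Long> preferred = List.of(101L, 102L, 103L, 104L, 105L, 106L); // hypothetical
    System.out.println(pickGood(preferred, 2, new Random(1)));
  }
}
```

Any such draw treats all preferred items as equally good, which is exactly the limitation the text describes.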

The test nevertheless has some use. The items a user prefers are a reasonable proxy for the best recommendations for the user, but by no means a perfect one. In the case of boolean preference data, only a precision-recall test is available anyway. It is worth understanding the test’s limitations in this context.

2.5 Evaluating the GroupLens data set

With these tools in hand, we will be able to discuss not only the speed but also the quality of recommender engines. Although examples with large amounts of real data are still a couple of chapters away, it’s already possible to quickly evaluate performance on a small data set.



Uploaded 2023-04-20 by dadao201
