Cache Organization and Memory Management of the Intel Nehalem Computer Architecture

Trent Rolf

University of Utah Computer Engineering

CS 6810 Final Project

December 2009

Abstract—Intel is now shipping microprocessors based on its new architecture, codenamed “Nehalem,” the successor to the Core architecture. Like its predecessor, this design uses multiple cores, but it claims to improve both the utilization of the individual cores and the communication between them. This is accomplished primarily through better memory management and cache organization. Some benchmarking and research have been performed on the Nehalem architecture to analyze the cache and memory improvements. In this paper I take a closer look at these studies to determine whether the performance gains are significant.

I. INTRODUCTION

The predecessor to Nehalem, Intel’s Core architecture, placed multiple cores on a single die to improve performance over traditional single-core architectures. However, as more cores and processors were added to a high-performance system, serious weaknesses and bandwidth bottlenecks began to appear.

After the initial generation of dual-core Core processors, Intel introduced the Core 2 series, which amounted to little more than combining two or more dual-core dies. The cores communicated via system memory, which caused large delays due to limited bandwidth on the processor bus [5]. Adding more cores increased the burden on the processor and memory buses, which diminished the performance gains that more cores could otherwise provide.

The new Nehalem architecture sought to improve core-to-core communication by establishing a point-to-point topology in which microprocessor cores can communicate directly with one another and have more direct access to system memory.

II. OVERVIEW OF NEHALEM

A. Architectural Approach

The Nehalem architecture takes a more modular approach than the Core architecture, which makes it much more flexible and customizable to the application. The architecture consists of only a few basic building blocks: a microprocessor core (with its own L2 cache), a shared L3 cache, a Quick Path Interconnect (QPI) bus controller, an integrated memory controller (IMC), and a graphics core.

With this flexible architecture, the blocks can be configured

to meet what the market demands. For example, the Bloom-

field model, which is intended for a performance desktop ap-

plication, has four cores, an L3 cache, one memory controller,

and one QPI bus controller. Server microprocessors like the

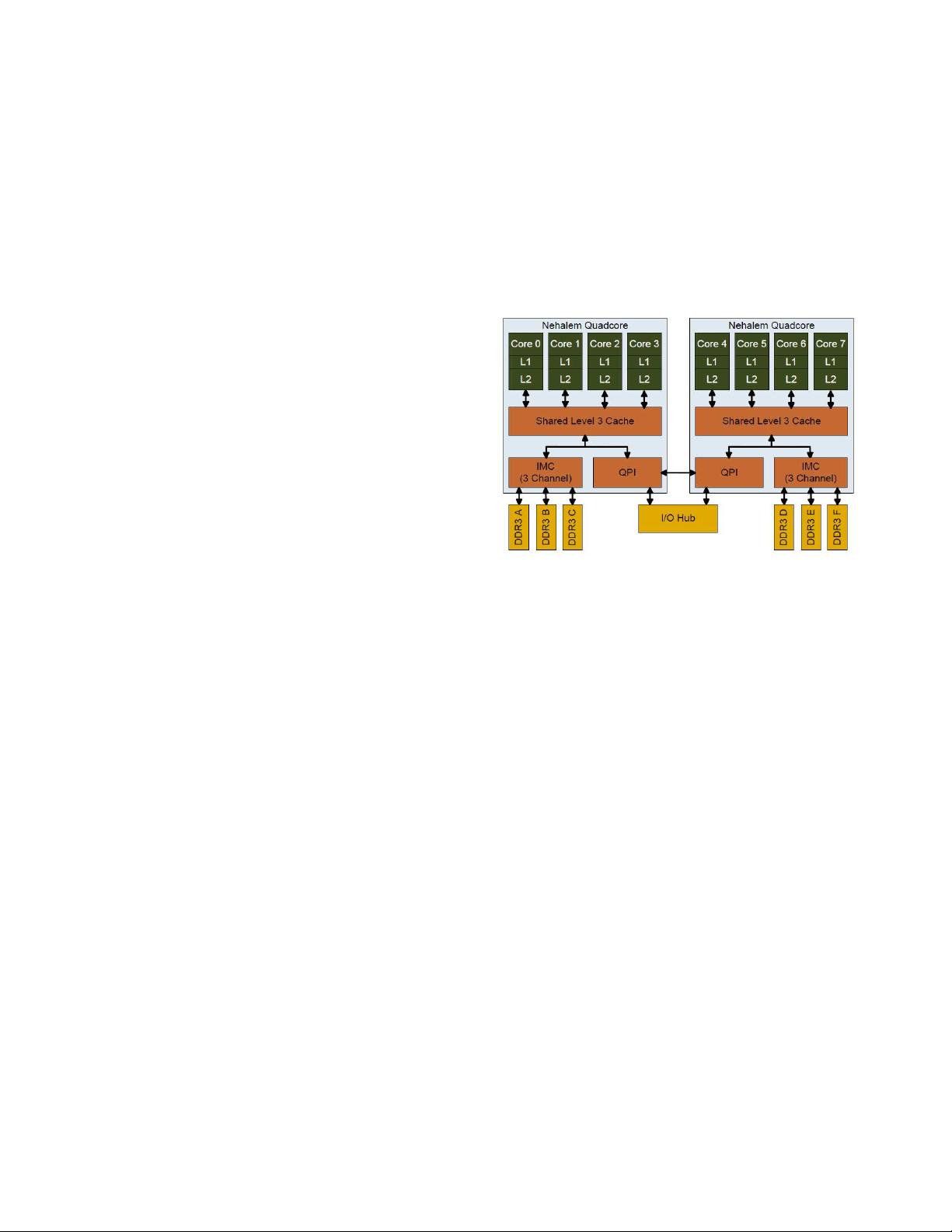

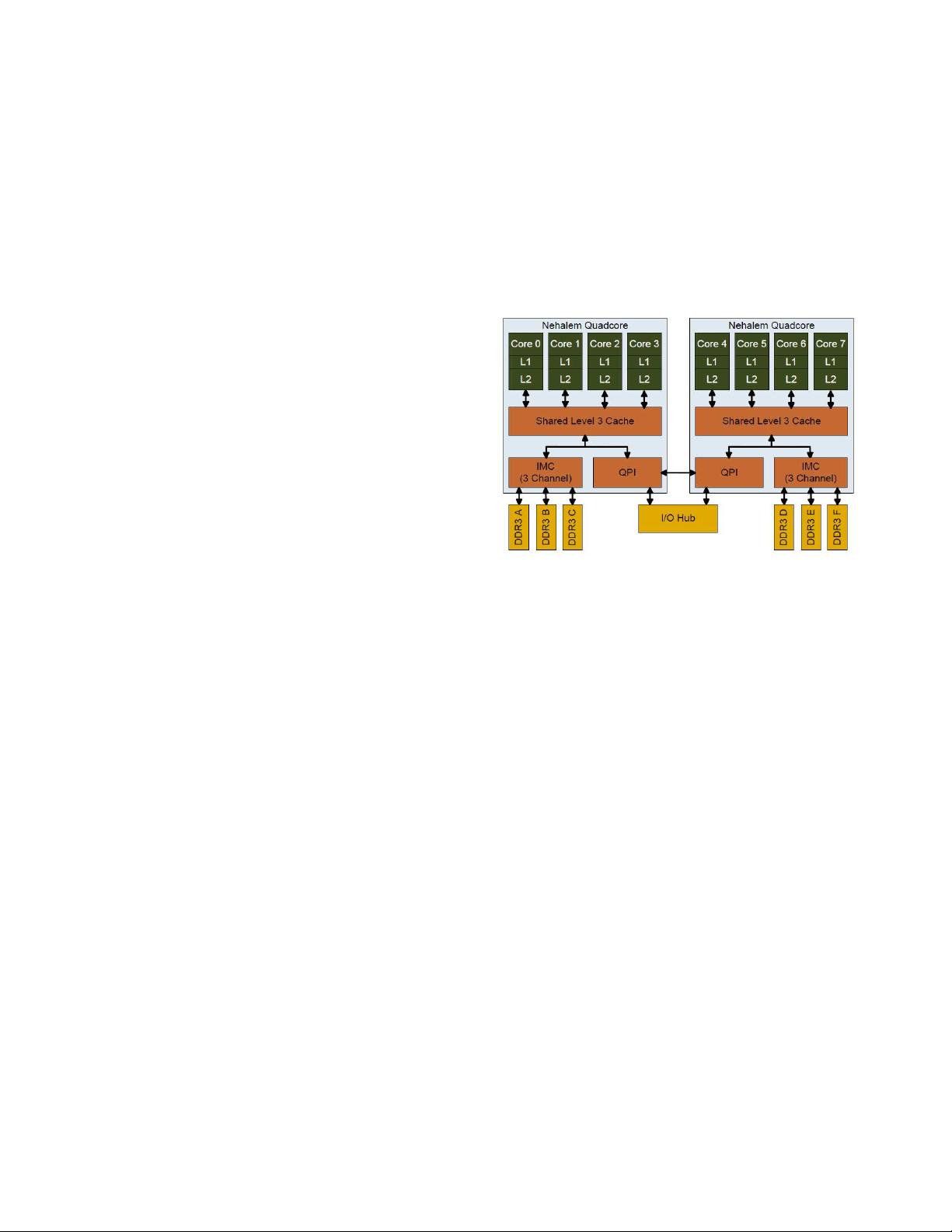

Fig. 1. Eight-core Nehalem Processor [1]

Beckton model can have eight cores, and four QPI bus con-

trollers [5]. The architecture allows the cores to communicate

very effectively in either case. The specifics of the memory

organization are described in detail later.

Figure 1 shows an example of an eight-core Nehalem processor with two QPI bus controllers. This is the processor configuration used in [1].
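To make the building-block idea concrete, the two models named above can be written down as configurations of the same blocks. The following is only an illustrative sketch: the class name `NehalemConfig` and its field names are my own, and any block count that the text does not state is left as `None` rather than guessed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class NehalemConfig:
    """Hypothetical description of a Nehalem part as a set of building blocks."""
    name: str
    cores: int                          # each core carries its own L2 cache
    qpi_controllers: int
    memory_controllers: Optional[int]   # None means "not stated in the text"

    def summary(self) -> str:
        imc = ("unspecified" if self.memory_controllers is None
               else str(self.memory_controllers))
        return (f"{self.name}: {self.cores} cores, "
                f"{self.qpi_controllers} QPI, {imc} IMC")


# Block counts as given in the text; Beckton's memory controller count is not given.
BLOOMFIELD = NehalemConfig("Bloomfield", cores=4, qpi_controllers=1,
                           memory_controllers=1)
BECKTON = NehalemConfig("Beckton", cores=8, qpi_controllers=4,
                        memory_controllers=None)

for cfg in (BLOOMFIELD, BECKTON):
    print(cfg.summary())
```

The point of the sketch is that a desktop part and a server part differ only in how many of each block they instantiate, not in the blocks themselves.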

B. Branch Prediction

Another significant improvement in the Nehalem microarchitecture involves branch prediction. For the Core architecture, Intel designed what it calls a “Loop Stream Detector,” which detects loops in code execution and saves their instructions in a special buffer so that they do not need to be continually fetched from cache. This increased branch prediction success for loops and improved performance. Intel engineers took the concept further in Nehalem by placing the Loop Stream Detector after the decode stage, which eliminates instruction decode from repeated loop iterations and saves CPU cycles.
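The effect of moving the Loop Stream Detector can be illustrated with a toy cost model. This is a sketch under loud assumptions, not a description of the real hardware: the per-stage costs are hypothetical, the function `front_end_work` is my own, and the model simply adds up stage work per instruction while ignoring pipelining, buffer capacity, and misprediction.

```python
# Hypothetical per-instruction stage costs (arbitrary units, illustrative only).
FETCH_COST = 1
DECODE_COST = 1
EXECUTE_COST = 1

MODES = ("none", "pre_decode", "post_decode")


def front_end_work(body_len: int, iterations: int, lsd: str) -> int:
    """Total stage work for a loop of `body_len` instructions.

    lsd="none":        every iteration fetches and decodes the loop body.
    lsd="pre_decode":  the buffer holds fetched instructions (Core-style),
                       so later iterations skip fetch but still decode.
    lsd="post_decode": the buffer holds decoded instructions (Nehalem-style),
                       so later iterations skip both fetch and decode.
    """
    if lsd not in MODES:
        raise ValueError(f"lsd must be one of {MODES}")
    if iterations <= 0:
        return 0
    # The first iteration always pays for every stage while the buffer fills.
    first = body_len * (FETCH_COST + DECODE_COST + EXECUTE_COST)
    if lsd == "none":
        per_instr = FETCH_COST + DECODE_COST + EXECUTE_COST
    elif lsd == "pre_decode":
        per_instr = DECODE_COST + EXECUTE_COST
    else:
        per_instr = EXECUTE_COST
    return first + (iterations - 1) * body_len * per_instr


for mode in MODES:
    print(mode, front_end_work(body_len=4, iterations=10, lsd=mode))
```

Under these assumed costs, each step from no buffer, to a buffer before decode, to a buffer after decode removes one stage of repeated work per instruction, which is the saving the paragraph above describes.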

C. Out-of-order Execution

Out-of-order execution also greatly increases the performance of the Nehalem architecture. This feature allows the processor to fill pipeline stalls with useful instructions so that pipeline efficiency is maximized. Out-of-order execution was present in the Core architecture, but in the Nehalem