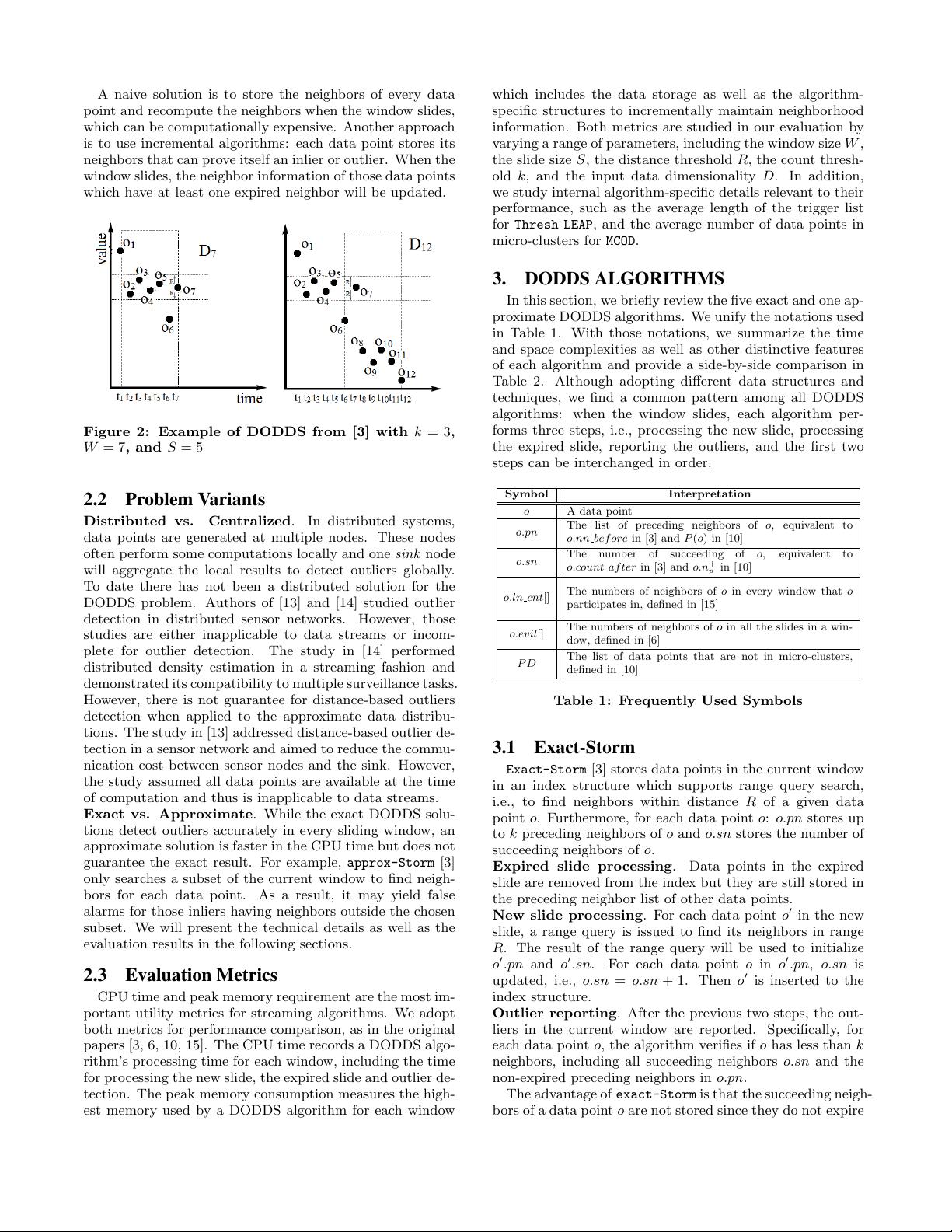

A naive solution is to store the neighbors of every data

point and recompute the neighbors when the window slides,

which ca n be computationally expensive. Another approach

is to use incremental algorithms: each data poi nt stores its

neighbors that can prove itself an inlier o r outlier. When the

window slides, the neighbor information of those data points

which have at least o n e expired neighbor will be updated.

Figure 2: Example of DODDS from [3] with k = 3,

W = 7, and S = 5

2.2 Problem Variants

Distributed vs. Centralized. In distributed systems,

data points are generated at multiple nodes. These nodes

often perform some computations locally and one sink node

will ag g rega t e the local results to detect outliers g l ob a l ly.

To date there has not been a distributed solution for the

DODDS problem. Authors of [13] and [14] studied outlier

detection in distributed sensor networks. However, those

studies are either inapplicable to da t a streams or incom-

plete for ou t l ier detection. The study i n [14] performed

distributed density estimation in a streaming fashion and

demonstrated its compatibility to multiple surveillance tasks.

However, there is not guarantee for distance-based outliers

detection when applied to the approximate data d ist ri b u -

tions. The study in [1 3 ] addressed distance-based outlier de-

tection in a sen so r network and aimed to reduce the commu-

nication cost between sensor nodes and the sink. However,

the study assumed all data points are available at the time

of computation and thus is ina p p l ica b l e to data streams.

Exact vs. Approximate. While the exact DODDS solu-

tions det ec t outliers accurately in every sliding window, an

approximate solution is faster in the CPU time but does not

guarantee the exact result. For example, approx-Storm [3]

only searches a subset of the current window to find neigh-

bors for each data point. As a result, it may yield false

alarms for tho se inliers having neighbors outsid e the chosen

subset. We will present the technical details as well as the

evaluation results in th e following sect i o n s.

2.3 Evaluation Metrics

CPU time and peak memory requirement are the most im-

p

ortant utility metrics for streaming algorithms. We adopt

both metrics for performance comparison, as in the original

papers [3, 6, 10, 15]. The CPU time records a DODDS alg o -

rithm’s processing time for each window, including the time

for processing the new slide, the expired slide and outlier de-

tection. The peak memory consumption measures the high-

est memory used by a DODDS alg o rith m for each window

which includes the data st o ra g e as well as the algorithm-

specific struct u res to incrementally maintain neighborhood

information. Both metrics are studied in our evaluation by

varying a range of parameters, including the window size W ,

the slide size S, the distance threshold R, the count thresh-

old k, and the input data dimensionality D. In addition,

we study internal algorithm-specific details relevant to their

performance, such a s the average length of the trigger list

for Thresh

LEAP, and the average number of data points in

micro-clusters for MCOD.

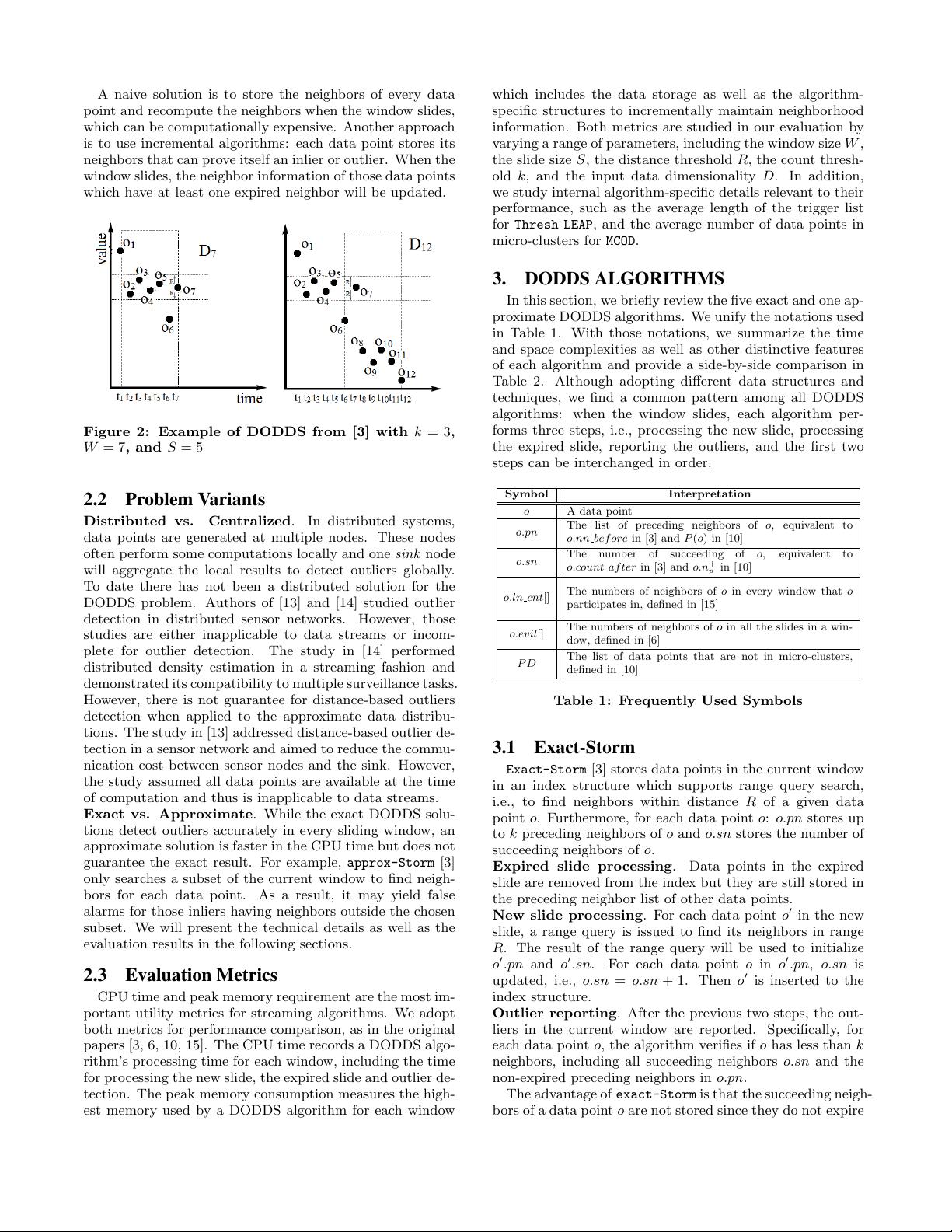

3. DODDS ALGORITHMS

In this section, we briefly review the five exact and one ap-

proximate DODDS algorithms. We unify the notations used

in Table 1. With those notations, we summarize the time

and space complexities as well as other distinctive features

of each algorithm and provide a side-by-side comparison in

Table 2. Although adopting different data structures and

techniques, we find a common pattern among all DODDS

algorithms: when the window slides, each algorithm per-

forms three steps, i.e., p rocessing the new sli d e, processing

the expired slide, reporting the outliers, and the first two

steps can be interchanged in order.

Symbol Interpretation

o A data point

o.pn

The list of preceding neighbors of o, equivalent to

o.nn bef ore in [3] and P(o) in [10]

o.sn

The number of succeeding of o, equivalent to

o.count af ter in [3] and o.n

+

p

in [10]

o.ln cnt[]

The numbers of neighbors of o in every window that o

participates in, defined in [15]

o.evil[]

The numbers of neighbors of o in all the slides in a win -

dow, d efi n ed in [6]

P D

The list of data points that are not in micro-clusters,

defined in [10]

Table 1: Frequently Used Symbols

3.1 Exact-Storm

Exact-Storm [

3] stores data points in the cu rrent window

in an index structure which supports range query search,

i.e., to find neighbors within distance R of a given data

point o. Furtherm o re, for each data point o: o.pn stores up

to k preceding neighbors of o a n d o.sn stores the number of

succeeding neighbors of o.

Expired slide processing. Data points in the expired

slide are removed from the index but they are still stored in

the preceding neighbor list of other d a t a points.

New slide processing. For each data point o

′

in the new

s

lide, a range query is issued to find its nei ghbors in range

R. The result of the range query will b e used to initialize

o

′

.pn a

nd o

′

.sn. For each data point o in o

′

.pn, o.sn is

updated, i.e., o.sn = o.sn + 1. Then o

′

is inserted to the

index structure.

Outlier reporting. After the previous two steps, the out-

liers in the current window are reported. Specifically, for

each data point o, the algorithm verifies if o has less than k

neighbors, including all succeeding neighbors o.sn and the

non-expired preceding neighbors in o .p n .

The advantage of exact-Storm is tha t t h e suc c eed in g n eigh -

bors of a data point o are not stored since they do not expire

1091