[Figure 2 panels (a), (b), (c): each panel shows the data (D) and parity (P) chunk layout on SSD 0 to SSD 3 before and after the incoming requests (1. Update, 2. Update, 3. Update). Legend: valid chunk, invalid chunk. Additional labels: Log device, Cache.]

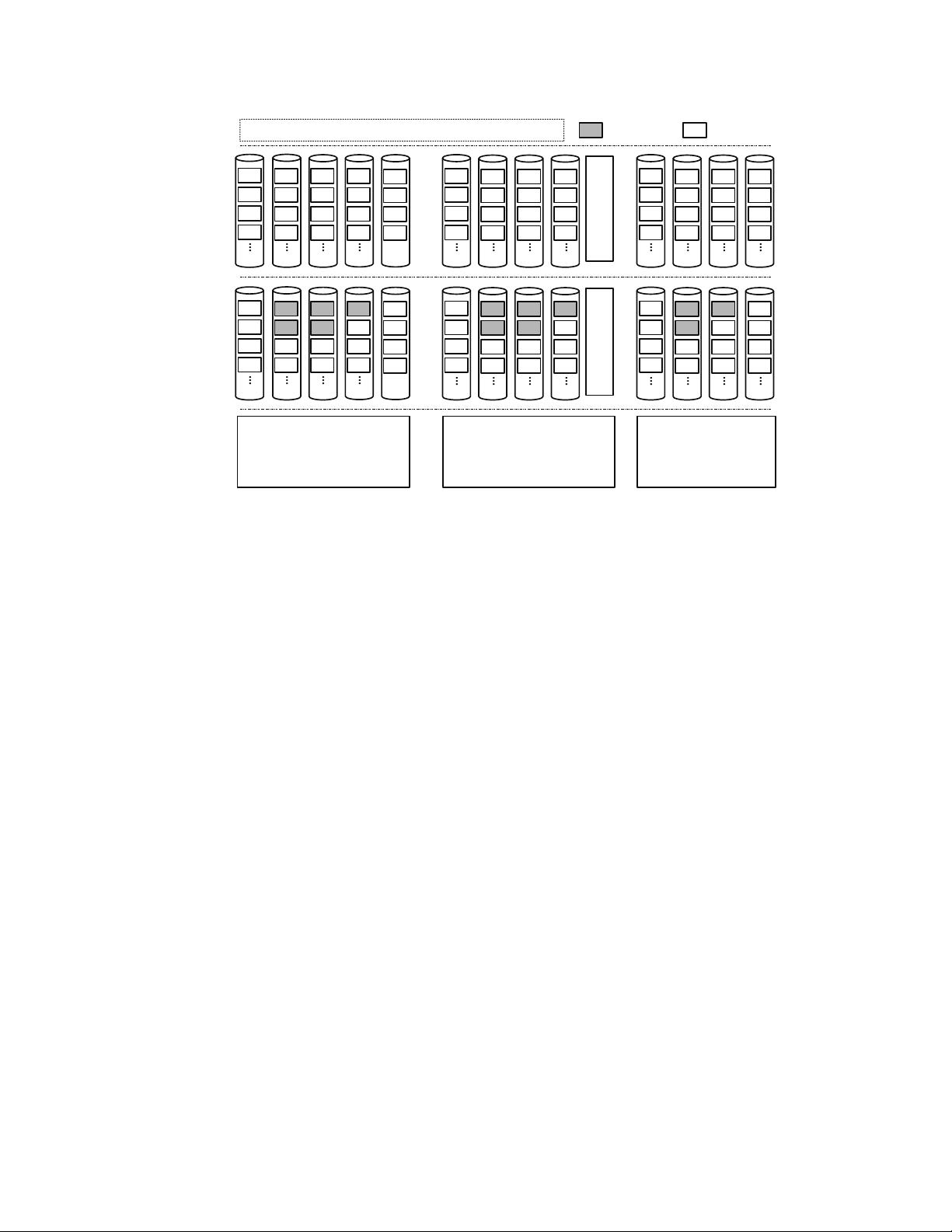

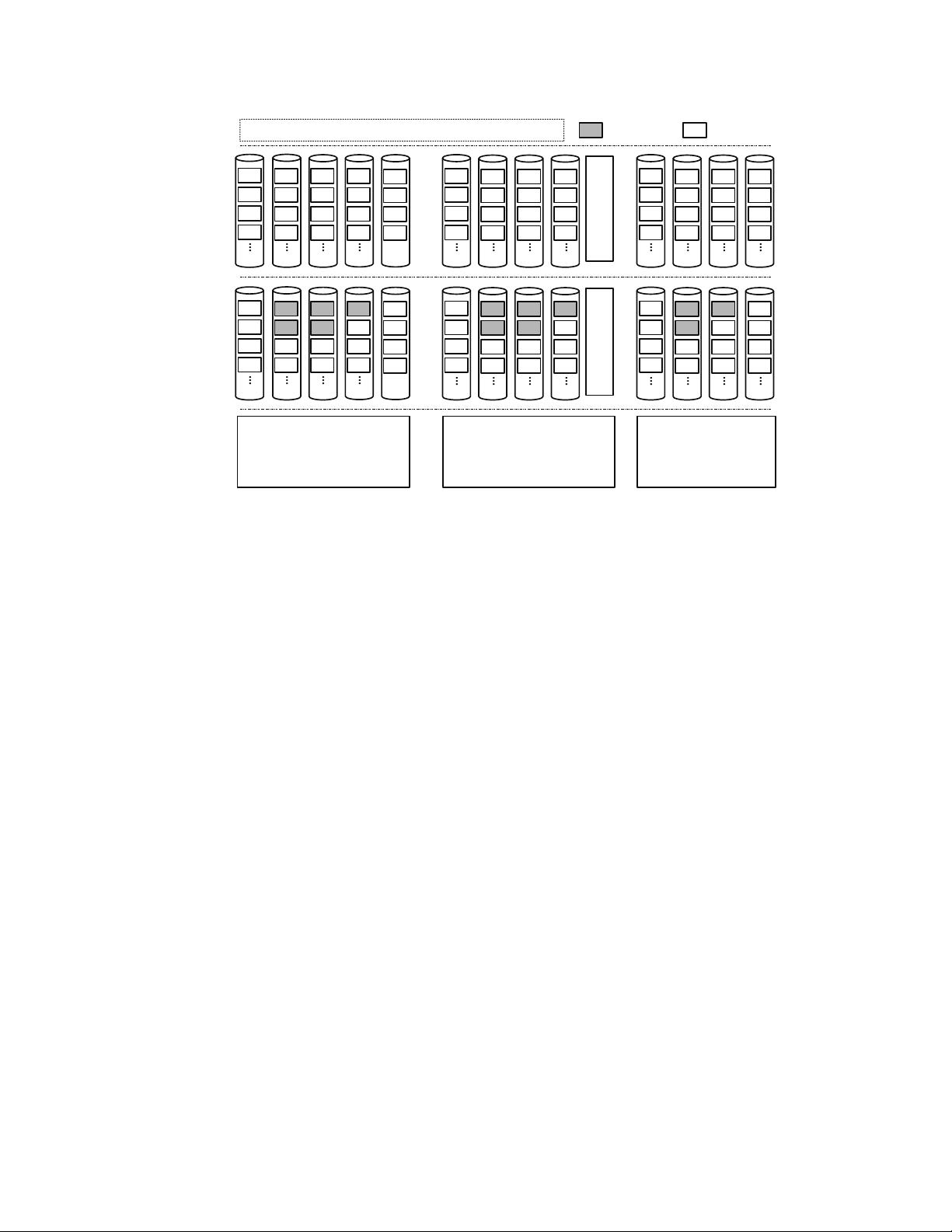

Fig. 2: Three categories of enhanced RAID schemes: (a) Parity Logging; (b) Parity Caching; (c) Elastic Striping

a round-robin manner for load balance. When data chunks are

updated, their corresponding parity chunks will be updated as

well via either read-modify-write or read-reconstruction-write.

We let the chunk size be equal to the page size in this paper.
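The two parity-update paths named above can be illustrated with a minimal sketch. It assumes a single XOR parity per stripe (RAID-5 style), which this passage does not state explicitly; the helper names `xor_chunks`, `parity_rmw`, and `parity_rrw` are hypothetical and chosen only for this example.

```python
from functools import reduce

def xor_chunks(*chunks: bytes) -> bytes:
    """Bytewise XOR of equally sized chunks."""
    return bytes(reduce(lambda a, b: a ^ b, col) for col in zip(*chunks))

def parity_rmw(old_parity: bytes, old_data: bytes, new_data: bytes) -> bytes:
    # Read-modify-write: read the old data chunk and the old parity,
    # then fold the change into the parity.
    return xor_chunks(old_parity, old_data, new_data)

def parity_rrw(new_data: bytes, untouched: list) -> bytes:
    # Read-reconstruction-write: read the data chunks of the stripe that
    # are NOT being updated and recompute the parity from scratch.
    return xor_chunks(new_data, *untouched)

# Toy stripe with three 2-byte data chunks (assumed values).
d0, d1, d2 = b"\x01\x02", b"\x10\x20", b"\x0f\xf0"
p0 = xor_chunks(d0, d1, d2)

d1_new = b"\xaa\x55"
p0_rmw = parity_rmw(p0, d1, d1_new)
p0_rrw = parity_rrw(d1_new, [d0, d2])
```

Both paths produce the same new parity; they differ only in which chunks must be read first, which is why the cheaper one depends on how many chunks of the stripe are being updated.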

C. Enhanced RAID Schemes

As we discussed in §I, parity update in a RAID will introduce extra I/Os and degrade both the performance and endurance of SSD RAID. Various RAID schemes are developed to reduce the I/Os caused by parity update, and we classify them into three categories: parity logging [29, 23, 36], parity caching [7, 13, 18, 20], and elastic striping [16].

Fig. 2 illustrates the three schemes. Suppose that there are six data chunks $D_0$, $D_1$, $D_2$, $D_3$, $D_4$, $D_5$ and two parity chunks $P_0$, $P_1$ stored in an SSD RAID array at the beginning, and the incoming requests are: (1) updating $D_1$ to $D_1^*$, (2) updating $D_2$ to $D_2^*$, (3) updating $D_4$ to $D_4^*$. We assume that the three update requests arrive sequentially.

Fig. 2(a) illustrates the parity logging scheme. It usually employs a dedicated device to log data writes, e.g., an HDD used as the log device to absorb small writes. With parity logging, $D_1$ is updated to $D_1^*$ in SSD 1, and a delta, $L_1$, which is computed by XOR-ing $D_1$ with $D_1^*$, is logged on the log device. Then $D_2$ is updated to $D_2^*$ in SSD 2, and $L_2$ is also logged. When serving the last request, it writes $D_4^*$ into SSD 1 and writes $L_4$ into the HDD. Finally, it uses the computation shown in Fig. 2(a) to update $P_0$ to $P_0^*$ and $P_1$ to $P_1^*$ in SSD 3 and SSD 2, respectively. We note that the number of parity writes is reduced, and so is the number of writes to SSDs. However, parity logging requires a dedicated device to log small writes, and it may also harm system-level wear-leveling.
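The parity logging walk-through can be sketched as follows. This is an illustration under stated assumptions, not the implementation of any cited system: it assumes XOR parity, three data chunks per stripe, and that the deferred parity update is the old parity XOR-ed with all logged deltas of that stripe. The names `log_device`, `update`, and `apply_log` are hypothetical.

```python
def xor(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR of two equally sized chunks."""
    return bytes(x ^ y for x, y in zip(a, b))

K = 3  # data chunks per stripe (assumed, matching the six-chunk example)

# Toy 2-byte chunks D0..D5 and their initial parities P0, P1.
data = [bytes([i + 1] * 2) for i in range(6)]
parity = [
    xor(xor(data[0], data[1]), data[2]),
    xor(xor(data[3], data[4]), data[5]),
]
log_device = []  # stands in for the dedicated log device (e.g., an HDD)

def update(chunk_id: int, new_payload: bytes) -> None:
    # Log the delta (old XOR new) instead of touching the parity chunk.
    log_device.append((chunk_id // K, xor(data[chunk_id], new_payload)))
    data[chunk_id] = new_payload

def apply_log() -> int:
    # Merge the deltas per stripe, then write each affected parity once.
    merged = {}
    for stripe, delta in log_device:
        merged[stripe] = xor(merged[stripe], delta) if stripe in merged else delta
    for stripe, delta in merged.items():
        parity[stripe] = xor(parity[stripe], delta)
    log_device.clear()
    return len(merged)  # number of parity chunk writes issued to SSDs

update(1, b"\xa1\xa1")
update(2, b"\xa2\xa2")
update(4, b"\xa4\xa4")
parity_writes = apply_log()
```

In this toy run the three data updates cost two parity writes instead of three, because the deltas for the first two updates fall in the same stripe and are merged before the parity is rewritten.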

Fig. 2(b) shows an example of the parity caching scheme. Parity caching uses a buffer to cache all incoming writes so as to delay parity updates. The key idea is that if more chunks are updated together, the chance of constructing full-stripe writes becomes larger, so the I/Os caused by parity update can be reduced. As shown in Fig. 2(b), it buffers $D_1^*$ and $D_2^*$ in the cache first, and then flushes them to SSDs and updates $P_0$ to $P_0^*$. The same process applies when updating $D_4$ and $P_1$. Parity caching can also reduce parity writes, but it usually requires a dedicated NVRAM, which is expensive and still not mature in the current market. Note that $D_1$, $D_2$, $D_4$, $P_0$ and $P_1$ in both Fig. 2(a) and Fig. 2(b) are marked invalid at the device level after the data update, and they can be reclaimed automatically by the corresponding SSDs.
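A minimal sketch of the parity caching idea follows, assuming XOR parity, three data chunks per stripe, and a flush that recomputes each dirty stripe's parity once from its current data chunks. The class `ParityCachingArray`, its methods, and the flush timing are hypothetical; real schemes decide when to flush based on cache capacity and policy.

```python
from functools import reduce

def xor_all(chunks) -> bytes:
    """Bytewise XOR over a list of equally sized chunks."""
    return bytes(reduce(lambda a, b: a ^ b, col) for col in zip(*chunks))

class ParityCachingArray:
    def __init__(self, chunks, k=3):
        self.k = k                      # data chunks per stripe (assumed)
        self.data = list(chunks)
        self.parity = [xor_all(self.data[s:s + k])
                       for s in range(0, len(self.data), k)]
        self.cache = {}                 # chunk id -> newest buffered payload
        self.parity_writes = 0

    def write(self, chunk_id, payload):
        # Buffer the write; no SSD or parity I/O happens yet.
        self.cache[chunk_id] = payload

    def flush(self):
        # Write buffered chunks, then update each dirty stripe's parity once.
        dirty_stripes = set()
        for chunk_id, payload in self.cache.items():
            self.data[chunk_id] = payload
            dirty_stripes.add(chunk_id // self.k)
        for stripe in dirty_stripes:
            start = stripe * self.k
            self.parity[stripe] = xor_all(self.data[start:start + self.k])
            self.parity_writes += 1
        self.cache.clear()

array = ParityCachingArray([bytes([i + 1] * 2) for i in range(6)])
array.write(1, b"\xa1\xa1")
array.write(2, b"\xa2\xa2")
array.flush()                 # one parity write covers both updates
array.write(4, b"\xa4\xa4")
array.flush()
```

Because the two updates to the first stripe are flushed together, its parity is rewritten once rather than twice, which is the saving the scheme relies on.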

As shown in Fig. 2(c), elastic striping manages write requests in a log-structured manner. It appends $D_1^*$, $D_2^*$ and $D_4^*$ to the RAID array to construct a new stripe instead of updating them in the original devices. Note that $D_1$, $D_2$ and $D_4$ are out-of-date but still need to be kept in SSDs for data protection, and this consumes extra storage space. Therefore, elastic striping marks these chunks as invalid at the RAID level and invokes a RAID-level GC operation to reclaim the space occupied by these invalid chunks. This scheme can effectively reduce parity writes, mitigate the performance degradation caused by RMW and RRW, and benefit the wear-leveling among SSDs.
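The append-and-invalidate behavior can be sketched as below. This is a simplified illustration, assuming XOR parity and three data chunks per stripe; the class `ElasticStripingArray` and its fields are hypothetical, device placement is ignored, and RAID-level GC itself is not modeled.

```python
from functools import reduce

def xor_all(chunks) -> bytes:
    """Bytewise XOR over a list of equally sized chunks."""
    return bytes(reduce(lambda a, b: a ^ b, col) for col in zip(*chunks))

class ElasticStripingArray:
    def __init__(self, k=3):
        self.k = k             # data chunks per stripe (assumed)
        self.stripes = []      # each: {"data": [bytes, ...], "parity": bytes}
        self.location = {}     # logical chunk id -> (stripe index, slot)
        self.invalid = set()   # (stripe, slot) pairs awaiting RAID-level GC
        self.pending = []      # appended writes not yet sealed into a stripe

    def write(self, chunk_id, payload):
        # Every write, new or update, is appended rather than done in place.
        self.pending.append((chunk_id, payload))
        if len(self.pending) == self.k:
            self._seal()

    def _seal(self):
        stripe_idx = len(self.stripes)
        payloads = [p for _, p in self.pending]
        # One parity write for the whole new stripe.
        self.stripes.append({"data": payloads, "parity": xor_all(payloads)})
        for slot, (chunk_id, _) in enumerate(self.pending):
            if chunk_id in self.location:
                # The old copy stays on disk (its stripe's parity still
                # covers it) and is only marked invalid at the RAID level.
                self.invalid.add(self.location[chunk_id])
            self.location[chunk_id] = (stripe_idx, slot)
        self.pending = []

array = ElasticStripingArray(k=3)
for i in range(6):                      # initial chunks D0..D5
    array.write(i, bytes([i + 1] * 2))
for i in (1, 2, 4):                     # the three update requests
    array.write(i, bytes([0xA0 + i] * 2))
```

After the three updates the sketch holds a third stripe with a single new parity chunk, while the stale copies in the first two stripes are scattered invalid slots, which is the situation that makes RAID-level GC costly.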

Moreover, elastic striping seems to be suitable for SSD RAID, as out-of-place overwrite is usually adopted by SSDs. The primary issue of this scheme is that the RAID-level GC cost may be very high if invalid chunks are scattered over the stripes in the whole RAID array. This motivates us to develop a workload-aware scheme to reduce the RAID-level GC cost so as to improve the SSD RAID performance. We further discuss
