P1: OSO/OVY P2: OSO/OVY QC: OSO/OVY T1: OSO

das23402

Ch00 GTBL020-Dasgupta-v10 August 2, 2006 2:44

6 0.3 Big-O notation

Arithmetic operations on arbitrarily large numbers cannot possibly be performed

in a single, constant-time step. We need to audit our earlier running time estimates

and make them more honest.

We will see in Chapter 1 that the addition of two n-bit numbers takes time roughly

proportional to n; this is not too hard to understand if you think back to the grade-

school procedure for addition, which works on one digit at a time. Thus fib1,

which performs about F

n

additions, actually uses a number of basic steps roughly

proportional to nF

n

. Likewise, the number of steps taken by fib2 is proportional

to n

2

, still polynomial in n and therefore exponentially superior to fib1. This cor-

rection to the running time analysis does not diminish our breakthrough.

But can we do even better than fib2? Indeed we can: see Exercise 0.4.

0.3 Big-O notation

We’ve just seen how sloppiness in the analysis of running times can lead to an

unacceptable level of inaccuracy in the result. But the opposite danger is also

present: it is possible to be too precise. An insightful analysis is based on the right

simplifications.

Expressing running time in terms of basic computer steps is already a simplifica-

tion. After all, the time taken by one such step depends crucially on the particu-

lar processor and even on details such as caching strategy (as a result of which

the running time can differ subtly from one execution to the next). Account-

ing for these architecture-specific minutiae is a nightmarishly complex task and

yields a result that does not generalize from one computer to the next. It there-

fore makes more sense to seek an uncluttered, machine-independent characteriza-

tion of an algorithm’s efficiency. To this end, we will always express running time

by counting the number of basic computer steps, as a function of the size of the

input.

And this simplification leads to another. Instead of reporting that an algorithm takes,

say, 5n

3

+ 4n + 3 steps on an input of size n, it is much simpler to leave out lower-

order terms such as 4n and 3 (which become insignificant as n grows), and even the

detail of the coefficient 5 in the leading term (computers will be five times faster in

a few years anyway), and just say that the algorithm takes time O(n

3

) (pronounced

“big oh of n

3

”).

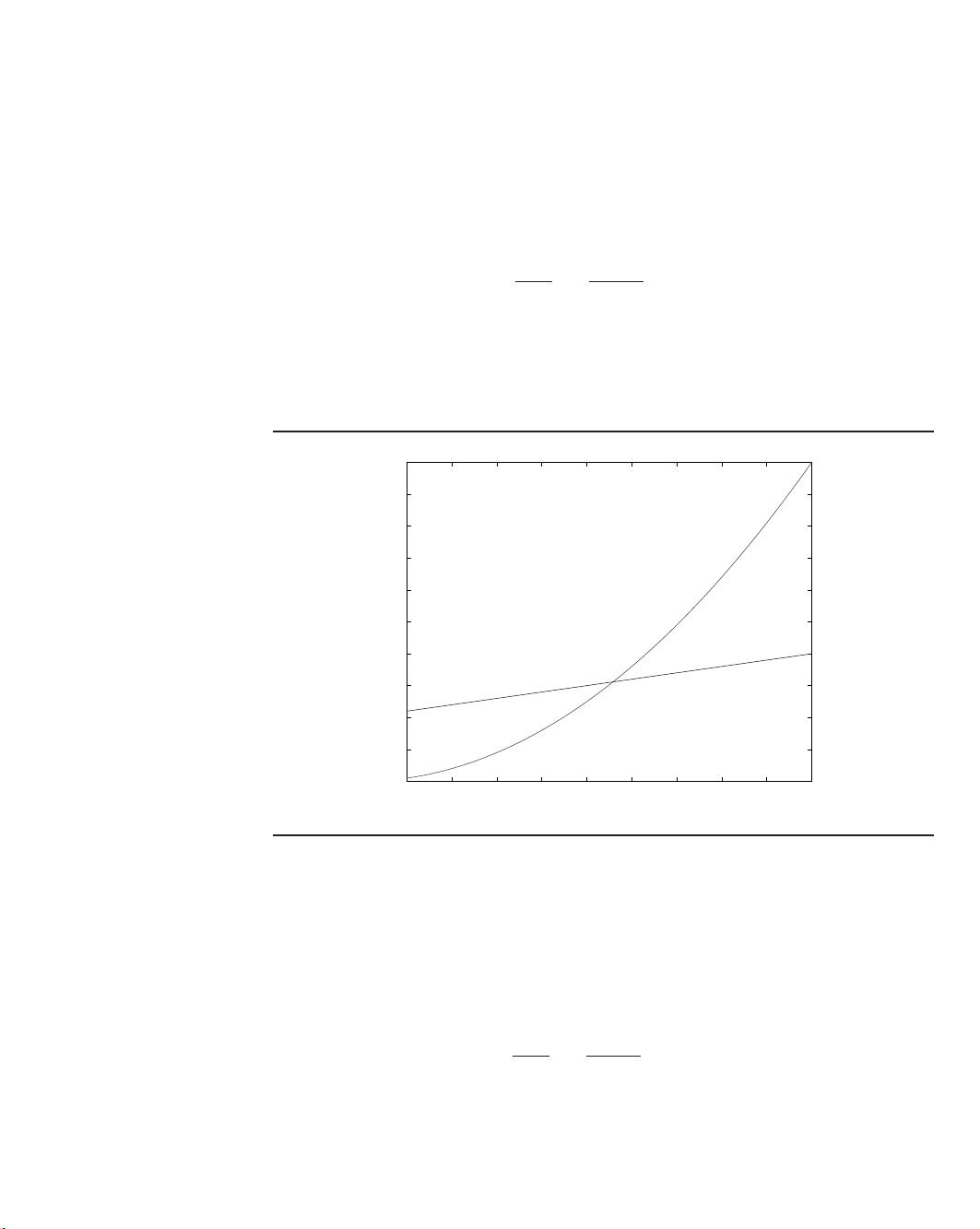

It is time to define this notation precisely. In what follows, think of f (n) and g(n)

as the running times of two algorithms on inputs of size n.

Let f (n) and g(n) be functions from positive integers to positive reals. We say

f = O(g) (which means that “ f grows no faster than g”) if there is a constant

c > 0 such that f (n) ≤ c · g(n).

Saying f = O (g) is a very loose analog of “ f ≤ g.” It differs from the usual notion

of ≤ because of the constant c, so that for instance 10n = O(n). This constant also

allows us to disregard what happens for small values of n. For example, suppose we