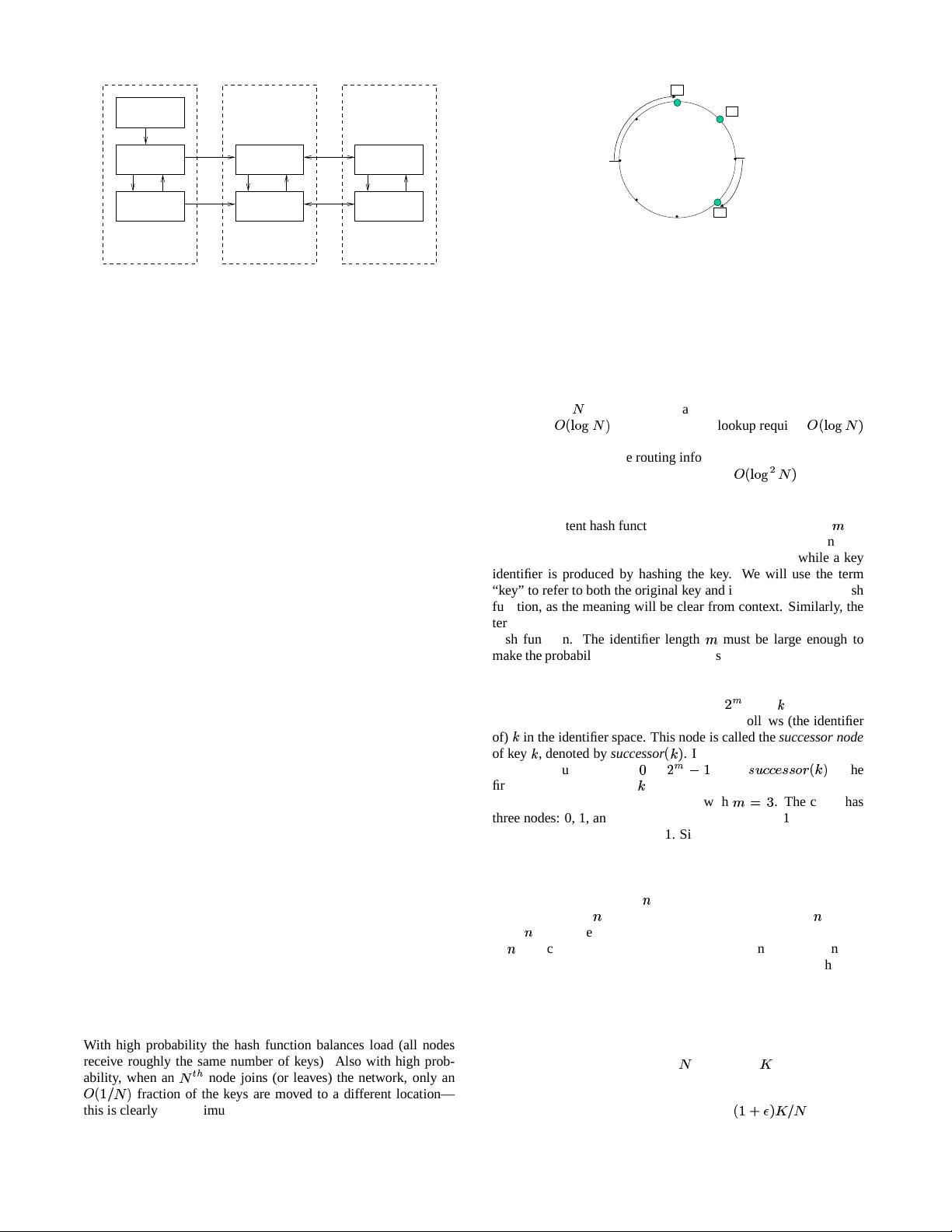

Server

Chord

Chord Chord

File System

Block Store Block Store Block Store

Client Server

Figure 1: Structure of an example Chord-based distributed

storage system.

machine is only occasionally available, they can offer to store

others’ data while they are up, in return for having their data

stored elsewhere when they are down. The data’s name can

serve as a key to identify the (live) Chord node responsible

for storing the data item at any given time. Many of the

same issues arise as in the Cooperative Mirroring applica-

tion, though the focus here is on availability rather than load

balance.

Distributed Indexes to support Gnutella- or Napster-like keyword

search. A key in this application could be derived from the

desired keywords, while values could be lists of machines

offering documents with those keywords.

Large-Scale Combinatorial Search, such as code breaking. In

this case keys are candidate solutions to the problem (such as

cryptographic keys); Chord maps these keys to the machines

responsible for testing them as solutions.

Figure 1 shows a possible three-layered software structure for a

cooperative mirror system. The highest layer would provide a file-

like interface to users, including user-friendly naming and authenti-

cation. This “file system” layer might implement named directories

and files, mapping operations on them to lower-level block opera-

tions. The next layer, a “block storage” layer, would implement

the block operations. It would take care of storage, caching, and

replication of blocks. The block storage layer would use Chord to

identify the node responsible for storing a block, and then talk to

the block storage server on that node to read or write the block.

4. The Base Chord Protocol

The Chord protocol specifies how to find the locations of keys,

how new nodes join the system, and how to recover from the failure

(or planned departure) of existing nodes. This section describes a

simplified version of the protocol that does not handle concurrent

joins or failures. Section 5 describes enhancements to the base pro-

tocol to handle concurrent joins and failures.

4.1 Overview

At its heart, Chord provides fast distributed computation of a

hash function mapping keys to nodes responsible for them. It uses

consistent hashing [11, 13], which has several good properties.

With high probability the hash function balances load (all nodes

receive roughly the same number of keys). Also with high prob-

ability, when an

node joins (or leaves) the network, only an

fraction of the keys are moved to a different location—

this is clearly the minimum necessary to maintain a balanced load.

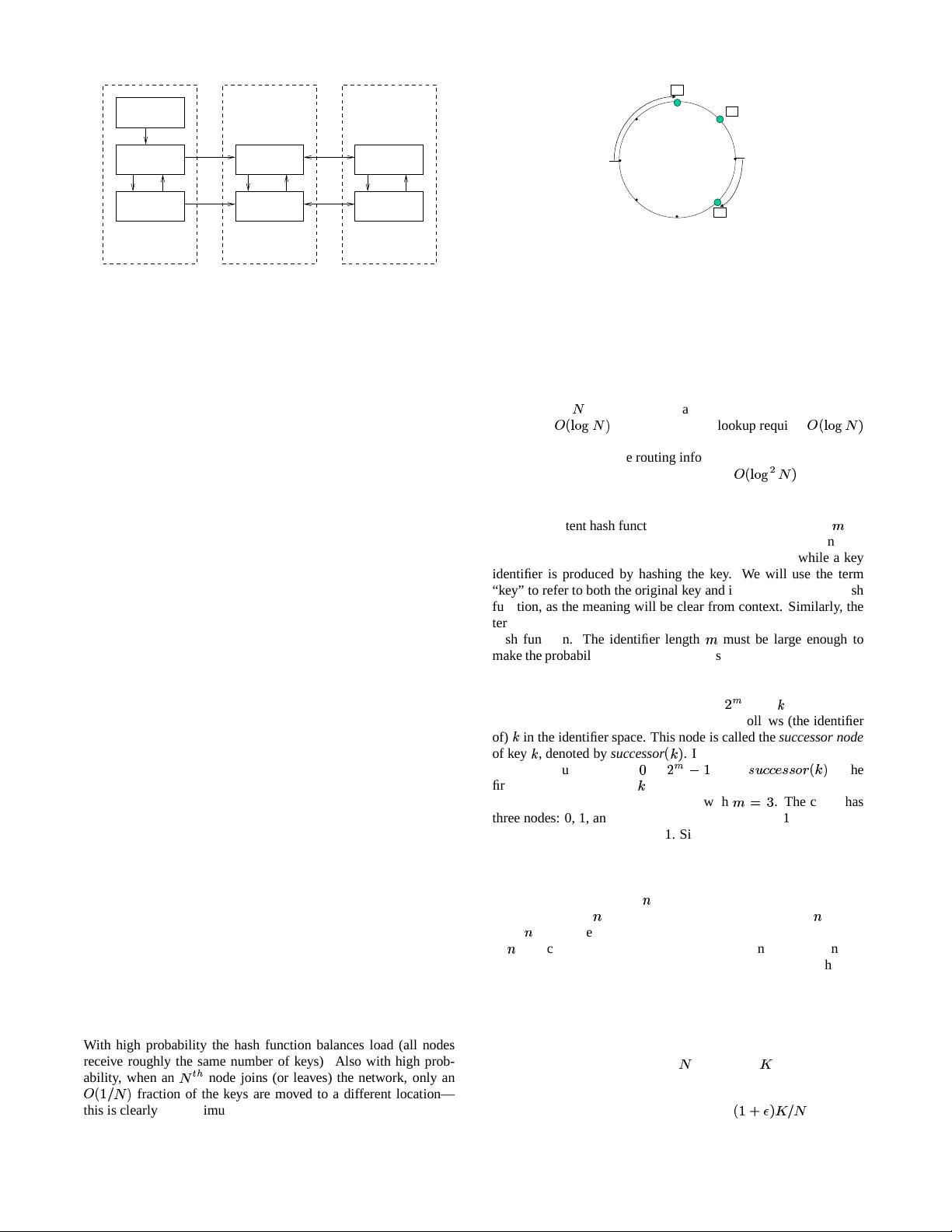

Figure 2: An identifier circle consisting of the three nodes 0, 1,

and 3. In this example, key 1 is located at node 1, key 2 at node

3, and key 6 at node 0.

Chord improves the scalability of consistent hashing by avoid-

ing the requirement that every node know about every other node.

A Chord node needs only a small amount of “routing” informa-

tion about other nodes. Because this information is distributed, a

node resolves the hash function by communicating with a few other

nodes. In an

-node network, each node maintains information

only about

other nodes, and a lookup requires

messages.

Chord must update the routing information when a node joins or

leaves the network; a join or leave requires

messages.

4.2 Consistent Hashing

The consistent hash function assigns each node and key an

-bit

identifier using a base hash function such as SHA-1 [9]. A node’s

identifier is chosen by hashing the node’s IP address, while a key

identifier is produced by hashing the key. We will use the term

“key” to refer to both the original key and its image under the hash

function, as the meaning will be clear from context. Similarly, the

term “node” will refer to both the node and its identifier under the

hash function. The identifier length

must be large enough to

make the probability of two nodes or keys hashing to the same iden-

tifier negligible.

Consistent hashing assigns keys to nodes as follows. Identifiers

are ordered in an identifier circle modulo

. Key

is assigned to

the first node whose identifier is equal to or follows (the identifier

of)

in the identifier space. This node is called the successor node

of key

, denoted by successor

. If identifiers are represented as

a circle of numbers from

to

, then

is the

first node clockwise from

.

Figure 2 shows an identifier circle with

. The circle has

three nodes: 0, 1, and 3. The successor of identifier 1 is node 1, so

key 1 would be located at node 1. Similarly, key 2 would be located

at node 3, and key 6 at node 0.

Consistent hashing is designed to let nodes enter and leave the

network with minimal disruption. To maintain the consistent hash-

ing mapping when a node

joins the network, certain keys previ-

ously assigned to

’s successor now become assigned to

. When

node

leaves the network, all of its assigned keys are reassigned

to

’s successor. No other changes in assignment of keys to nodes

need occur. In the example above, if a node were to join with iden-

tifier 7, it would capture the key with identifier 6 from the node

with identifier 0.

The following results are proven in the papers that introduced

consistent hashing [11, 13]:

THEOREM 1. For any set of

nodes and

keys, with high

probability:

1. Each node is responsible for at most

"!

keys

3