IEEE TRANSACTIONS ON NEURAL NETWORKS, VOL. 5, NO. 6, NOVEMBER 1994, p. 989

Brief Papers

Training Feedforward Networks with the Marquardt Algorithm

Martin T. Hagan and Mohammad B. Menhaj

Abstract: The Marquardt algorithm for nonlinear least squares is presented and is incorporated into the backpropagation algorithm for training feedforward neural networks. The algorithm is tested on several function approximation problems, and is compared with a conjugate gradient algorithm and a variable learning rate algorithm. It is found that the Marquardt algorithm is much more efficient than either of the other techniques when the network contains no more than a few hundred weights.


I. INTRODUCTION

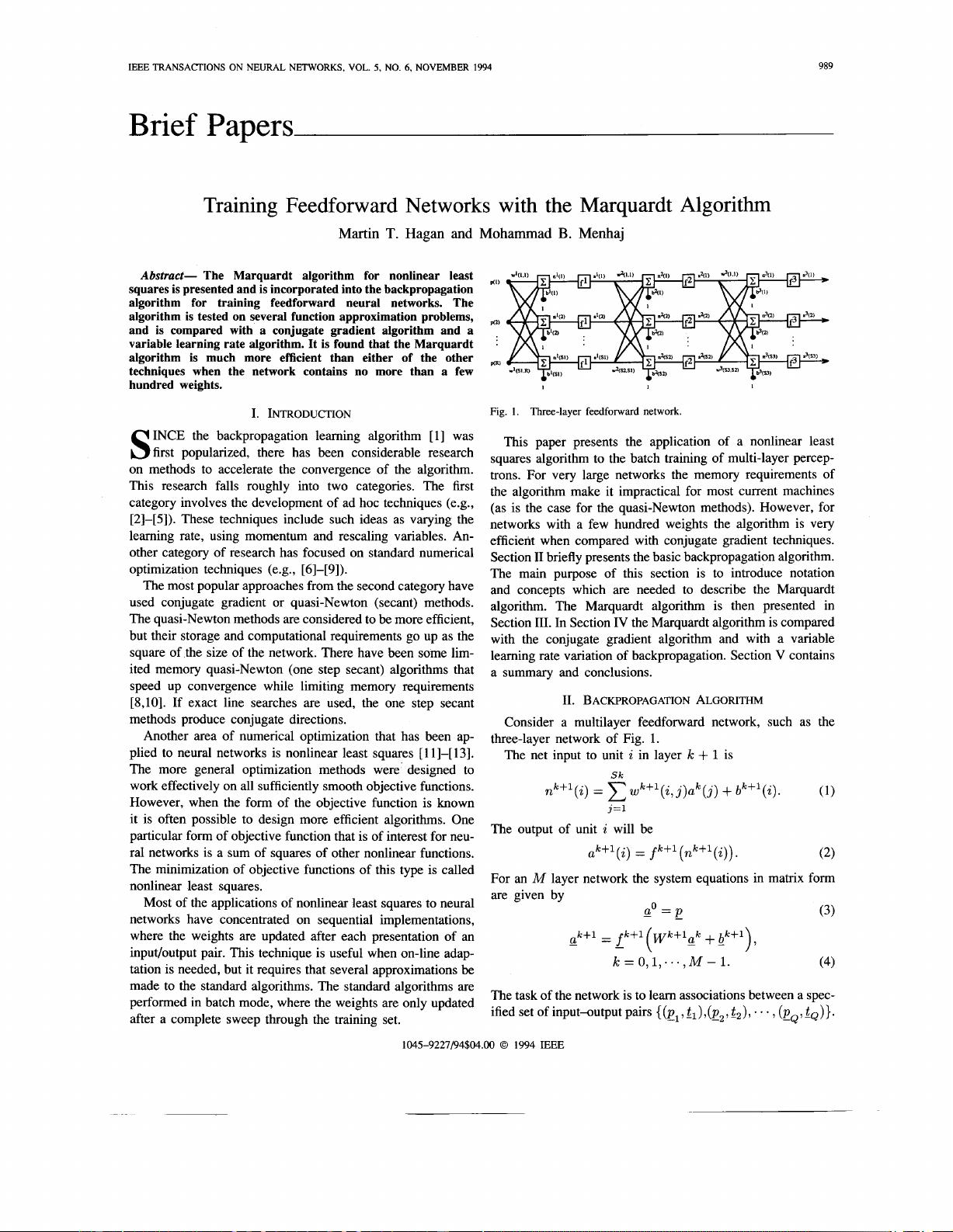

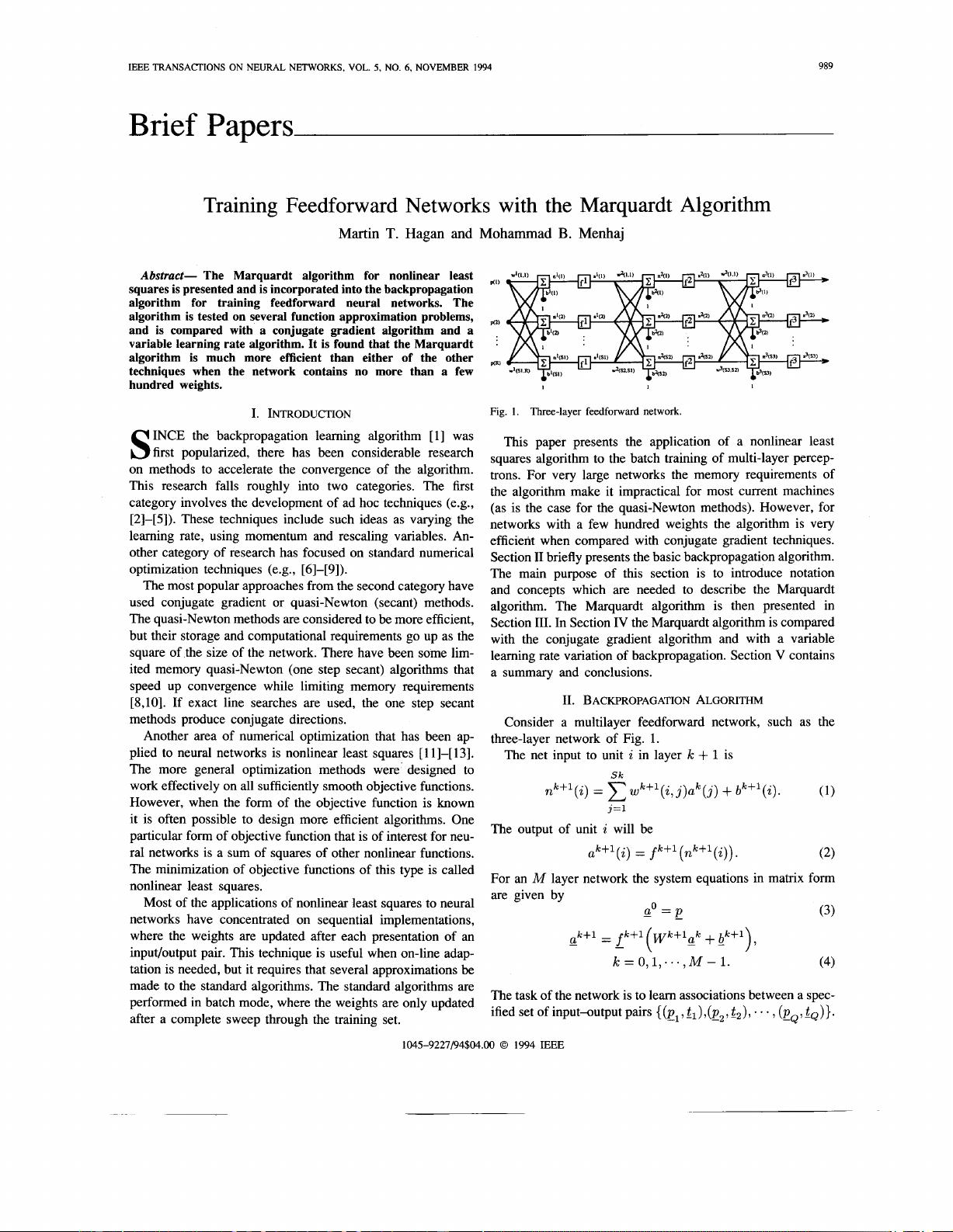

Fig. 1. Three-layer feedforward network.

Since the backpropagation learning algorithm [1] was first popularized, there has been considerable research on methods to accelerate the convergence of the algorithm. This research falls roughly into two categories. The first category involves the development of ad hoc techniques (e.g., [2]-[5]). These techniques include such ideas as varying the learning rate, using momentum, and rescaling variables. Another category of research has focused on standard numerical optimization techniques (e.g., [6]-[9]).

The most popular approaches from the second category have used conjugate gradient or quasi-Newton (secant) methods. The quasi-Newton methods are considered to be more efficient, but their storage and computational requirements go up as the square of the size of the network. There have been some limited memory quasi-Newton (one step secant) algorithms that speed up convergence while limiting memory requirements [8], [10]. If exact line searches are used, the one step secant methods produce conjugate directions.
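To make the quadratic growth in storage concrete, the following sketch counts the weights and biases of a fully connected feedforward network and the entries of a dense square matrix of that dimension, such as a quasi-Newton Hessian approximation. The function names and the example layer sizes are illustrative assumptions, not taken from the paper.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected feedforward network.

    layer_sizes lists the input dimension followed by the number of
    units in each layer (hypothetical convention for this sketch).
    """
    pairs = zip(layer_sizes, layer_sizes[1:])
    # Each unit has one weight per input from the previous layer plus a bias.
    return sum(n_out * (n_in + 1) for n_in, n_out in pairs)


def dense_matrix_entries(n):
    """Entries in a dense n-by-n matrix (e.g. a Hessian approximation)."""
    return n * n


# Illustrative layer sizes only; they are not the test problems of the paper.
for sizes in ([1, 15, 1], [4, 50, 2], [10, 100, 10]):
    n = count_parameters(sizes)
    print(sizes, "parameters:", n, "dense matrix entries:", dense_matrix_entries(n))
```

Under these assumptions, a network with a few hundred parameters already needs on the order of a hundred thousand matrix entries, while a few thousand parameters need millions.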

Another area of numerical optimization that has been applied to neural networks is nonlinear least squares [11]-[13]. The more general optimization methods were designed to work effectively on all sufficiently smooth objective functions. However, when the form of the objective function is known it is often possible to design more efficient algorithms. One particular form of objective function that is of interest for neural networks is a sum of squares of other nonlinear functions. The minimization of objective functions of this type is called nonlinear least squares.
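As a minimal sketch of what "a sum of squares of other nonlinear functions" looks like, the example below builds an objective from per-sample errors of a small nonlinear model. The model `a * tanh(b * p)`, the parameter names, and the data are assumptions chosen for illustration; they are not the networks or problems studied in the paper.

```python
import math


def residuals(params, inputs, targets):
    """Per-sample errors e_q = t_q - model(p_q) for a hypothetical model."""
    a, b = params
    return [t - a * math.tanh(b * p) for p, t in zip(inputs, targets)]


def sum_of_squares(params, inputs, targets):
    """Objective of nonlinear least squares form: the sum of squared errors."""
    return sum(e * e for e in residuals(params, inputs, targets))


# Targets generated by the same model with parameters (2.0, 0.5).
inputs = [-1.0, 0.0, 1.0]
targets = [2.0 * math.tanh(0.5 * p) for p in inputs]

print(sum_of_squares((2.0, 0.5), inputs, targets))  # generating parameters
print(sum_of_squares((1.0, 1.0), inputs, targets))  # a mismatched guess
```

The point of the structure is that the objective is known to be built from individual error terms, which is the extra information a nonlinear least squares method can exploit.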

Most of the applications of nonlinear least squares to neural networks have concentrated on sequential implementations, where the weights are updated after each presentation of an input/output pair. This technique is useful when on-line adaptation is needed, but it requires that several approximations be made to the standard algorithms. The standard algorithms are performed in batch mode, where the weights are only updated after a complete sweep through the training set.

This paper presents the application of a nonlinear least squares algorithm to the batch training of multi-layer perceptrons. For very large networks the memory requirements of the algorithm make it impractical for most current machines (as is the case for the quasi-Newton methods). However, for networks with a few hundred weights the algorithm is very efficient when compared with conjugate gradient techniques. Section II briefly presents the basic backpropagation algorithm. The main purpose of this section is to introduce notation and concepts which are needed to describe the Marquardt algorithm. The Marquardt algorithm is then presented in Section III. In Section IV the Marquardt algorithm is compared with the conjugate gradient algorithm and with a variable learning rate variation of backpropagation. Section V contains a summary and conclusions.

II. BACKPROPAGATION ALGORITHM

Consider a multilayer feedforward network, such as the three-layer network of Fig. 1. The net input to unit $i$ in layer $k+1$ is

$$n^{k+1}(i) = \sum_{j=1}^{S_k} w^{k+1}(i,j)\, a^{k}(j) + b^{k+1}(i). \tag{1}$$

The output of unit $i$ will be

$$a^{k+1}(i) = f^{k+1}\big(n^{k+1}(i)\big). \tag{2}$$

For an $M$ layer network the system equations in matrix form are given by

$$\mathbf{a}^{0} = \mathbf{p} \tag{3}$$

$$\mathbf{a}^{k+1} = \mathbf{f}^{k+1}\big(\mathbf{W}^{k+1}\mathbf{a}^{k} + \mathbf{b}^{k+1}\big), \quad k = 0, 1, \ldots, M-1. \tag{4}$$
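As a rough illustration of the system equations (3) and (4), the sketch below propagates an input vector through the layers one at a time, computing each net input as in (1) and then applying the layer's transfer function. The function names, the use of plain Python lists, and the small 2-2-1 network are assumptions made for this example only.

```python
import math


def forward(weights, biases, transfer_fns, p):
    """Forward pass: start from a^0 = p, then apply each layer in turn.

    weights[k] is a list of rows (one per unit), biases[k] a list of
    scalars, and transfer_fns[k] a scalar function applied elementwise.
    """
    a = list(p)
    for W, b, f in zip(weights, biases, transfer_fns):
        # Net input of each unit: weighted sum of previous outputs plus bias.
        n = [sum(w_ij * a_j for w_ij, a_j in zip(row, a)) + b_i
             for row, b_i in zip(W, b)]
        # Unit outputs: transfer function applied to each net input.
        a = [f(n_i) for n_i in n]
    return a


def linear(n):
    """Identity transfer function, used here for the output layer."""
    return n


# Hypothetical 2-2-1 network with a tanh hidden layer and a linear output.
W1, b1 = [[0.5, -0.5], [1.0, 1.0]], [0.0, -1.0]
W2, b2 = [[1.0, 2.0]], [0.5]

output = forward([W1, W2], [b1, b2], [math.tanh, linear], [1.0, 1.0])
print(output)
```

For the input `[1.0, 1.0]` the hidden net inputs are 0 and 1, so the single output equals `2 * tanh(1) + 0.5`.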

The task of the network is to learn associations between a specified set of input-output pairs $\{(\mathbf{p}_1, \mathbf{t}_1), (\mathbf{p}_2, \mathbf{t}_2), \ldots, (\mathbf{p}_Q, \mathbf{t}_Q)\}$.

1045-9227/94$04.00 © 1994 IEEE