evaluated on different modalities of medical imaging as

shown in Fig. 1. The contributions of this work can be

summarized as follows:

1) Two new models, RU-Net and R2U-Net, are introduced for

medical image segmentation.

2) The experiments are conducted on three different

modalities of medical imaging including retina blood vessel

segmentation, skin cancer segmentation, and lung

segmentation.

3) Performance evaluation of the proposed models is

conducted for the patch-based method for retina blood vessel

segmentation tasks and the end-to-end image-based approach

for skin lesion and lung segmentation tasks.

4) Comparison against recently proposed state-of-the-art

methods shows superior performance against equivalent

models with the same number of network parameters.

The paper is organized as follows: Section II discusses related

work. The architectures of the proposed RU-Net and R2U-Net

models are presented in Section III. Section IV explains the

datasets, experiments, and results. Conclusions and future

directions are discussed in Section V.

II. RELATED WORK

Semantic segmentation is an active research area where

DCNNs are used to classify each pixel in the image

individually, which is fueled by different challenging datasets

in the fields of computer vision and medical imaging [23, 24,

25]. Before the deep learning revolution, the traditional

machine learning approach mostly relied on hand-engineered

features that were used for classifying pixels independently. In

the last few years, many models have been proposed that

show that deeper networks perform better for recognition and

segmentation tasks [5]. However, training very deep models is

difficult due to the vanishing gradient problem, which is

mitigated by using modern activation functions such as

Rectified Linear Units (ReLU) or Exponential Linear Units

(ELU) [5, 6]. Another solution to this problem was proposed by

He et al.: a deep residual model that overcomes the problem

by utilizing an identity mapping to facilitate the training process

[26].
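The identity mapping mentioned above can be illustrated with a minimal sketch. The function names (`relu`, `residual_unit`, `toy_layer`) and the toy transformation are assumptions made for illustration and are not taken from [26]; applying the activation after the addition is likewise one common arrangement, assumed here.

```python
def relu(values):
    """Rectified Linear Unit applied element-wise to a list of floats."""
    return [max(0.0, v) for v in values]


def residual_unit(x, transform):
    """Return relu(x + F(x)), where `transform` plays the role of F.

    The `x + ...` term is the identity (shortcut) mapping: even if F
    contributes nothing, the input still passes through the addition unchanged.
    """
    fx = transform(x)
    if len(fx) != len(x):
        raise ValueError("F(x) must have the same length as x")
    return relu([a + b for a, b in zip(x, fx)])


def toy_layer(values):
    """Hypothetical stand-in for a learned layer: scale every feature by 0.5."""
    return [0.5 * v for v in values]


features = [1.0, -2.0, 4.0]
output = residual_unit(features, toy_layer)
```

Because the input is added back to the transformed features, the gradient has a direct path through the addition, which is the property that eases the training of very deep models.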

In addition, CNN-based segmentation methods built on

FCN provide superior performance for natural image

segmentation [2]. One of the image patch-based architectures is

called Random architecture, which is very computationally

intensive and contains around 134.5M network parameters.

The main drawback of this approach is that a large number of

pixels overlap and the same convolutions are performed many

times. The performance of FCN has been improved with recurrent

neural networks (RNN), which are fine-tuned on very large

datasets [27]. Semantic image segmentation with DeepLab is

one of the state-of-the-art methods [28]. SegNet

consists of two parts: an encoding network, which is a

13-layer VGG16 network [5], and a corresponding decoding

network that uses pixel-wise classification layers. The main

contribution of SegNet is the way in which the decoder

up-samples its lower resolution input feature maps [10]. Later, an

improved version of SegNet, called Bayesian SegNet,

was proposed in 2015 [29]. Most of these architectures are

explored using computer vision applications. However, there

are some deep learning models that have been proposed

specifically for medical image segmentation, as they

consider data insufficiency and class imbalance problems.

One of the very first and most popular approaches for

semantic medical image segmentation is called “U-Net” [12].

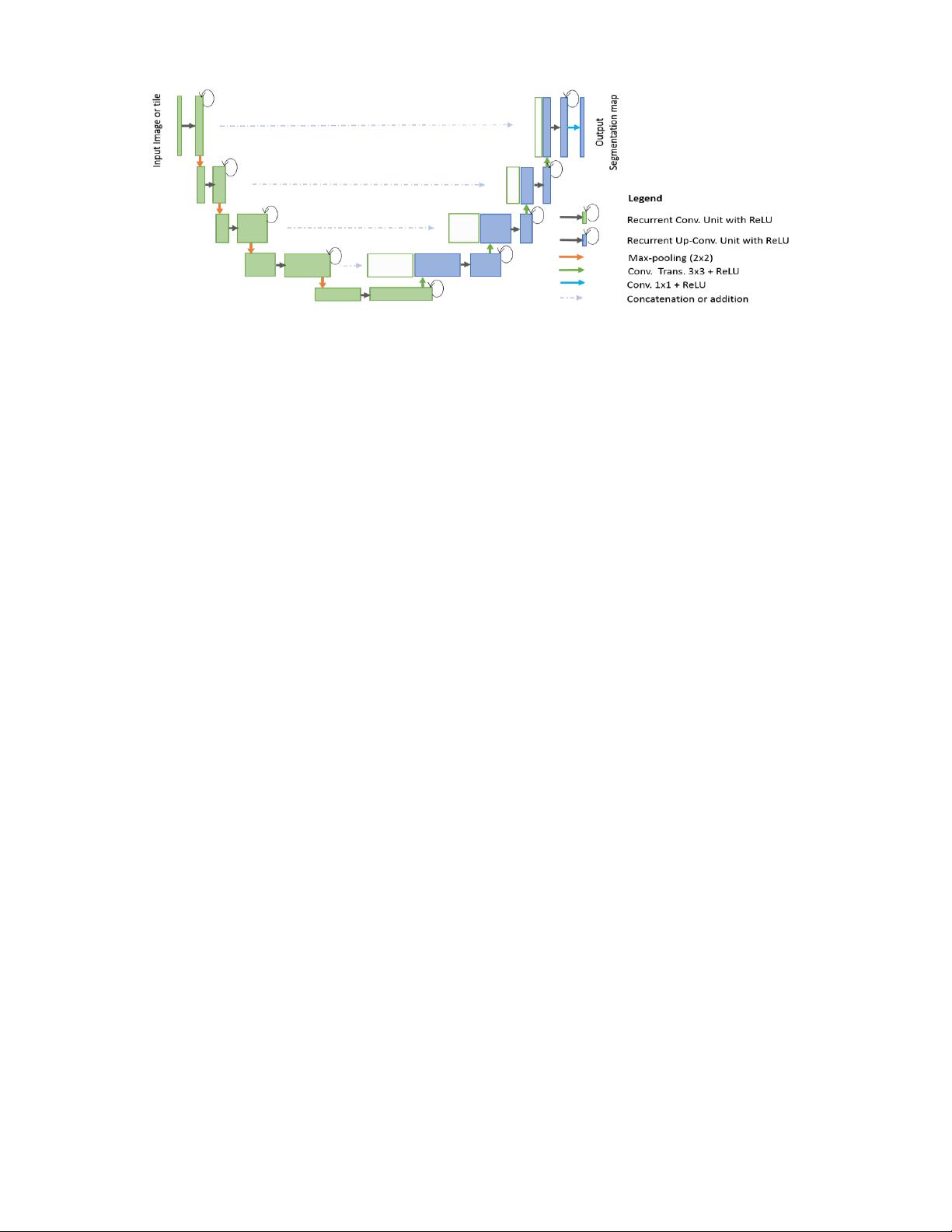

A diagram of the basic U-Net model is shown in Fig. 2.

According to the structure, the network consists of two main

parts: the convolutional encoding and decoding units. The basic

convolution operations are performed followed by ReLU

activation in both parts of the network. For down-sampling in

the encoding unit, 2×2 max-pooling operations are performed.

In the decoding phase, the convolution transpose (representing

up-convolution, or de-convolution) operations are performed to

up-sample the feature maps. The very first version of U-Net

cropped and copied feature maps from the encoding unit to

the decoding unit. The U-Net model provides several

advantages for segmentation tasks. First, this model allows for

the use of global location and context at the same time. Second,

it works with very few training samples and provides better

performance for segmentation tasks [12]. Third, an end-to-end

pipeline processes the entire image in the forward pass and

directly produces segmentation maps. This ensures that U-Net

preserves the full context of the input images, which is a major

advantage when compared to patch-based segmentation

approaches [12, 14].
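Two of the operations described above, 2×2 max-pooling in the encoding unit and the crop-and-copy of encoder feature maps to the decoding unit, can be sketched in plain Python. This is a minimal illustration on a single-channel map stored as a list of rows; the function names (`max_pool_2x2`, `center_crop`) and the sample values are assumptions for illustration, not the implementation used in [12].

```python
def max_pool_2x2(feature_map):
    """Down-sample a 2-D map (list of rows) with non-overlapping 2x2 max-pooling."""
    rows, cols = len(feature_map), len(feature_map[0])
    if rows % 2 or cols % 2:
        raise ValueError("height and width must be even")
    return [
        [
            max(
                feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1],
            )
            for c in range(0, cols, 2)
        ]
        for r in range(0, rows, 2)
    ]


def center_crop(feature_map, out_rows, out_cols):
    """Crop the central out_rows x out_cols region of an encoder feature map."""
    rows, cols = len(feature_map), len(feature_map[0])
    if out_rows > rows or out_cols > cols:
        raise ValueError("crop size must not exceed the map size")
    top = (rows - out_rows) // 2
    left = (cols - out_cols) // 2
    return [row[left:left + out_cols] for row in feature_map[top:top + out_rows]]


# Hypothetical 4x4 single-channel encoder feature map.
encoder_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 7, 2],
    [6, 2, 3, 3],
]
pooled = max_pool_2x2(encoder_map)     # halves each spatial dimension: 4x4 -> 2x2
skip = center_crop(encoder_map, 2, 2)  # central region copied to the decoder
```

In a full network the cropped map would be concatenated channel-wise with the up-sampled decoder features; that step is omitted here to keep the sketch short.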