Lei et al. EURASIP Journal on Image and Video Processing

(2018) 2018:122


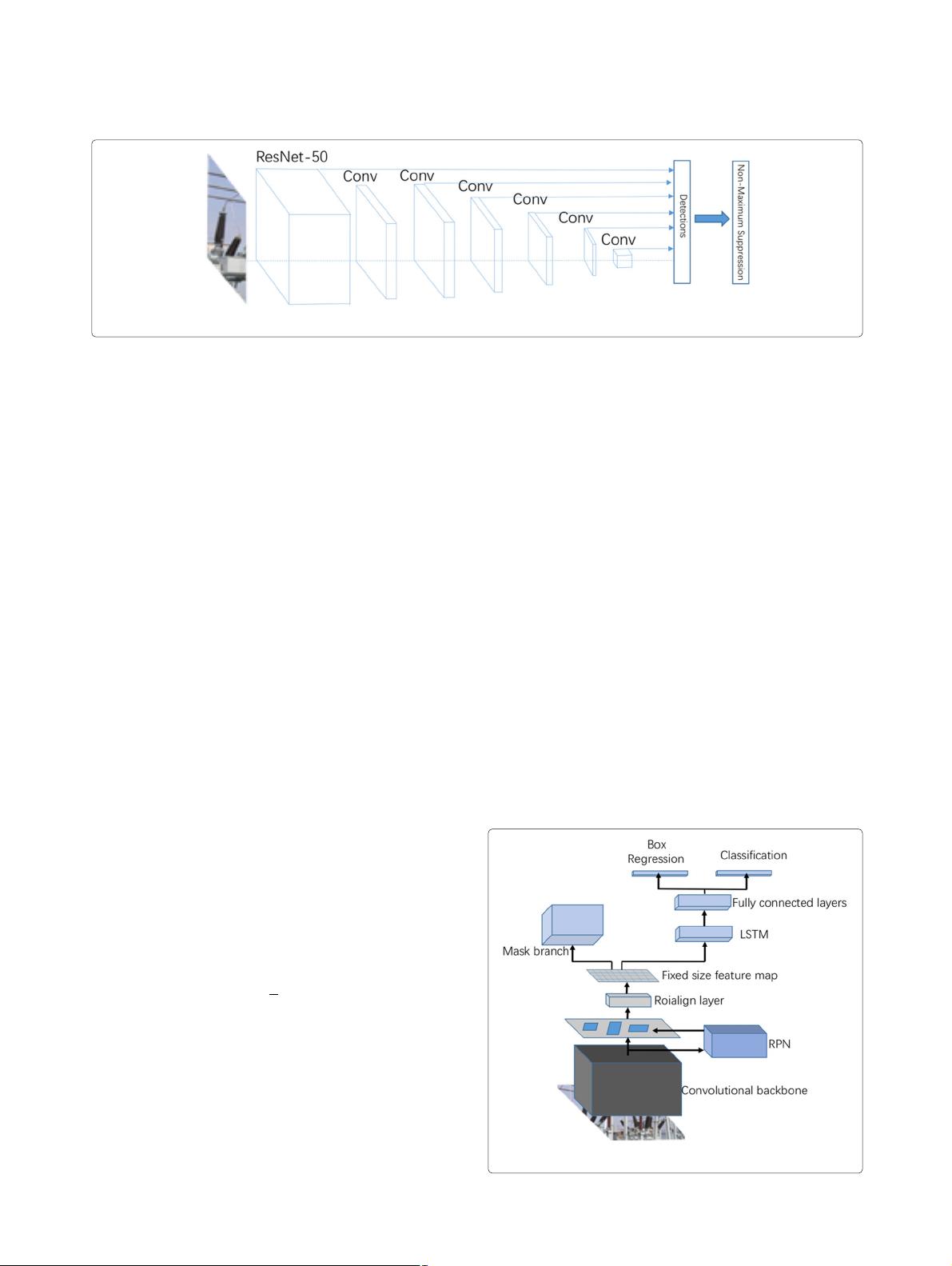

Fig. 2 The structure of R-FCN

lower efficiency. This process significantly improves the detection speed of the entire model. However, it can only determine the target’s general location instead of the specific power component’s position. Overall, this model has a low recognition rate when the power components are occluded. Thus, it cannot meet the on-site requirements for power component identification.

2.2 Power station identification based on R-FCN method

The target detection of the region-based fully convolutional network (R-FCN) [22] is divided into two steps: localizing a target and then classifying it into a specific category. First, the R-FCN model uses a basic convolutional network to generate a feature map. Then, the regional feature map is used to generate the feature maps before and after the full map is constructed. The model determines the target’s outline by searching and filtering [23] scene images through these feature maps. Finally, the classification framework recognizes the target.

Figure 2 shows the structure of the R-FCN model. The target image is passed through a basic convolutional network to generate feature maps, and these feature maps are fed into a fully convolutional network to generate a bank of position-sensitive score maps. The output of the basic convolutional network also goes through the region proposal network (RPN) to generate regions of interest (RoIs). For an RoI of size $w \times h$ (obtained by the RPN), the target frame is divided into $k \times k$ subareas, each of size $w \times h / k^2$. For any subarea $\mathrm{bin}(i, j)$, $0 \le i, j \le k - 1$, define a location-sensitive pooling operation:

$$r_c(i, j \mid \nabla) = \sum_{(x, y) \in \mathrm{bin}(i, j)} \frac{1}{n}\, z_{i,j,c}(x + x_0,\; y + y_0 \mid \nabla) \tag{1}$$

where $r_c(i, j \mid \nabla)$ is the pooled response of subarea $\mathrm{bin}(i, j)$ for the $c$-th category, and $z_{i,j,c}$ stands for the location-sensitive score map corresponding to subarea $\mathrm{bin}(i, j)$. $(x_0, y_0)$ represents the coordinates of the upper left corner of the target candidate box, $n$ is the number of pixels in subarea $\mathrm{bin}(i, j)$, and $\nabla$ represents all the learned parameters of the network. The model calculates the average of the pooled responses $r_c(i, j \mid \nabla)$ over the $k \times k$ subregions and uses softmax regression to obtain the probability that the RoI belongs to each category.
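The pooling, averaging, and softmax steps described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `ps_roi_pool` and `classify_roi`, the nested-list layout `score_maps[i][j][c][y][x]`, the `(x0, y0, w, h)` RoI tuple, the floor/ceil bin edges, and the choice of `i` as the horizontal and `j` as the vertical bin index are all hypothetical choices made for the example. The RoI is assumed to lie fully inside the score maps with `w >= 1` and `h >= 1`.

```python
import math


def ps_roi_pool(score_maps, roi, k):
    """Location-sensitive pooling in the spirit of Eq. (1).

    score_maps[i][j][c] is a 2-D list (rows of pixels) standing in for z_{i,j,c}.
    roi = (x0, y0, w, h): upper left corner and size of the candidate box.
    Returns pooled[i][j][c], the mean score of class c inside bin(i, j).
    """
    x0, y0, w, h = roi
    num_classes = len(score_maps[0][0])
    pooled = [[[0.0] * num_classes for _ in range(k)] for _ in range(k)]
    for i in range(k):  # assumed: i indexes bins along the x axis
        x_lo, x_hi = math.floor(i * w / k), math.ceil((i + 1) * w / k)
        for j in range(k):  # assumed: j indexes bins along the y axis
            y_lo, y_hi = math.floor(j * h / k), math.ceil((j + 1) * h / k)
            n = (x_hi - x_lo) * (y_hi - y_lo)  # number of pixels in bin(i, j)
            for c in range(num_classes):
                z = score_maps[i][j][c]
                total = sum(z[y + y0][x + x0]
                            for y in range(y_lo, y_hi)
                            for x in range(x_lo, x_hi))
                pooled[i][j][c] = total / n
    return pooled


def classify_roi(score_maps, roi, k):
    """Average the k x k pooled responses per class, then apply softmax."""
    pooled = ps_roi_pool(score_maps, roi, k)
    num_classes = len(pooled[0][0])
    votes = [sum(pooled[i][j][c] for i in range(k) for j in range(k)) / (k * k)
             for c in range(num_classes)]
    peak = max(votes)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in votes]
    total = sum(exps)
    return [e / total for e in exps]


# Toy input: every score map of class c is filled with the constant 2.0 * c.
k, num_classes, height, width = 3, 2, 12, 12
score_maps = [[[[[2.0 * c] * width for _ in range(height)]
                for c in range(num_classes)]
               for _ in range(k)]
              for _ in range(k)]
probs = classify_roi(score_maps, roi=(3, 3, 6, 6), k=k)
print(probs)
```

With these constant toy maps, every bin of class 1 pools to 2.0 and every bin of class 0 pools to 0.0, so the softmax favors class 1. In a trained network the score maps would come from the fully convolutional layers rather than from constants.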

R-FCN integrates the target’s position information into RoI pooling through position-sensitive score maps, which solves the problem that the RoI pooling of the Faster-RCNN network has no translation invariance. Thus, this model improves the accuracy of target detection and classification, and its operating efficiency is significantly higher. However, the R-FCN model still cannot detect the specific location of the target and lacks robustness in scenes of power components with many interfering objects.

3 Recognition of power components based on Mask LSTM-CNN

Although the Faster-RCNN and R-FCN methods improve the processing speed and accuracy of part identification models, they cannot refine the specific contours of power components, so live working robots cannot accurately identify components’ orientations through such methods.

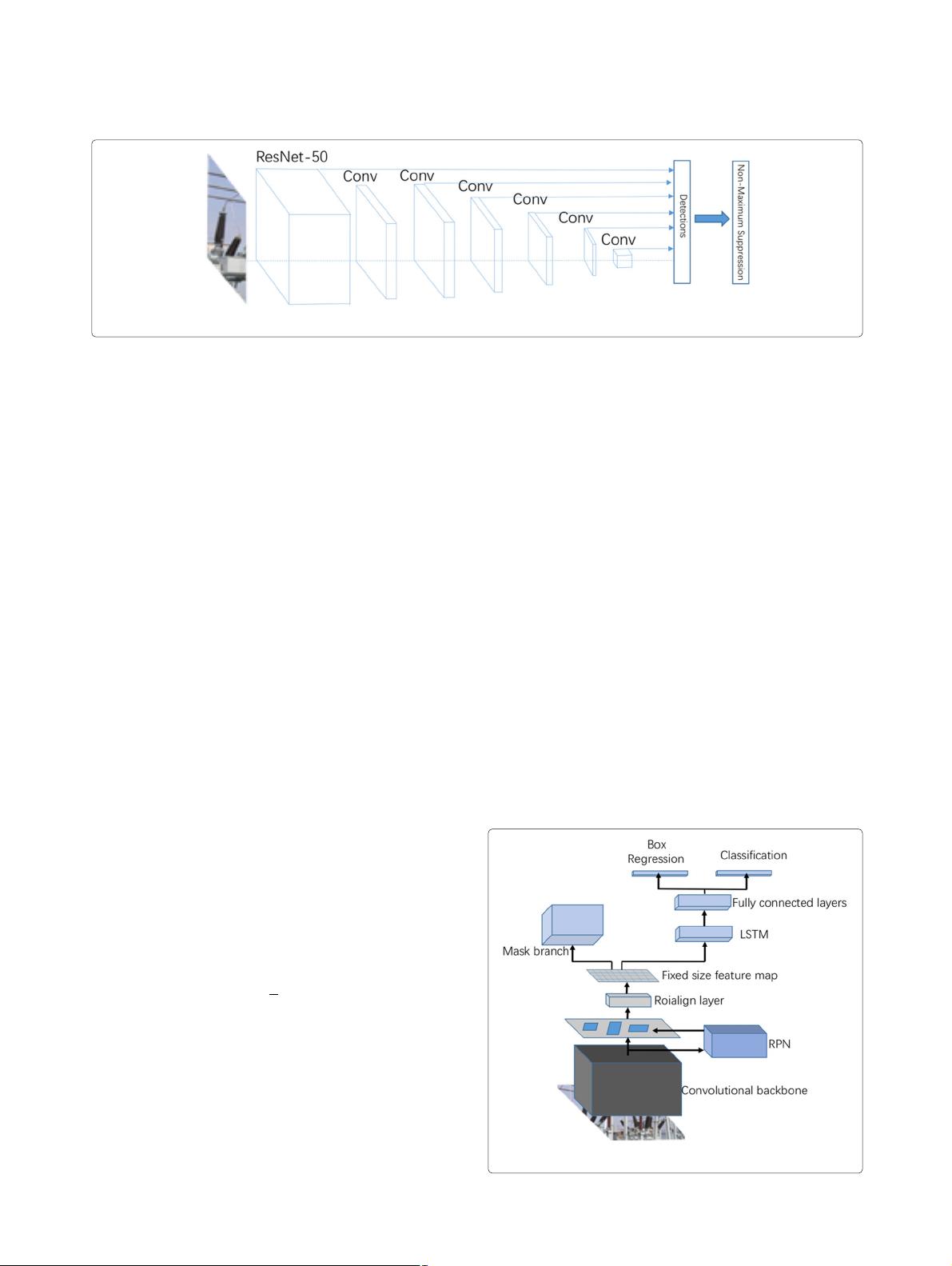

Fig. 3 The structure of Mask LSTM-CNN