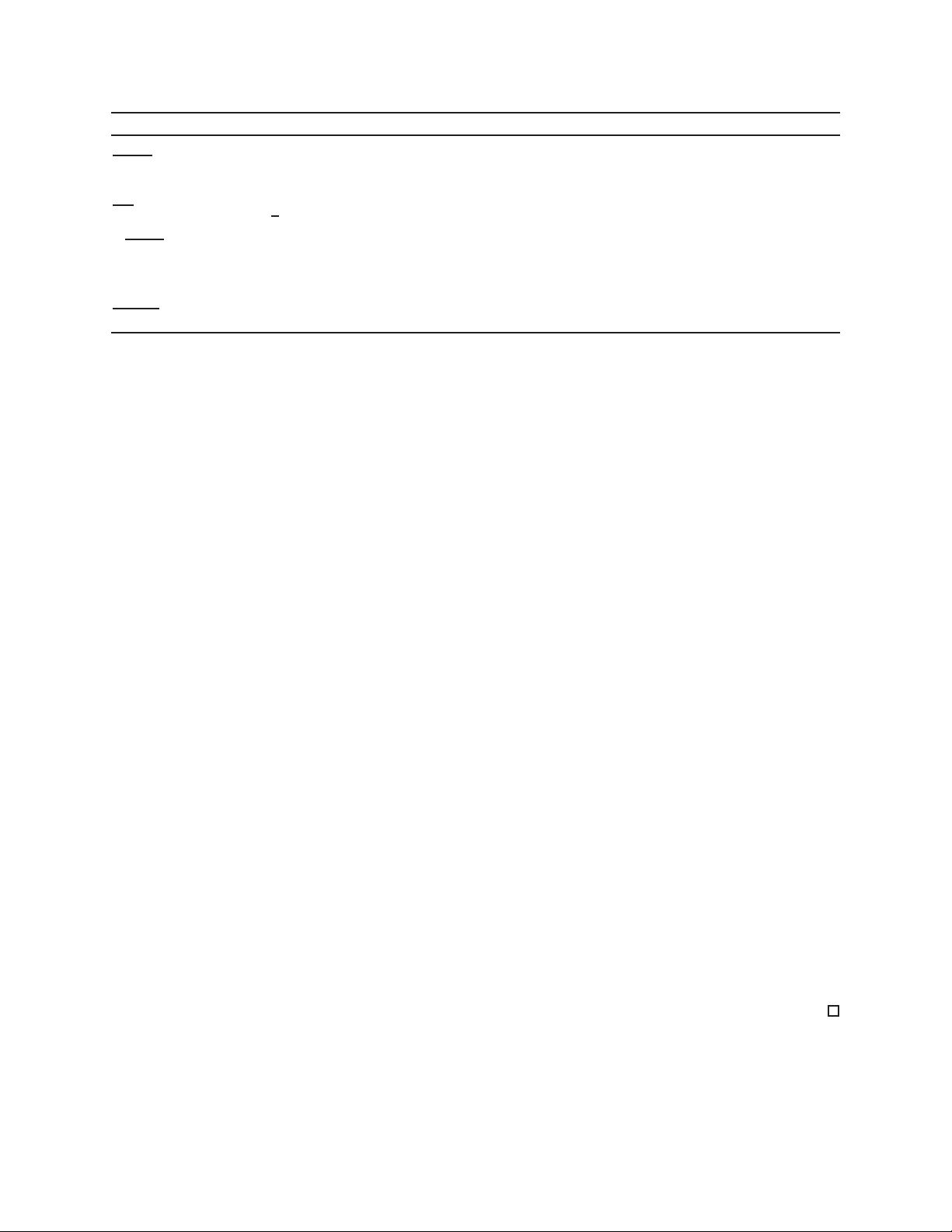

Algorithm 1 Pseudocode (quadratic-penalty version)

input training data, neural net architecture with weight s w

w ← arg min

w

L(w) reference net

θ

1

, θ

2

← arg min

θ

1

,θ

2

kw − ∆

1

(θ

1

) − ∆

2

(θ

2

)k

2

init

for

µ = µ

0

< µ

1

< · · · < ∞

w ← arg min

w

L(w) +

µ

2

kw − ∆

1

(θ

1

) − ∆

2

(θ

2

)k

2

L step

while

alternation does not converge

θ

1

← arg min

θ

1

k(w − ∆

2

(θ

2

)) − ∆

1

(θ

1

)k

2

C step

θ

2

← arg min

θ

2

k(w − ∆

1

(θ

1

)) − ∆

2

(θ

2

)k

2

if kw − ∆

1

(θ

1

) − ∆

2

(θ

2

)k is small enough then exit the loop

return

w, θ

1

, θ

2

problems involve discrete and continuo us variables and can be NP-hard (such a s quantization with an

adaptive codebook). Converge nc e can be established quite generally for convex functions [

4, 42]. For

nonconvex functions, convergence res ults are complex and mor e restrictive [38]. One simple case where

convergence occurs is if the objective in (3) (i.e., each ∆

i

) is continuously differentiable and it has a unique

minimizer over each θ

i

[

5, Proposition 2.7.1]. However, in certain cases the optimization ca n be solved

exactly without any alternation. We give a specific result next.

4.1 Exactly solvable C step

Solution of the C s tep (eq.

3) does not need to be an alternating optimization. Below we give an e xact

algorithm for the additive combination of fixed codebook quantization (e.g., {−1, +1}, {−1, 0, +1}, etc.)

and sparse corrections .

Theorem 4.1 (Exac tly solvable C step for combination of fixed codebook quantization + s parse correc tions).

Given a fixed codebook C consider compression of the weights w

i

with an additive combinations of quantized

values q

i

∈ C and sparse corrections s

i

:

min

q,s

X

i

(w

i

− (q

i

+ s

i

))

2

s.t. ksk

0

≤ κ, (5)

Then the following provides one optimal solution (q

∗

, s

∗

): first s et q

∗

i

= closes t(w

i

) in codebook for each i,

then solve for s: min

s

P

i

(w

i

− q

∗

i

− s

i

))

2

s.t. ksk

0

≤ κ.

Proof. Imag ine we know the optimal set of nonzeros o f the vector s, which we denote as N . Then, for the

elements not in N , the optimal s olution is s

∗

i

= 0 and q

∗

i

= closest (w

i

). For the elements in N , we can find

their optimal solution by solving independently for each i:

min

q

i

,s

i

(w

i

− (q

i

+ s

i

))

2

s.t. q

i

∈ C.

The solution is s

∗

i

= w

i

− q

i

for arbitrary chosen q

i

∈ C. Using this, we can rew rite the e q.

5 as

P

i/∈N

(w

i

− q

∗

i

)

2

.

This is minimized by taking as set N the κ largest in magnitude elements of w

i

− q

∗

i

(indexed over i).

Hence, the final solution is: 1) Set the elements of N to be the κ largest in magnitude e lements of w

i

− q

∗

i

(there may be multiple such sets, any one is valid). 2) For each i in N : set s

∗

i

= w

i

− q

∗

i

, and q

∗

i

= a ny

element in C. For each i not in N: set s

∗

i

= 0, q

∗

i

= closest(w

i

) (there may be 2 closest values, any one is

valid). This c ontains multiple solutions. One particular one is as given in the theorem statement, where we

set q

∗

i

= closest (w

i

) for every i, which is practically more desirable because it leads to a smaller ℓ

1

-norm of

s.

5 Experiments on CIFAR10

We evaluate the effectiveness of additively combining compressio ns on deep nets of different sizes on the

CIFAR10 (VGG16 a nd ResNets). We systematically study each combination of two o r three compressio ns

6