20 CHAPTER 2 • REGULAR EXPRESSIONS, TEXT NORMALIZATION, EDIT DISTANCE

This utterance has two kinds of disfluencies. The broken-off word main- is called a fragment. Words like uh and um are called fillers or filled pauses. Should we consider these to be words? Again, it depends on the application. If we are building a speech transcription system, we might want to eventually strip out the disfluencies.
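Stripping disfluencies could look roughly like the sketch below. It is a minimal sketch, not a production method, and it assumes two things not stated in the text: the hypothetical filler list `FILLERS` contains only uh and um, and fragments are marked in the transcript by a trailing hyphen, as in main-.

```python
# Hypothetical filler list; a real system would take it from its transcription guidelines.
FILLERS = {"uh", "um"}

def strip_disfluencies(tokens: list[str]) -> list[str]:
    """Drop filled pauses and fragments (assumed to end with a hyphen)."""
    kept = []
    for tok in tokens:
        if tok.lower() in FILLERS:
            continue  # filled pause such as "uh" or "um"
        if tok.endswith("-"):
            continue  # broken-off fragment such as "main-"
        kept.append(tok)
    return kept

utterance = "I do uh main- mainly business data processing".split()
print(strip_disfluencies(utterance))
# ['I', 'do', 'mainly', 'business', 'data', 'processing']
```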

But we also sometimes keep disfluencies around. Disfluencies like uh or um are actually helpful to speech recognition for predicting the upcoming word, because they may signal that the speaker is restarting the clause or idea, and so for speech recognition they are treated as regular words. Because people use different disfluencies, they can also be a cue to speaker identification. In fact, Clark and Fox Tree (2002) showed that uh and um have different meanings. What do you think they are?

Are capitalized tokens like They and uncapitalized tokens like they the same word? These are lumped together in some tasks (such as speech recognition), while for part-of-speech or named-entity tagging, capitalization is a useful feature and is retained.
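The effect of lumping case variants together can be seen by counting distinct forms with and without case folding. This is a small sketch; `count_types` is a hypothetical helper, and lowercasing is only the simplest form of case folding.

```python
def count_types(tokens: list[str], case_fold: bool) -> int:
    """Number of distinct forms, optionally merging case variants."""
    if case_fold:
        tokens = [t.lower() for t in tokens]  # They -> they
    return len(set(tokens))

tokens = "They said they would come".split()
print(count_types(tokens, case_fold=False))  # They and they kept apart: 5
print(count_types(tokens, case_fold=True))   # They and they merged: 4
```

Blind lowercasing also merges a proper noun with a common noun spelled the same way, which is one reason taggers keep capitalization as a feature.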

How about inflected forms like cats versus cat? These two words have the same lemma cat but are different wordforms. A lemma is a set of lexical forms having the same stem, the same major part-of-speech, and the same word sense. The wordform is the full inflected or derived form of the word. For morphologically complex languages like Arabic, we often need to deal with lemmatization. For many tasks in English, however, wordforms are sufficient.
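To see how lemmas and wordforms give different counts, the sketch below maps wordforms to lemmas with a tiny lookup table. The dictionary `LEMMAS` is hypothetical and hand-written for illustration; a real lemmatizer would use morphological analysis and would also need part-of-speech and word sense, which a plain lookup ignores.

```python
# Hypothetical toy lemma dictionary, for illustration only.
LEMMAS = {"cats": "cat", "cat": "cat", "sang": "sing", "sings": "sing", "sing": "sing"}

def lemmatize(tokens: list[str]) -> list[str]:
    # Fall back to the wordform itself when the dictionary has no entry.
    return [LEMMAS.get(t, t) for t in tokens]

tokens = "the cat sings and the cats sang".split()
print(len(set(tokens)), len(set(lemmatize(tokens))))  # 6 wordforms, 4 lemmas
```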

How many words are there in English? To answer this question we need to distinguish two ways of talking about words. Types are the number of distinct words in a corpus; if the set of words in the vocabulary is V, the number of types is the vocabulary size |V|. Tokens are the total number N of running words. If we ignore punctuation, the following Brown sentence has 16 tokens and 14 types:

They picnicked by the pool, then lay back on the grass and looked at the stars.

When we speak about the number of words in the language, we are generally referring to word types.
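The token and type counts for the Brown sentence can be checked with a few lines of code. This sketch assumes a simple tokenizer that keeps runs of letters and apostrophes and skips punctuation; the regular expression is an illustrative choice, not the tokenizer used to build any corpus.

```python
import re

def tokens_and_types(sentence: str) -> tuple[int, int]:
    """Return (N, |V|) for a sentence, ignoring punctuation."""
    # Runs of letters and apostrophes count as words; commas and periods are skipped.
    words = re.findall(r"[A-Za-z']+", sentence)
    return len(words), len(set(words))

brown = "They picnicked by the pool, then lay back on the grass and looked at the stars."
print(tokens_and_types(brown))  # (16, 14): "the" occurs three times
```

The count is case-sensitive, so They and they would be two types; this sentence contains no such pair.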

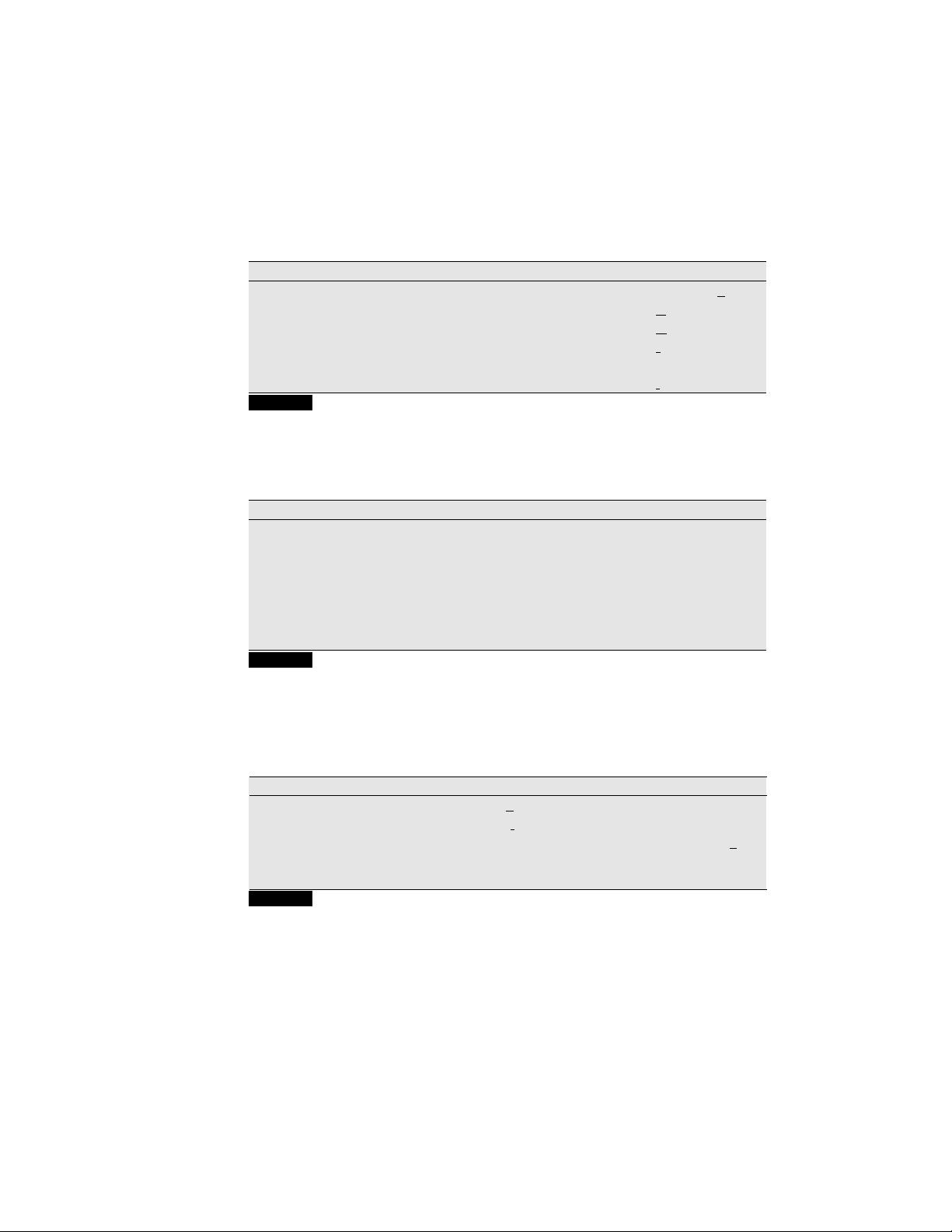

| Corpus | Tokens = N | Types = \|V\| |
|---|---|---|
| Shakespeare | 884 thousand | 31 thousand |
| Brown corpus | 1 million | 38 thousand |
| Switchboard telephone conversations | 2.4 million | 20 thousand |
| COCA | 440 million | 2 million |
| Google N-grams | 1 trillion | 13 million |

Figure 2.10 Rough numbers of types and tokens for some English language corpora. The largest, the Google N-grams corpus, contains 13 million types, but this count only includes types appearing 40 or more times, so the true number would be much larger.
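A frequency cutoff like the one in the caption always undercounts |V|, because every type below the cutoff is dropped. The sketch below counts only types that reach a minimum frequency; `types_above_threshold` is a hypothetical helper, and the toy data uses a small threshold instead of 40.

```python
from collections import Counter

def types_above_threshold(tokens: list[str], min_count: int) -> int:
    """Count types occurring at least min_count times."""
    counts = Counter(tokens)
    return sum(1 for c in counts.values() if c >= min_count)

tokens = "a a a b b c".split()
print(types_above_threshold(tokens, 1), types_above_threshold(tokens, 2))  # 3 2
```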

Fig. 2.10 shows the rough numbers of types and tokens computed from some popular English corpora. The larger the corpus we look at, the more word types we find, and in fact this relationship between the number of types |V| and number of tokens N is called Herdan’s Law (Herdan, 1960) or Heaps’ Law (Heaps, 1978) after its discoverers (in linguistics and information retrieval respectively). It is shown in Eq. 2.1, where k and β are positive constants, and 0 < β < 1.

$$|V| = kN^{\beta} \tag{2.1}$$
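Taking logarithms of Eq. 2.1 gives log|V| = log k + β log N, a straight line in log-log space, so k and β can be estimated from (N, |V|) pairs by ordinary least squares. The sketch below assumes that fitting approach, which is one common choice rather than a method the text prescribes. `growth_curve` and `fit_heaps` are hypothetical helpers, and k = 30, β = 0.7 in the demo are arbitrary illustrative values, not measurements from any corpus.

```python
import math

def growth_curve(tokens: list[str], step: int) -> list[tuple[float, float]]:
    """(N, |V|) recorded after every `step` tokens of a running text."""
    seen: set[str] = set()
    points = []
    for i, tok in enumerate(tokens, start=1):
        seen.add(tok)
        if i % step == 0:
            points.append((i, len(seen)))
    return points

def fit_heaps(points: list[tuple[float, float]]) -> tuple[float, float]:
    """Least-squares fit of log|V| = log k + beta * log N; returns (k, beta)."""
    xs = [math.log(n) for n, _ in points]
    ys = [math.log(v) for _, v in points]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    beta = sxy / sxx                          # slope in log-log space
    k = math.exp(mean_y - beta * mean_x)      # intercept, mapped back from log k
    return k, beta

# Synthetic (N, |V|) pairs generated exactly from Eq. 2.1 with k = 30, beta = 0.7.
synthetic = [(n, 30 * n ** 0.7) for n in (1e3, 1e4, 1e5, 1e6)]
k, beta = fit_heaps(synthetic)
print(round(k, 3), round(beta, 3))  # 30.0 0.7
```

On real text, one would pass the tokens of a large corpus to `growth_curve` and fit the resulting points; the synthetic data here only checks that the fit recovers known constants.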

The value of β depends on the corpus size and the genre, but at least for the large corpora in Fig. 2.10, β ranges from .67 to .75. Roughly then we can say that