
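Selected Papers from OSDI 2018

The OSDI 2018 proceedings, made available through the USENIX website, collect the papers presented at the 13th USENIX Symposium on Operating Systems Design and Implementation (OSDI '18). The symposium was held October 8-10, 2018, in Carlsbad, California, sponsored by USENIX in cooperation with ACM SIGOPS, with support from technology companies including Facebook, Google, and Microsoft.

The symposium aims to advance research and development in the design and implementation of operating systems. The proceedings contain work from leading researchers around the world, covering operating system design, system architecture, programming languages, system security, virtualization, and storage, among other topics. The papers present new theoretical results and also reflect industry efforts to improve system performance and efficiency and to address emerging challenges.

On copyright: authors or their employers retain the rights to their individual works, and reproduction of a complete work is permitted for noncommercial educational or research purposes. Individuals may print one copy for personal use, subject to the copyright terms and with respect for all trademarks. USENIX, as organizer, acknowledges its sponsors, such as Amazon, Bloomberg, and Oracle, as well as its Open Access Publishing Partner, PeerJ.

The proceedings document recent progress in operating systems research and serve as a reference for practitioners, researchers, and students who want to follow current trends. Reading these papers helps clarify both new approaches to operating system design and how those ideas can be applied in real systems.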

a component into a Panorama observer. One is to insert Panorama API hooks into the component’s source code. Another is to integrate with the component’s logs by continuously parsing and monitoring log entries related to other components. The latter approach is transparent to components but captures less accurate information. We initially adopted the latter approach by adding plug-in support in Panorama to manage log-parsing scripts. But, as we applied Panorama to more systems, maintaining these scripts became painful because their logging practices differed significantly. Much information is also unavailable in logs [50]. Thus, even though we still support logging integration, we mainly use the instrumentation approach. To relieve developers of the burden of inserting Panorama hooks, Panorama provides an offline analysis tool that does the source-code instrumentation automatically. §5 describes this offline analysis.

3.5 Observation Exchange

Observations submitted to the LOS by a local observer only reflect a partial view of the subject. To reduce bias in observations, Panorama runs a dissemination protocol to propagate observations to, and learn observations from, other LOSes. Consequently, for each monitored subject, the LOS stores observations from multiple observers. The observation exchange in Panorama is only among cliques of LOSes that share a subject. To achieve selective exchange, each LOS keeps a watch list, which initially contains only the local observer. When a local observer reports an observation to the LOS, the LOS will add the observation’s subject to the watch list to indicate that it is now interested in others’ observations about this subject. Each LOS also keeps an ignore list for each subject, which lists LOSes to which it should not propagate new observations about that subject. When a local observation for a new subject appears for the first time, the LOS does a one-time broadcast. LOSes that are not interested in the observation (based on their own watch lists) will instruct the broadcasting LOS to include them in its ignore list. If an LOS later becomes interested in this subject, the protocol ensures that the clique members remove this LOS from their ignore lists.
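The selective exchange described in this section can be sketched in a few lines of Java. This is a minimal in-memory model under stated assumptions, not Panorama's implementation: the class `Los` and its methods `report`, `receive`, and `watch` are hypothetical names, observations are plain strings, and peers are invoked directly where a real deployment would exchange messages over the network.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical in-memory model of one LOS; peers are called directly instead of over RPC. */
class Los {
    final String name;
    final List<Los> peers = new ArrayList<>();
    final Set<String> watchList = new HashSet<>();             // subjects this LOS is interested in
    final Map<String, Set<Los>> ignoreLists = new HashMap<>(); // subject -> peers to skip
    final Map<String, List<String>> store = new HashMap<>();   // subject -> observations held

    Los(String name) {
        this.name = name;
        watchList.add(name); // initially only the local observer
    }

    /** A local observer submits an observation about a subject. */
    void report(String subject, String observation) {
        watch(subject);
        store.computeIfAbsent(subject, s -> new ArrayList<>()).add(observation);
        Set<Los> ignored = ignoreLists.computeIfAbsent(subject, s -> new HashSet<>());
        for (Los peer : peers) {
            if (ignored.contains(peer)) {
                continue; // this peer asked not to hear about the subject
            }
            if (!peer.receive(subject, observation)) {
                ignored.add(peer); // peer is not interested: stop propagating to it
            }
        }
    }

    /** Handles a propagated observation; returns false if this LOS does not watch the subject. */
    boolean receive(String subject, String observation) {
        if (!watchList.contains(subject)) {
            return false;
        }
        store.computeIfAbsent(subject, s -> new ArrayList<>()).add(observation);
        return true;
    }

    /** Marks a subject as interesting and asks peers to drop this LOS from their ignore lists. */
    void watch(String subject) {
        if (watchList.add(subject)) {
            for (Los peer : peers) {
                Set<Los> ignored = peer.ignoreLists.get(subject);
                if (ignored != null) {
                    ignored.remove(this);
                }
            }
        }
    }
}
```

In this sketch the first `report` for a subject reaches every peer because the ignore list starts empty, which plays the role of the one-time broadcast; later reports skip the peers that declined.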

3.6 Judging Failure from Observations

With numerous observations collected about a subject, Panorama uses a decision engine to reach a verdict and stores the result in the LOS’s verdict table. A simple decision policy is to use the latest observation as the verdict. But, this can be problematic since a subject experiencing intermittent errors may be treated as healthy. An alternative is to reach an unhealthy verdict if there is any recent negative observation. This could cause one biased observer, whose negative observation is due to its own issue, to mislead others.

We use a bounded-look-back majority algorithm, as follows. For a set of observations about a subject, we first group the observations by the unique observer, and analyze each group separately. The observations in a group are inspected from latest to earliest and aggregated based on their associated contexts. For an observation being inspected, if its status is different from the previously recorded status for that context, the look-back of observations for that context stops after a few steps to favor newer statuses. Afterwards, for each recorded context, if either the latest status is unhealthy or the healthy status does not have the strict majority, the verdict for that context is unhealthy with an aggregated severity level.

In this way, we obtain an analysis summary for each context in each group. To reach a final verdict for each context across all groups, the summaries from different observers are aggregated and decided based on a simple majority. Using group-based summaries allows incremental update of the verdict and avoids being biased by one observer or context in the aggregation. The decision engine could use more complex algorithms, but we find that our simple algorithm works well in practice. This is because most observations collected by Panorama constitute strong evidence rather than superficial signals.

The PENDING status (Section 5.4) needs additional handling: during the look-back for a context, if the current status is HEALTHY and the older status is PENDING, that older PENDING status will be skipped because it was only temporary. In other words, that partial observation is now complete. Afterwards, a PENDING status with occurrences exceeding a threshold is downgraded to UNHEALTHY.
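One way to read the bounded-look-back majority procedure is sketched below for a single subject and a single context. Several details are assumptions, since the text leaves them open: the constants `EXTRA_STEPS` and `PENDING_THRESHOLD`, the choice to leave unresolved PENDING observations out of the tally, the handling of ties across observers, and the omission of severity levels. The names `Obs`, `Status`, and `DecisionEngine` are hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

enum Status { HEALTHY, UNHEALTHY, PENDING }

/** One observation about a fixed subject and context; field names are illustrative. */
class Obs {
    final String observer;
    final long time;
    final Status status;

    Obs(String observer, long time, Status status) {
        this.observer = observer;
        this.time = time;
        this.status = status;
    }
}

class DecisionEngine {
    static final int EXTRA_STEPS = 2;       // assumed look-back budget after a status change
    static final int PENDING_THRESHOLD = 3; // assumed limit on unresolved PENDING observations

    /** Summarizes one observer's observations, which must be ordered newest first. */
    static Status summarize(List<Obs> newestFirst) {
        int healthy = 0, unhealthy = 0, pending = 0;
        int remaining = Integer.MAX_VALUE;
        Status latest = null, previous = null;
        for (Obs o : newestFirst) {
            if (remaining == 0) {
                break;
            }
            remaining--;
            if (o.status == Status.PENDING && previous == Status.HEALTHY) {
                continue; // the partial observation was completed later, so skip it
            }
            if (previous != null && o.status != previous && remaining > EXTRA_STEPS) {
                remaining = EXTRA_STEPS; // status changed: look back only a few more steps
            }
            if (o.status == Status.HEALTHY) healthy++;
            else if (o.status == Status.UNHEALTHY) unhealthy++;
            else pending++;
            if (latest == null) latest = o.status;
            previous = o.status;
        }
        if (pending > PENDING_THRESHOLD) { // too many unresolved PENDINGs count as UNHEALTHY
            unhealthy += pending;
            if (latest == Status.PENDING) latest = Status.UNHEALTHY;
        }
        int total = healthy + unhealthy;
        if (total == 0) {
            return Status.PENDING; // assumed: nothing conclusive yet
        }
        boolean healthyMajority = 2 * healthy > total;
        return (latest == Status.UNHEALTHY || !healthyMajority) ? Status.UNHEALTHY : Status.HEALTHY;
    }

    /** Groups by observer, summarizes each group, then takes a majority over the summaries. */
    static Status verdict(List<Obs> observations) {
        Map<String, List<Obs>> groups = new HashMap<>();
        for (Obs o : observations) {
            groups.computeIfAbsent(o.observer, k -> new ArrayList<>()).add(o);
        }
        int healthy = 0, unhealthy = 0;
        for (List<Obs> group : groups.values()) {
            group.sort(Comparator.comparingLong((Obs o) -> o.time).reversed());
            Status s = summarize(group);
            if (s == Status.HEALTHY) healthy++;
            else if (s == Status.UNHEALTHY) unhealthy++;
        }
        return unhealthy > healthy ? Status.UNHEALTHY : Status.HEALTHY; // assumed tie handling
    }
}
```

With these assumptions, an observer that alternates between HEALTHY and UNHEALTHY is summarized as UNHEALTHY even when its latest observation is HEALTHY, while a single such observer is outvoted by two observers that report HEALTHY.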

4 Design Pattern and Observability

The effectiveness of Panorama depends on the hooks in observers. We initially designed a straightforward method to insert these hooks. In testing it on real-world distributed systems, however, we found that component interactions in practice can be complex. Certain interactions, if not treated appropriately, will cause the extracted observations to be misleading. In this section, we first show a gray failure that our original method failed to detect, and then investigate the reason behind the challenge.

4.1 A Failed Case

In one incident of a production ZooKeeper service, applications were experiencing many lock timeouts [23]. An engineer investigated the issue by checking metrics in the monitoring system and found that the number of connections per client had significantly increased. It initially looked like a resource leak in the client library, but the root cause turned out to be complicated.

USENIX Association 13th USENIX Symposium on Operating Systems Design and Implementation 5

The production environment used IPSec to secure inter-host traffic, and a Linux kernel module used Intel AES instructions to provide AES encryption for IPSec. But this kernel module could occasionally introduce data corruption with Xen paravirtualization, for reasons still not known today. Typically the kernel validated packet checksums and dropped corrupt packets. But, in IPSec, two checksums exist: one for the IP payload, the other for the encrypted TCP payload. For IPSec NAT-T mode, the Linux kernel did not validate the TCP payload checksum, thereby permitting corrupt packets. These were delivered to the ZooKeeper leader, including a corrupted length field for a string. When ZooKeeper used the length to allocate memory to deserialize the string, it raised an out-of-memory (OOM) exception.

Surprisingly, when this OOM exception happened, ZooKeeper continued to run. Heartbeats were normal and no leader re-election was triggered. When evaluating this incident in Panorama, no failure was reported either. We studied the ZooKeeper source code to understand why this happened. In ZooKeeper, a request is first picked up by the listener thread, which then calls the ZooKeeperServer thread that further invokes a chain of XXXRequestProcessor threads to process the request. The OOM exception happens in the PrepRequestProcessor thread, the first request processor. The ZooKeeperServer thread invokes the interface of the PrepRequestProcessor as follows:

1 try {
2     firstProcessor.processRequest(si);
3 } catch (RequestProcessorException e) {
4     LOG.error("Unable to process request: " + e);
5 }

If the execution passes line 2, it provides positive evidence that the PrepRequestProcessor thread is healthy. If, instead, the execution reaches line 4, it represents negative evidence about PrepRequestProcessor. But with the Panorama hooks inserted at both places, no negative observations are reported. This is because the implementation of the processRequest API involves an indirection: it simply puts a request in a queue and immediately returns. Asynchronously, the thread polls and processes the queue. Because of this design, even though the OOM exception causes the PrepRequestProcessor thread to exit its main loop, the ZooKeeperServer thread is still able to call processRequest and is unable to tell that PrepRequestProcessor has an issue. The hooks are only observing the status of the indirection layer, i.e., the queue, rather than the PrepRequestProcessor thread. Thus, negative observations only appear when the request queue cannot insert new items; but, by default, its capacity is Integer.MAX_VALUE!
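The effect can be reproduced with a small stand-alone sketch. It is not ZooKeeper code: `QueuedProcessor` is a hypothetical class, the failure is simulated with an `IllegalStateException` instead of an out-of-memory error, and the helper `acceptsRequestsAfterWorkerExit` exists only to make the behavior easy to check.

```java
import java.util.concurrent.LinkedBlockingQueue;

/** Hypothetical stand-in for a queue-backed request processor thread. */
class QueuedProcessor extends Thread {
    // Unbounded by default: the capacity is Integer.MAX_VALUE.
    private final LinkedBlockingQueue<String> queue = new LinkedBlockingQueue<>();

    /** Called from the requester's thread: enqueues and returns immediately. */
    void processRequest(String request) {
        queue.add(request);
    }

    int backlog() {
        return queue.size();
    }

    @Override
    public void run() {
        try {
            while (true) {
                String request = queue.take();
                if (request.equals("corrupt")) {
                    throw new IllegalStateException("simulated failure while handling request");
                }
            }
        } catch (IllegalStateException e) {
            // The worker leaves its main loop; nothing notifies the requester.
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    /** Returns true if a request is still accepted after the worker thread has exited. */
    static boolean acceptsRequestsAfterWorkerExit() {
        QueuedProcessor p = new QueuedProcessor();
        p.start();
        p.processRequest("corrupt");
        try {
            p.join(5000);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
        if (p.isAlive()) {
            return false;
        }
        p.processRequest("ok"); // succeeds although nobody will ever process it
        return p.backlog() == 1;
    }
}
```

A hook placed after `processRequest` in the caller would keep reporting success here, because the call only exercises the queue.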

reply

request

C1

C2

request

reply

C1

C2

reply

request

C1

C2

reply

request

C1

C2

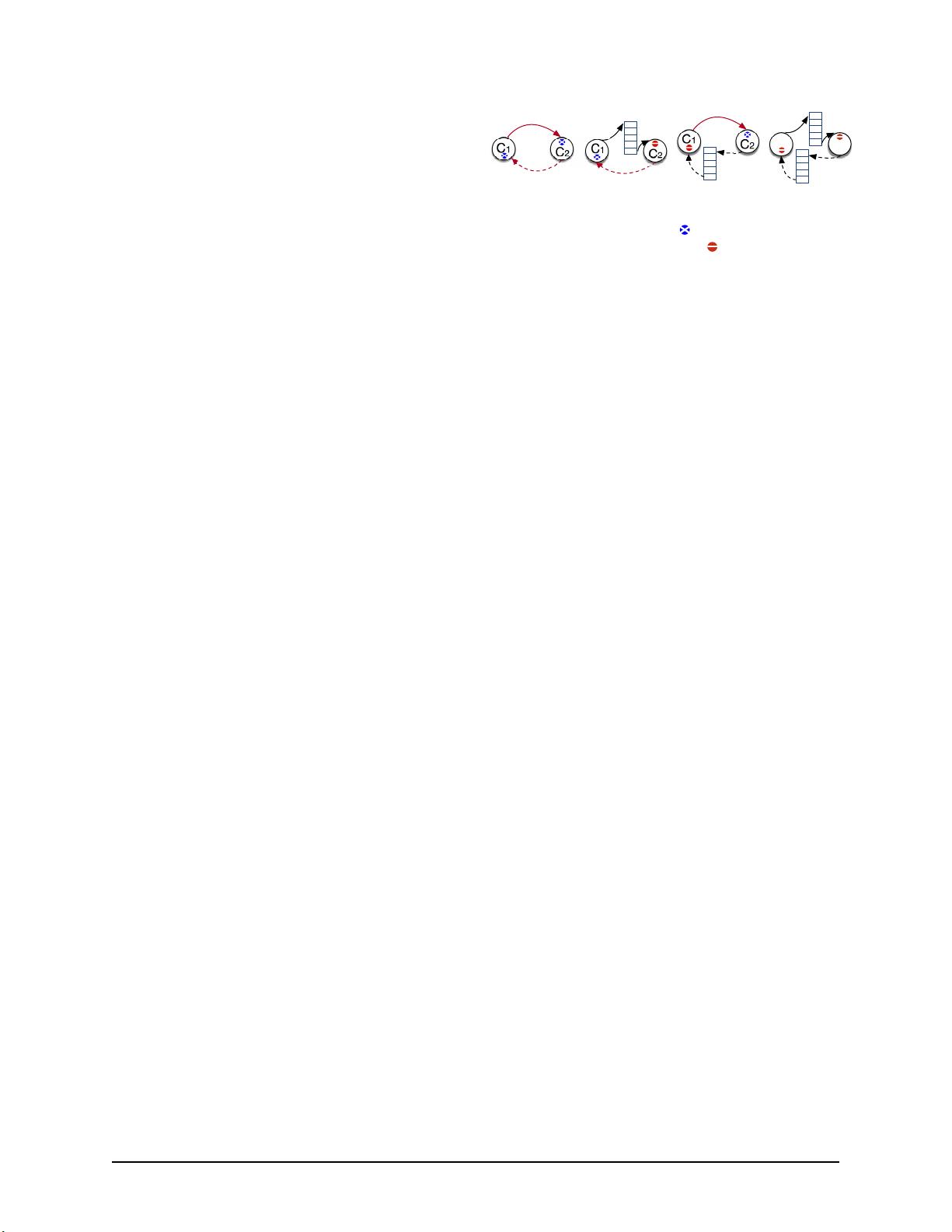

(a) (b) (c) (d)

Figure 2: Design patterns of component interactions and their

impact on failure observability. means that failure is ob-

servable to the other component, and means that failure is

unobservable to it.

4.2 Observability Patterns

Although the above case is a unique incident, we extrapolate a deeper implication for failure detection: certain design patterns can undermine failure observability in a system and thereby pose challenges for failure detection. To reveal this connection, consider two components C1 and C2 where C1 makes requests of C2. We expect that, through this interaction, C1 and C2 should be able to make observations about each other’s status. However, their style of interaction can have a significant effect on this observability.

We have identified the following four basic patterns of interaction (Figure 2), each having a different effect on this observability. Interestingly, we find examples of all four patterns in real-world system software.

(a) No indirection. Pattern (a) is the most straightforward. C1 makes a request to C2, then C2 optionally replies to C1. This pattern has the best degree of observability: C1 can observe C2 from errors in its request path; C2 can also observe C1 to some extent in its reply path. Listing 1 shows an example of this pattern. In this case, C1 is the follower and C2 is the leader. C1 first contacts C2, then C2 sends C1 a snapshot or other information through an input stream. Failures are observed via errors or timeouts in the connection, I/O through the input stream, and/or reply contents.

(b) Request indirection. A level of indirection exists in the request path: when C1 makes a request to C2, an intermediate layer (e.g., a proxy or a queue) takes the request and replies to C1. C2 will later take the request from the intermediate layer, process it, and optionally reply to C1 directly. This design pattern has a performance benefit for both C1 and C2. It also provides decoupling between their two threads. But, because of the indirection, C1 no longer directly interacts with C2 so C2’s observability is reduced. The immediate observation C1 makes when requesting from C2 does not reveal whether C2 is having problems, since usually the request path succeeds as in the case in §4.1.

(c) Reply indirection. Pattern (c) is not intuitive. C1 makes a request, which is directly handled by C2, but the reply goes through a layer of indirection (e.g., a queue or a proxy). Thus, C1 can observe issues in C2 but C1’s observability to C2 is reduced. One scenario leading to this pattern is when a component makes requests to multiple components and needs to collect more than one of their replies to proceed. In this case, replies are queued so that they can be processed en masse when a sufficient number are available. For example, in Cassandra, when a process sends digest requests to multiple replicas, it must wait for responses from R replicas. So, whenever it gets a reply from a replica, it queues the reply for later processing.

(d) Full indirection. In pattern (d), neither component directly interacts with the other so they get the least observability. This pattern has a performance benefit since all operations are asynchronous. But, the code logic can be complex. ZooKeeper contains an example: When a follower forwards a request to a leader, the request is processed asynchronously, and when the leader later notifies the follower to commit the request, that notification gets queued.

4.3 Implications

Pattern (a) has the best failure observability and is easiest for Panorama to leverage. The other three patterns are more challenging; placing observation hooks without considering the effects of indirection can cause incompleteness (though not inaccuracy) in failure detection (§2). That is, a positive observation will not necessarily mean the monitored component is healthy but a negative observation means the component is unhealthy. Pragmatically, this would be an acceptable limitation if the three indirection patterns were uncommon. However, we checked the cross-thread interaction code in several distributed systems and found, empirically, that patterns (a) and (b) are both pervasive. We also found that different software has different preferences, e.g., ZooKeeper uses pattern (a) frequently, but Cassandra uses pattern (b) more often.

This suggests Panorama should accommodate indirection in extracting observations. One solution is to instrument hooks in the indirection layer. But, we find that indirection layers in practice are implemented with various data structures and are often used for multiple purposes, making tracking difficult. We use a simple but robust solution and describe it in §5.4.

5 Observability Analysis

To systematically identify and extract useful observations from a component, Panorama provides an offline tool that statically analyzes a program’s source code, finds critical points, and injects hooks for reporting observations.

5.1 Locate Observation Boundary

Runtime errors are useful evidence of failure. Even if an error is tolerated by a requester, it may still indicate a critical issue in the provider. But, not all errors should be reported. Panorama only extracts errors generated when crossing component boundaries, because these constitute observations from the requester side. We call such domain-crossing function invocations observation boundaries.

The first step of observability analysis is to locate observation boundaries. There are two types of such boundaries: inter-process and inter-thread. An inter-process boundary typically manifests as a library API invocation, a socket I/O call, or a remote procedure call (RPC). Sometimes, it involves calling into custom code that encapsulates one of those three to provide a higher-level messaging service. In any case, with some domain knowledge about the communication mechanisms used, the analyzer can locate inter-process observation boundaries in source code. An inter-thread boundary is a call crossing two threads within a process. The analyzer identifies such boundaries by finding custom public methods in classes that extend the thread class.
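The inter-thread rule can be illustrated with runtime reflection. This swaps in a different technique from the one described, which works statically on source code; the sketch only shows which methods the rule would select. `BoundaryFinder` and `DemoProcessor` are hypothetical names.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class BoundaryFinder {
    /** Lists public methods that a Thread subclass declares beyond those Thread itself offers. */
    static List<String> interThreadBoundaries(Class<?> c) {
        List<String> names = new ArrayList<>();
        if (!Thread.class.isAssignableFrom(c)) {
            return names; // not a thread class, so no inter-thread boundaries by this rule
        }
        for (Method m : c.getDeclaredMethods()) {
            if (!Modifier.isPublic(m.getModifiers()) || m.isSynthetic()) {
                continue;
            }
            try {
                // Methods that Thread already defines (e.g., run) are not custom interfaces.
                Thread.class.getMethod(m.getName(), m.getParameterTypes());
            } catch (NoSuchMethodException e) {
                names.add(m.getName());
            }
        }
        Collections.sort(names);
        return names;
    }
}

/** Hypothetical thread class with one custom public method. */
class DemoProcessor extends Thread {
    public void processRequest(String request) { }

    @Override
    public void run() { }

    void helper() { }
}
```

For `DemoProcessor`, only `processRequest` is selected: `run` is inherited from `Thread`, and `helper` is not public.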

5.2 Identify Observer and Observed

At each observation boundary, we must identify the observer and subject. Both identities are specific to the distributed system being monitored. For thread-level observation boundaries, the thread identities are statically analyzable, e.g., the name of the thread or class that provides the public interfaces. For process-level boundaries, the observer identity is the process’s own identity in the distributed system, which is known when the process starts; it only requires one-time registration with Panorama. We can also usually identify the subject identity, if the remote invocations use well-known methods, via either an argument of the function invocation or a field in the class. A challenge is that sometimes, due to nested polymorphism, the subject identity may be located deep down in the type hierarchy. For example, it is not easy to determine if OutputStream.write() performs network I/O or local disk I/O. We address this challenge by changing the constructors of remote types (e.g., socket get I/O stream) to return a compatible wrapper that extends the return type with a subject field and can be differentiated from other types at runtime by checking if that field is set.
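The wrapper idea can be sketched as follows. The names `SubjectOutputStream` and `SubjectLookup` are hypothetical, and the sketch wraps a stream explicitly where the analyzer would rewrite the code that creates it; an `instanceof` check stands in for testing whether the subject field is set.

```java
import java.io.FilterOutputStream;
import java.io.OutputStream;

/** Hypothetical wrapper: an OutputStream that remembers which remote subject it writes to. */
class SubjectOutputStream extends FilterOutputStream {
    final String subject;

    SubjectOutputStream(OutputStream out, String subject) {
        super(out);
        this.subject = subject;
    }
}

class SubjectLookup {
    /** Returns the subject if the stream crosses a component boundary, otherwise null. */
    static String subjectOf(OutputStream out) {
        if (out instanceof SubjectOutputStream) {
            return ((SubjectOutputStream) out).subject;
        }
        return null; // e.g., a local file or in-memory stream
    }
}
```

Because the wrapper is still an `OutputStream`, callers that only see the base type keep working, while a hook can ask `SubjectLookup.subjectOf` whether a given write targets a remote component.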

5.3 Extract Observation

Once we have observation boundaries, the next step is to

search near them for observation points: program points

that can supply critical evidence about observed compo-

nents. A typical example of such an observation point is

USENIX Association 13th USENIX Symposium on Operating Systems Design and Implementation 7

void deserialize(DataTree dt, InputArchive ia)

{

DataNode node = ia.readRecord("node");

if (node.parent == null) {

LOG.error("Missing parent.");

throw new IOException("Invalid Datatree");

}

dt.add(node);

}

void snapshot() {

ia = BinaryInputArchive.getArchive(

sock.getInputStream());

try {

deserialize(getDataTree(), ia);

} catch (IOException e) {

sock.close();

}

}

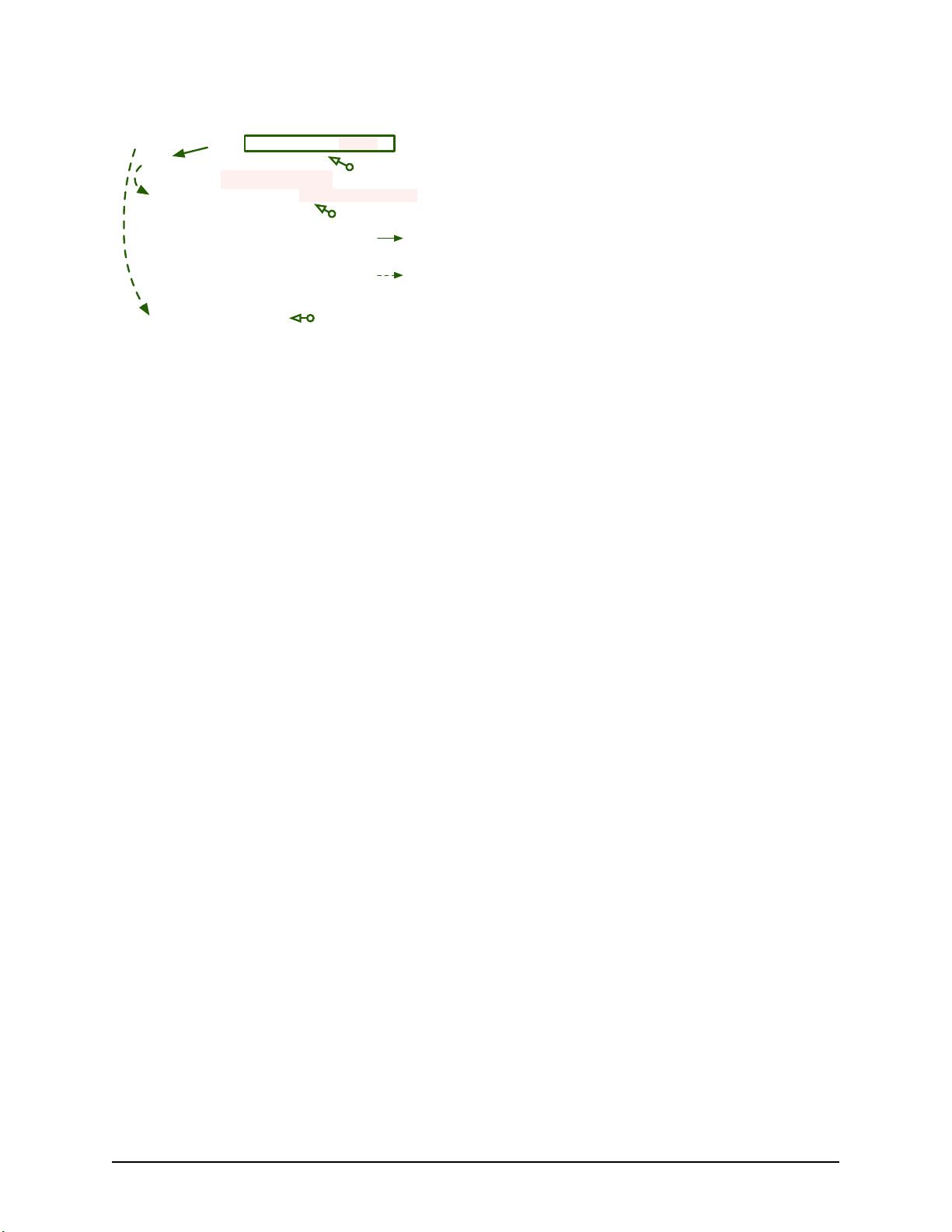

Ob-Point

Ob-Point

Ob-Boundary

data flow

control flow

Figure 3: Observation points in direct interaction (§4.2).

an exception handler invoked when an exception occurs

at an observation boundary.

To locate observation points that are exception handlers, a straightforward approach is to first identify the type of exceptions an observation boundary can generate, then locate the catch clauses for these types in code regions after the boundary. There are two challenges with this approach. First, as shown in Figure 3, an exception could be caught at the caller or caller’s caller. Recursively walking up the call chain to locate the clause is cumbersome and could be inaccurate. Second, the type of exception thrown by the boundary could be a generic exception such as IOException that could be generated by other non-boundary code in the same try clause. These two challenges can be addressed by inserting a try just before the boundary and a catch right after it. This works but, if the observation boundaries are frequent, the excessive wrapping can cause non-trivial overhead.

The ideal place to instrument is the shared exception handler for adjacent invocations. Our solution is to add a special field in the base Throwable class to indicate the subject identity and the context, and to ensure boundary-generated exceptions set this field. Then, when an exception handler is triggered at runtime, we can check if this field is set, and if so treat it as an observation point. We achieve the field setting by wrapping the outermost function body of each boundary method with a try and catch, and by rethrowing the exception after the hook. Note that this preserves the original program semantics.
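A stand-alone sketch cannot add a field to `Throwable`, so the version below substitutes a side table keyed by the exception object; that substitution is an assumption made for illustration, as are the names `BoundaryTags` and `SnapshotReader` and the subject string `"leader"`. The structure is otherwise the same: the boundary method tags and rethrows, and the shared handler checks the tag.

```java
import java.io.IOException;
import java.util.Collections;
import java.util.Map;
import java.util.WeakHashMap;

/** Stand-in for the extra Throwable field: a side table from exception to subject. */
class BoundaryTags {
    private static final Map<Throwable, String> TAGS =
            Collections.synchronizedMap(new WeakHashMap<>());

    static <T extends Throwable> T tag(T e, String subject) {
        TAGS.put(e, subject);
        return e;
    }

    static String subjectOf(Throwable e) {
        return TAGS.get(e);
    }
}

class SnapshotReader {
    /** Boundary method: the body is wrapped so any exception is tagged, then rethrown. */
    static int readFromLeader(boolean fail) throws IOException {
        try {
            if (fail) {
                throw new IOException("connection reset");
            }
            return 42;
        } catch (IOException e) {
            throw BoundaryTags.tag(e, "leader"); // same exception object, now carrying a subject
        }
    }

    /** Non-boundary code that can throw the same generic exception type. */
    static void writeLocalLog(boolean fail) throws IOException {
        if (fail) {
            throw new IOException("disk full");
        }
    }

    /** Shared handler: returns the subject to report a negative observation about, or null. */
    static String sync(boolean remoteFails, boolean diskFails) {
        try {
            readFromLeader(remoteFails);
            writeLocalLog(diskFails);
            return null;
        } catch (IOException e) {
            return BoundaryTags.subjectOf(e); // set only for boundary-generated exceptions
        }
    }
}
```

The single `catch` block serves both calls, yet only the failure that originated at the boundary yields a subject, which is the distinction the generic `IOException` type alone cannot make.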

Another type of observation point we look for is one where the program handles a response received from across a boundary. For example, the program may raise an exception for a missing field or wrong signature in the returned DataNode in Figure 3, indicating potential partial failure or corrupt state in the remote process. To locate these observation points, our analyzer performs intra-procedural analysis to follow the data flow of responses from a boundary. If an exception thrown is control-dependent on the response, we consider it an observation point, and we insert code to set the subject/context field before throwing the exception just as described earlier. This data-flow analysis is conservative: e.g., the code if (a + b > 100) {throw new Exception("unexpected");}, where a comes from a boundary but b does not, is not considered an observation point because the exception could be due to b. In other words, our analysis may miss some observation points but will not locate wrong observation points.

So far, we have described negative observation points, but we also need mechanisms to make positive observations. Ideally, each successful interaction across a boundary is an observation point that can report positive evidence. But, if these boundaries appear frequently, the positive observation points can be excessive. So, we coalesce similar positive observation points that are located close together.

For each observation point, the analyzer inserts hooks to discover evidence and report it. At each negative observation point, we get the subject identity and context from the modified exception instance. We statically choose the status; if the status is to be some level of UNHEALTHY then we set this level based on the severity of the exception handling. For example, if the exception handler calls System.exit(), we set the status to a high level of UNHEALTHY. At each positive observation point, we get the context from the nearby boundary and also statically choose the status. We immediately report each observation to the Panorama library, but the library will typically not report it synchronously. The library will buffer excessive observations and send them in one aggregate message later.
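The buffering behavior might look like the sketch below. The text does not say what triggers the aggregate message, so the size-based trigger, the `batchSize` parameter, and the class name `BufferedReporter` are all assumptions; the `sent` list stands in for messages delivered to the LOS.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical client-side buffer: hooks call report(), which returns without sending. */
class BufferedReporter {
    private final int batchSize; // assumed trigger: flush once this many are buffered
    private final List<String> buffer = new ArrayList<>();
    final List<List<String>> sent = new ArrayList<>(); // stands in for messages to the LOS

    BufferedReporter(int batchSize) {
        this.batchSize = batchSize;
    }

    /** Called by an inserted hook; cheap because it normally only appends to the buffer. */
    synchronized void report(String observation) {
        buffer.add(observation);
        if (buffer.size() >= batchSize) {
            flush();
        }
    }

    /** Sends everything buffered so far as one aggregate message. */
    synchronized void flush() {
        if (buffer.isEmpty()) {
            return;
        }
        sent.add(new ArrayList<>(buffer));
        buffer.clear();
    }
}
```

A real client would likely also flush on a timer so that a quiet component does not hold observations indefinitely; that part is left out here.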

5.4 Handling Indirection

As we discussed in §4, observability can be reduced when indirection exists at an observation boundary. For instance, extracted observations may report the subject as healthy while it is in fact unhealthy. The core issue is that indirection splits a single interaction between components among multiple observation boundaries. A successful result at the first observation boundary may only indicate partial success of the overall interaction; the interaction may only truly complete later, when, e.g., a callback is invoked, or a condition variable unblocks, or a timeout occurs. We must ideally wait for an interaction to complete before making an observation.

We call the two locations of a split interaction the ob-

origin and ob-sink, reflecting the order they’re encoun-

tered. Observations at the ob-origin represent positive

but temporary and weak evidence. For example, in Fig-

ure

4, the return from sendRR is an ob-origin. Where the

8 13th USENIX Symposium on Operating Systems Design and Implementation USENIX Association

public List<Row> fetchRows() {

ReadCommand command = ...;

sendRR(command.newMessage(), endPoint, handler);

...

try {

Row row = handler.get();

}

catch (ReadTimeoutException ex) {

throw ex;

}

catch (DigestMismatchException ex) {

logger.error("Digest mismatch: {}", ex);

}

}

public void response(MessageIn message) {

resolver.preprocess(message);

condition.signal();

}

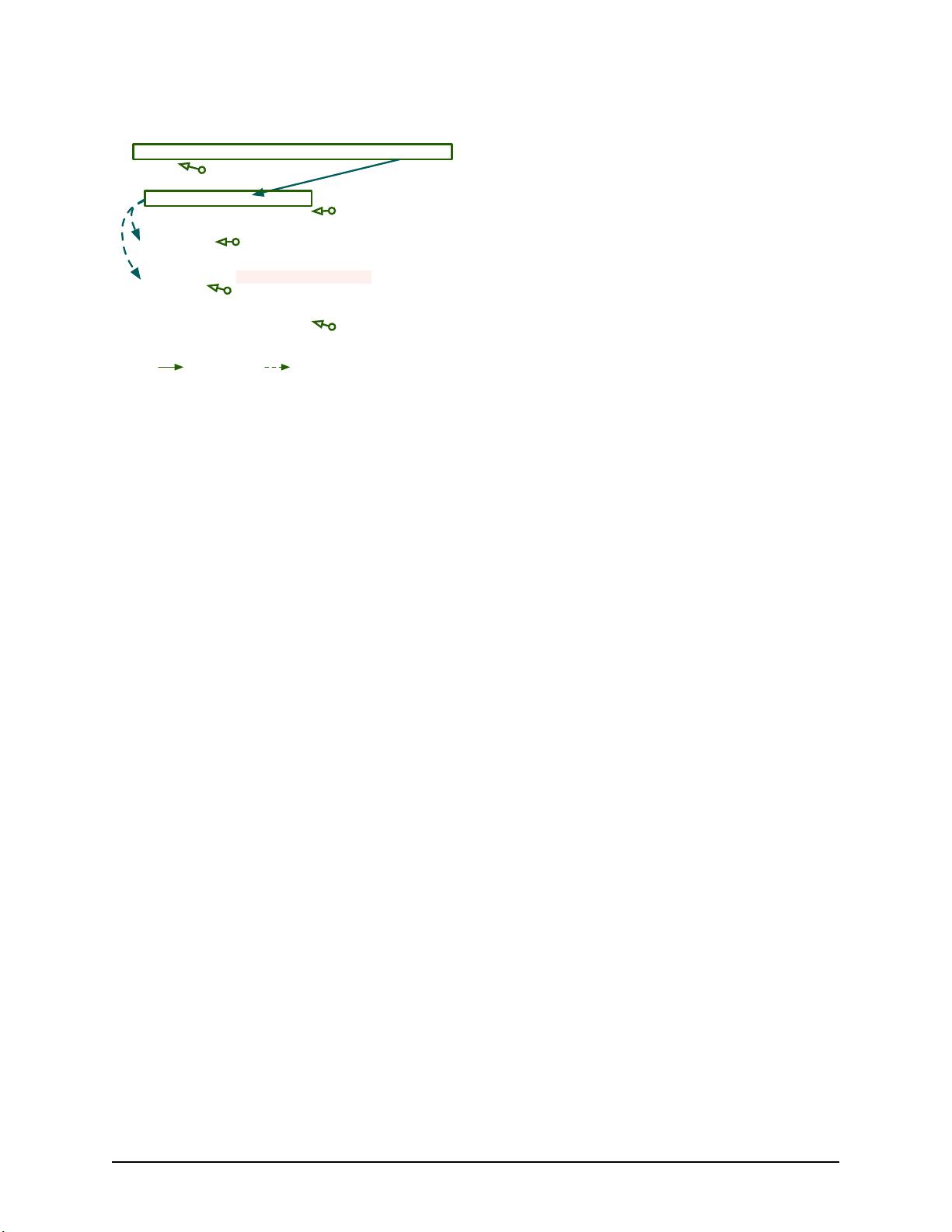

data flow control flow

Ob-Origin

Ob-Point

Ob-Sink

Ob-Point

Ob-Sink

Figure 4: Observation points when indirection exists (§4.2).

callback of handler, response, is invoked, it is an ob-

sink. In addition, when the program later blocks waiting

for the callback, e.g., handler.get, the successful return

is also an ob-sink. If an ob-origin is properly matched

with an ob-sink, the positive observation becomes com-

plete and strong. Otherwise, an outstanding ob-origin is

only a weak observation and may degrade to a negative

observation, e.g., when handler.get times out.

Tracking an interaction split across multiple program locations is challenging given the variety of indirection implementations. To properly place hooks when indirection exists, the Panorama analyzer needs to know what methods are asynchronous and the mechanisms for notification. For instance, a commonly used one is Java FutureTask [40]. For custom methods, this knowledge comes from specifications of the boundary-crossing interfaces, which only requires moderate annotation. With this knowledge, the analyzer considers an ob-origin to be immediately after any call site of an asynchronous interface. We next discuss how to locate ob-sinks.

We surveyed the source code of popular distributed systems and found the majority of ob-sinks fall into four patterns: (1) invoking a callback-setting method; (2) performing a blocking wait on a callback method; (3) checking a completion flag; and (4) reaching another observation boundary with a third component, in cases when a request must be passed on further. For the first two patterns, the analyzer considers the ob-sink to be before and after the method invocation, respectively. For the third pattern, the analyzer locates the spin-loop body and considers the ob-sink to be immediately after the loop. The last pattern resembles SEDA [48]: after A asynchronously sends a request to B, B does not notify A of the status after it finishes but rather passes on the request to C. Therefore, for that observation boundary in B, the analyzer needs to not only insert a hook for C but also treat it as an ob-sink for the A-to-B interaction.

When our analyzer finds an ob-origin, it inserts a hook that submits an observation with the special status PENDING. This means that the observer currently only sees weak positive evidence about the subject’s status, but expects to receive stronger evidence shortly. At any ob-sink indicating positive evidence, our analyzer inserts a hook to report a HEALTHY observation. At any ob-sink indicating negative evidence, the analyzer inserts a hook to report a negative observation.

To link an ob-sink observation with its corresponding ob-origin observation, these observations must share the same subject and context. To ensure this, the analyzer uses a similar technique as in exception tracking. It adds a special field containing the subject identity and context to the callback handler, and inserts code to set this field at the ob-origin. If the callback is not instrumentable, e.g., because it is an integer resource handle, then the analyzer inserts a call to the Panorama library to associate the handle with an identity and context.
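The pairing of ob-origin and ob-sink hooks can be sketched with a `CompletableFuture` playing the role of the callback handler. All names here (`ObservationLog`, `TaggedHandle`, `Requester`, `send`, `awaitReply`) are hypothetical, statuses are plain strings, and the list inside `ObservationLog` stands in for submissions to the Panorama library.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

/** Hypothetical sink for observations; records them in order for inspection. */
class ObservationLog {
    final List<String> entries = new ArrayList<>();

    void report(String subject, String context, String status) {
        entries.add(subject + "/" + context + ":" + status);
    }
}

/** Callback handle extended with the subject and context that are set at the ob-origin. */
class TaggedHandle<T> {
    final CompletableFuture<T> future = new CompletableFuture<>();
    String subject;
    String context;
}

class Requester {
    /** Asynchronous send; the hook right after the call site is the ob-origin. */
    static <T> TaggedHandle<T> send(String subject, String context, ObservationLog log) {
        TaggedHandle<T> handle = new TaggedHandle<>();
        handle.subject = subject;               // set at the ob-origin so the sink can match it
        handle.context = context;
        log.report(subject, context, "PENDING"); // weak positive evidence only
        return handle;
    }

    /** Blocking wait on the reply; the hooks after it are ob-sinks. */
    static <T> T awaitReply(TaggedHandle<T> handle, ObservationLog log, long timeoutMs) {
        try {
            T value = handle.future.get(timeoutMs, TimeUnit.MILLISECONDS);
            log.report(handle.subject, handle.context, "HEALTHY");   // interaction completed
            return value;
        } catch (TimeoutException | ExecutionException e) {
            log.report(handle.subject, handle.context, "UNHEALTHY"); // negative evidence
            return null;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        }
    }
}
```

Because the sink reads the subject and context from the handle, the HEALTHY or UNHEALTHY report always carries the same pair as the earlier PENDING report, which is what lets a decision engine match them.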

Sometimes, the analyzer finds an ob-origin but cannot find the corresponding ob-sink or cannot extract the subject identity or context. This can happen due to either lack of knowledge or the developers having forgotten to check for completion in the code. In such a case, the analyzer will not instrument the ob-origin, to avoid making misleading PENDING observations.

We find that ob-origin and ob-sink separation is useful in detecting not only issues involving indirection but also liveness issues. To see why, consider what happens when A invokes a boundary-crossing blocking function of B, and B gets stuck so the function never returns. When this happens, even though A witnesses B’s problem, it does not get a chance to report the issue because it never reaches the observation point following the blocking call. Inserting an ob-origin before the function call provides evidence of the liveness issue: LOSes will see an old PENDING observation with no subsequent corresponding ob-sink observation. Thus, besides asynchronous interfaces, call sites of synchronous interfaces that may block for long should also be included in the ob-origin set.

6 Implementation

We implemented the Panorama service in ∼6,000 lines of Go code, and implemented the observability analyzer (§5) using the Soot analysis framework [46] and the AspectJ instrumentation framework [2].

We defined Panorama’s interfaces using protocol buffers [7]. We then used the gRPC framework [5] to build the RPC service and to generate clients in different languages. So, the system can be easily used by various components written in different languages. Panorama provides a thin library that wraps the gRPC client for
