Exploring Randomly Wired Neural Networks for Image Recognition

Saining Xie, Alexander Kirillov, Ross Girshick, Kaiming He

Facebook AI Research (FAIR)

Abstract

Neural networks for image recognition have evolved through extensive manual design from simple chain-like models to structures with multiple wiring paths. The success of ResNets [11] and DenseNets [16] is due in large part to their innovative wiring plans. Now, neural architecture search (NAS) studies are exploring the joint optimization of wiring and operation types; however, the space of possible wirings is constrained and still driven by manual design despite being searched. In this paper, we explore a more diverse set of connectivity patterns through the lens of randomly wired neural networks. To do this, we first define the concept of a stochastic network generator that encapsulates the entire network generation process. Encapsulation provides a unified view of NAS and randomly wired networks. Then, we use three classical random graph models to generate randomly wired graphs for networks. The results are surprising: several variants of these random generators yield network instances that have competitive accuracy on the ImageNet benchmark. These results suggest that new efforts focusing on designing better network generators may lead to new breakthroughs by exploring less constrained search spaces with more room for novel design.

1. Introduction

What we call deep learning today descends from the connectionist approach to cognitive science [38, 7], a paradigm reflecting the hypothesis that how computational networks are wired is crucial for building intelligent machines. Echoing this perspective, recent advances in computer vision have been driven by moving from models with chain-like wiring [19, 53, 42, 43] to more elaborate connectivity patterns, e.g., ResNet [11] and DenseNet [16], that are effective in large part because of how they are wired.

Advancing this trend, neural architecture search (NAS)

[55, 56] has emerged as a promising direction for jointly

searching wiring patterns and which operations to per-

form. NAS methods focus on search [55, 56, 33, 26, 29,

27] while implicitly relying on an important—yet largely

overlooked—component that we call a network generator

(defined in §3.1). The NAS network generator defines a

family of possible wiring patterns from which networks

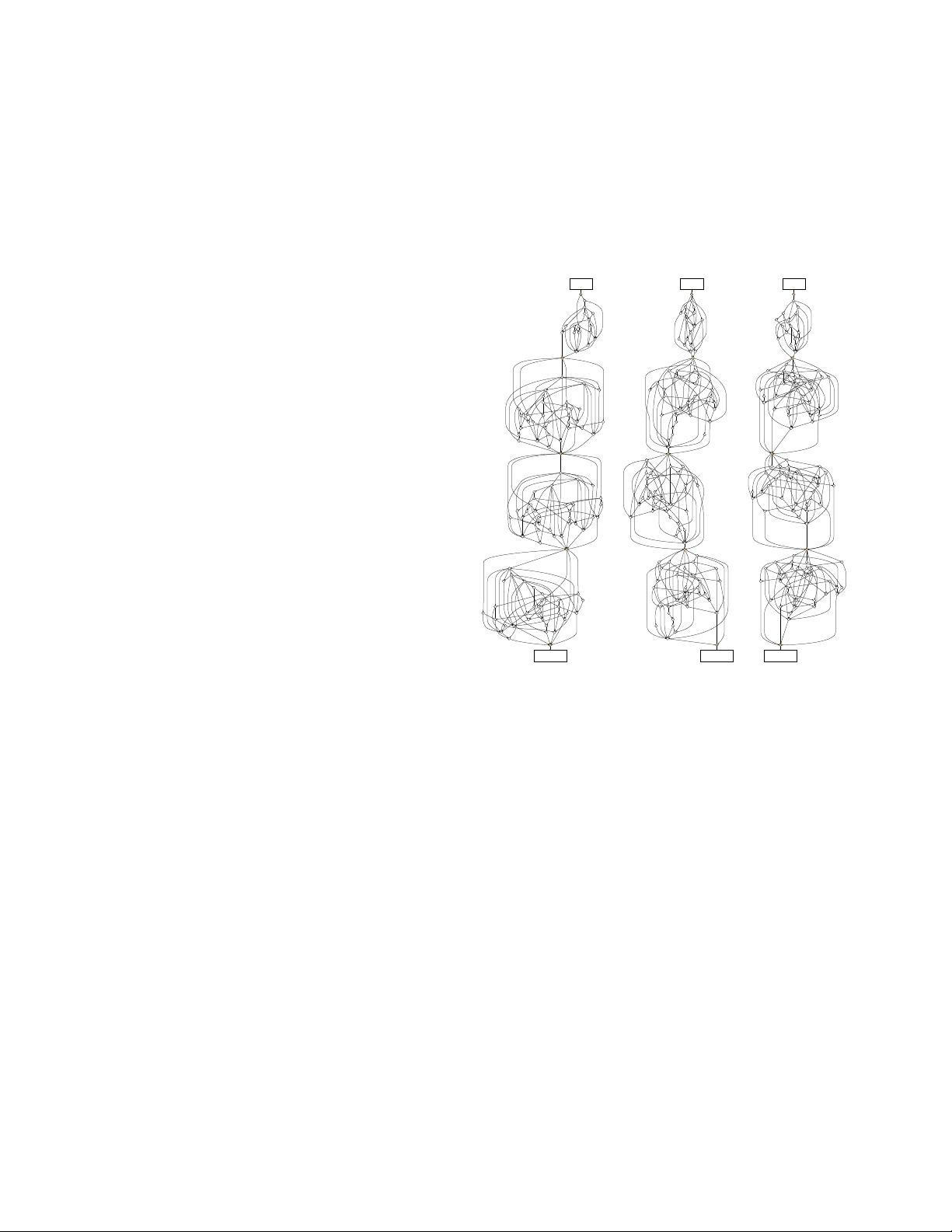

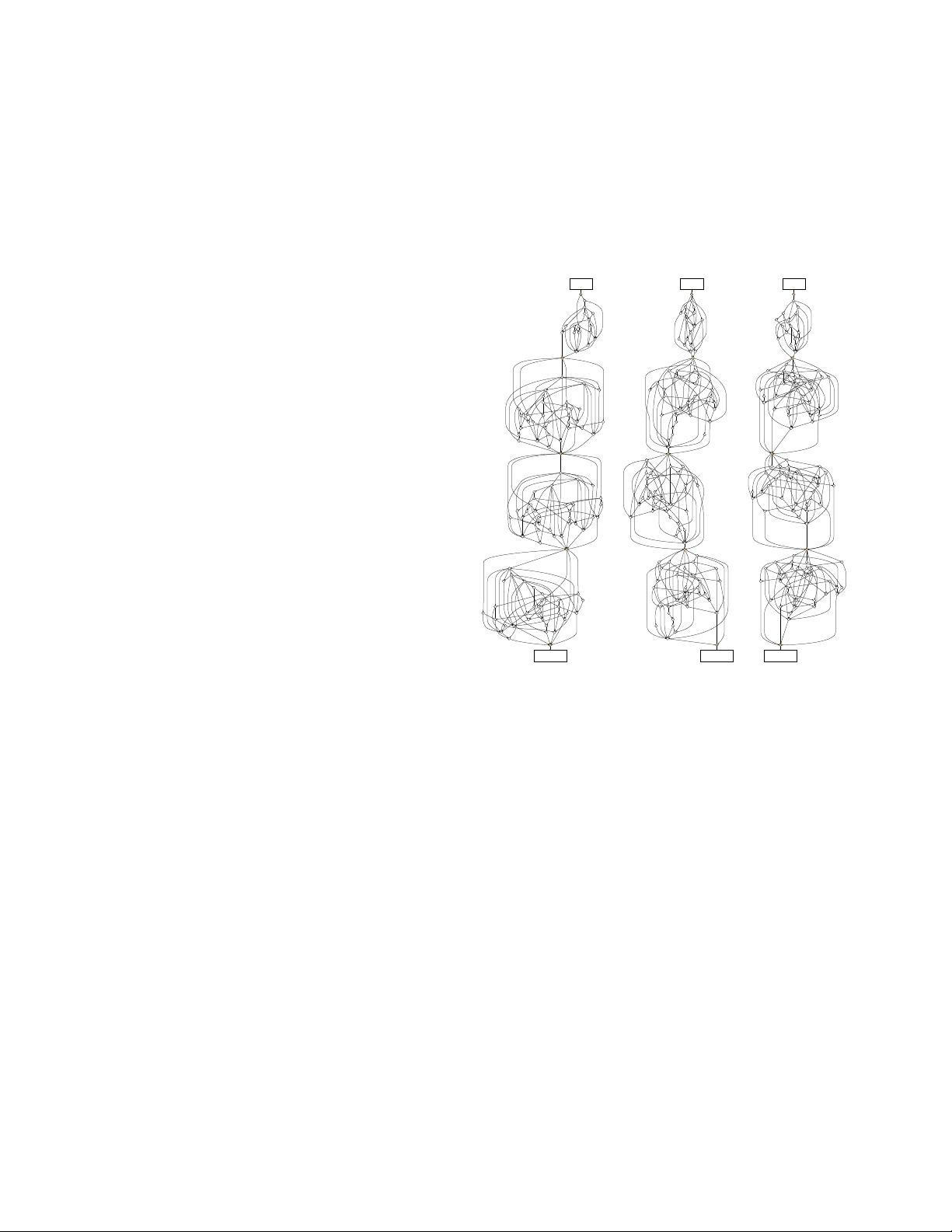

classifier classifier classifier

conv

1

conv

1

conv

1

Figure 1. Randomly wired neural networks generated by the

classical Watts-Strogatz (WS) [50] model: these three instances

of random networks achieve (left-to-right) 79.1%, 79.1%, 79.0%

classification accuracy on ImageNet under a similar computational

budget to ResNet-50, which has 77.1% accuracy.

are sampled subject to a learnable probability distribution.

However, like the wiring patterns in ResNet and DenseNet,

the NAS network generator is hand designed and the space

of allowed wiring patterns is constrained in a small subset

of all possible graphs. Given this perspective, we ask: What

happens if we loosen this constraint and design novel net-

work generators?

We explore this question through the lens of randomly wired neural networks that are sampled from stochastic network generators, in which a human-designed random process defines generation. To reduce bias from us (the authors of this paper) on the generators, we use three classical families of random graph models in graph theory [51]: the Erdős-Rényi (ER) [6], Barabási-Albert (BA) [1], and Watts-Strogatz (WS) [50] models. To define complete networks, we convert a random graph into a directed acyclic graph (DAG) and apply a simple mapping from nodes to their functional roles (e.g., to the same type of convolution).
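The pipeline described in this paragraph (sample a random graph, turn it into a DAG, map nodes to operations) can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions, not the implementation used for the experiments: the function names are hypothetical, the ER model is used because it is the simplest of the three families, the edge orientation rule (from the smaller to the larger node index) is one simple way to guarantee acyclicity that we assume here, and the `"conv"` label is a placeholder for whatever operation a node would carry.

```python
import random
from itertools import combinations


def erdos_renyi_edges(n, p, seed=None):
    """Sample an undirected ER graph G(n, p).

    Each of the n*(n-1)/2 node pairs is connected independently
    with probability p. Returns a list of (i, j) pairs with i < j.
    """
    rng = random.Random(seed)
    return [(i, j) for i, j in combinations(range(n), 2) if rng.random() < p]


def to_dag(edges):
    """Orient every undirected edge from the smaller to the larger index.

    Assumption: index-based orientation is used here because it rules out
    cycles by construction (every directed edge strictly increases the index).
    """
    return sorted((min(u, v), max(u, v)) for u, v in edges)


def assign_ops(n, op="conv"):
    """Map every node to the same functional role (placeholder label)."""
    return {node: op for node in range(n)}


def input_output_nodes(n, dag_edges):
    """Nodes without predecessors act as inputs, nodes without successors as outputs."""
    has_pred = {v for _, v in dag_edges}
    has_succ = {u for u, _ in dag_edges}
    inputs = [v for v in range(n) if v not in has_pred]
    outputs = [v for v in range(n) if v not in has_succ]
    return inputs, outputs


if __name__ == "__main__":
    n = 8
    dag = to_dag(erdos_renyi_edges(n, p=0.4, seed=0))
    ops = assign_ops(n)
    inputs, outputs = input_output_nodes(n, dag)
    print("directed edges:", dag)
    print("node operations:", ops)
    print("input nodes:", inputs, "output nodes:", outputs)
```

Fixing the seed makes a sampled wiring reproducible, so a single network instance can be regenerated exactly. Swapping `erdos_renyi_edges` for a BA or WS sampler would leave the other steps unchanged.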


arXiv:1904.01569v2 [cs.CV] 8 Apr 2019