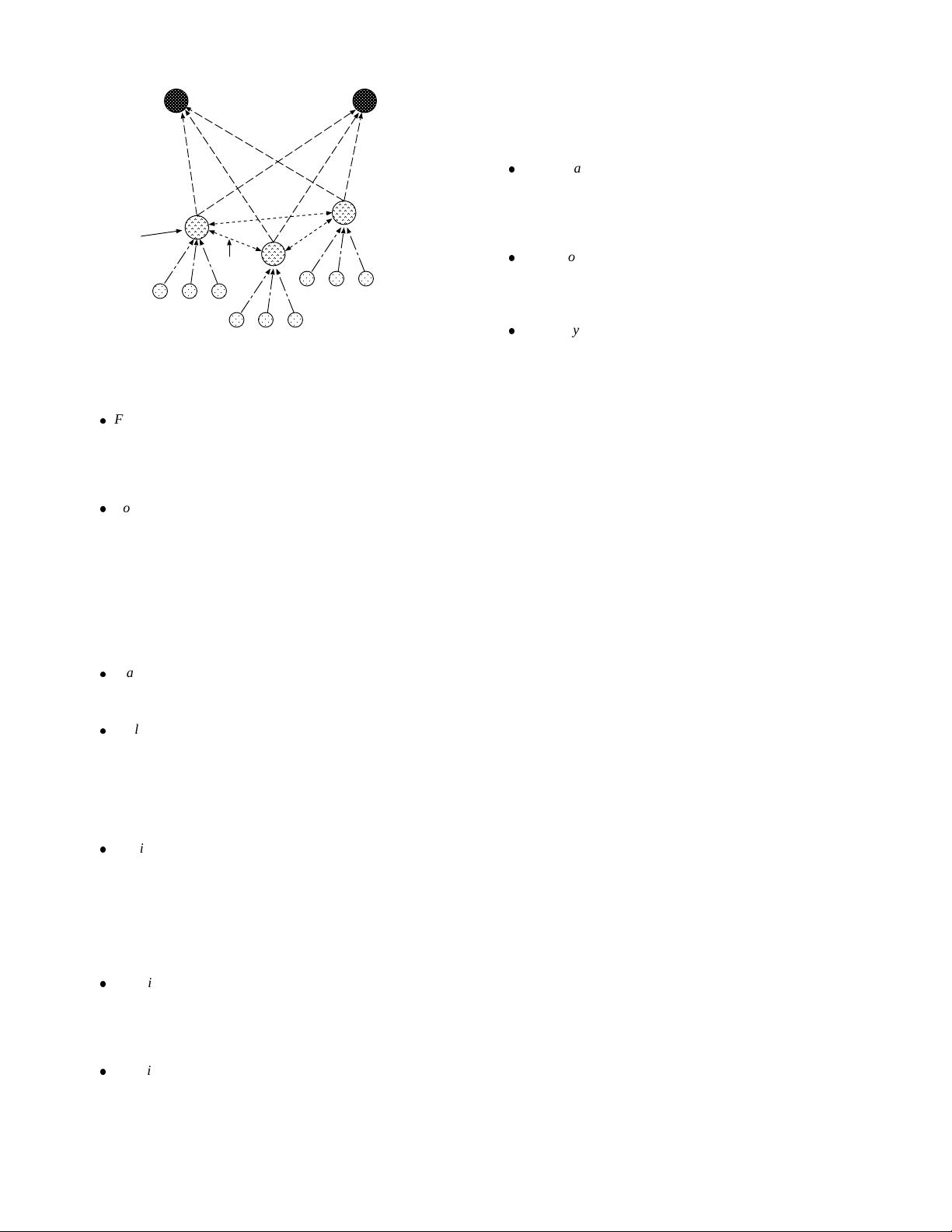

clients

clients

clients

Web server Web server

proxy

cooperation

Figure 3: A generic WWW caching system.

Fast access. From the users’ point of view, access latency is an important measure of the quality of Web service. A desirable caching system should aim at reducing Web access latency. In particular, it should give users a lower average latency than they would experience without a caching system.
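To make the "lower average latency" condition concrete, consider a simplified single-cache model (an assumption for illustration, not a model taken from the literature surveyed here). Let $h$ be the cache hit ratio, $T_{\mathrm{hit}}$ the latency of serving a request from the cache, $T_{\mathrm{lookup}}$ the extra delay a miss spends at the cache, and $T_{\mathrm{server}}$ the latency of fetching directly from the server. The average latency with the cache, and the condition under which it beats direct access, are:

$$\bar{T} = h\,T_{\mathrm{hit}} + (1-h)\,\bigl(T_{\mathrm{lookup}} + T_{\mathrm{server}}\bigr)$$

$$\bar{T} < T_{\mathrm{server}} \iff h\,T_{\mathrm{hit}} + (1-h)\,T_{\mathrm{lookup}} < h\,T_{\mathrm{server}}$$

In this model, a cache with a low hit ratio or a slow lookup can make average latency worse than having no cache at all.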

Robustness. From the users’ perspective, robustness means availability, which is another important measure of the quality of Web service. Users want Web service to be available whenever they need it. Robustness has three aspects. First, the crash of a few proxies should not bring down the entire system; the caching system should eliminate single points of failure as much as possible. Second, the caching system should fall back gracefully in case of failures. Third, the caching system should be designed so that it is easy to recover from a failure.
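The second aspect, graceful fallback, can be illustrated with a minimal sketch. It assumes a client that knows an ordered list of proxies and can always reach the origin server directly. The `Fetcher` type, `fetch_with_fallback`, and the stand-in fetchers are hypothetical names for illustration, not part of any system described in the literature.

```python
from typing import Callable, Sequence

# Hypothetical fetcher type: returns the document body, or raises
# ConnectionError when the proxy (or server) cannot be reached.
Fetcher = Callable[[str], bytes]


def fetch_with_fallback(url: str, proxies: Sequence[Fetcher], origin: Fetcher) -> bytes:
    """Try each proxy in order; if every proxy fails, go to the origin server."""
    for proxy in proxies:
        try:
            return proxy(url)
        except ConnectionError:
            # A crashed proxy is skipped instead of failing the whole request.
            continue
    # No proxy is reachable: fall back to uncached access rather than no access.
    return origin(url)


# Usage with stand-in fetchers (hypothetical, for illustration only).
def dead_proxy(url: str) -> bytes:
    raise ConnectionError("proxy down")


def live_proxy(url: str) -> bytes:
    return b"from proxy"


def origin_server(url: str) -> bytes:
    return b"from origin"


print(fetch_with_fallback("http://example.com/", [dead_proxy, live_proxy], origin_server))  # b'from proxy'
print(fetch_with_fallback("http://example.com/", [dead_proxy], origin_server))  # b'from origin'
```

The design choice here is that a failure only costs the user the benefit of caching (a slower, uncached fetch) rather than access to the document itself.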

Transparency. A Web caching system should be transparent to the user; the only effects the user should notice are faster response and higher availability.

Scalability. Network size and density have grown explosively over the last decades, and even more rapid growth is expected in the near future. The key to success in such an environment is scalability. We would like a caching scheme to scale well with the increasing size and density of the network. This requires all protocols employed in the caching system to be as lightweight as possible.

Efficiency. There are two aspects to efficiency. First, how much overhead does the Web caching system impose on the network? We would like a caching system to impose minimal additional burden on the network, including both the control packets and the extra data packets incurred by using the caching system. Second, the caching system should not adopt any scheme that leads to under-utilization of critical resources in the network.

Adaptivity. The caching system should adapt to dynamically changing user demand and network conditions. Adaptivity involves several aspects, including cache management, cache routing, and proxy placement. It is essential for achieving optimal performance.

Stability. The schemes used in a Web caching system should not introduce instabilities into the network. For example, naive cache routing based on dynamic network information will result in oscillation. Such oscillation is undesirable because the network becomes under-utilized and the variance of the access latency to a proxy or server becomes very high.
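The oscillation described above can be reproduced with a toy model. Assume two proxies and a fixed client population that all see the same load measurement from the previous round (these assumptions, and the function names, are hypothetical). Under naive routing, every client moves to whichever proxy looked lighter, so the whole load flips back and forth. A damped rule, shown only for contrast, shifts a fraction `alpha` of half the imbalance each round and converges instead.

```python
from typing import List


def naive_routing(total: int, rounds: int) -> List[List[float]]:
    """Each round, all clients switch to the proxy that looked least loaded last round."""
    loads = [float(total), 0.0]  # start with every client on proxy 0
    history = [loads[:]]
    for _ in range(rounds):
        lighter = 0 if loads[0] < loads[1] else 1
        loads = [0.0, 0.0]
        loads[lighter] = float(total)  # the whole population moves at once
        history.append(loads[:])
    return history


def damped_routing(total: int, rounds: int, alpha: float = 0.5) -> List[List[float]]:
    """Each round, move only a fraction alpha of half the load difference."""
    loads = [float(total), 0.0]
    history = [loads[:]]
    for _ in range(rounds):
        shift = alpha * (loads[0] - loads[1]) / 2  # positive: move load from 0 to 1
        loads = [loads[0] - shift, loads[1] + shift]
        history.append(loads[:])
    return history


print(naive_routing(100, 4))
# [[100.0, 0.0], [0.0, 100.0], [100.0, 0.0], [0.0, 100.0], [100.0, 0.0]]
print(damped_routing(100, 3))
# [[100.0, 0.0], [75.0, 25.0], [62.5, 37.5], [56.25, 43.75]]
```

In the naive case one proxy is always idle while the other carries everything, which is exactly the under-utilization and latency variance the text warns about.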

Load balancing. The caching scheme should distribute the load evenly across the entire network. No single proxy or server should become a bottleneck (or hot spot) that degrades the performance of a portion of the network or even slows down the entire service system.

Ability to deal with heterogeneity. As networks grow in scale and coverage, they span a range of hardware and software architectures. A Web caching scheme needs to adapt to this range of network architectures.

Simplicity. Simplicity is always an asset. Simpler schemes are easier to implement and more likely to be accepted as international standards. We would like an ideal Web caching mechanism to be simple to deploy.

4 Web caching schemes

Having described the attributes of an ideal Web caching system, we now survey some schemes described in the literature and point out their inadequacies.

4.1 Caching architectures

The performance of a Web cache system depends on the size of its client community: the bigger the user community, the higher the probability that a cached (previously requested) document will soon be requested again. Caches sharing mutual trust may assist each other to increase the hit rate. A caching architecture should provide the paradigm for proxies to cooperate efficiently with each other.

4.1.1 Hierarchical caching architecture

One approach to coordinating caches in the same system is to set up a caching hierarchy. With hierarchical caching, caches are placed at multiple levels of the network. For the sake of simplicity, we assume that there are four levels of caches: bottom, institutional, regional, and national [69]. At the bottom level of the hierarchy are the client/browser caches. When a request is not satisfied by the client cache, it is redirected to the institutional cache. If the document is not found at the institutional level, the request is forwarded to the regional cache, which in turn forwards unsatisfied requests to the national cache. If the document is not found at any cache level, the national cache contacts the original server directly. When the document is found, either at a cache or at the original server, it travels down the hierarchy, leaving a copy at each of the intermediate caches along its path. Further requests for the same document travel up the caching hierarchy until the document is hit at some cache level.
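The lookup procedure above can be sketched in a few lines. This is a minimal illustration under simplifying assumptions: each cache level is an unbounded dictionary with no eviction or consistency checks, and `CacheLevel`, `hierarchical_get`, and `origin_server` are hypothetical names, not the Harvest implementation. Two clients with private browser caches share the institutional, regional, and national levels, so a document fetched by one client becomes an institutional-level hit for the other.

```python
from typing import Callable, Dict, List, Optional, Tuple


class CacheLevel:
    """One level of the hierarchy: client, institutional, regional, or national."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.store: Dict[str, bytes] = {}  # unbounded, no eviction (simplification)


def hierarchical_get(url: str, levels: List[CacheLevel],
                     origin: Callable[[str], bytes]) -> Tuple[bytes, str]:
    """Look up url from the bottom level upward; return (body, where it was found)."""
    missed: List[CacheLevel] = []
    body: Optional[bytes] = None
    source = "origin"
    for level in levels:  # levels[0] is the client cache, levels[-1] the national cache
        if url in level.store:
            body, source = level.store[url], level.name
            break
        missed.append(level)
    if body is None:
        body = origin(url)  # no level had it: the top level contacts the server
    for level in missed:  # the document travels down, leaving a copy at each level
        level.store[url] = body
    return body, source


def origin_server(url: str) -> bytes:
    return b"<html>document</html>"  # hypothetical stand-in for the Web server


shared = [CacheLevel(n) for n in ("institutional", "regional", "national")]
client_a = [CacheLevel("client-A")] + shared
client_b = [CacheLevel("client-B")] + shared

print(hierarchical_get("http://example.com/x", client_a, origin_server)[1])  # origin
print(hierarchical_get("http://example.com/x", client_a, origin_server)[1])  # client-A
print(hierarchical_get("http://example.com/x", client_b, origin_server)[1])  # institutional
```

Copying the document into every level it passes through is what lets popular pages diffuse towards the demand, at the cost of storing the same document at several levels.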

Hierarchical Web caching was first proposed in the Harvest project [14]. Other examples of hierarchical caching include Adaptive Web caching [58] and Access Driven cache [83]. A hierarchical architecture is more bandwidth-efficient, particularly when some cooperating cache servers do not have high-speed connectivity. In such a structure, popular Web pages can be efficiently diffused towards the demand. However, there are several problems associated with a caching hierarchy [69] [71]: