

Self-supervised Learning: Generative or Contrastive

Xiao Liu, Fanjin Zhang, Zhenyu Hou, Li Mian, Zhaoyu Wang, Jing Zhang, Jie Tang, Senior Member

Abstract—Deep supervised learning has achieved great success in the last decade. However, its dependence on manual labels and vulnerability to attacks have driven people to explore better solutions. As an alternative, self-supervised learning has attracted many researchers for its soaring performance on representation learning in the last several years. Self-supervised representation learning leverages the input data itself as supervision and benefits almost all types of downstream tasks. In this survey, we take a look into new self-supervised learning methods for representation in computer vision, natural language processing, and graph learning. We comprehensively review the existing empirical methods and summarize them into three main categories according to their objectives: generative, contrastive, and generative-contrastive (adversarial). We further investigate related theoretical analysis work to provide deeper thoughts on how self-supervised learning works. Finally, we briefly discuss open problems and future directions for self-supervised learning.

Index Terms—Self-supervised Learning, Generative Model, Contrastive Learning, Deep Learning


1 INTRODUCTION

Deep neural networks [77] have shown outstanding performance on various machine learning tasks, especially on supervised learning in computer vision (image classification [32], [54], [59], semantic segmentation [45], [85]), natural language processing (pre-trained language models [33], [74], [84], [149], sentiment analysis [83], question answering [5], [35], [111], [150], etc.) and graph learning (node classification [58], [70], [106], [138], graph classification [7], [123], [155], etc.). Generally, a supervised model is trained on a specific task with a large manually labeled dataset that is randomly divided into training, validation, and test sets.

However, supervised learning is meeting its bottleneck. It not only relies heavily on expensive manual labeling but also suffers from generalization error, spurious correlations, and adversarial attacks. We expect neural networks to learn more with fewer labels, fewer samples, or fewer trials. As a promising candidate, self-supervised learning has drawn massive attention for its data efficiency and generalization ability, with many state-of-the-art models following this paradigm. In this survey, we take a comprehensive look at the development of recent self-supervised learning models and discuss their theoretical soundness, including frameworks such as Pre-trained Language Models (PTM), Generative Adversarial Networks (GAN), Autoencoder and its extensions, Deep Infomax, and Contrastive Coding.

• Xiao Liu, Fanjin Zhang, and Zhenyu Hou are with the Department of Computer Science and Technology, Tsinghua University, Beijing, China. E-mail: liuxiao17@mails.tsinghua.edu.cn, zfj17@mails.tsinghua.edu.cn, hzy17@mails.tsinghua.edu.cn

• Jie Tang is with the Department of Computer Science and Technology, Tsinghua University, and Tsinghua National Laboratory for Information Science and Technology (TNList), Beijing, China, 100084. E-mail: jietang@tsinghua.edu.cn, corresponding author

• Li Mian is with the Beijing Institute of Technology, Beijing, China. E-mail: 1120161659@bit.edu.cn

• Zhaoyu Wang is with Anhui University, Anhui, China. E-mail: wzy950507@163.com

• Jing Zhang is with the Renmin University of China, Beijing, China. E-mail: zhang-jing@ruc.edu.cn

Fig. 1: An illustration distinguishing the supervised, unsupervised, and self-supervised learning frameworks. In self-supervised learning, the “related information” could be another modality, parts of the inputs, or another form of the inputs. Repainted from [31].

The term “self-supervised learning” was first introduced in robotics, where the training data is automatically labeled by finding and exploiting the relations between different input sensor signals. It was then borrowed by the field of machine learning. In a speech at AAAI 2020, Yann LeCun described self-supervised learning as “the machine predicts any parts of its input for any observed part.” We can summarize it in two classical definitions following LeCun’s:

• Obtain “labels” from the data itself by using a “semi-automatic” process.

• Predict part of the data from other parts.

Specifically, the “other part” here could be incomplete, transformed, distorted, or corrupted. In other words, the machine learns to “recover” the whole, parts of, or merely some features of its original input.

People often confuse unsupervised learning with self-supervised learning. Self-supervised learning can be viewed as a branch of unsupervised learning since there is no manual label involved. However, narrowly speaking, unsupervised learning concentrates on detecting specific data patterns, such as clustering, community discovery, or anomaly detection, while self-supervised learning aims at recovering, which is still in the paradigm of supervised settings. Figure 1 illustrates the differences between them.

arXiv:2006.08218v2 [cs.LG] 16 Jun 2020

There exist several comprehensive reviews related to Pre-trained Language Models [107], Generative Adversarial Networks [142], Autoencoder, and contrastive learning for visual representation [64]. However, none of them concentrates on the inspiring idea of self-supervised learning that enlightens researchers and models in many fields. In this work, we collect studies from natural language processing, computer vision, and graph learning in recent years to present an up-to-date and comprehensive retrospective on the frontier of self-supervised learning. To sum up, our contributions are:

• We provide a detailed and up-to-date review of self-supervised learning for representation. We introduce the background knowledge, models with variants, and important frameworks, so that one can easily grasp the frontier ideas of self-supervised learning.

• We categorize self-supervised learning models into generative, contrastive, and generative-contrastive (adversarial), with particular genres within each one. We demonstrate the pros and cons of each category and discuss the recent attempt to shift from generative to contrastive.

• We examine the theoretical soundness of self-supervised learning methods and show how it can benefit downstream supervised learning tasks.

• We identify several open problems in this field, analyze the limitations and boundaries, and discuss future directions for self-supervised representation learning.

We organize the survey as follows. In Section 2, we introduce the preliminary knowledge for computer vision, natural language processing, and graph learning. From Section 3 to Section 5, we introduce the empirical self-supervised learning methods utilizing generative, contrastive, and generative-contrastive objectives. In Section 6, we investigate the theoretical basis behind the success of self-supervised learning and its merits and drawbacks. In Section 7, we discuss the open problems and future directions in this field.

2 BACKGROUND

2.1 Representation Learning in NLP

Pre-trained word representations are key components in natural language processing tasks. Word embeddings represent words as low-dimensional real-valued vectors. There are two kinds of word embeddings: non-contextual and contextual embeddings.

Non-contextual embeddings do not consider the context information of a token; that is, these models only map the token into a distributed embedding space. Thus, each word $x$ in the vocabulary $V$ is assigned a specific vector $e_x \in \mathbb{R}^d$, where $d$ is the dimension of the embedding. These embeddings cannot model complex characteristics of word use or polysemy.

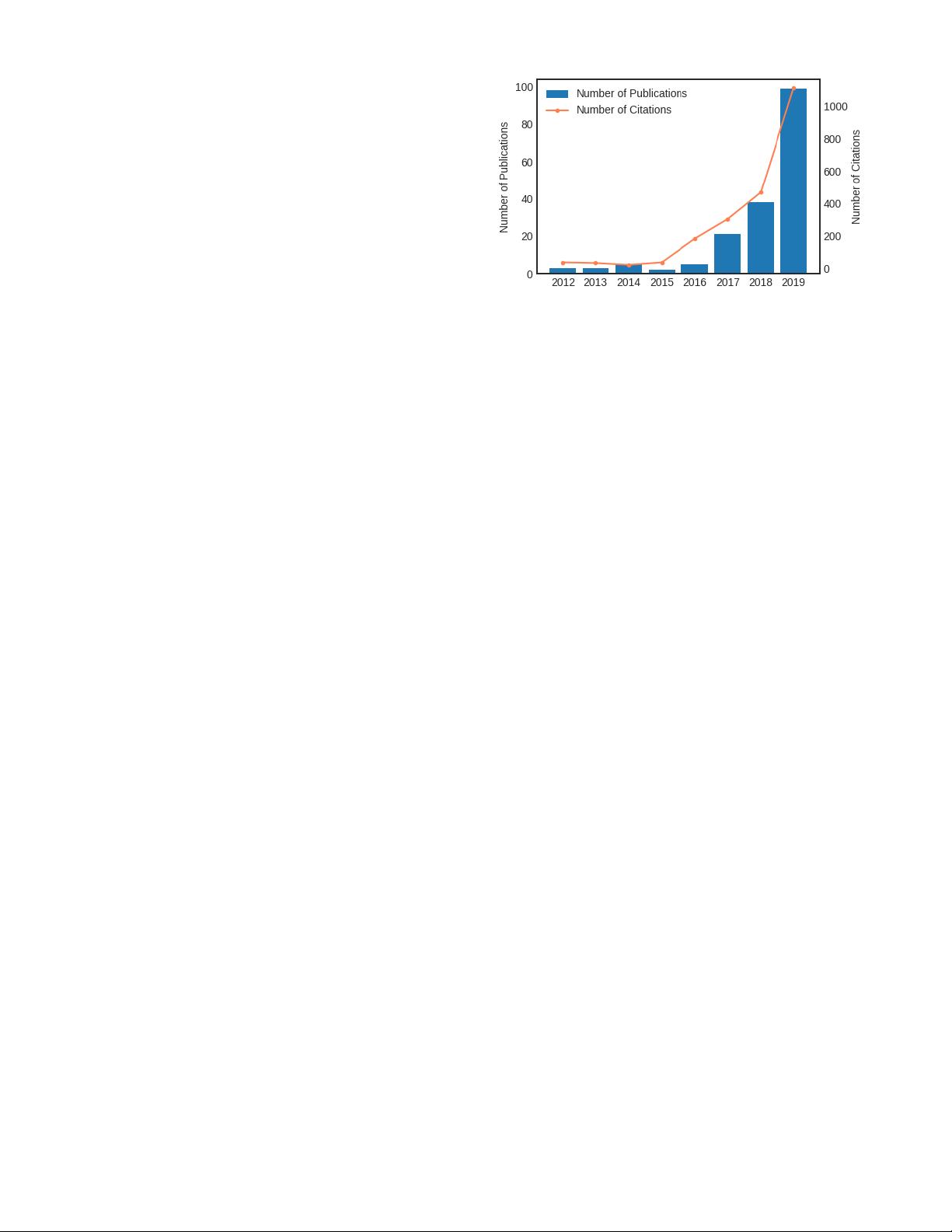

Fig. 2: Number of publications and citations on self-supervised learning from 2012 to 2019. Self-supervised learning has gained huge attention in recent years. Data from Microsoft Academic Graph [121]. This only includes papers containing the complete keyword “self-supervised learning”, so the real number should be even larger.

To model both complex characteristics of word use and polysemy, contextual embeddings are proposed. For a text sequence $x_1, x_2, \ldots, x_N$ with $x_n \in V$, the contextual embedding of $x_n$ depends on the whole sequence:

$$[e_1, e_2, \ldots, e_N] = f(x_1, x_2, \ldots, x_N),$$

where $f(\cdot)$ is the embedding function. Since the embedding $e_i$ of a given token $x_i$ can differ when $x_i$ appears in different contexts, $e_i$ is called a contextual embedding. This kind of embedding can distinguish the semantics of words in different contexts.
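The difference between the two kinds of embedding can be made concrete with a toy sketch. Everything in it is an illustrative assumption rather than a method from the surveyed papers: the table `STATIC`, its two-dimensional vectors, and the averaging rule used as the embedding function $f(\cdot)$ are hypothetical.

```python
# Toy illustration only: the vectors and the mixing rule are hypothetical.
STATIC = {
    "river": [1.0, 0.0],
    "bank": [0.5, 0.5],
    "loan": [0.0, 1.0],
}


def non_contextual(tokens):
    """Look up one fixed vector e_x per word x, ignoring the sentence."""
    return [list(STATIC[t]) for t in tokens]


def contextual(tokens):
    """Toy f(x_1, ..., x_N): mix each static vector with the sentence mean."""
    static = non_contextual(tokens)
    dim = len(static[0])
    mean = [sum(v[k] for v in static) / len(static) for k in range(dim)]
    return [[0.5 * v[k] + 0.5 * mean[k] for k in range(dim)] for v in static]


# "bank" always gets the same non-contextual vector ...
same = non_contextual(["river", "bank"])[1] == non_contextual(["bank", "loan"])[0]
# ... but its contextual vector changes with the surrounding words.
differs = contextual(["river", "bank"])[1] != contextual(["bank", "loan"])[0]
```

Real contextual encoders replace the averaging rule with a learned sequence model; the sketch only shows that the output for a token is a function of the whole sequence.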

Distributed word representations represent each word as a dense, real-valued, low-dimensional vector. The first generation of word embeddings was introduced with the neural network language model (NNLM) [10]. For NNLM, most of the complexity is caused by the non-linear hidden layer in the model. Mikolov et al. proposed the Word2Vec model [88], [89] to learn word representations efficiently. It comes in two variants: the Continuous Bag-of-Words model (CBOW) and the Continuous Skip-gram model (SG). Word2Vec is a context prediction model and one of the most popular implementations for generating non-contextual word embeddings in NLP. In first-generation word embeddings, the same word always has the same embedding. Since a word can have multiple senses, second-generation word embedding methods were proposed, in which each word token has its own embedding. These embeddings are also called contextualized word embeddings since the embedding of a word token depends on its context. ELMo (Embeddings from Language Model) [102] is one implementation that generates such contextual word embeddings. ELMo is an RNN-based bidirectional language model; it learns multiple embeddings for each word token, and how to combine them is decided by the downstream task. ELMo is a feature-based approach: the model is used as a feature extractor to produce word embeddings, which are then sent to the downstream task model. The parameters of the extractor are fixed; only the parameters of the downstream model are trained.
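As a minimal sketch of how CBOW and Skip-gram turn raw text into self-supervised training examples, the code below builds (context, center) examples from a token list. The function names and the `window` parameter are hypothetical, and the sketch covers only example construction, not the training of the embeddings.

```python
def skipgram_pairs(tokens, window=2):
    """Skip-gram: each center word predicts every word within the window."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def cbow_examples(tokens, window=2):
    """CBOW: the words within the window jointly predict the center word."""
    examples = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        if context:
            examples.append((context, center))
    return examples


sentence = ["self", "supervised", "learning"]
sg = skipgram_pairs(sentence, window=1)
cbow = cbow_examples(sentence, window=1)
```

In both cases the "labels" come from the text itself, which is what makes the task self-supervised.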


Recently, BERT (Bidirectional Encoder Representations from Transformers) [33] brought large improvements on 11 NLP tasks. Different from feature-based approaches like ELMo, BERT is a fine-tuning approach. The model is first pre-trained on a large corpus through self-supervised learning and then fine-tuned with labeled data. As the name suggests, BERT is the encoder of the Transformer. In the training stage, BERT masks some tokens in the sentence and is trained to predict the masked tokens. To use BERT, one initializes the model with the pre-trained weights and fine-tunes it to solve downstream tasks.
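The masking step can be sketched as follows. This is a simplified illustration, not BERT's actual preprocessing: the function `mask_tokens`, the default `mask_prob` of 0.15, and the fixed `seed` are assumptions chosen for the example, and the sketch always substitutes the `[MASK]` symbol for a selected token.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Return (masked_input, labels); labels are None where nothing is predicted."""
    rng = random.Random(seed)  # fixed seed only to keep the example reproducible
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)   # the model sees the mask symbol ...
            labels.append(tok)    # ... and must recover the original token
        else:
            masked.append(tok)
            labels.append(None)
    return masked, labels


tokens = "the model predicts the masked word".split()
masked, labels = mask_tokens(tokens, mask_prob=0.5, seed=1)
```

The training loss is then computed only at positions where `labels` is not `None`.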

2.2 Representation Learning in CV

Computer vision is one of the fields that has benefited most from deep learning. In the past few years, researchers have developed a range of efficient network architectures for supervised tasks, many of which have also proved useful for self-supervised tasks. In this section, we introduce the ResNet architecture [54], which is the backbone of a large part of the self-supervised techniques for visual representation.

Since AlexNet [73], CNN architectures have been going deeper and deeper. While AlexNet had only five convolutional layers, the VGG network [120] and GoogLeNet (also codenamed Inception v1) [127] had 19 and 22 layers, respectively.

Evidence [120], [127] reveals that network depth is of crucial importance. Driven by the significance of depth, a question arises: is learning better networks as easy as stacking more layers? An obstacle to answering this question was the notorious problem of vanishing/exploding gradients [12], which hampers convergence from the beginning. This problem, however, has been largely addressed by normalized initialization [79], [116] and intermediate normalization layers [61], which enable networks with tens of layers to start converging under stochastic gradient descent (SGD) with backpropagation [78].

When deeper networks can start converging, a degradation problem is exposed: as the network depth increases, accuracy becomes saturated (which might be unsurprising) and then degrades rapidly. Unexpectedly, such degradation is not caused by overfitting, and adding more layers to a suitably deep model leads to higher training error.

Residual neural network (ResNet), proposed by He et al. [54], effectively resolved this problem. Instead of asking every few stacked layers to directly learn a desired underlying mapping, the authors of [54] design a residual mapping architecture. The core idea of ResNet is the introduction of shortcut connections (Fig. 2), which skip over one or more layers.

A building block is defined as:

$$y = F(x, \{W_i\}) + x. \tag{1}$$

Here $x$ and $y$ are the input and output vectors of the layers considered. The function $F(x, \{W_i\})$ represents the residual mapping to be learned. For the example in Fig. 2 that has two layers, $F = W_2 \sigma(W_1 x)$, in which $\sigma$ denotes ReLU [91] and the biases are omitted to simplify notation. The operation $F + x$ is performed by a shortcut connection and element-wise addition.
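The two-layer block of Eq. (1) can be written out directly. The sketch below uses plain Python lists instead of a tensor library; the helper names `relu`, `matvec`, and `residual_block` and the example weights are hypothetical, and it assumes, as Eq. (1) requires, that $F(x)$ has the same dimension as $x$.

```python
def relu(v):
    """Element-wise ReLU, the sigma in Eq. (1)."""
    return [max(0.0, a) for a in v]


def matvec(W, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(w * a for w, a in zip(row, v)) for row in W]


def residual_block(x, W1, W2):
    """Compute y = W2 * relu(W1 * x) + x (biases omitted, as in the text)."""
    f = matvec(W2, relu(matvec(W1, x)))       # residual mapping F(x, {W_i})
    return [fi + xi for fi, xi in zip(f, x)]  # shortcut: element-wise addition


identity = [[1.0, 0.0], [0.0, 1.0]]
zeros = [[0.0, 0.0], [0.0, 0.0]]

y = residual_block([1.0, -2.0], identity, identity)
y_skip = residual_block([1.0, -2.0], identity, zeros)
```

With `W2` set to zero the block reduces to the identity mapping, which is why adding such blocks need not increase training error.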

Because of its compelling results, ResNet quickly became one of the most popular architectures in various computer vision tasks. Since then, the ResNet architecture has drawn extensive attention from researchers, and multiple variants based on ResNet have been proposed, including ResNeXt [145], Densely Connected CNN [59], and wide residual networks [153].

2.3 Representation Learning on Graphs

As a ubiquitous data structure, graphs are extensively employed in a multitude of fields and have become the backbone of many systems. The central problem in machine learning on graphs is to find a way to represent graph structure so that it can be easily utilized by machine learning models [51]. To tackle this problem, researchers have proposed a series of methods for graph representation learning at the node level and graph level, which has become a research spotlight recently.

We first define several basic terminologies. Generally, a graph is defined as $G = (V, E, X)$, where $V$ denotes a set of vertices, $|V|$ denotes the number of vertices in the graph, and $E \subseteq (V \times V)$ denotes a set of edges connecting the vertices. $X \in \mathbb{R}^{|V| \times d}$ is the optional original vertex feature matrix. When input features are unavailable, $X$ is set as an orthogonal matrix or initialized from a normal distribution, etc., in order to make the input node features less correlated. The problem of node representation learning is to learn latent node representations $Z \in \mathbb{R}^{|V| \times d_z}$; it is also termed network representation learning, network embedding, etc. There are also graph-level representation learning problems, which aim to learn an embedding for the whole graph.
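A minimal sketch of the definition $G = (V, E, X)$ follows. The function `build_graph` is hypothetical; when no features are supplied it falls back to the identity matrix, which is one simple instance of the orthogonal initialization mentioned above (so $d = |V|$ in that case).

```python
def build_graph(num_vertices, edges, features=None):
    """Return (V, E, X) for a graph with vertices 0 .. num_vertices - 1."""
    V = list(range(num_vertices))
    E = set()
    for u, v in edges:
        if u not in range(num_vertices) or v not in range(num_vertices):
            raise ValueError(f"edge ({u}, {v}) refers to an unknown vertex")
        E.add((u, v))
    if features is None:
        # No input features: use one-hot rows, i.e. an orthogonal matrix.
        X = [[1.0 if i == j else 0.0 for j in V] for i in V]
    else:
        if len(features) != num_vertices:
            raise ValueError("X must have one row per vertex")
        X = [list(row) for row in features]
    return V, E, X


V, E, X = build_graph(3, [(0, 1), (1, 2)])
```

Node representation learning then maps each row of `X`, together with the structure in `E`, to a row of the latent matrix $Z$.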

In general, existing network embedding approaches are broadly categorized as (1) factorization-based approaches such as NetMF [104], [105], GraRep [18], HOPE [97], (2) shallow embedding approaches such as DeepWalk [101], LINE [128], HARP [21], and (3) neural network approaches [19], [82]. Recently, graph convolutional network (GCN) [70] and its multiple variants have become the dominant approaches in graph modeling, thanks to the utilization of graph convolution that effectively fuses graph topology and node features.
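To illustrate how a graph convolution fuses topology and features, the sketch below implements one layer of the commonly cited GCN propagation rule $H' = \sigma(\tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2} H W)$ with $\tilde{A} = A + I$. The rule is not stated in this survey and is assumed here from the usual formulation of [70]; the helper names and the toy inputs are hypothetical.

```python
import math


def matmul(A, B):
    """Dense matrix product for matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def gcn_layer(adj, H, W):
    """One graph convolution: normalize A + I, aggregate neighbors, then ReLU."""
    n = len(adj)
    # Add self-loops so each node keeps its own features.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    # Symmetric normalization D^{-1/2} (A + I) D^{-1/2}.
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    out = matmul(matmul(norm, H), W)  # topology (norm) meets features (H)
    return [[max(0.0, v) for v in row] for row in out]


adj = [[0.0, 1.0], [1.0, 0.0]]  # two connected nodes
H = [[1.0, 0.0], [0.0, 1.0]]    # one-hot input features
W = [[1.0, 0.0], [0.0, 1.0]]    # identity weights, for readability
Z = gcn_layer(adj, H, W)
```

After one layer each node's representation is a normalized average over itself and its neighbors, so connected nodes end up with similar rows in `Z`.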

However, the majority of advanced graph representation learning methods require external guidance like annotated labels. Many researchers endeavor to propose unsupervised algorithms [48], [52], [139], which do not rely on any external labels. Self-supervised learning also opens up an opportunity for effective utilization of the abundant unlabeled data [99], [125].

3 GENERATIVE SELF-SUPERVISED LEARNING

3.1 Auto-regressive (AR) Model

Auto-regressive (AR) models can be viewed as a “Bayes net structure” (directed graph model). The joint distribution can be factorized as a product of conditionals, so the maximum-likelihood objective is

$$\max_{\theta} \log p_{\theta}(x) = \max_{\theta} \sum_{t=1}^{T} \log p_{\theta}(x_t \mid x_{1:t-1}), \tag{2}$$

where the probability of each variable depends on the previous variables.
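The factorization can be checked numerically with a small sketch: the log-likelihood of a sequence is the sum of the log conditional probabilities of each element given its prefix. The conditional model `toy_cond_prob` is a hypothetical first-order rule over a two-symbol alphabet, standing in for a learned $p_{\theta}$.

```python
import math


def sequence_log_likelihood(tokens, cond_prob):
    """Sum over t of log p(x_t | x_{1:t-1}), the quantity maximized in Eq. (2)."""
    total = 0.0
    for t in range(len(tokens)):
        prefix = tuple(tokens[:t])  # all previous variables x_{1:t-1}
        total += math.log(cond_prob(tokens[t], prefix))
    return total


def toy_cond_prob(token, prefix):
    """Hypothetical model over {"a", "b"}: repeat the last symbol with prob. 0.9."""
    if not prefix:
        return 0.5
    return 0.9 if token == prefix[-1] else 0.1


ll = sequence_log_likelihood(["a", "a", "b"], toy_cond_prob)
joint = 0.5 * 0.9 * 0.1  # product of the same conditionals
```

Here `ll` equals `math.log(joint)` up to floating-point error, which is the product-of-conditionals factorization written in log space.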


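| Model | FOS | Type | Generator | Self-supervision | Pretext Task | Hard NS | Hard PS | NS strategy |
|---|---|---|---|---|---|---|---|---|
| GPT/GPT-2 [109], [110] | NLP | G | AR | Following words | Next word prediction | - | - | - |
| PixelCNN [134], [136] | CV | G | AR | Following pixels | Next pixel prediction | - | - | - |
| NICE [36] | CV | G | Flow based | Whole image | Image reconstruction | - | - | - |
| RealNVP [37] | CV | G | Flow based | Whole image | Image reconstruction | - | - | - |
| Glow [68] | CV | G | Flow based | Whole image | Image reconstruction | - | - | - |
| word2vec [88], [89] | NLP | G | AE | Context words | CBOW & SkipGram | × | × | End-to-end |
| FastText [15] | NLP | G | AE | Context words | CBOW | × | × | End-to-end |
| DeepWalk-based [47], [101], [128] | Graph | G | AE | Graph edges | Link prediction | × | × | End-to-end |
| VGAE [71] | Graph | G | AE | Graph edges | Link prediction | × | × | End-to-end |
| BERT [33] | NLP | G | AE | Masked words, Sentence topic | Masked language model, Next sentence prediction | - | - | - |
| SpanBERT [65] | NLP | G | AE | Masked words | Masked language model | - | - | - |
| ALBERT [74] | NLP | G | AE | Masked words, Sentence order | Masked language model, Sentence order prediction | - | - | - |
| ERNIE [126] | NLP | G | AE | Masked words, Sentence topic | Masked language model, Next sentence prediction | - | - | - |
| VQ-VAE 2 [112] | CV | G | AE | Whole image | Image reconstruction | - | - | - |
| XLNet [149] | NLP | G | AE+AR | Masked words | Permutation language model | - | - | - |
| RelativePosition [38] | CV | C | - | Spatial relations (Context-Instance) | Relative position prediction | - | - | - |
| CDJP [67] | CV | C | - | Spatial relations (Context-Instance) | Jigsaw + Inpainting + Colorization | × | × | End-to-end |
| PIRL [90] | CV | C | - | Spatial relations (Context-Instance) | Jigsaw | × | ✓ | Memory bank |
| RotNet [44] | CV | C | - | Spatial relations (Context-Instance) | Rotation prediction | - | - | - |
| Deep InfoMax [55] | CV | C | - | Belonging (Context-Instance) | MI maximization | × | × | End-to-end |
| AMDIM [6] | CV | C | - | Belonging (Context-Instance) | MI maximization | × | ✓ | End-to-end |
| CPC [96] | CV | C | - | Belonging (Context-Instance) | MI maximization | × | × | End-to-end |
| InfoWord [72] | NLP | C | - | Belonging (Context-Instance) | MI maximization | × | × | End-to-end |
| DGI [139] | Graph | C | - | Belonging (Context-Instance) | MI maximization | ✓ | × | End-to-end |
| InfoGraph [123] | Graph | C | - | Belonging (Context-Instance) | MI maximization | × | × | End-to-end (batch-wise) |
| S²GRL [99] | Graph | C | - | Belonging (Context-Instance) | MI maximization | × | × | End-to-end |
| Pre-trained GNN [57] | Graph | C | - | Belonging, Node attributes | MI maximization, Masked attribute prediction | × | × | End-to-end |
| DeepCluster [20] | CV | C | - | Similarity (Context-Context) | Cluster discrimination | - | - | - |
| Local Aggregation [160] | CV | C | - | Similarity (Context-Context) | Cluster discrimination | - | - | - |
| ClusterFit [147] | CV | C | - | Similarity (Context-Context) | Cluster discrimination | - | - | - |
| InstDisc [144] | CV | C | - | Identity (Context-Context) | Instance discrimination | × | × | Memory bank |
| CMC [130] | CV | C | - | Identity (Context-Context) | Instance discrimination | × | ✓ | End-to-end |
| MoCo [53] | CV | C | - | Identity (Context-Context) | Instance discrimination | × | × | Momentum |
| MoCo v2 [25] | CV | C | - | Identity (Context-Context) | Instance discrimination | × | ✓ | Momentum |
| SimCLR [22] | CV | C | - | Identity (Context-Context) | Instance discrimination | × | ✓ | End-to-end (batch-wise) |
| GCC [63] | Graph | C | - | Identity (Context-Context) | Instance discrimination | × | ✓ | Momentum |
| GAN [46] | CV | G-C | AE | Whole image | Image reconstruction | - | - | - |
| Adversarial AE [86] | CV | G-C | AE | Whole image | Image reconstruction | - | - | - |
| BiGAN/ALI [39], [42] | CV | G-C | AE | Whole image | Image reconstruction | - | - | - |
| BigBiGAN [40] | CV | G-C | AE | Whole image | Image reconstruction | - | - | - |
| Colorization [75] | CV | G-C | AE | Image color | Colorization | - | - | - |
| Inpainting [98] | CV | G-C | AE | Parts of images | Inpainting | - | - | - |
| Super-resolution [80] | CV | G-C | AE | Details of images | Super-resolution | - | - | - |
| ELECTRA [27] | NLP | G-C | AE | Masked words | Replaced token detection | ✓ | × | End-to-end |
| WKLM [146] | NLP | G-C | AE | Masked entities | Replaced entity detection | ✓ | × | End-to-end |
| ANE [29] | Graph | G-C | AE | Graph edges | Link prediction | - | - | - |
| GraphGAN [140] | Graph | G-C | AE | Graph edges | Link prediction | - | - | - |
| GraphSGAN [34] | Graph | G-C | AE | Graph nodes | Node classification | - | - | - |

TABLE 1: An overview of recent self-supervised representation learning. For acronyms used, “FOS” refers to fields of study; “NS” refers to negative samples; “PS” refers to positive samples; “MI” refers to mutual information. For letters in “Type”: G, Generative; C, Contrastive; G-C, Generative-Contrastive (Adversarial).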

In NLP, the objective of auto-regressive language modeling is usually to maximize the likelihood under the forward autoregressive factorization [149]. GPT [109] and GPT-2 [110] use the Transformer decoder architecture [137] for language modeling. Different from GPT, GPT-2 removes the fine-tuning processes for different tasks. In order to learn unified representations that generalize across different tasks, GPT-2 models $p(\text{output} \mid \text{input}, \text{task})$, which means that, given different tasks, the same inputs can have different outputs.

Auto-regressive models have also been employed in computer vision, such as PixelRNN [136] and PixelCNN [134]. The general idea is to use auto-regressive
