
ML in NLP June 02

NASSLI 1

Machine Learning in Natural Language Processing

Fernando Pereira

University of Pennsylvania

NASSLLI, June 2002

Thanks to: William Bialek, John Lafferty, Andrew McCallum, Lillian Lee, Lawrence Saul, Yves Schabes, Stuart Shieber, Naftali Tishby

ML in NLP

Introduction


Why ML in NLP
• Examples are easier to create than rules
• Rule writers miss low-frequency cases
• Many factors involved in language interpretation
• People do it
  • AI
  • Cognitive science
• Let the computer do it
  • Moore’s law
  • Storage
  • Lots of data


Classification
• Document topic (example labels: "politics, business"; "national, environment")
• Word sense (example: treasury bonds ↔ chemical bonds)


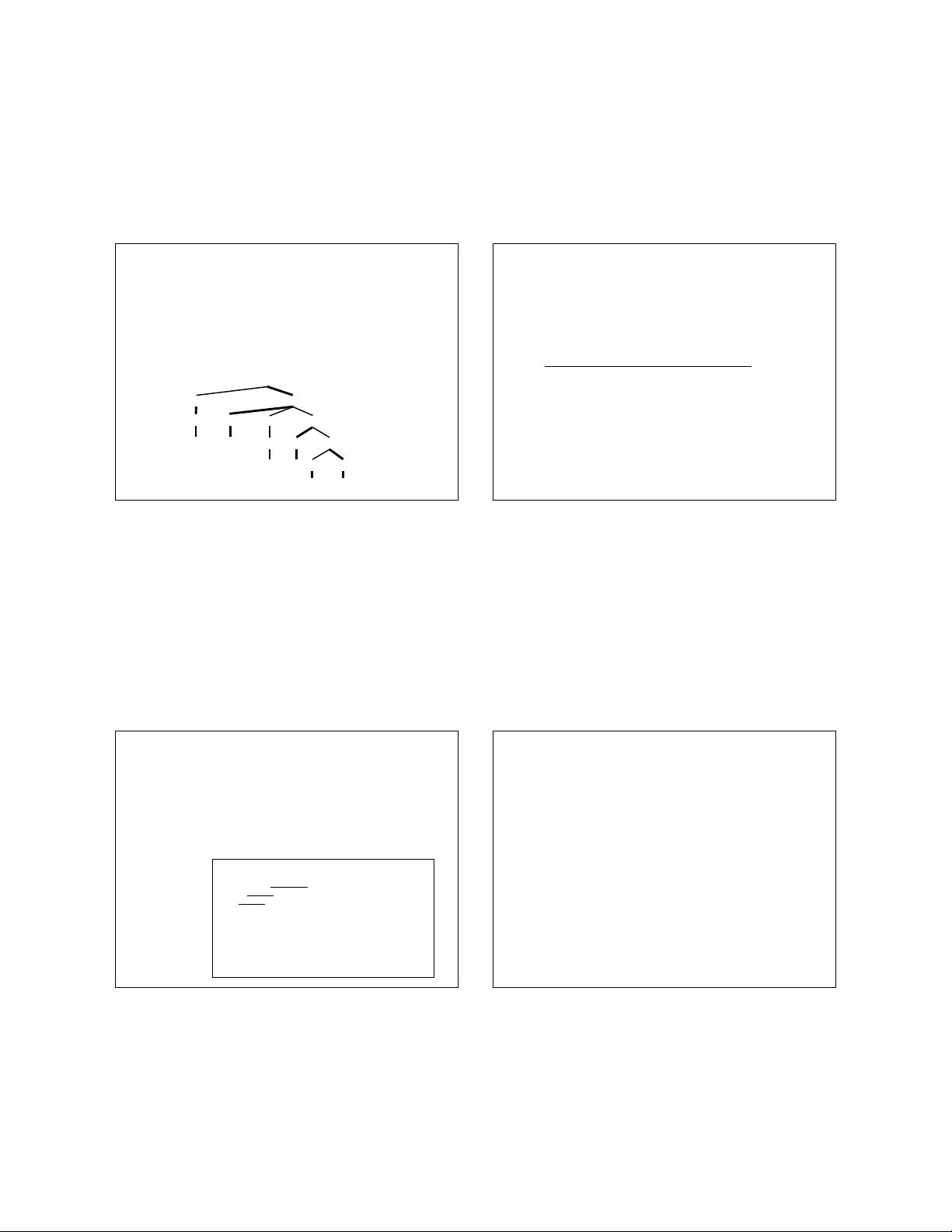

N(bin)

S(dumped)

NP-C(workers)

N(workers)

workers

VP(dumped)

V(dumped)

dumped

NP-C(sacks)

N(sacks)

sacks

PP(into)

P(into) NP-C(bin)

D(a)

a bin

into

Analysis

n Tagging

n Parsing

laterdecadesupshowthatsymptomscausing

JJNNSRPVBPWDTNNSVBG


Language Modeling
• Is this a likely English sentence?
• Disambiguate noisy transcription
  It’s easy to wreck a nice beach
  It’s easy to recognize speech

$$\frac{P(\text{colorless green ideas sleep furiously})}{P(\text{furiously sleep ideas green colorless})} \approx 2 \times 10^{5}$$
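One standard way to assign sentence probabilities like these is the chain rule followed by a first-order Markov (bigram) approximation. The slide does not say which model produced the ratio, so treat this as an illustration only; $w_0$ is an assumed sentence-start symbol:

```latex
P(w_1 w_2 \cdots w_n) = \prod_{i=1}^{n} P(w_i \mid w_0 w_1 \cdots w_{i-1})
  \approx \prod_{i=1}^{n} P(w_i \mid w_{i-1}), \qquad w_0 = \langle s \rangle
```

Under such a model each side of the ratio is a product of five conditional probabilities, one per word.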


Inference
• Translation
  ligações covalentes ⇒ covalent bonds
  obrigações do tesouro ⇒ treasury bonds
• Information extraction

Sara Lee to Buy 30% of DIM

Chicago, March 3 - Sara Lee Corp said it agreed to buy a 30 percent interest in Paris-based DIM S.A., a subsidiary of BIC S.A., at cost of about 20 million dollars. DIM S.A., a hosiery manufacturer, had sales of about 2 million dollars. The investment includes the purchase of 5 million newly issued DIM shares valued at about 5 million dollars, and a loan of about 15 million dollars, it said. The loan is convertible into an additional 16 million DIM shares, it noted. The proposed agreement is subject to approval by the French government, it said.

(acquirer: Sara Lee Corp; acquired: DIM S.A.)


Machine Learning Approach
• Algorithms that write programs
• Specify
  • Form of output programs
  • Accuracy criterion
• Input: set of training examples
• Output: program that performs as accurately as possible on the training examples
• But will it work on new examples?
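A minimal sketch of this recipe in a hypothetical toy setting: the "form of output programs" is a one-feature threshold rule, the accuracy criterion is training accuracy, and the learner simply tries every candidate threshold. The names `learn_threshold` and `Example` and the data are illustrative assumptions, not from the slides.

```python
from typing import Callable, List, Tuple

# Hypothetical toy setting: each instance is one numeric feature value with a 0/1 label.
Example = Tuple[float, int]


def learn_threshold(train: List[Example]) -> Callable[[float], int]:
    """Return the program 'predict 1 iff x >= t' with the best training accuracy.

    Form of output programs: one-feature threshold rules.
    Accuracy criterion: fraction of training examples labeled correctly.
    """
    # Candidate thresholds: every observed value, plus +inf (always predict 0).
    candidates = sorted({x for x, _ in train}) + [float("inf")]

    def accuracy(t: float) -> float:
        return sum((x >= t) == bool(y) for x, y in train) / len(train)

    best_t = max(candidates, key=accuracy)  # ties go to the smallest threshold
    return lambda x: int(x >= best_t)


train = [(0.1, 0), (0.35, 1), (0.4, 0), (0.8, 1), (0.9, 1)]
program = learn_threshold(train)
print(program(0.5), program(0.2))  # 1 0
```

Here thresholds 0.35 and 0.8 tie on training accuracy (4 of 5) yet disagree on a new example such as 0.6, a small instance of the question in the last bullet.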


Fundamental Questions
• Generalization: is the learned program useful on new examples?
  • Statistical learning theory: quantifiable tradeoffs between number of examples, complexity of program class, and generalization error
• Computational tractability: can we find a good program quickly?
  • If not, can we find a good approximation?
• Adaptation: can the program learn quickly from new evidence?
  • Information-theoretic analysis: relationship between adaptation and compression
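One concrete instance of such a tradeoff is a standard textbook bound, not taken from these slides. For a finite class H of programs and m i.i.d. training examples, Hoeffding's inequality plus a union bound give, with probability at least 1 − δ, |true error − training error| ≤ sqrt(ln(2|H|/δ) / (2m)) for every program in H at once. The sketch below only evaluates that formula; the function name is our own.

```python
import math


def generalization_gap(num_programs: int, num_examples: int, delta: float = 0.05) -> float:
    """Hoeffding + union bound for a finite program class.

    With probability >= 1 - delta over an i.i.d. sample of num_examples,
    every program in a class of num_programs programs satisfies
    |true error - training error| <= the returned value.
    """
    return math.sqrt(math.log(2 * num_programs / delta) / (2 * num_examples))


# More examples shrink the gap; a larger program class widens it.
for h, m in [(1_000, 1_000), (1_000, 100_000), (10**9, 100_000)]:
    print(f"|H|={h:>13,} m={m:>7,} gap<={generalization_gap(h, m):.3f}")
```

The bound grows only logarithmically in |H| but shrinks like 1/sqrt(m), which is why more data can pay for a richer program class.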

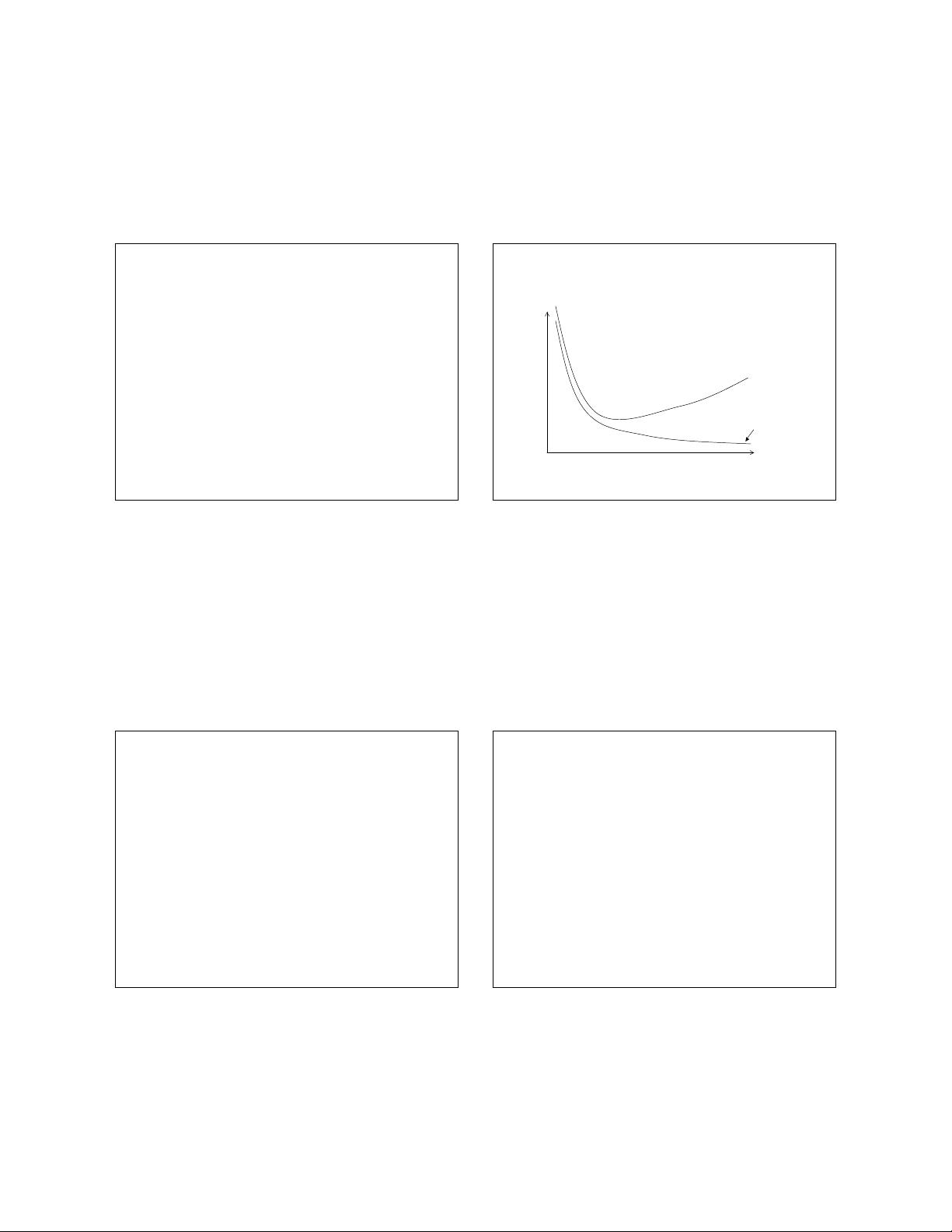

ML in NLP

Learning Tradeoffs

Program class complexity

Error of best program

training

testing on new examples

Overfitting

Rote learning
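The ends of this tradeoff can be sketched on a hypothetical toy tagging task. A rote learner memorizes every training word: it is perfect on the training set but says nothing about unseen words. A much smaller program class, one tag per two-letter suffix, makes training errors but still labels new words. The data and both learners are illustrative assumptions.

```python
from collections import Counter, defaultdict
from typing import Callable, Dict, List, Optional, Tuple

Pair = Tuple[str, str]  # (word, tag); toy data, hypothetical


def rote_learner(train: List[Pair]) -> Callable[[str], Optional[str]]:
    """Memorize each training word's tag; unseen words get no answer (None)."""
    table = {word: tag for word, tag in train}
    return table.get


def suffix_learner(train: List[Pair]) -> Callable[[str], Optional[str]]:
    """Smaller program class: one tag per 2-letter suffix (its most frequent tag)."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for word, tag in train:
        counts[word[-2:]][tag] += 1
    table = {suf: c.most_common(1)[0][0] for suf, c in counts.items()}
    return lambda word: table.get(word[-2:])


def accuracy(predict: Callable[[str], Optional[str]], data: List[Pair]) -> float:
    return sum(predict(w) == t for w, t in data) / len(data)


train = [("walking", "VBG"), ("talking", "VBG"), ("king", "NN"),
         ("walked", "VBD"), ("talked", "VBD"), ("bed", "NN")]
unseen = [("jumping", "VBG"), ("jumped", "VBD")]

rote, by_suffix = rote_learner(train), suffix_learner(train)
print(accuracy(rote, train), accuracy(rote, unseen))            # 1.0 0.0
print(accuracy(by_suffix, train), accuracy(by_suffix, unseen))  # 4 of 6 correct, then 1.0
```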


Machine Learning Methods
• Classifiers
  • Document classification
  • Disambiguation
• Structured models
  • Tagging
  • Parsing
  • Extraction
• Unsupervised learning
  • Generalization
  • Structure induction


Jargon
• Instance: event type of interest
  • Document and its class
  • Sentence and its analysis
  • …
• Supervised learning: learn classification function from hand-labeled instances
• Unsupervised learning: exploit correlations to organize training instances
• Generalization: how well does it work on unseen data?
• Features: map instance to set of elementary events
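A minimal sketch of a feature map for a word-sense instance. The elementary events chosen here are an assumption of ours, not the slides': the target word itself plus each word within a small window around it, marked as left or right context.

```python
from typing import List, Set


def features(tokens: List[str], i: int, window: int = 2) -> Set[str]:
    """Map the instance 'word at position i in tokens' to elementary events.

    Hypothetical feature scheme: the target word itself, plus each
    neighbor within `window` positions, marked L (left) or R (right).
    """
    feats = {f"word={tokens[i].lower()}"}
    for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
        if j != i:
            side = "L" if j < i else "R"
            feats.add(f"{side}:{tokens[j].lower()}")
    return feats


sentence = "The Treasury bonds rallied today".split()
print(sorted(features(sentence, 2)))
# ['L:the', 'L:treasury', 'R:rallied', 'R:today', 'word=bonds']
```

Returning a set rather than a count vector matches the "set of elementary events" view; a classifier then only needs to know which events occurred.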


Classification Ideas
• Represent instances by feature vectors
  • Content
  • Context
• Learn function from feature vectors
  • Class
  • Class-probability distribution
• Redundancy is our friend: many weak clues
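One classic way to combine many weak clues into a class-probability distribution is a naive Bayes classifier over feature occurrences. The slides do not prescribe it, so treat it as a swapped-in illustration. Add-one smoothing keeps a clue never seen with a class from zeroing out that class. The training data and function names are hypothetical.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Set, Tuple

Instance = Tuple[Set[str], str]  # (feature set, class label)


def train_nb(data: List[Instance]):
    """Collect class counts and per-class feature counts."""
    class_counts = Counter(label for _, label in data)
    feat_counts: Dict[str, Counter] = defaultdict(Counter)
    vocab: Set[str] = set()
    for feats, label in data:
        feat_counts[label].update(feats)
        vocab |= feats
    return class_counts, feat_counts, vocab


def posterior(model, feats: Set[str]) -> Dict[str, float]:
    """P(class | features), treating features as independent given the class."""
    class_counts, feat_counts, vocab = model
    total = sum(class_counts.values())
    log_scores = {}
    for c, n_c in class_counts.items():
        denom = sum(feat_counts[c].values()) + len(vocab)  # add-one smoothing
        log_scores[c] = math.log(n_c / total) + sum(
            math.log((feat_counts[c][f] + 1) / denom)
            for f in feats if f in vocab)  # ignore features never seen in training
    # Normalize in log space to avoid underflow with many weak clues.
    top = max(log_scores.values())
    z = sum(math.exp(s - top) for s in log_scores.values())
    return {c: math.exp(s - top) / z for c, s in log_scores.items()}


data = [({"interest", "yield", "treasury"}, "finance"),
        ({"yield", "maturity", "issue"}, "finance"),
        ({"covalent", "atom", "molecule"}, "chemistry"),
        ({"atom", "electron", "energy"}, "chemistry")]
model = train_nb(data)
p = posterior(model, {"treasury", "yield", "bonds"})
print(p)
```

Neither "treasury" nor "yield" is decisive alone, but together they give "finance" a posterior of 6/7 on this toy data.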


Structured Model Ideas
• Interdependent decisions
  • Successive parts-of-speech
  • Parsing/generation steps
  • Lexical choice
    • Parsing
    • Translation
• Combining decisions
  • Sequential decisions
  • Generative models
  • Constraint satisfaction
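Sequential decisions in a generative model can be combined with the Viterbi algorithm. It finds the most probable tag sequence under a hidden Markov model (HMM) without enumerating every sequence. This is a standard technique offered as an illustration. The two-tag set and all probabilities below are made-up assumptions.

```python
import math
from typing import Dict, List


def viterbi(words: List[str], tags: List[str],
            start: Dict[str, float],
            trans: Dict[str, Dict[str, float]],
            emit: Dict[str, Dict[str, float]]) -> List[str]:
    """Most probable tag sequence under an HMM, computed in log space."""
    def lg(p: float) -> float:
        return math.log(p) if p > 0 else -math.inf

    # best[i][t]: log prob of the best tag path for words[:i+1] ending in tag t
    best = [{t: lg(start[t]) + lg(emit[t].get(words[0], 0.0)) for t in tags}]
    back: List[Dict[str, str]] = [{}]
    for i in range(1, len(words)):
        best.append({})
        back.append({})
        for t in tags:
            # Best previous tag given that the current tag is t.
            prev = max(tags, key=lambda s: best[i - 1][s] + lg(trans[s][t]))
            best[i][t] = (best[i - 1][prev] + lg(trans[prev][t])
                          + lg(emit[t].get(words[i], 0.0)))
            back[i][t] = prev
    # Follow back-pointers from the best final tag.
    path = [max(tags, key=lambda t: best[-1][t])]
    for i in range(len(words) - 1, 0, -1):
        path.append(back[i][path[-1]])
    return path[::-1]


tags = ["N", "V"]
start = {"N": 0.8, "V": 0.2}
trans = {"N": {"N": 0.3, "V": 0.7}, "V": {"N": 0.8, "V": 0.2}}
emit = {"N": {"workers": 0.5, "sacks": 0.4, "dumped": 0.1},
        "V": {"dumped": 0.9, "sacks": 0.1}}
print(viterbi("workers dumped sacks".split(), tags, start, trans, emit))  # ['N', 'V', 'N']
```

The tag of "sacks" depends on the decision made for "dumped", which is exactly the kind of interdependence listed above.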


Unsupervised Learning Ideas
• Clustering: class induction
• Latent variables
  I’m thinking of sports ⇒ more sporty words
• Distributional regularities
  • Know words by the company they keep
• Data compression
• Infer dependencies among variables: structure learning
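"Know words by the company they keep" can be made concrete by representing each word by counts of its neighbors and comparing words by cosine similarity. This is a minimal sketch on a made-up corpus with an assumed ±2-word window.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List


def context_vectors(sentences: List[List[str]], window: int = 2) -> Dict[str, Counter]:
    """Count the words appearing within `window` positions of each word."""
    vecs: Dict[str, Counter] = defaultdict(Counter)
    for sent in sentences:
        for i, w in enumerate(sent):
            for j in range(max(0, i - window), min(len(sent), i + window + 1)):
                if j != i:
                    vecs[w][sent[j]] += 1
    return vecs


def cosine(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


corpus = [s.split() for s in [
    "the team won the match", "the team won the game",
    "the bank raised the rate", "the bank raised the price"]]
vecs = context_vectors(corpus)
print(cosine(vecs["match"], vecs["game"]), cosine(vecs["match"], vecs["rate"]))
```

"match" and "game" share all their contexts here, while "match" and "rate" share only "the". Clustering such vectors is one route to the class induction in the first bullet.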


Methodological Detour
• Empiricist/information-theoretic view: words combine following their associations in previous material
• Rationalist/generative view: words combine according to a formal grammar in the class of possible natural-language grammars


Chomsky’s Challenge to Empiricism

(1) Colorless green ideas sleep furiously.
(2) Furiously sleep ideas green colorless.

… It is fair to assume that neither sentence (1) nor (2) (nor indeed any part of these sentences) has ever occurred in an English discourse. Hence, in any statistical model for grammaticalness, these sentences will be ruled out on identical grounds as equally ‘remote’ from English. Yet (1), though nonsensical, is grammatical, while (2) is not.

Chomsky 57


The Return of Empiricism
• Empiricist methods work:
  • Markov models can capture a surprising fraction of the unpredictability in language
  • Statistical information retrieval methods beat alternatives
  • Statistical parsers are more accurate than competitors based on rationalist methods
  • Machine-learning and statistical techniques come close to human performance in part-of-speech tagging and sense disambiguation
• Just engineering tricks?


Unseen Events
• Chomsky’s implicit assumption: any model must assign zero probability to unseen events
  • naïve estimation of Markov model probabilities from frequencies
  • no latent (hidden) events
• Any such model overfits data: many events are likely to be missing in any finite sample
∴ The learned model cannot generalize to unseen data
∴ Support for poverty of the stimulus arguments
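The assumption can be reproduced with a maximum-likelihood bigram model estimated from raw frequencies. Any sentence containing a bigram absent from the training corpus gets probability exactly zero, so Chomsky's two sentences cannot be told apart. The tiny corpus and the helper names are made-up assumptions.

```python
from collections import Counter
from typing import List


def mle_bigram(corpus: List[List[str]]):
    """P(w | prev) = count(prev, w) / count(prev), with <s> as sentence start."""
    bigrams, histories = Counter(), Counter()
    for sent in corpus:
        toks = ["<s>"] + sent
        histories.update(toks[:-1])  # count each token used as a history
        bigrams.update(zip(toks[:-1], toks[1:]))
    return lambda prev, w: bigrams[(prev, w)] / histories[prev] if histories[prev] else 0.0


def sentence_prob(p, sent: List[str]) -> float:
    """Product of bigram probabilities over the sentence."""
    prob = 1.0
    for prev, w in zip(["<s>"] + sent[:-1], sent):
        prob *= p(prev, w)
    return prob


corpus = [s.split() for s in ["green ideas are new", "colorless gas leaks", "ideas sleep"]]
p = mle_bigram(corpus)
for s in ["colorless green ideas sleep furiously", "furiously sleep ideas green colorless"]:
    print(s, "->", sentence_prob(p, s.split()))  # both 0.0
```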


The Science of Modeling
• Probability estimates can be smoothed to accommodate unseen events
• Redundancy in language supports effective statistical inference procedures
  ∴ the stimulus is richer than it might seem
• Statistical learning theory: generalization ability of a model class can be measured independently of model representation
• Beyond Markov models: effects of latent conditioning variables can be estimated from data
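Smoothing removes the zeros. This sketch uses add-one (Laplace) smoothing, the simplest such scheme. It is a stand-in chosen for brevity, not the latent-variable models the last bullet refers to, and the corpus is made up. Both of Chomsky's sentences now get nonzero probability. On this toy corpus the original order scores higher only because the corpus happens to contain "green ideas" and "ideas sleep".

```python
from collections import Counter
from typing import List


def laplace_bigram(corpus: List[List[str]]):
    """P(w | prev) = (count(prev, w) + 1) / (count(prev) + V)."""
    bigrams, histories, vocab = Counter(), Counter(), set()
    for sent in corpus:
        toks = ["<s>"] + sent
        vocab.update(sent)
        histories.update(toks[:-1])
        bigrams.update(zip(toks[:-1], toks[1:]))
    # +1 reserves probability mass for a single unknown-word type,
    # which every word unseen in training is treated as.
    V = len(vocab) + 1
    return lambda prev, w: (bigrams[(prev, w)] + 1) / (histories[prev] + V)


def sentence_prob(p, sent: List[str]) -> float:
    """Product of bigram probabilities over the sentence."""
    prob = 1.0
    for prev, w in zip(["<s>"] + sent[:-1], sent):
        prob *= p(prev, w)
    return prob


corpus = [s.split() for s in ["green ideas are new", "colorless gas leaks", "ideas sleep"]]
p = laplace_bigram(corpus)
s1 = "colorless green ideas sleep furiously".split()
s2 = "furiously sleep ideas green colorless".split()
p1, p2 = sentence_prob(p, s1), sentence_prob(p, s2)
print(p1, p2)
```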
