
arXiv:1409.1556v6 [cs.CV] 10 Apr 2015

Published as a conference paper at ICLR 2015

VERY DEEP CONVOLUTIONAL NETWORKS FOR LARGE-SCALE IMAGE RECOGNITION

Karen Simonyan∗ & Andrew Zisserman+

Visual Geometry Group, Department of Engineering Science, University of Oxford

{karen,az}@robots.ox.ac.uk

ABSTRACT

In this work we investigate the effect of the convolutional network depth on its accuracy in the large-scale image recognition setting. Our main contribution is a thorough evaluation of networks of increasing depth using an architecture with very small (3 × 3) convolution filters, which shows that a significant improvement on the prior-art configurations can be achieved by pushing the depth to 16–19 weight layers. These findings were the basis of our ImageNet Challenge 2014 submission, where our team secured the first and the second places in the localisation and classification tracks respectively. We also show that our representations generalise well to other datasets, where they achieve state-of-the-art results. We have made our two best-performing ConvNet models publicly available to facilitate further research on the use of deep visual representations in computer vision.

1 INTRODUCTION

Convolutional networks (ConvNets) have recently enjoyed great success in large-scale image and video recognition (Krizhevsky et al., 2012; Zeiler & Fergus, 2013; Sermanet et al., 2014; Simonyan & Zisserman, 2014), which has become possible due to large public image repositories, such as ImageNet (Deng et al., 2009), and high-performance computing systems, such as GPUs or large-scale distributed clusters (Dean et al., 2012). In particular, an important role in the advance of deep visual recognition architectures has been played by the ImageNet Large-Scale Visual Recognition Challenge (ILSVRC) (Russakovsky et al., 2014), which has served as a testbed for a few generations of large-scale image classification systems, from high-dimensional shallow feature encodings (Perronnin et al., 2010) (the winner of ILSVRC-2011) to deep ConvNets (Krizhevsky et al., 2012) (the winner of ILSVRC-2012).

With ConvNets becoming more of a commodity in the computer vision field, a number of attempts have been made to improve the original architecture of Krizhevsky et al. (2012) in a bid to achieve better accuracy. For instance, the best-performing submissions to ILSVRC-2013 (Zeiler & Fergus, 2013; Sermanet et al., 2014) utilised a smaller receptive window size and a smaller stride in the first convolutional layer. Another line of improvements dealt with training and testing the networks densely over the whole image and over multiple scales (Sermanet et al., 2014; Howard, 2014). In this paper, we address another important aspect of ConvNet architecture design: its depth. To this end, we fix other parameters of the architecture and steadily increase the depth of the network by adding more convolutional layers, which is feasible due to the use of very small (3 × 3) convolution filters in all layers.

As a result, we come up with significantly more accurate ConvNet architectures, which not only achieve state-of-the-art accuracy on the ILSVRC classification and localisation tasks, but are also applicable to other image recognition datasets, where they achieve excellent performance even when used as part of relatively simple pipelines (e.g. deep features classified by a linear SVM without fine-tuning). We have released our two best-performing models¹ to facilitate further research.

The rest of the paper is organised as follows. In Sect. 2, we describe our ConvNet configurations. The details of the image classification training and evaluation are then presented in Sect. 3, and the configurations are compared on the ILSVRC classification task in Sect. 4. Sect. 5 concludes the paper. For completeness, we also describe and assess our ILSVRC-2014 object localisation system in Appendix A, and discuss the generalisation of very deep features to other datasets in Appendix B. Finally, Appendix C contains the list of major paper revisions.

∗ current affiliation: Google DeepMind

+ current affiliation: University of Oxford and Google DeepMind

¹ http://www.robots.ox.ac.uk/~vgg/research/very_deep/

2 CONVNET CONFIGURATIONS

To measure the improvement brought by the increased ConvNet depth in a fair setting, all our ConvNet layer configurations are designed using the same principles, inspired by Ciresan et al. (2011) and Krizhevsky et al. (2012). In this section, we first describe a generic layout of our ConvNet configurations (Sect. 2.1) and then detail the specific configurations used in the evaluation (Sect. 2.2). Our design choices are then discussed and compared to the prior art in Sect. 2.3.

2.1 ARCHITECTURE

During training, the input to our ConvNets is a fixed-size 224 × 224 RGB image. The only pre-processing we do is subtracting the mean RGB value, computed on the training set, from each pixel. The image is passed through a stack of convolutional (conv.) layers, where we use filters with a very small receptive field: 3 × 3 (which is the smallest size to capture the notion of left/right, up/down, center). In one of the configurations we also utilise 1 × 1 convolution filters, which can be seen as a linear transformation of the input channels (followed by non-linearity). The convolution stride is fixed to 1 pixel; the spatial padding of conv. layer input is such that the spatial resolution is preserved after convolution, i.e. the padding is 1 pixel for 3 × 3 conv. layers. Spatial pooling is carried out by five max-pooling layers, which follow some of the conv. layers (not all the conv. layers are followed by max-pooling). Max-pooling is performed over a 2 × 2 pixel window, with stride 2.
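A minimal Python sketch of the two mechanical rules in this paragraph, under the settings stated above (3 × 3 convolutions with stride 1 and padding 1, 2 × 2 max-pooling with stride 2): per-channel mean subtraction, and the spatial resolution left after each of the five pooling stages. The helper names and the nested-list image format are hypothetical choices for illustration, not the authors' implementation.

```python
def mean_rgb(images):
    """Per-channel mean over every pixel of every training image (H x W x 3 nested lists)."""
    sums, count = [0.0, 0.0, 0.0], 0
    for image in images:
        for row in image:
            for pixel in row:
                for c in range(3):
                    sums[c] += pixel[c]
                count += 1
    return [s / count for s in sums]


def subtract_mean_rgb(image, mean):
    """The only pre-processing step: subtract the training-set mean RGB from each pixel."""
    return [[[pixel[c] - mean[c] for c in range(3)] for pixel in row] for row in image]


def conv_output_size(size: int, kernel: int = 3, stride: int = 1, padding: int = 1) -> int:
    """Standard convolution output-size formula; 3x3, stride 1, padding 1 preserves size."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_output_size(size: int, window: int = 2, stride: int = 2) -> int:
    """Output size of unpadded max-pooling; a 2x2 window with stride 2 halves the size."""
    return (size - window) // stride + 1


def trace_resolution(input_size: int = 224, convs_per_block: int = 2, num_pools: int = 5) -> list[int]:
    """Spatial size after each of the five conv. block + max-pool stages."""
    sizes, size = [], input_size
    for _ in range(num_pools):
        for _ in range(convs_per_block):
            size = conv_output_size(size)  # unchanged by padded 3x3 convs
        size = pool_output_size(size)      # halved by 2x2 / stride-2 pooling
        sizes.append(size)
    return sizes


if __name__ == "__main__":
    print(trace_resolution())  # [112, 56, 28, 14, 7]
```

Because the padded 3 × 3 convolutions preserve resolution, only the five max-pooling layers shrink the map, so every configuration ends with a 7 × 7 spatial grid regardless of how many conv. layers each block contains.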

A stack of convolutional layers (which has a different depth in different architectures) is followed by three Fully-Connected (FC) layers: the first two have 4096 channels each, and the third performs 1000-way ILSVRC classification and thus contains 1000 channels (one for each class). The final layer is the soft-max layer. The configuration of the fully connected layers is the same in all networks.
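The soft-max layer maps the 1000 outputs of FC-1000 to class probabilities. The sketch below is a standard numerically stable formulation in plain Python; subtracting the largest logit before exponentiating is a common implementation choice, not something the paper specifies, and the 4-way input is a toy stand-in for the 1000 ILSVRC classes.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable soft-max: subtracting the max logit avoids overflow in exp()."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    # Toy 4-way example standing in for the 1000-way ILSVRC output.
    probs = softmax([2.0, 1.0, 0.1, -1.0])
    print(sum(probs), probs.index(max(probs)))
```

The shift by the maximum does not change the result mathematically (it cancels in the ratio) but keeps `math.exp` from overflowing on large logits.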

All hidden layers are equipped with the rectification (ReLU (Krizhevsky et al., 2012)) non-linearity. We note that none of our networks (except for one) contain Local Response Normalisation (LRN) (Krizhevsky et al., 2012): as will be shown in Sect. 4, such normalisation does not improve the performance on the ILSVRC dataset, but leads to increased memory consumption and computation time. Where applicable, the parameters for the LRN layer are those of (Krizhevsky et al., 2012).
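Since configuration A-LRN is the only network with LRN, a reference sketch may help. It follows the cross-channel formula of Krizhevsky et al. (2012), $b^i = a^i / \big(k + \alpha \sum_j (a^j)^2\big)^\beta$ with $j$ ranging over the $n$ adjacent channels around $i$, and uses that paper's reported constants $k = 2$, $n = 5$, $\alpha = 10^{-4}$, $\beta = 0.75$ as defaults. It is written in NumPy for a single (channels, height, width) feature map and is an illustrative sketch, not the code used for the experiments.

```python
import numpy as np


def local_response_norm(a: np.ndarray, k: float = 2.0, n: int = 5,
                        alpha: float = 1e-4, beta: float = 0.75) -> np.ndarray:
    """Cross-channel LRN of a feature map of shape (channels, height, width)."""
    num_channels = a.shape[0]
    squared = np.square(a.astype(np.float64))
    out = np.empty(a.shape, dtype=np.float64)
    half = n // 2
    for i in range(num_channels):
        # Neighbouring channels j in [i - n/2, i + n/2], clipped to the valid range.
        lo, hi = max(0, i - half), min(num_channels - 1, i + half)
        denom = (k + alpha * squared[lo:hi + 1].sum(axis=0)) ** beta
        out[i] = a[i] / denom
    return out


if __name__ == "__main__":
    fmap = np.random.default_rng(0).standard_normal((64, 8, 8))  # toy conv3-64 output
    print(local_response_norm(fmap).shape)  # (64, 8, 8)
```

The explicit loop over channels mirrors the summation limits of the formula; a vectorised version would be faster but harder to compare against the definition.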

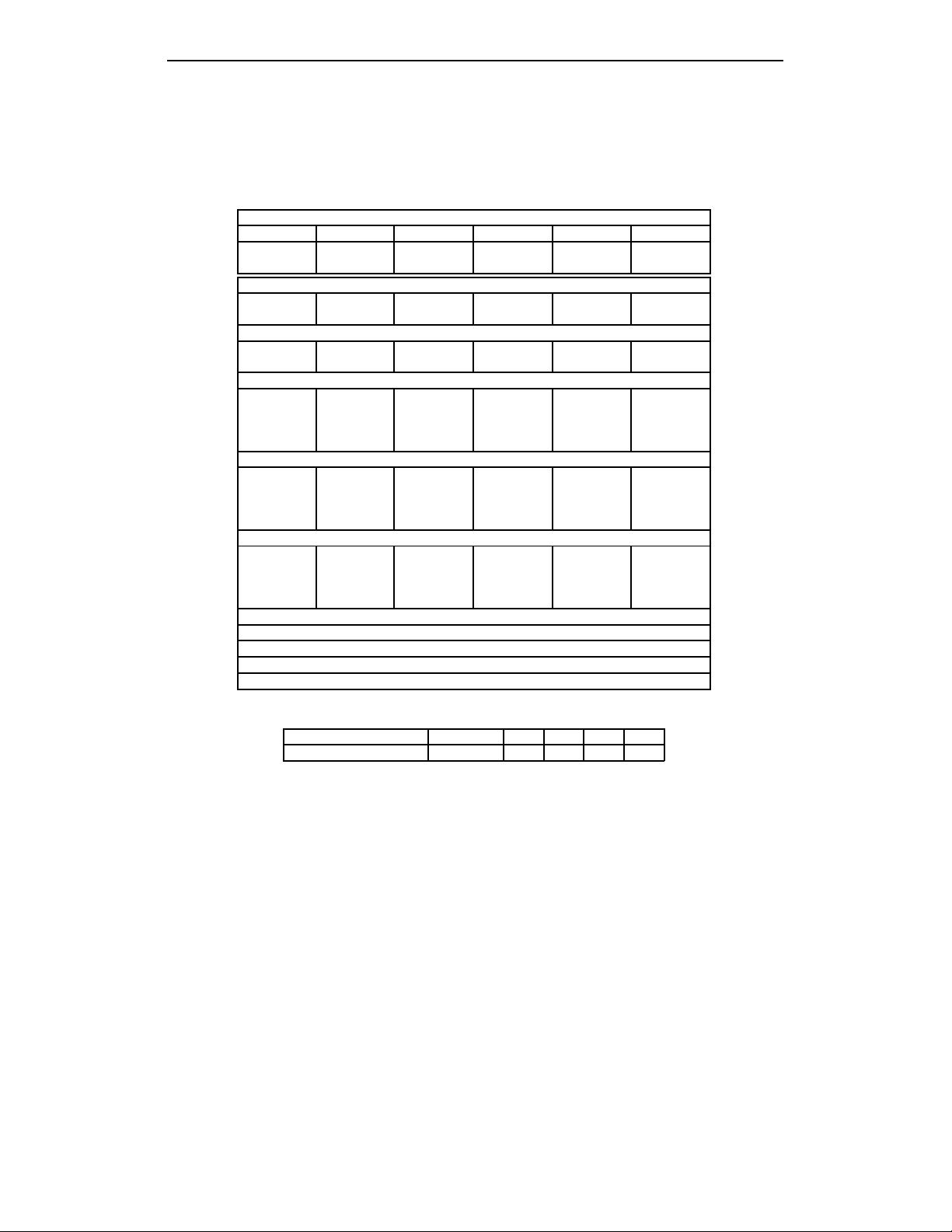

2.2 CONFIGURATIONS

The ConvNet configurations evaluated in this paper are outlined in Table 1, one per column. In the following we will refer to the nets by their names (A–E). All configurations follow the generic design presented in Sect. 2.1, and differ only in depth: from 11 weight layers in network A (8 conv. and 3 FC layers) to 19 weight layers in network E (16 conv. and 3 FC layers). The width of conv. layers (the number of channels) is rather small, starting from 64 in the first layer and then increasing by a factor of 2 after each max-pooling layer, until it reaches 512.
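The six columns of Table 1 can be written down as data to check the depths quoted above. This Python sketch encodes each configuration as five conv. blocks in the paper's "conv⟨receptive field size⟩-⟨number of channels⟩" notation, each block followed by max-pooling and then the shared FC layers and soft-max; the data layout and helper names are hypothetical choices for illustration.

```python
# Table 1 encoded as data: five conv. blocks per configuration.
# Each block is followed by max-pooling; the classifier head is shared.
CONFIGS: dict[str, list[list[str]]] = {
    "A": [["conv3-64"], ["conv3-128"], ["conv3-256"] * 2,
          ["conv3-512"] * 2, ["conv3-512"] * 2],
    "A-LRN": [["conv3-64", "LRN"], ["conv3-128"], ["conv3-256"] * 2,
              ["conv3-512"] * 2, ["conv3-512"] * 2],
    "B": [["conv3-64"] * 2, ["conv3-128"] * 2, ["conv3-256"] * 2,
          ["conv3-512"] * 2, ["conv3-512"] * 2],
    "C": [["conv3-64"] * 2, ["conv3-128"] * 2, ["conv3-256"] * 2 + ["conv1-256"],
          ["conv3-512"] * 2 + ["conv1-512"], ["conv3-512"] * 2 + ["conv1-512"]],
    "D": [["conv3-64"] * 2, ["conv3-128"] * 2, ["conv3-256"] * 3,
          ["conv3-512"] * 3, ["conv3-512"] * 3],
    "E": [["conv3-64"] * 2, ["conv3-128"] * 2, ["conv3-256"] * 4,
          ["conv3-512"] * 4, ["conv3-512"] * 4],
}

# Shared classifier: identical in all networks.
HEAD = ["FC-4096", "FC-4096", "FC-1000", "soft-max"]


def flatten(name: str) -> list[str]:
    """Full layer sequence of a configuration: conv. blocks + max-pools, then the head."""
    layers: list[str] = []
    for block in CONFIGS[name]:
        layers += block + ["maxpool"]
    return layers + HEAD


def count_weight_layers(name: str) -> int:
    """Conv. and FC layers carry weights; LRN, max-pooling and soft-max do not."""
    return sum(1 for layer in flatten(name) if layer.startswith(("conv", "FC")))


def block_widths(name: str) -> list[int]:
    """Channel count of each block, read from its first conv. layer label."""
    return [int(block[0].split("-")[1]) for block in CONFIGS[name]]


if __name__ == "__main__":
    for name in CONFIGS:
        print(name, count_weight_layers(name), block_widths(name))
```

Keeping the configurations as data makes the "differ only in depth" claim easy to see: the head and the block widths (64, 128, 256, 512, 512) are the same for every entry.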

In Table 2 we report the number of parameters for each configuration. In spite of the large depth, the number of weights in our nets is not greater than the number of weights in a shallower net with larger conv. layer widths and receptive fields (144M weights in (Sermanet et al., 2014)).
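The totals in Table 2 can be reproduced approximately from the layer shapes. This sketch assumes every conv. and FC layer has one bias per output channel, and that the first FC layer sees the 7 × 7 × 512 map left after the five 2 × 2 max-pooling layers; under these assumptions the rounded counts match Table 2, but the exact counting convention used in the paper is not stated in this section. A-LRN has the same parameters as A (LRN has no weights), so it is omitted.

```python
# (kernel size, output channels) of every conv. layer, in order (Table 1).
# LRN and max-pooling layers have no weights and are left out.
C3_64, C3_128, C3_256, C3_512 = (3, 64), (3, 128), (3, 256), (3, 512)
C1_256, C1_512 = (1, 256), (1, 512)

CONV_SPECS = {
    "A": [C3_64, C3_128] + [C3_256] * 2 + [C3_512] * 4,
    "B": [C3_64] * 2 + [C3_128] * 2 + [C3_256] * 2 + [C3_512] * 4,
    "C": ([C3_64] * 2 + [C3_128] * 2 + [C3_256] * 2 + [C1_256]
          + [C3_512] * 2 + [C1_512] + [C3_512] * 2 + [C1_512]),
    "D": [C3_64] * 2 + [C3_128] * 2 + [C3_256] * 3 + [C3_512] * 6,
    "E": [C3_64] * 2 + [C3_128] * 2 + [C3_256] * 4 + [C3_512] * 8,
}


def count_params(name: str, in_channels: int = 3, final_size: int = 7,
                 fc_widths: tuple[int, ...] = (4096, 4096, 1000)) -> int:
    """Total weights + biases, assuming one bias per output channel in every layer."""
    total, c_in = 0, in_channels
    for kernel, c_out in CONV_SPECS[name]:
        total += kernel * kernel * c_in * c_out + c_out
        c_in = c_out
    # The first FC layer sees the flattened final_size x final_size x c_in map.
    fan_in = final_size * final_size * c_in
    for width in fc_widths:
        total += fan_in * width + width
        fan_in = width
    return total


if __name__ == "__main__":
    for name in CONV_SPECS:
        print(name, f"{count_params(name) / 1e6:.1f}M")
```

Most of the weights sit in the first FC layer (25088 × 4096 ≈ 103M), which is why adding conv. layers changes the total only modestly.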

Table 1: ConvNet configurations (shown in columns). The depth of the configurations increases from the left (A) to the right (E), as more layers are added (the added layers are shown in bold). The convolutional layer parameters are denoted as “conv⟨receptive field size⟩-⟨number of channels⟩”. The ReLU activation function is not shown for brevity.

| A | A-LRN | B | C | D | E |
|---|---|---|---|---|---|
| 11 weight layers | 11 weight layers | 13 weight layers | 16 weight layers | 16 weight layers | 19 weight layers |
| input (224 × 224 RGB image) | input (224 × 224 RGB image) | input (224 × 224 RGB image) | input (224 × 224 RGB image) | input (224 × 224 RGB image) | input (224 × 224 RGB image) |
| conv3-64 | conv3-64, **LRN** | conv3-64, **conv3-64** | conv3-64, conv3-64 | conv3-64, conv3-64 | conv3-64, conv3-64 |
| maxpool | maxpool | maxpool | maxpool | maxpool | maxpool |
| conv3-128 | conv3-128 | conv3-128, **conv3-128** | conv3-128, conv3-128 | conv3-128, conv3-128 | conv3-128, conv3-128 |
| maxpool | maxpool | maxpool | maxpool | maxpool | maxpool |
| conv3-256, conv3-256 | conv3-256, conv3-256 | conv3-256, conv3-256 | conv3-256, conv3-256, **conv1-256** | conv3-256, conv3-256, **conv3-256** | conv3-256, conv3-256, conv3-256, **conv3-256** |
| maxpool | maxpool | maxpool | maxpool | maxpool | maxpool |
| conv3-512, conv3-512 | conv3-512, conv3-512 | conv3-512, conv3-512 | conv3-512, conv3-512, **conv1-512** | conv3-512, conv3-512, **conv3-512** | conv3-512, conv3-512, conv3-512, **conv3-512** |
| maxpool | maxpool | maxpool | maxpool | maxpool | maxpool |
| conv3-512, conv3-512 | conv3-512, conv3-512 | conv3-512, conv3-512 | conv3-512, conv3-512, **conv1-512** | conv3-512, conv3-512, **conv3-512** | conv3-512, conv3-512, conv3-512, **conv3-512** |
| maxpool | maxpool | maxpool | maxpool | maxpool | maxpool |
| FC-4096 | FC-4096 | FC-4096 | FC-4096 | FC-4096 | FC-4096 |
| FC-4096 | FC-4096 | FC-4096 | FC-4096 | FC-4096 | FC-4096 |
| FC-1000 | FC-1000 | FC-1000 | FC-1000 | FC-1000 | FC-1000 |
| soft-max | soft-max | soft-max | soft-max | soft-max | soft-max |

Table 2: Number of parameters (in millions).

| Network | A, A-LRN | B | C | D | E |
|---|---|---|---|---|---|
| Number of parameters | 133 | 133 | 134 | 138 | 144 |

2.3 DISCUSSION

Our ConvNet configurations are quite different from the ones used in the top-performing entries of the ILSVRC-2012 (Krizhevsky et al., 2012) and ILSVRC-2013 competitions (Zeiler & Fergus, 2013; Sermanet et al., 2014). Rather than using relatively large receptive fields in the first conv. layers (e.g. 11 × 11 with stride 4 in (Krizhevsky et al., 2012), or 7 × 7 with stride 2 in (Zeiler & Fergus, 2013; Sermanet et al., 2014)), we use very small 3 × 3 receptive fields throughout the whole net, which are convolved with the input at every pixel (with stride 1). It is easy to see that a stack of two 3 × 3 conv. layers (without spatial pooling in between) has an effective receptive field of 5 × 5; three such layers have a 7 × 7 effective receptive field. So what have we gained by using, for instance, a stack of three 3 × 3 conv. layers instead of a single 7 × 7 layer? First, we incorporate three non-linear rectification layers instead of a single one, which makes the decision function more discriminative. Second, we decrease the number of parameters: assuming that both the input and the output of a three-layer 3 × 3 convolution stack have $C$ channels, the stack is parametrised by $3\,(3^2C^2) = 27C^2$ weights; at the same time, a single 7 × 7 conv. layer would require $7^2C^2 = 49C^2$ parameters, i.e. 81% more. This can be seen as imposing a regularisation on the 7 × 7 conv. filters, forcing them to have a decomposition through the 3 × 3 filters (with non-linearity injected in between).
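The receptive-field and parameter arithmetic of the discussion above can be checked directly. A short Python sketch, assuming stride-1 convolutions with no pooling in between and ignoring biases, as the $27C^2$ versus $49C^2$ comparison does; the function names and the channel count used in the example are hypothetical.

```python
def effective_receptive_field(num_layers: int, kernel: int = 3) -> int:
    """Receptive field of num_layers stacked stride-1 k x k convs (no pooling in between).

    Each extra layer widens the field by kernel - 1 pixels.
    """
    return 1 + num_layers * (kernel - 1)


def stack_weights(num_layers: int, kernel: int, channels: int) -> int:
    """Weights (biases ignored) of num_layers k x k conv layers with C input and C output channels."""
    return num_layers * kernel * kernel * channels * channels


if __name__ == "__main__":
    C = 512  # hypothetical channel count; the ratio does not depend on C
    extra = stack_weights(1, 7, C) / stack_weights(3, 3, C) - 1
    print(effective_receptive_field(3), f"{extra:.0%}")  # prints "7 81%"
```

Since both counts scale with $C^2$, the 81% figure ($49/27 - 1 \approx 0.81$) holds for any channel count.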

The incorporation of 1 × 1 conv. layers (configuration C, Table 1) is a way to increase the non-linearity of the decision function without affecting the receptive fields of the conv. layers. Even though in our case the 1 × 1 convolution is essentially a linear projection onto the space of the same dimensionality (the number of input and output channels is the same), an additional non-linearity is introduced by the rectification function. It should be noted that 1 × 1 conv. layers have recently been utilised in the “Network in Network” architecture of Lin et al. (2014).
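To make the "linear projection followed by rectification" view concrete: a 1 × 1 convolution multiplies the channel vector at every pixel by the same $C_{\text{out}} \times C_{\text{in}}$ matrix, so it cannot change the receptive field. A NumPy sketch, with hypothetical names and an `einsum` formulation chosen for brevity; in configuration C, $C_{\text{in}} = C_{\text{out}}$ (256 or 512).

```python
import numpy as np


def conv1x1_relu(x: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """1 x 1 convolution followed by ReLU.

    x: feature map of shape (C_in, H, W)
    w: weights of shape (C_out, C_in)
    b: biases of shape (C_out,)
    """
    # The same C_out x C_in matrix is applied to the channel vector at every pixel,
    # so each output pixel depends only on the input pixel at the same location.
    y = np.einsum("oc,chw->ohw", w, x) + b[:, None, None]
    # Without this rectification, the layer would be purely linear.
    return np.maximum(y, 0.0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((256, 14, 14))  # hypothetical 256-channel map
    w = rng.standard_normal((256, 256))
    b = np.zeros(256)
    print(conv1x1_relu(x, w, b).shape)  # (256, 14, 14)
```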

Small-size convolution filters have been previously used by Ciresan et al. (2011), but their nets are significantly less deep than ours, and they did not evaluate on the large-scale ILSVRC dataset. Goodfellow et al. (2014) applied deep ConvNets (11 weight layers) to the task of street number recognition, and showed that the increased depth led to better performance. GoogLeNet (Szegedy et al., 2014), a top-performing entry of the ILSVRC-2014 classification task, was developed independently of our work, but is similar in that it is based on very deep ConvNets
