where Σ

x

= C

d

Λ

x

C

T

d

and Λ

x

is a d × d real diagonal matrix. By Example 1, φ , U

T

p

x is the

p-dimensional feature vector where U

p

is a d ×p real matrix with orthonormal columns that extract

the features for the learning process. Consequently, φ ∼ N(0, Σ

φ

) where Σ

φ

= U

T

p

C

d

Λ

x

C

T

d

U

p

.

If the feature space for learning is based on DCT basis vectors (i.e., the columns of U

p

are the first p

columns of C

d

), then the p × p input feature covariance matrix Σ

φ

is diagonal; in fact, its diagonal

entries are exactly the first p entries of the diagonal of the d × d data covariance matrix Λ

x

.

2.2. The double descent phenomenon

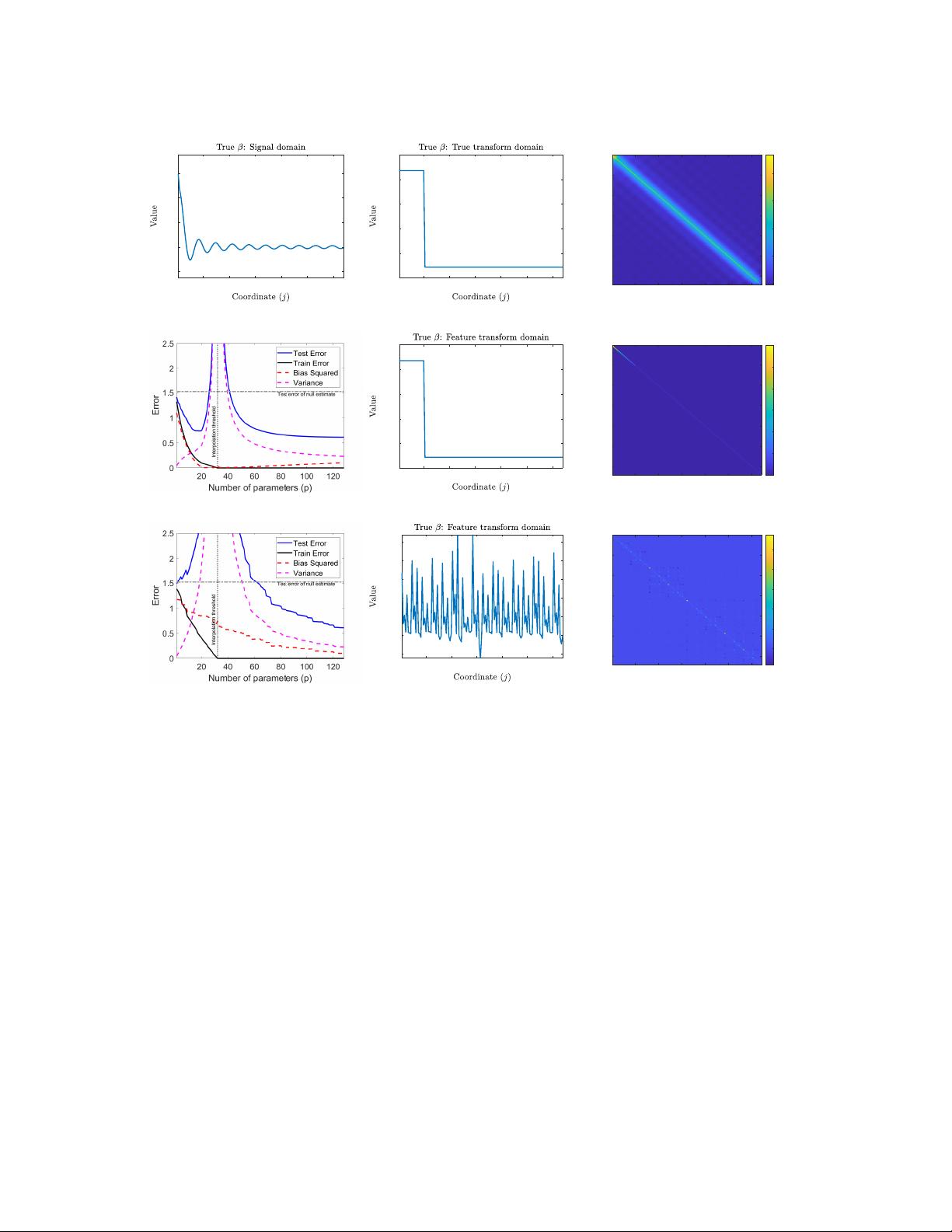

Figure 2 studies the relationship between model complexity (in number of parameters) and test

error induced by linear regression with a linear feature map (in line with Example 1). For both

experiments, we set d = 128 and n = 32, and vary the number of parameters p to be between 1

and d. We also consider β to be an approximately 20-sparse parameter vector such that the majority

of its energy is contained in the first 20 discrete cosine transform (DCT) features (see Figure 2(b)

for an illustration). The input data covariance matrix Σ

x

is also considered to be anisotropic, as

pictured in the heatmap of its values in Figure 2(c).

We consider two choices of linear feature maps, i.e., two choices of orthonormal bases {u

j

}

d

j=1

:

the discrete cosine transform (DCT) basis and the Hadamard basis. In the first case of DCT features,

the model is well-specified for p = d and the bulk of the optimal model fit will involve the first 20

features as a consequence of the approximate sparsity of the true parameter β in the DCT basis (see

Figure 2(e)). As a consequence of this alignment, the feature covariance matrix, Σ

φ

, is diagonal

and its entries constitute the first p entries of the diagonalized form of the data covariance matrix,

denoted by Λ

x

. The ensuing spiked covariance structure is depicted in Figure 2(f). Figure 2(d)

plots the test error as a function of the number of parameters, p, in the model and shows a counter-

intuitive relationship. When p < n = 32, we obtain non-zero training error and observe the classic

bias-variance tradeoff. When p > n, we are able to perfectly interpolate the training data (with

the minimum `

2

-norm solution). Although interpolation of noise is traditionally associated with

overfitting, here we observe that the test error monotonically decays with the number of parameters

p! This is a manifestation of the double descent phenomenon in an elementary example inspired by

signal processing perspectives.

The second choice of the Hadamard basis also gives rise to a double descent behavior, but is

quite different both qualitatively and quantitatively. The feature space utilized for learning (the

Hadamard basis) is fundamentally mismatched to the feature space in which the original parameter

vector β is sparse (the DCT basis). Consequently, as seen in Figure 2(h), the energy of the true

parameter vector β is spread over the d Hadamard features in an unorganized manner. Moreover,

the covariance matrix of Hadamard features, depicted as a heat-map in Figure 2(i), is significantly

more correlated and dispersed in its structure. Figure 2(g) displays a stronger benefit of overparam-

eterization in this model owing to the increased model misspecification arising from the choice of

Hadamard feature map. Unlike in the well-specified case of DCT features, the overparameterized

regime significantly dominates the underparameterized regime in terms of test performance.

Finally, the results in Fig. 3 correspond to β that its components are shown in Fig. 3(a) and its

DCT-domain representation is presented in Fig. 3(b). The majority of β’s energy is in a mid-range

“feature band” that includes the 18 DCT features at coordinates j = 21, . . . , 38; in addition, there

are two more high-energy features in coordinates j = 1, 2, and the remaining coordinates are of low

energy (see Fig. 3(b)). The input covariance matrix Σ

x

, presented in Fig. 3(c), is the same as before

9