An In-Depth Look at Machine Learning Duality: Conjugate Functions, Smoothing Techniques, and Fenchel Duality


Updated 2024-03-21

1.62 MB PDF

Duality is a fundamental concept in machine learning, where it plays a central role in designing and optimizing learning algorithms. The report "Machine Learning Duality" is by Google research scientist Mathieu Blondel at PSL University. It covers conjugate functions, smoothing techniques, Fenchel duality, Fenchel-Young losses, and the block dual coordinate ascent algorithm.

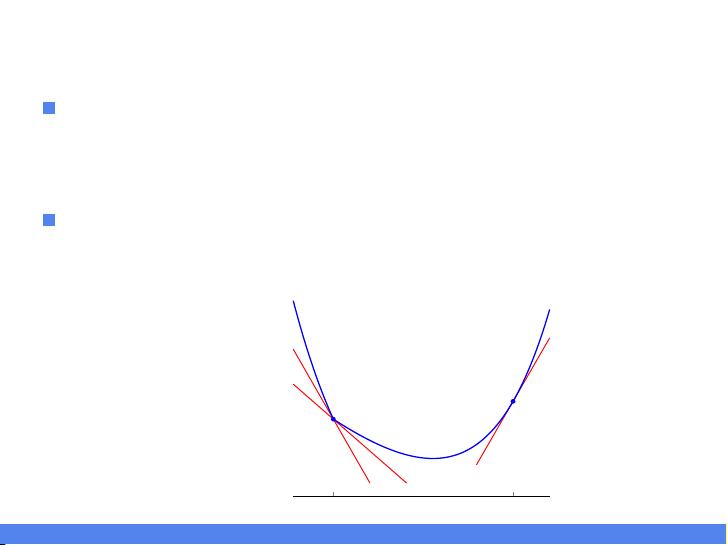

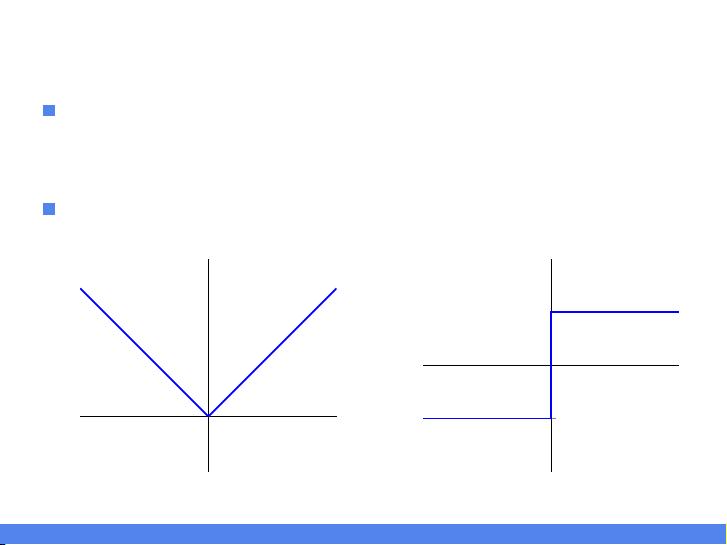

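The convex conjugate of a function f is also called its Legendre-Fenchel transform. It is defined as f*(y) = sup_x ⟨x, y⟩ − f(x), and conjugates give a systematic way to derive the dual of a given optimization problem. Smoothing techniques replace a non-smooth objective with a smooth approximation so that it becomes easier to optimize, for example with gradient-based methods. A common way to do this is to add a strongly convex regularizer to the conjugate, which ties smoothing directly to duality. Fenchel duality is a central result in convex optimization: it relates a primal problem to its dual through the convex conjugates of the functions involved. The Fenchel-Young loss applies this machinery to machine learning. Given a regularization function Ω, it is defined as L(θ; y) = Ω*(θ) + Ω(y) − ⟨θ, y⟩, which is nonnegative by the Fenchel-Young inequality.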
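
The concepts above fit together in one small example. The minimal sketch below assumes the negative Shannon entropy on the probability simplex as the regularizer Ω; that choice is made here for illustration and is not specified in the summary. Under this assumption, the conjugate Ω* is log-sum-exp, a smooth approximation of the max whose gradient is softmax. The Fenchel-Young loss it generates reduces to the familiar logistic (cross-entropy) loss for one-hot targets. Function names such as `fenchel_young_loss` and `smoothed_max` are hypothetical.

```python
import math

# Assumption (for illustration only): Omega is the negative Shannon entropy
# on the probability simplex, so its conjugate Omega* is log-sum-exp.

def logsumexp(theta):
    """Omega*(theta) = log sum_i exp(theta_i), computed stably."""
    m = max(theta)  # shift by the max to avoid overflow
    return m + math.log(sum(math.exp(t - m) for t in theta))

def softmax(theta):
    """Gradient of logsumexp, i.e. the maximizer p in sup_p <theta, p> - Omega(p)."""
    lse = logsumexp(theta)
    return [math.exp(t - lse) for t in theta]

def neg_entropy(p):
    """Omega(p) = sum_i p_i log p_i, using the convention 0 log 0 = 0."""
    return sum(pi * math.log(pi) for pi in p if pi > 0)

def smoothed_max(theta, gamma):
    """gamma * logsumexp(theta / gamma): a smooth upper bound on max(theta)
    that exceeds it by at most gamma * log(len(theta))."""
    return gamma * logsumexp([t / gamma for t in theta])

def fenchel_young_loss(theta, y):
    """L(theta; y) = Omega*(theta) + Omega(y) - <theta, y>, nonnegative
    by the Fenchel-Young inequality."""
    return logsumexp(theta) + neg_entropy(y) - sum(t * yi for t, yi in zip(theta, y))

theta = [1.0, 2.0, 0.5]
p = softmax(theta)
# The supremum defining Omega*(theta) is attained at p = softmax(theta),
# so these two numbers agree up to rounding.
print(sum(t * pi for t, pi in zip(theta, p)) - neg_entropy(p), logsumexp(theta))
# With a one-hot target, the Fenchel-Young loss equals the logistic loss.
y = [0.0, 1.0, 0.0]
print(fenchel_young_loss(theta, y), logsumexp(theta) - theta[1])
```

Shrinking `gamma` in `smoothed_max` trades smoothness for accuracy. The approximation error is at most gamma·log(d), while the gradient (a softmax at temperature gamma) changes more sharply.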

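The block dual coordinate ascent algorithm works on the dual formulation. At each iteration it maximizes the dual objective with respect to one block of coordinates while keeping all other blocks fixed. Each block is typically small, for example the dual variables associated with a single training example, so each update is cheap. This makes the method well suited to large-scale machine learning problems.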
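
To make the update concrete, here is a minimal sketch of block dual coordinate ascent. It rests on an assumption the summary does not specify: the loss is the squared loss with L2 regularization (multi-output ridge regression). Under that choice, maximizing the dual over one example's block of dual variables has a closed form. The helpers `bdca_ridge` and `duality_gap` are hypothetical names, and the report may use different losses and block updates.

```python
import random

# Assumption (for illustration only): multi-output ridge regression, i.e. squared
# loss plus L2 regularization, chosen because each block update has a closed form.
#   Primal: min_W (1/n) sum_i 0.5*||W x_i - y_i||^2 + (lam/2)*||W||_F^2
#   Dual:   max_alpha (1/n) sum_i (<alpha_i, y_i> - 0.5*||alpha_i||^2) - (lam/2)*||W(alpha)||_F^2
#   with W(alpha) = (1/(lam*n)) sum_i alpha_i x_i^T and one block alpha_i in R^k per example.

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def matvec(W, x):
    """W is stored as a list of k rows of length d; returns W @ x."""
    return [dot(row, x) for row in W]

def bdca_ridge(X, Y, lam, epochs=100, seed=0):
    """Hypothetical helper: block dual coordinate ascent, one block per training example."""
    n, d, k = len(X), len(X[0]), len(Y[0])
    alpha = [[0.0] * k for _ in range(n)]  # dual variables, all blocks start at zero
    W = [[0.0] * d for _ in range(k)]      # primal iterate, kept equal to W(alpha)
    rng = random.Random(seed)
    order = list(range(n))
    for _ in range(epochs):
        rng.shuffle(order)  # visit the blocks in a fresh random order each epoch
        for i in order:
            x, y, a = X[i], Y[i], alpha[i]
            pred = matvec(W, x)
            # Exact maximization of the dual over block i with all other blocks fixed
            denom = 1.0 + dot(x, x) / (lam * n)
            delta = [(y[c] - pred[c] - a[c]) / denom for c in range(k)]
            for c in range(k):
                a[c] += delta[c]
                # Rank-one update keeps W equal to W(alpha) without recomputing the sum
                for j in range(d):
                    W[c][j] += delta[c] * x[j] / (lam * n)
    return W, alpha

def duality_gap(X, Y, lam, W, alpha):
    """Primal minus dual objective: nonnegative, and zero at the optimum."""
    n = len(X)
    reg = 0.5 * lam * sum(w * w for row in W for w in row)
    primal = sum(0.5 * sum((p - t) ** 2 for p, t in zip(matvec(W, x), y))
                 for x, y in zip(X, Y)) / n + reg
    dual = sum(dot(a, y) - 0.5 * dot(a, a) for a, y in zip(alpha, Y)) / n - reg
    return primal - dual

# Usage on synthetic data (20 examples, 3 features, 2 outputs)
data_rng = random.Random(1)
X = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(20)]
Y = [[data_rng.uniform(-1, 1) for _ in range(2)] for _ in range(20)]
W, alpha = bdca_ridge(X, Y, lam=0.1)
print(duality_gap(X, Y, 0.1, W, alpha))  # should be close to zero
```

Keeping W in sync with α through a rank-one update makes each block update cost O(kd) rather than O(nkd). Because the duality gap is nonnegative and vanishes at the optimum, it also serves as a stopping criterion.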

In conclusion, duality is a versatile tool that lets us approach an optimization problem from two complementary directions, the primal and the dual. Conjugate functions, smoothing techniques, Fenchel duality, Fenchel-Young losses, and block dual coordinate ascent can all help make machine learning algorithms more efficient and better performing. Mathieu Blondel's report offers valuable insight into these concepts and their practical use in machine learning.
