

WHAT IS THE STATE OF NEURAL NETWORK PRUNING?

Davis Blalock*¹, Jose Javier Gonzalez Ortiz*¹, Jonathan Frankle¹, John Guttag¹

ABSTRACT

Neural network pruning, the task of reducing the size of a network by removing parameters, has been the subject of a great deal of work in recent years. We provide a meta-analysis of the literature, including an overview of approaches to pruning and consistent findings in the literature. After aggregating results across 81 papers and pruning hundreds of models in controlled conditions, our clearest finding is that the community suffers from a lack of standardized benchmarks and metrics. This deficiency is substantial enough that it is hard to compare pruning techniques to one another or determine how much progress the field has made over the past three decades. To address this situation, we identify issues with current practices, suggest concrete remedies, and introduce ShrinkBench, an open-source framework to facilitate standardized evaluations of pruning methods. We use ShrinkBench to compare various pruning techniques and show that its comprehensive evaluation can prevent common pitfalls when comparing pruning methods.

1 INTRODUCTION

Much of the progress in machine learning in the past decade has been a result of deep neural networks. Many of these networks, particularly those that perform the best (Huang et al., 2018), require enormous amounts of computation and memory. These requirements not only increase infrastructure costs, but also make deployment of networks to resource-constrained environments such as mobile phones or smart devices challenging (Han et al., 2015; Sze et al., 2017; Yang et al., 2017).

One popular approach for reducing these resource requirements at test time is neural network pruning, which entails systematically removing parameters from an existing network. Typically, the initial network is large and accurate, and the goal is to produce a smaller network with similar accuracy. Pruning has been used since the late 1980s (Janowsky, 1989; Mozer & Smolensky, 1989a;b; Karnin, 1990), but has seen an explosion of interest in the past decade thanks to the rise of deep neural networks.

For this study, we surveyed 81 recent papers on pruning in the hopes of extracting practical lessons for the broader community. For example: which technique achieves the best accuracy/efficiency tradeoff? Are there strategies that work best on specific architectures or datasets? Which high-level design choices are most effective?

There are indeed several consistent results: pruning parameters based on their magnitudes substantially compresses networks without reducing accuracy, and many pruning methods outperform random pruning. However, our central finding is that the state of the literature is such that our motivating questions are impossible to answer. Few papers compare to one another, and methodologies are so inconsistent between papers that we could not make these comparisons ourselves. For example, a quarter of papers compare to no other pruning method, half of papers compare to at most one other method, and dozens of methods have never been compared to by any subsequent work. In addition, no dataset/network pair appears in even a third of papers, evaluation metrics differ widely, and hyperparameters and other confounders vary or are left unspecified.

*Equal contribution. ¹MIT CSAIL, Cambridge, MA, USA. Correspondence to: Davis Blalock <dblalock@mit.edu>.

Proceedings of the 3rd MLSys Conference, Austin, TX, USA, 2020. Copyright 2020 by the author(s).

Most of these issues stem from the absence of standard datasets, networks, metrics, and experimental practices. To help enable more comparable pruning research, we identify specific impediments and pitfalls, recommend best practices, and introduce ShrinkBench, a library for standardized evaluation of pruning. ShrinkBench makes it easy to adhere to the best practices we identify, largely by providing a standardized collection of pruning primitives, models, datasets, and training routines.

Our contributions are as follows:

1. A meta-analysis of the neural network pruning literature based on comprehensively aggregating reported results from 81 papers.
2. A catalog of problems in the literature and best practices for avoiding them. These insights derive from analyzing existing work and pruning hundreds of models.
3. ShrinkBench, an open-source library for evaluating neural network pruning methods, available at https://github.com/jjgo/shrinkbench.

arXiv:2003.03033v1 [cs.LG] 6 Mar 2020


2 OVERVIEW OF PRUNING

Before proceeding, we first offer some background on neural network pruning and a high-level overview of how existing pruning methods typically work.

2.1 Definitions

We define a neural network architecture as a function family $f(x; \cdot)$. The architecture consists of the configuration of the network's parameters and the sets of operations it uses to produce outputs from inputs, including the arrangement of parameters into convolutions, activation functions, pooling, batch normalization, etc. Example architectures include AlexNet and ResNet-56. We define a neural network model as a particular parameterization of an architecture, i.e., $f(x; W)$ for specific parameters $W$. Neural network pruning entails taking as input a model $f(x; W)$ and producing a new model $f(x; M \odot W')$. Here $W'$ is a set of parameters that may be different from $W$, $M \in \{0, 1\}^{|W'|}$ is a binary mask that fixes certain parameters to 0, and $\odot$ is the elementwise product operator. In practice, rather than using an explicit mask, pruned parameters of $W$ are fixed to zero or removed entirely.
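A minimal sketch of the masked model $f(x; M \odot W')$, assuming a single bias-free dense layer stands in for the whole architecture: applying the mask simply zeroes the entries of $W'$ where $M$ is 0. The helper names `apply_mask` and `linear`, and all numbers, are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def apply_mask(weights: Matrix, mask: List[List[int]]) -> Matrix:
    """Return M ⊙ W': the elementwise product of a binary mask and a weight matrix."""
    if len(weights) != len(mask) or any(len(w) != len(m) for w, m in zip(weights, mask)):
        raise ValueError("mask and weights must have the same shape")
    return [[w * m for w, m in zip(w_row, m_row)] for w_row, m_row in zip(weights, mask)]


def linear(x: List[float], weights: Matrix) -> List[float]:
    """Toy model f(x; W) = W x (a single bias-free dense layer)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


W_prime = [[0.5, -1.2, 0.03], [2.0, 0.01, -0.7]]
M = [[1, 1, 0], [1, 0, 1]]   # 0 entries mark pruned parameters
x = [1.0, 2.0, 3.0]

pruned = apply_mask(W_prime, M)
print(pruned)                # [[0.5, -1.2, 0.0], [2.0, 0.0, -0.7]]
print(linear(x, pruned))     # output of the pruned model f(x; M ⊙ W')
```

Storing the zeros explicitly, as here, corresponds to the "fixed to zero" option; removing the entries entirely would instead require a sparse or a smaller dense representation.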

2.2 High-Level Algorithm

There are many methods of producing a pruned model $f(x; M \odot W')$ from an initially untrained model $f(x; W_0)$, where $W_0$ is sampled from an initialization distribution $\mathcal{D}$.

Nearly all neural network pruning strategies in our survey derive from Algorithm 1 (Han et al., 2015). In this algorithm, the network is first trained to convergence. Afterwards, each parameter or structural element in the network is issued a score, and the network is pruned based on these scores. Pruning reduces the accuracy of the network, so it is trained further (known as fine-tuning) to recover. The process of pruning and fine-tuning is often iterated several times, gradually reducing the network's size.
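The loop of Algorithm 1 can be sketched as a toy, runnable program. This is an illustration under explicit assumptions, not ShrinkBench's or Han et al.'s implementation: the "network" is a linear-regression model trained by full-batch gradient descent, `score` is weight magnitude, `prune` removes a fixed fraction of the surviving weights per iteration, and a fixed step count stands in for "train to convergence". All function names are hypothetical.

```python
import random
from typing import List, Tuple

Data = List[Tuple[List[float], float]]


def make_data(n: int, true_w: List[float], seed: int = 0) -> Data:
    """Noise-free linear-regression data y = true_w · x with standard-normal inputs."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = [rng.gauss(0.0, 1.0) for _ in true_w]
        data.append((x, sum(w * xi for w, xi in zip(true_w, x))))
    return data


def train(w: List[float], mask: List[int], data: Data, steps: int, lr: float = 0.05) -> List[float]:
    """Full-batch gradient descent on the MSE of f(X; M ⊙ W); masked weights stay at 0."""
    w = [wi * mi for wi, mi in zip(w, mask)]
    n = len(data)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for j, xj in enumerate(x):
                grad[j] += 2.0 * err * xj / n
        w = [(wi - lr * g) * mi for wi, g, mi in zip(w, grad, mask)]
    return w


def score(w: List[float]) -> List[float]:
    """Magnitude scores |w|."""
    return [abs(wi) for wi in w]


def prune(mask: List[int], scores: List[float], fraction: float) -> List[int]:
    """Remove `fraction` of the still-unpruned weights with the lowest scores."""
    alive = [j for j, m in enumerate(mask) if m]
    k = round(fraction * len(alive))
    new_mask = list(mask)
    for j in sorted(alive, key=lambda idx: scores[idx])[:k]:
        new_mask[j] = 0
    return new_mask


def prune_and_fine_tune(data: Data, dim: int, n_iters: int, fraction: float,
                        seed: int = 0) -> Tuple[List[int], List[float]]:
    rng = random.Random(seed)
    w = [rng.gauss(0.0, 0.1) for _ in range(dim)]   # line 1: W <- initialize()
    mask = [1] * dim                                 # line 3: M <- 1^|W| (train needs it)
    w = train(w, mask, data, steps=500)              # line 2: fixed steps stand in for convergence
    for _ in range(n_iters):                         # line 4
        mask = prune(mask, score(w), fraction)       # line 5: M <- prune(M, score(W))
        w = train(w, mask, data, steps=100)          # line 6: fine-tune f(X; M ⊙ W)
    return mask, w                                   # line 8


true_w = [3.0, -2.0, 0.0, 0.0, 1.5, 0.0, 0.0, 0.0]
data = make_data(100, true_w)
mask, w = prune_and_fine_tune(data, dim=len(true_w), n_iters=3, fraction=0.4)
print(mask)  # [1, 1, 0, 0, 0, 0, 0, 0]
```

Pruning 40% of the survivors three times leaves 8 → 5 → 3 → 2 weights; the first two rounds remove the truly irrelevant inputs, and the last round removes the smallest useful weight.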

Many papers propose slight variations of this algorithm. For example, some papers prune periodically during training (Gale et al., 2019) or even at initialization (Lee et al., 2019b). Others modify the network to explicitly include additional parameters that encourage sparsity and serve as a basis for scoring the network after training (Molchanov et al., 2017).

2.3 Differences Between Pruning Methods

Within the framework of Algorithm 1, pruning methods vary primarily in their choices regarding sparsity structure, scoring, scheduling, and fine-tuning.

Structure. Some methods prune individual parameters (unstructured pruning). Doing so produces a sparse neural network which, although smaller in terms of parameter count, may not be arranged in a fashion conducive to speedups using modern libraries and hardware. Other methods consider parameters in groups (structured pruning), removing entire neurons, filters, or channels to exploit hardware and software optimized for dense computation (Li et al., 2016; He et al., 2017).

Algorithm 1 Pruning and Fine-Tuning
Input: N, the number of iterations of pruning, and X, the dataset on which to train and fine-tune
1: W ← initialize()
2: W ← trainToConvergence(f(X; W))
3: M ← 1^{|W|}
4: for i in 1 to N do
5:     M ← prune(M, score(W))
6:     W ← fineTune(f(X; M ⊙ W))
7: end for
8: return M, W
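To make the structured/unstructured distinction concrete, here is a sketch that applies both kinds of pruning to the same hypothetical set of four flattened convolution filters. Scoring whole filters by their L1 norm is one common criterion for structured pruning, assumed here for illustration; the function names and weights are hypothetical.

```python
from typing import List

Filter = List[float]  # one convolution filter, flattened (in_channels * k * k weights)


def unstructured_prune(filters: List[Filter], fraction: float) -> List[Filter]:
    """Zero the `fraction` of individual weights with the smallest magnitude; shape is unchanged."""
    coords = [(i, j) for i, f in enumerate(filters) for j in range(len(f))]
    coords.sort(key=lambda ij: abs(filters[ij[0]][ij[1]]))
    k = round(fraction * len(coords))
    pruned = [list(f) for f in filters]
    for i, j in coords[:k]:
        pruned[i][j] = 0.0
    return pruned


def structured_prune(filters: List[Filter], fraction: float) -> List[Filter]:
    """Remove whole filters with the smallest L1 norm; the result is a smaller dense tensor."""
    k = round(fraction * len(filters))
    by_norm = sorted(range(len(filters)), key=lambda i: sum(abs(w) for w in filters[i]))
    dropped = set(by_norm[:k])
    return [list(f) for i, f in enumerate(filters) if i not in dropped]


filters = [
    [0.9, -0.1, 0.05, 0.4],
    [0.02, 0.01, -0.03, 0.0],
    [-0.6, 0.7, 0.2, -0.05],
    [0.1, -0.02, 0.3, 0.01],
]
u = unstructured_prune(filters, 0.5)
s = structured_prune(filters, 0.5)
print(len(u), sum(w != 0.0 for f in u for w in f))  # 4 8
print(len(s), sum(len(f) for f in s))               # 2 8
```

Both variants keep eight weights, but only the structured one yields a smaller dense tensor (two filters instead of four) that standard dense kernels can use directly.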

Scoring. It is common to score parameters based on their absolute values, trained importance coefficients, or contributions to network activations or gradients. Some pruning methods compare scores locally, pruning a fraction of the parameters with the lowest scores within each structural subcomponent of the network (e.g., layers) (Han et al., 2015). Others consider scores globally, comparing scores to one another irrespective of the part of the network in which the parameter resides (Lee et al., 2019b; Frankle & Carbin, 2019).
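The difference between local and global comparison can be sketched with magnitude scores over two hypothetical layers whose weights live on very different scales. The function names, layer names, and values are assumptions made for illustration.

```python
from typing import Dict, List

Layers = Dict[str, List[float]]
Masks = Dict[str, List[int]]


def local_masks(layers: Layers, fraction: float) -> Masks:
    """Prune the lowest-magnitude `fraction` of weights within each layer separately."""
    masks = {}
    for name, w in layers.items():
        k = round(fraction * len(w))
        lowest = set(sorted(range(len(w)), key=lambda j: abs(w[j]))[:k])
        masks[name] = [0 if j in lowest else 1 for j in range(len(w))]
    return masks


def global_masks(layers: Layers, fraction: float) -> Masks:
    """Prune the lowest-magnitude `fraction` of weights across all layers at once."""
    entries = sorted((abs(v), name, j) for name, w in layers.items() for j, v in enumerate(w))
    k = round(fraction * len(entries))
    pruned = {(name, j) for _, name, j in entries[:k]}
    return {name: [0 if (name, j) in pruned else 1 for j in range(len(w))]
            for name, w in layers.items()}


layers = {
    "conv1": [0.8, -0.6, 0.7, 0.9],   # hypothetical large-magnitude layer
    "fc": [0.05, -0.02, 0.3, 0.01],   # hypothetical small-magnitude layer
}
print(local_masks(layers, 0.5))   # {'conv1': [1, 0, 0, 1], 'fc': [1, 0, 1, 0]}
print(global_masks(layers, 0.5))  # {'conv1': [1, 1, 1, 1], 'fc': [0, 0, 0, 0]}
```

With the same 50% target, local pruning removes half of each layer, while global pruning empties the small-magnitude `fc` layer entirely: global comparison can allocate sparsity very unevenly across layers.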

Scheduling. Pruning methods differ in the amount of the network to prune at each step. Some methods prune all desired weights at once in a single step (Liu et al., 2019). Others prune a fixed fraction of the network iteratively over several steps (Han et al., 2015) or vary the rate of pruning according to a more complex function (Gale et al., 2019).
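The three kinds of schedule can be compared by the cumulative sparsity (fraction of all weights removed) they reach after each pruning step. The fixed-fraction case follows directly from the text: pruning a fraction $p$ of the remaining weights $t$ times leaves $(1-p)^t$ of them. The cubic ramp is the gradual schedule of Zhu & Gupta (2017), included only as one example of a "more complex function", not as a claim about any cited paper's exact settings. Function names are hypothetical.

```python
from typing import List


def one_shot(target: float) -> List[float]:
    """Prune everything at once: a single step reaching the target sparsity."""
    return [target]


def iterative_fixed_fraction(fraction: float, steps: int) -> List[float]:
    """Prune `fraction` of the remaining weights each step: sparsity_t = 1 - (1 - fraction)^t."""
    return [1.0 - (1.0 - fraction) ** t for t in range(1, steps + 1)]


def cubic_schedule(s_initial: float, s_final: float, steps: int) -> List[float]:
    """Cubic ramp: s_t = s_final + (s_initial - s_final) * (1 - t / steps)^3."""
    return [s_final + (s_initial - s_final) * (1.0 - t / steps) ** 3
            for t in range(1, steps + 1)]


print(one_shot(0.9))
print([round(s, 4) for s in iterative_fixed_fraction(0.2, 5)])
print([round(s, 4) for s in cubic_schedule(0.0, 0.9, 4)])
```

Note that the fixed-fraction schedule never lands on an arbitrary target exactly; its final sparsity is determined by the per-step fraction and the number of steps.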

Fine-tuning. For methods that involve fine-tuning, it is most common to continue to train the network using the trained weights from before pruning. Alternative proposals include rewinding the network to an earlier state (Frankle et al., 2019) and reinitializing the network entirely (Liu et al., 2019).
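The three fine-tuning starting points differ only in which weights get masked and trained further. A minimal sketch, assuming checkpoints are kept in a hypothetical `{training_step: weights}` dictionary and that reinitialization draws from a Gaussian with standard deviation 0.1 (an arbitrary choice):

```python
import random
from typing import Dict, List


def fine_tuning_start(strategy: str, mask: List[int], checkpoints: Dict[int, List[float]],
                      rewind_step: int = 0, seed: int = 0) -> List[float]:
    """Masked weights from which fine-tuning begins, for one of three strategies."""
    if strategy == "continue":
        start = checkpoints[max(checkpoints)]         # trained weights from just before pruning
    elif strategy == "rewind":
        start = checkpoints[rewind_step]              # an earlier state of the same training run
    elif strategy == "reinit":
        rng = random.Random(seed)
        start = [rng.gauss(0.0, 0.1) for _ in mask]   # a fresh random initialization
    else:
        raise ValueError(f"unknown strategy: {strategy!r}")
    return [w * m for w, m in zip(start, mask)]


# Hypothetical checkpoints keyed by training step; the last one is the fully trained model.
checkpoints = {0: [0.1, -0.2, 0.05], 100: [0.5, -0.9, 0.02], 1000: [1.2, -1.5, 0.01]}
mask = [1, 1, 0]
print(fine_tuning_start("continue", mask, checkpoints))                 # [1.2, -1.5, 0.0]
print(fine_tuning_start("rewind", mask, checkpoints, rewind_step=100))  # [0.5, -0.9, 0.0]
```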

2.4 Evaluating Pruning

Pruning can accomplish many different goals, including reducing the storage footprint of the neural network, the computational cost of inference, the energy requirements of inference, etc. Each of these goals favors different design choices and requires different evaluation metrics. For example, when reducing the storage footprint of the network, all parameters can be treated equally, meaning one should evaluate the overall compression ratio achieved by pruning. However, when reducing the computational cost of inference, different parameters may have different impacts. For instance, in convolutional layers, filters applied to spatially larger inputs are associated with more computation than those applied to smaller inputs.
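The point about spatial size follows from the multiply-add count of a convolution, which scales with the number of output positions while the parameter count does not. A sketch with two hypothetical 64-channel 3×3 layers, assuming stride and padding are already folded into the output height and width and biases are ignored; the function names are hypothetical.

```python
def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a k x k convolution (bias ignored)."""
    return c_out * c_in * k * k


def conv_macs(c_in: int, c_out: int, k: int, h_out: int, w_out: int) -> int:
    """Multiply-adds: every weight is used once per output spatial position."""
    return conv_params(c_in, c_out, k) * h_out * w_out


def macs_saved_by_removing_filters(n: int, c_in: int, c_out: int, k: int,
                                   h_out: int, w_out: int) -> int:
    """Compute saved in this layer alone when n output filters are removed.

    Removing output filters also shrinks the next layer's input channels;
    that additional saving is ignored here.
    """
    return conv_macs(c_in, c_out, k, h_out, w_out) - conv_macs(c_in, c_out - n, k, h_out, w_out)


early = dict(c_in=64, c_out=64, k=3, h_out=56, w_out=56)  # large feature map
late = dict(c_in=64, c_out=64, k=3, h_out=7, w_out=7)     # small feature map

print(conv_params(64, 64, 3))                      # 36864
print(macs_saved_by_removing_filters(1, **early))  # 1806336
print(macs_saved_by_removing_filters(1, **late))   # 28224
```

Both layers hold 36,864 weights, yet removing one filter saves 64 times more multiply-adds in the 56×56 layer than in the 7×7 layer (3136 vs 49 output positions), so parameter-based and FLOP-based metrics can rank the same pruning decision very differently.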

Regardless of the goal, pruning imposes a tradeoff between model efficiency and quality, with pruning increasing the former while (typically) decreasing the latter. This means that a pruning method is best characterized not by a single model it has pruned, but by a family of models corresponding to different points on the efficiency-quality curve. To quantify efficiency, most papers report at least one of two metrics. The first is the number of multiply-adds (often referred to as FLOPs) required to perform inference with the pruned network. The second is the fraction of parameters pruned. To measure quality, nearly all papers report changes in Top-1 or Top-5 image classification accuracy.
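The efficiency metrics, together with the compression ratio used for storage, are simple ratios. The sketch below (function names and the parameter count are hypothetical) shows how fraction pruned and compression ratio relate, since papers often report one but not the other.

```python
def fraction_pruned(params_before: int, params_after: int) -> float:
    """Fraction of the original parameters removed."""
    return 1.0 - params_after / params_before


def compression_ratio(params_before: int, params_after: int) -> float:
    """Original size divided by pruned size."""
    return params_before / params_after


def theoretical_speedup(flops_before: int, flops_after: int) -> float:
    """Ratio of multiply-add counts: a proxy, not a measured wall-clock speedup."""
    return flops_before / flops_after


before = 1_000_000  # hypothetical parameter count
for after in (500_000, 100_000, 50_000):
    print(f"{fraction_pruned(before, after):.0%} pruned -> "
          f"{compression_ratio(before, after):.0f}x compression")
# 50% pruned -> 2x compression
# 90% pruned -> 10x compression
# 95% pruned -> 20x compression
```

Moving from 90% to 95% pruned looks like a small change in one metric but doubles the other, which is one reason results reported in different units are hard to compare.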

As others have noted (Lebedev et al., 2014; Figurnov et al., 2016; Louizos et al., 2017; Yang et al., 2017; Han et al., 2015; Kim et al., 2015; Wen et al., 2016; Luo et al., 2017; He et al., 2018b), these metrics are far from perfect. Parameter and FLOP counts are a loose proxy for real-world latency, throughput, memory usage, and power consumption. Similarly, image classification is only one of the countless tasks to which neural networks have been applied. However, because the overwhelming majority of papers in our corpus focus on these metrics, our meta-analysis necessarily does as well.

3 LESSONS FROM THE LITERATURE

After aggregating results from a corpus of 81 papers, we identified a number of consistent findings. In this section, we provide an overview of our corpus and then discuss these findings.

3.1 Papers Used in Our Analysis

Our corpus consists of 79 pruning papers published since 2010 and two classic papers (LeCun et al., 1990; Hassibi et al., 1993) that have been compared to by a number of recent methods. We selected these papers by identifying popular papers in the literature and what cites them, systematically searching through conference proceedings, and tracing the directed graph of comparisons between pruning papers. This last procedure results in the property that, barring oversights on our part, there is no pruning paper in our corpus that compares to any pruning paper outside of our corpus. Additional details about our corpus and its construction can be found in Appendix A.
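The closure property can be sketched as a breadth-first traversal of the "compares to" graph: starting from seed papers, keep adding every paper that a corpus member compares to until nothing new appears. The paper identifiers and edges below are hypothetical, and the sketch covers only this graph-tracing step, not the citation search or the review of proceedings.

```python
from collections import deque
from typing import Dict, Iterable, List, Set


def corpus_closure(seeds: Iterable[str], compares_to: Dict[str, List[str]]) -> Set[str]:
    """Add every paper that some corpus member compares to, until a fixed point is reached."""
    corpus = set(seeds)
    queue = deque(corpus)
    while queue:
        paper = queue.popleft()
        for other in compares_to.get(paper, []):
            if other not in corpus:
                corpus.add(other)
                queue.append(other)
    return corpus


# Hypothetical graph: an edge "A" -> "B" means paper A compares to paper B.
compares_to = {"A": ["B"], "B": ["C", "D"], "E": ["A"]}
corpus = corpus_closure(["A"], compares_to)
print(sorted(corpus))  # ['A', 'B', 'C', 'D']

# The property from the text: no corpus paper compares to a paper outside the corpus.
assert all(other in corpus for p in corpus for other in compares_to.get(p, []))
```

Paper "E" is not reached because it compares *to* the corpus rather than being compared to; finding such papers is what the citation search ("what cites them") is for.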

3.2 How Effective is Pruning?

One of the clearest findings about pruning is that it works. More precisely, there are various methods that can significantly compress models with little or no loss of accuracy. In fact, for small amounts of compression, pruning can sometimes increase accuracy (Han et al., 2015; Suzuki et al., 2018). This basic finding has been replicated in a large fraction of the papers in our corpus.

Along the same lines, it has been repeatedly shown that, at least for large amounts of pruning, many pruning methods outperform random pruning (Yu et al., 2018; Gale et al., 2019; Frankle et al., 2019; Mariet & Sra, 2015; Suau et al., 2018; He et al., 2017). Interestingly, this does not always hold for small amounts of pruning (Morcos et al., 2019). Similarly, pruning all layers uniformly tends to perform worse than intelligently allocating parameters to different layers (Gale et al., 2019; Han et al., 2015; Li et al., 2016; Molchanov et al., 2016; Luo et al., 2017) or pruning globally (Lee et al., 2019b; Frankle & Carbin, 2019). Lastly, when holding the number of fine-tuning iterations constant, many methods produce pruned models that outperform retraining from scratch with the same sparsity pattern (Zhang et al., 2015; Yu et al., 2018; Louizos et al., 2017; He et al., 2017; Luo et al., 2017; Frankle & Carbin, 2019), at least with a large enough amount of pruning (Suau et al., 2018). Retraining from scratch in this context means training a fresh, randomly-initialized model with all weights clamped to zero throughout training, except those that are nonzero in the pruned model.
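A minimal sketch of this retraining-from-scratch baseline, assuming a generic gradient function and plain gradient descent (both hypothetical stand-ins for a real training loop): the fresh weights start at zero wherever the mask is 0 and are re-clamped after every update. Passing the same `steps` budget used for fine-tuning keeps the comparison controlled.

```python
import random
from typing import Callable, List

Vector = List[float]


def retrain_from_scratch(mask: List[int], grad_fn: Callable[[Vector], Vector],
                         steps: int, lr: float = 0.1, seed: int = 0) -> Vector:
    rng = random.Random(seed)
    # Fresh random initialization; pruned positions start at zero.
    w = [rng.gauss(0.0, 1.0) if m else 0.0 for m in mask]
    for _ in range(steps):
        g = grad_fn(w)
        # Clamp pruned positions after every update so they stay zero throughout training.
        w = [wi - lr * gi if m else 0.0 for wi, gi, m in zip(w, g, mask)]
    return w


TARGET = [1.0, -2.0, 3.0, 0.5]


def quadratic_grad(w: Vector) -> Vector:
    """Gradient of the toy loss sum_i (w_i - TARGET_i)^2."""
    return [2.0 * (wi - ti) for wi, ti in zip(w, TARGET)]


w = retrain_from_scratch([1, 0, 1, 0], quadratic_grad, steps=200)
print([round(v, 6) for v in w])  # [1.0, 0.0, 3.0, 0.0]
```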

Another consistent finding is that sparse models tend to outperform dense ones for a fixed number of parameters. Lee et al. (2019a) show that increasing the nominal size of ResNet-20 on CIFAR-10 while sparsifying to hold the number of parameters constant decreases the error rate. Kalchbrenner et al. (2018) obtain a similar result for audio synthesis, as do Gray et al. (2017) for a variety of additional tasks across various domains. Perhaps most compelling of all are the many results, including in Figure 1, showing that pruned models can obtain higher accuracies than the original models from which they are derived. This demonstrates that sparse models can not only outperform dense counterparts with the same number of parameters, but sometimes dense models with even more parameters.
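The "same number of parameters" comparison can be made concrete with a worked count for a square, bias-free fully connected layer (an assumption for illustration; Lee et al. (2019a) work with ResNet-20, not this setup). Widening the layer by a factor $m$ multiplies its weights by $m^2$, so the density must fall to $1/m^2$ to keep the nonzero count fixed. The function name and base width are hypothetical.

```python
from fractions import Fraction
from typing import Tuple


def sparse_wide_layer(base_width: int, multiplier: int) -> Tuple[int, int, Fraction]:
    """Total weights, nonzero weights, and density for a square layer widened by
    `multiplier` while keeping the nonzero count equal to the dense base layer's."""
    wide = base_width * multiplier
    total = wide * wide
    nonzero = base_width * base_width
    return total, nonzero, Fraction(nonzero, total)


for m in (1, 2, 4):
    total, nonzero, density = sparse_wide_layer(256, m)
    print(f"width {256 * m}: {total} weights, {nonzero} nonzero, density {density!s}")
# width 256: 65536 weights, 65536 nonzero, density 1
# width 512: 262144 weights, 65536 nonzero, density 1/4
# width 1024: 1048576 weights, 65536 nonzero, density 1/16
```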

3.3 Pruning vs Architecture Changes

One current unknown about pruning is how effective it

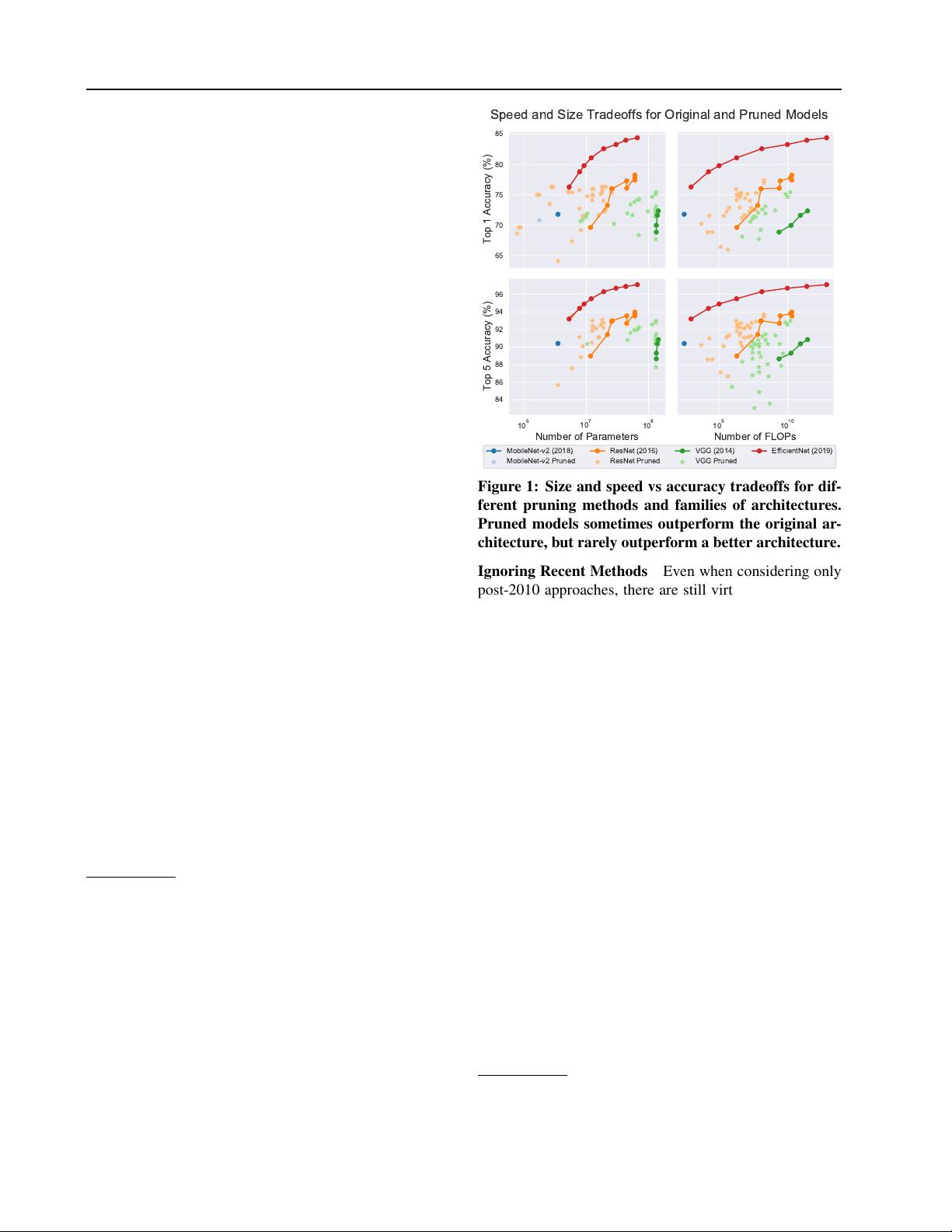

tends to be relative to simply using a more efficient archi-

tecture. These options are not mutually exclusive, but it

may be useful in guiding one’s research or development

efforts to know which choice is likely to have the larger

impact. Along similar lines, it is unclear how pruned mod-

els from different architectures compare to one another—

i.e., to what extent does pruning offer similar benefits

across architectures? To address these questions, we plot-

ted the reported accuracies and compression/speedup levels

of pruned models on ImageNet alongside the same metrics

What is the State of Neural Network Pruning?

for different architectures with no pruning (Figure 1).

1

We

plot results within a family of models as a single curve.

2

Figure 1 suggests several conclusions. First, it reinforces the conclusion that pruning can improve the time or space vs accuracy tradeoff of a given architecture, sometimes even increasing the accuracy. Second, it suggests that pruning generally does not help as much as switching to a better architecture. Finally, it suggests that pruning is more effective for architectures that are less efficient to begin with.
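The normalization used for Figure 1 (footnote 1) can be sketched as follows, assuming each hypothetical report records an architecture name, the paper's own initial size (or `None` when not reported), and the reported fraction of the original size that remains after pruning. The architecture names and numbers are placeholders, not data from the corpus.

```python
from statistics import median
from typing import Dict, List, Optional, TypedDict


class Report(TypedDict):
    arch: str
    initial: Optional[float]     # paper's own unpruned size (None if not reported)
    fraction_remaining: float    # pruned size as a fraction of the original


def normalize_sizes(reports: List[Report]) -> List[float]:
    # Collect every initial size reported for each architecture.
    initial_by_arch: Dict[str, List[float]] = {}
    for r in reports:
        if r["initial"] is not None:
            initial_by_arch.setdefault(r["arch"], []).append(r["initial"])
    # Standardized initial value: the median across papers for that architecture.
    standard = {arch: median(vals) for arch, vals in initial_by_arch.items()}
    # Normalized size: reported fraction times the standardized initial value.
    return [r["fraction_remaining"] * standard[r["arch"]] for r in reports]


# Hypothetical placeholder reports, not data from the corpus.
reports: List[Report] = [
    {"arch": "arch-A", "initial": 100.0, "fraction_remaining": 0.5},
    {"arch": "arch-A", "initial": 110.0, "fraction_remaining": 0.1},
    {"arch": "arch-A", "initial": None, "fraction_remaining": 0.2},
]
print(normalize_sizes(reports))
```

Papers that omit their baseline (the third report) still get a normalized size, which is the point of the scheme; an architecture for which no paper reports a baseline would raise a `KeyError` here.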

4 MISSING CONTROLLED COMPARISONS

While there do appear to be a few general and consistent findings in the pruning literature (see the previous section), by far the clearest takeaway is that pruning papers rarely make direct and controlled comparisons to existing methods. This lack of comparisons stems largely from a lack of experimental standardization and the resulting fragmentation in reported results. This fragmentation makes it difficult for even the most committed authors to compare to more than a few existing methods.

4.1 Omission of Comparison

Many papers claim to advance the state of the art, but don't compare to other methods (including many published ones) that make the same claim.

Ignoring Pre-2010s Methods. There was already a rich body of work on neural network pruning by the mid 1990s (see, e.g., Reed's survey (Reed, 1993)), which has been almost completely ignored except for LeCun's Optimal Brain Damage (LeCun et al., 1990) and Hassibi's Optimal Brain Surgeon (Hassibi et al., 1993). Indeed, multiple authors have rediscovered existing methods or aspects thereof, with Han et al. (2015) reintroducing the magnitude-based pruning of Janowsky (1989), Lee et al. (2019b) reintroducing the saliency heuristic of Mozer & Smolensky (1989a), and He et al. (2018a) reintroducing the practice of "reviving" previously pruned weights described in Tresp et al. (1997).

¹ Since many pruning papers report only change in accuracy or amount of pruning, without giving baseline numbers, we normalize all pruning results to have accuracies and model sizes/FLOPs as if they had begun with the same model. Concretely, this means multiplying the reported fraction of pruned size/FLOPs by a standardized initial value. This value is set to the median initial size or number of FLOPs reported for that architecture across all papers. This normalization scheme is not perfect, but does help control for different methods beginning with different baseline accuracies.

² The EfficientNet family is given explicitly in the original paper (Tan & Le, 2019), the ResNet family consists of ResNet-18, ResNet-34, ResNet-50, etc., and the VGG family consists of VGG-{11, 13, 16, 19}. There are no pruned EfficientNets since EfficientNet was published too recently. Results for non-pruned models are taken from (Tan & Le, 2019) and (Bianco et al., 2018).

[Figure 1 plot: "Speed and Size Tradeoffs for Original and Pruned Models". Panels: Top-1 accuracy (%) vs number of parameters (log scale, 10^6 to 10^8); Top-5 accuracy (%) vs number of FLOPs (log scale, 10^9 to 10^10). Series: MobileNet-v2 (2018), MobileNet-v2 Pruned, ResNet (2016), ResNet Pruned, VGG (2014), VGG Pruned, EfficientNet (2019).]

Figure 1: Size and speed vs accuracy tradeoffs for different pruning methods and families of architectures. Pruned models sometimes outperform the original architecture, but rarely outperform a better architecture.

Ignoring Recent Methods. Even when considering only post-2010 approaches, there are still virtually no methods that have been shown to outperform all existing "state-of-the-art" methods. This follows from the fact, depicted in the top plot of Figure 2, that there are dozens of modern papers (including many affirmed through peer review) that have never been compared to by any later study.

A related problem is that papers tend to compare to few existing methods. In the lower plot of Figure 2, we see that more than a fourth of our corpus does not compare to any previously proposed pruning method, and another fourth compares to only one. Nearly all papers compare to three or fewer. This might be adequate if there were a clear progression of methods with one or two "best" methods at any given time, but this is not the case.
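Both statistics in Figure 2 can be computed from the same "compares to" graph. A sketch with hypothetical paper identifiers: the histogram counts how many prior methods each paper compares to, and the second output lists papers that no other paper compares to. A real analysis would also account for publication dates, since the newest papers have had no chance to be compared to.

```python
from collections import Counter
from typing import Dict, List, Tuple


def comparison_stats(compares_to: Dict[str, List[str]]) -> Tuple[Counter, List[str]]:
    papers = set(compares_to) | {q for qs in compares_to.values() for q in qs}
    # How many distinct prior methods each paper compares to.
    histogram = Counter(len(set(compares_to.get(p, []))) for p in papers)
    # Papers that no other paper in the graph compares to.
    compared_to = {q for qs in compares_to.values() for q in qs}
    never_compared_to = sorted(papers - compared_to)
    return histogram, never_compared_to


# Hypothetical graph: "P3" -> ["P1", "P2"] means P3 compares to P1 and P2.
graph = {"P1": [], "P2": ["P1"], "P3": ["P1", "P2"], "P4": ["P2"], "P5": []}
histogram, never = comparison_stats(graph)
print(sorted(histogram.items()))  # [(0, 2), (1, 2), (2, 1)]
print(never)                      # ['P3', 'P4', 'P5']
```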

4.2 Dataset and Architecture Fragmentation

Among 81 papers, we found results using 49 datasets, 132 architectures, and 195 (dataset, architecture) combinations. As shown in Table 1, even the most common combination of dataset and architecture, VGG-16 on ImageNet³ (Deng et al., 2009), is used in only 22 out of 81 papers. Moreover, three of the top six most common combinations involve MNIST (LeCun et al., 1998a). As Gale et al. (2019) and others have argued, using larger datasets and models is essential when assessing how well a method works for real-

³ We adopt the common practice of referring to the ILSVRC2012 training and validation sets as "ImageNet."
