
Cheat Sheet Collection: PySpark Cheat Sheet - Spark in Python


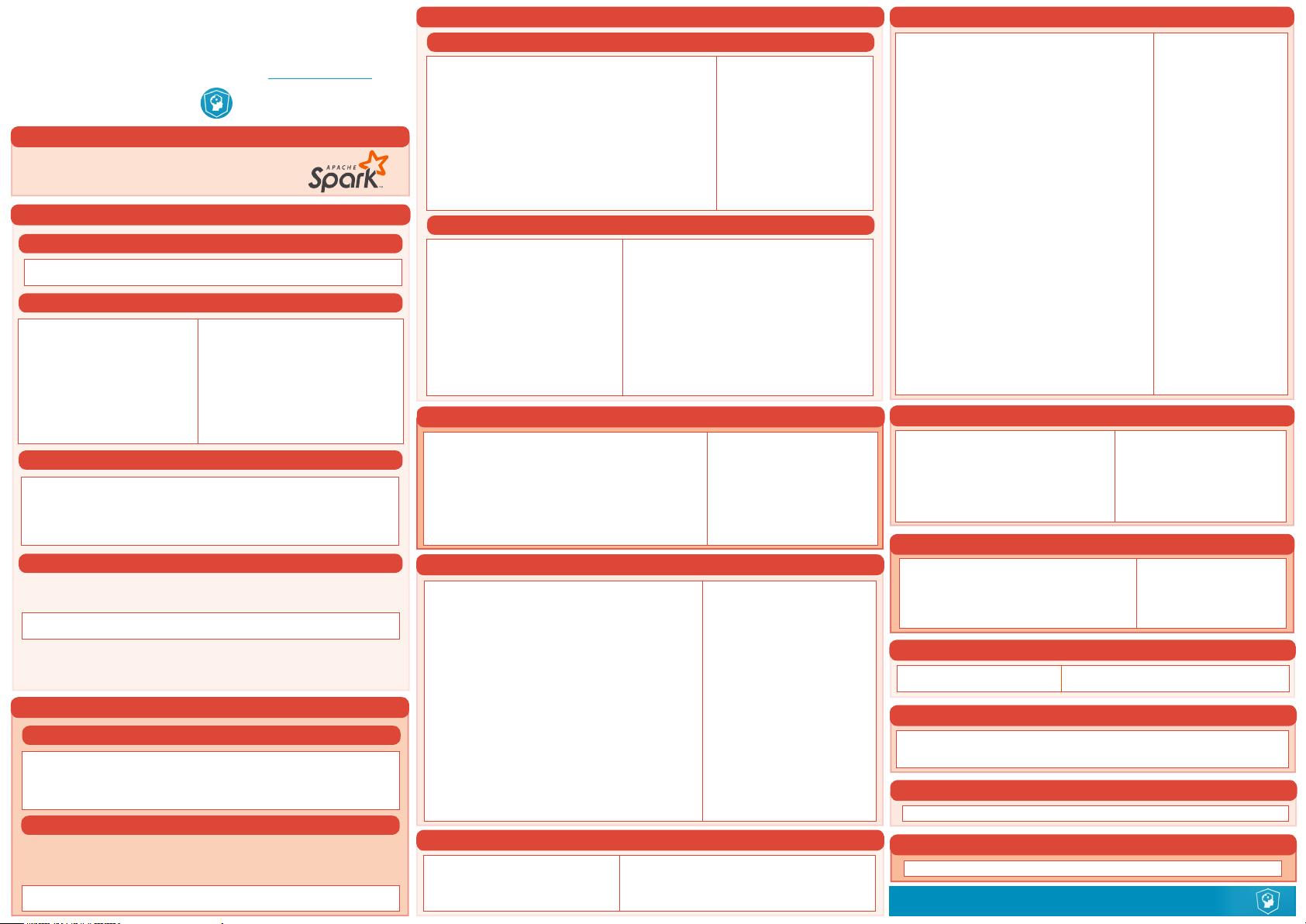

Python For Data Science Cheat Sheet

PySpark - RDD Basics

Learn Python for data science Interactively at www.DataCamp.com


Spark

PySpark is the Spark Python API that exposes the Spark programming model to Python.

Initializing Spark

SparkContext

>>> from pyspark import SparkContext
>>> sc = SparkContext(master = 'local[2]')

Loading Data

Parallelized Collections

>>> rdd = sc.parallelize([('a',7),('a',2),('b',2)])
>>> rdd2 = sc.parallelize([('a',2),('d',1),('b',1)])
>>> rdd3 = sc.parallelize(range(100))
>>> rdd4 = sc.parallelize([("a",["x","y","z"]),
...                        ("b",["p", "r"])])

Applying Functions

>>> rdd.map(lambda x: x+(x[1],x[0])).collect()  # Apply a function to each RDD element
[('a',7,7,'a'),('a',2,2,'a'),('b',2,2,'b')]
>>> rdd5 = rdd.flatMap(lambda x: x+(x[1],x[0]))  # Apply a function to each RDD element and flatten the result
>>> rdd5.collect()
['a',7,7,'a','a',2,2,'a','b',2,2,'b']
>>> rdd4.flatMapValues(lambda x: x).collect()  # Apply a flatMap function to each (key,value) pair of rdd4 without changing the keys
[('a','x'),('a','y'),('a','z'),('b','p'),('b','r')]
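The difference between map, flatMap and flatMapValues can be easier to see without a cluster. The following is a plain-Python sketch of the semantics only, not Spark code: the lists `pairs` and `nested` stand in for rdd and rdd4, and the helper name `extend` is made up for this illustration.

```python
from itertools import chain

# Plain lists standing in for rdd and rdd4 (assumption: same sample data).
pairs = [('a', 7), ('a', 2), ('b', 2)]
nested = [('a', ['x', 'y', 'z']), ('b', ['p', 'r'])]


def extend(x):
    # Append the swapped pair, like the lambda used above.
    return x + (x[1], x[0])


# map: exactly one output element per input element.
mapped = [extend(x) for x in pairs]

# flatMap: apply the function, then concatenate the resulting iterables.
flat_mapped = list(chain.from_iterable(extend(x) for x in pairs))

# flatMapValues with an identity function: flatten each value, repeat its key.
flat_map_values = [(key, item) for key, values in nested for item in values]

print(mapped)
print(flat_mapped)
print(flat_map_values)
```

The three printed lists match the outputs listed for map, flatMap and flatMapValues in this section.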

Inspect SparkContext

>>> sc.version  # Retrieve SparkContext version
>>> sc.pythonVer  # Retrieve Python version
>>> sc.master  # Master URL to connect to
>>> str(sc.sparkHome)  # Path where Spark is installed on worker nodes
>>> str(sc.sparkUser())  # Retrieve name of the Spark user running SparkContext
>>> sc.appName  # Return application name
>>> sc.applicationId  # Retrieve application ID
>>> sc.defaultParallelism  # Return default level of parallelism
>>> sc.defaultMinPartitions  # Default minimum number of partitions for RDDs

Configuration

>>> from pyspark import SparkConf, SparkContext
>>> conf = (SparkConf()
...         .setMaster("local")
...         .setAppName("My app")
...         .set("spark.executor.memory", "1g"))
>>> sc = SparkContext(conf = conf)

Using The Shell

In the PySpark shell, a special interpreter-aware SparkContext is already created in the variable called sc.

$ ./bin/spark-shell --master local[2]
$ ./bin/pyspark --master local[4] --py-files code.py

Set which master the context connects to with the --master argument, and add Python .zip, .egg or .py files to the runtime path by passing a comma-separated list to --py-files.

Execution

$ ./bin/spark-submit examples/src/main/python/pi.py

Stopping SparkContext

>>> sc.stop()

Saving

>>> rdd.saveAsTextFile("rdd.txt")
>>> rdd.saveAsHadoopFile("hdfs://namenodehost/parent/child",
...                      'org.apache.hadoop.mapred.TextOutputFormat')

Retrieving RDD Information

Basic Information

>>> rdd.getNumPartitions()  # List the number of partitions
>>> rdd.count()  # Count RDD instances
3
>>> rdd.countByKey()  # Count RDD instances by key
defaultdict(<type 'int'>,{'a':2,'b':1})
>>> rdd.countByValue()  # Count RDD instances by value
defaultdict(<type 'int'>,{('b',2):1,('a',2):1,('a',7):1})
>>> rdd.collectAsMap()  # Return (key,value) pairs as a dictionary
{'a': 2,'b': 2}
>>> rdd3.sum()  # Sum of RDD elements
4950
>>> sc.parallelize([]).isEmpty()  # Check whether RDD is empty
True

Summary

>>> rdd3.max()  # Maximum value of RDD elements
99
>>> rdd3.min()  # Minimum value of RDD elements
0
>>> rdd3.mean()  # Mean value of RDD elements
49.5
>>> rdd3.stdev()  # Standard deviation of RDD elements
28.866070047722118
>>> rdd3.variance()  # Compute variance of RDD elements
833.25
>>> rdd3.histogram(3)  # Compute histogram by bins
([0,33,66,99],[33,33,34])
>>> rdd3.stats()  # Summary statistics (count, mean, stdev, max & min)
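The counts and summary figures in this part of the sheet can be cross-checked in plain Python. The sketch below is not Spark code. It assumes the same sample data as rdd and rdd3, uses population variance and standard deviation (which is what the listed 833.25 and 28.866... correspond to), and treats the last histogram bucket as closed on the right. All variable names are illustrative.

```python
from collections import Counter
import statistics

pairs = [('a', 7), ('a', 2), ('b', 2)]   # stands in for rdd
numbers = list(range(100))               # stands in for rdd3

# countByKey / countByValue behave like counting keys or whole elements.
count_by_key = Counter(key for key, _ in pairs)
count_by_value = Counter(pairs)

# collectAsMap: later pairs overwrite earlier ones with the same key.
as_map = dict(pairs)

# Population statistics (divide by n, not n - 1).
mean = statistics.mean(numbers)
variance = statistics.pvariance(numbers)
stdev = statistics.pstdev(numbers)

# histogram(3): evenly spaced edges between min and max.
# Integer arithmetic keeps the bucket index exact for this integer data.
low, high, buckets = min(numbers), max(numbers), 3
edges = [low + i * (high - low) // buckets for i in range(buckets + 1)]
counts = [0] * buckets
for value in numbers:
    index = min((value - low) * buckets // (high - low), buckets - 1)
    counts[index] += 1

print(dict(count_by_key), as_map)
print(mean, variance, stdev)
print(edges, counts)
```

The value 99 falls into the last bucket because that bucket includes its upper edge, which is why the counts are 33, 33 and 34 rather than three equal bins.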

Repartitioning

>>> rdd.repartition(4)  # New RDD with 4 partitions
>>> rdd.coalesce(1)  # Decrease the number of partitions in the RDD to 1

External Data

Read either a text file from HDFS, a local file system, or any Hadoop-supported file system URI with textFile(), or read a directory of text files with wholeTextFiles().

>>> textFile = sc.textFile("/my/directory/*.txt")
>>> textFile2 = sc.wholeTextFiles("/my/directory/")

Sort

>>> rdd2.sortBy(lambda x: x[1]).collect()  # Sort RDD by given function
[('d',1),('b',1),('a',2)]
>>> rdd2.sortByKey().collect()  # Sort (key, value) RDD by key
[('a',2),('b',1),('d',1)]

Mathematical Operations

>>> rdd.subtract(rdd2).collect()  # Return each rdd value not contained in rdd2
[('b',2),('a',7)]
>>> rdd2.subtractByKey(rdd).collect()  # Return each (key,value) pair of rdd2 with no matching key in rdd
[('d', 1)]
>>> rdd.cartesian(rdd2).collect()  # Return the Cartesian product of rdd and rdd2
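The set-like operations listed under Mathematical Operations differ in what they compare: subtract compares whole elements, while subtractByKey compares keys only. A plain-Python sketch of that distinction, assuming the same sample data as rdd and rdd2 (the names `left` and `right` are illustrative, and this is not Spark code):

```python
from itertools import product

left = [('a', 7), ('a', 2), ('b', 2)]    # stands in for rdd
right = [('a', 2), ('d', 1), ('b', 1)]   # stands in for rdd2

# subtract: keep elements of left that do not appear, as whole pairs, in right.
subtracted = [pair for pair in left if pair not in right]

# subtractByKey: keep pairs of right whose key never occurs in left.
left_keys = {key for key, _ in left}
subtracted_by_key = [(key, value) for key, value in right if key not in left_keys]

# cartesian: every combination of one left element with one right element.
cartesian = list(product(left, right))

print(sorted(subtracted))
print(subtracted_by_key)
print(len(cartesian))
```

Spark does not guarantee the order of the collected result, so the sketch sorts before printing the subtract output.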

Iterating

>>> def g(x): print(x)
>>> rdd.foreach(g)  # Apply a function to all RDD elements
('a', 7)
('b', 2)
('a', 2)

Reshaping Data

Reducing

>>> rdd.reduceByKey(lambda x,y : x+y).collect()  # Merge the rdd values for each key
[('a',9),('b',2)]
>>> rdd.reduce(lambda a, b: a + b)  # Merge the rdd values
('a',7,'a',2,'b',2)

Grouping by

>>> rdd3.groupBy(lambda x: x % 2).mapValues(list).collect()  # Return RDD of grouped values
>>> rdd.groupByKey().mapValues(list).collect()  # Group rdd by key
[('a',[7,2]),('b',[2])]

Aggregating

>>> seqOp = (lambda x,y: (x[0]+y,x[1]+1))
>>> combOp = (lambda x,y:(x[0]+y[0],x[1]+y[1]))
>>> rdd3.aggregate((0,0),seqOp,combOp)  # Aggregate RDD elements of each partition and then the results
(4950,100)
>>> rdd.aggregateByKey((0,0),seqOp,combOp).collect()  # Aggregate values of each RDD key
[('a',(9,2)), ('b',(2,1))]
>>> from operator import add
>>> rdd3.fold(0,add)  # Aggregate the elements of each partition, and then the results
4950
>>> rdd.foldByKey(0, add).collect()  # Merge the values for each key
[('a',9),('b',2)]
>>> rdd3.keyBy(lambda x: x+x).collect()  # Create tuples of RDD elements by applying a function
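The two-function form of aggregate is the least obvious of the reshaping calls: one function folds elements within a partition, the other merges the per-partition results. The sketch below imitates that in plain Python alongside reduceByKey and groupByKey. It is not Spark code; the helper names and the split into four partitions are assumptions made for illustration, since Spark chooses its own partitioning.

```python
from collections import defaultdict
from functools import reduce

pairs = [('a', 7), ('a', 2), ('b', 2)]   # stands in for rdd
numbers = list(range(100))               # stands in for rdd3


def reduce_by_key(func, items):
    # Fold the values of each key with a binary function.
    merged = {}
    for key, value in items:
        merged[key] = func(merged[key], value) if key in merged else value
    return sorted(merged.items())


def group_by_key(items):
    # Collect the values of each key into a list.
    groups = defaultdict(list)
    for key, value in items:
        groups[key].append(value)
    return sorted(groups.items())


def aggregate(zero, seq_op, comb_op, partitions):
    # seq_op runs inside each partition, comb_op merges the partial results.
    partials = [reduce(seq_op, part, zero) for part in partitions]
    return reduce(comb_op, partials, zero)


def seq_op(acc, value):
    return (acc[0] + value, acc[1] + 1)


def comb_op(left, right):
    return (left[0] + right[0], left[1] + right[1])


# Hypothetical partitioning into 4 slices.
partitions = [numbers[i::4] for i in range(4)]
total, count = aggregate((0, 0), seq_op, comb_op, partitions)

print(reduce_by_key(lambda x, y: x + y, pairs))
print(group_by_key(pairs))
print(total, count, total / count)
```

Because the accumulator carries both a running sum and a running count, the mean falls out as total / count, and the result does not depend on how the data is split as long as the zero value is neutral for both functions.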

Selecting Data

Getting

>>> rdd.collect()  # Return a list with all RDD elements
[('a', 7), ('a', 2), ('b', 2)]
>>> rdd.take(2)  # Take first 2 RDD elements
[('a', 7), ('a', 2)]
>>> rdd.first()  # Take first RDD element
('a', 7)
>>> rdd.top(2)  # Take top 2 RDD elements
[('b', 2), ('a', 7)]
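Note that take(2) and top(2) return different elements: take follows the existing order, while top returns the largest elements in descending order. A plain-Python sketch of that difference, assuming the same sample data as rdd (this is not Spark code, and the variable names are illustrative):

```python
import heapq
from itertools import islice

pairs = [('a', 7), ('a', 2), ('b', 2)]   # stands in for rdd

first_two = list(islice(pairs, 2))   # like take(2): first elements in order
first = next(iter(pairs))            # like first(): the first element
top_two = heapq.nlargest(2, pairs)   # like top(2): largest elements, descending

print(first_two)
print(first)
print(top_two)
```

Tuples compare element by element, so ('b', 2) ranks above ('a', 7) because 'b' sorts after 'a'.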

Sampling

>>> rdd3.sample(False, 0.15, 81).collect()  # Return sampled subset of rdd3
[3,4,27,31,40,41,42,43,60,76,79,80,86,97]

Filtering

>>> rdd.filter(lambda x: "a" in x).collect()  # Filter the RDD
[('a',7),('a',2)]
>>> rdd5.distinct().collect()  # Return distinct RDD values
['a',2,'b',7]
>>> rdd.keys().collect()  # Return (key,value) RDD's keys
['a', 'a', 'b']
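The filtering calls map closely onto ordinary Python comprehensions. The sketch below is plain Python rather than Spark code and assumes the same sample data as rdd and rdd5; the variable names are illustrative.

```python
pairs = [('a', 7), ('a', 2), ('b', 2)]                    # stands in for rdd
flat = ['a', 7, 7, 'a', 'a', 2, 2, 'a', 'b', 2, 2, 'b']   # stands in for rdd5

# filter: `"a" in x` is tuple membership, so a pair matches when its key
# or its value equals "a".
filtered = [pair for pair in pairs if "a" in pair]

# distinct: drop duplicates. dict.fromkeys keeps first-seen order here,
# whereas the order of a collected Spark result is not guaranteed.
distinct = list(dict.fromkeys(flat))

# keys: the first item of every pair, duplicates included.
keys = [key for key, _ in pairs]

print(filtered)
print(distinct)
print(keys)
```

The distinct values are the same set as in the listing above, although the order differs because Spark makes no ordering promise for distinct().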
