xviii Preface

learning more about floating-point arithmetic. Some will skip parts of Chapter 3,

either because they don’t need them or because they offer a review. However, we

introduce the running example of matrix multiply in this chapter, showing how

subword parallelism offers a fourfold improvement, so don’t skip Sections 3.6 to 3.8.

Chapter 4 explains pipelined processors. Sections 4.1, 4.5, and 4.10 give overviews

and Section 4.12 gives the next performance boost for matrix multiply for those with

a software focus. Those with a hardware focus, however, will find that this chapter

presents core material; they may also, depending on their background, want to read

Appendix C on logic design first. The last chapter, on multicores, multiprocessors,

and clusters, is mostly new content and should be read by everyone. It was

significantly reorganized in this edition to make the flow of ideas more natural

and to include much more depth on GPUs, warehouse-scale computers, and the

hardware-software interface of network interface cards that are key to clusters.

The first of the six goals for this fifth edition was to demonstrate the importance

of understanding modern hardware to get good performance and energy efficiency

with a concrete example. As mentioned above, we start with subword parallelism

in Chapter 3 to improve matrix multiply by a factor of 4. We double performance

in Chapter 4 by unrolling the loop to demonstrate the value of instruction-level

parallelism. Chapter 5 doubles performance again by optimizing for caches using

blocking. Finally, Chapter 6 demonstrates a speedup of 14 from 16 processors by

using thread-level parallelism. All four optimizations in total add just 24 lines of C

code to our initial matrix multiply example.
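To give a feel for what one of these steps looks like, here is a minimal sketch of the cache-blocking idea next to a plain triple loop. It is not the listing from Chapter 5: the function names, the row-major layout, and the tile size BLOCK are assumptions chosen for illustration, and a real tile size would be tuned to the cache of the machine at hand.

```c
#include <assert.h>
#include <stddef.h>

#define BLOCK 4 /* hypothetical tile size; tune to the cache in practice */

/* Baseline: C += A * B for n x n matrices stored in row-major order. */
void dgemm_naive(size_t n, const double *A, const double *B, double *C)
{
    for (size_t i = 0; i < n; ++i)
        for (size_t j = 0; j < n; ++j) {
            double cij = C[i * n + j];
            for (size_t k = 0; k < n; ++k)
                cij += A[i * n + k] * B[k * n + j];
            C[i * n + j] = cij;
        }
}

/* Update one BLOCK x BLOCK tile of C from one tile of A and one tile of B. */
static void do_block(size_t n, size_t si, size_t sj, size_t sk,
                     const double *A, const double *B, double *C)
{
    for (size_t i = si; i < si + BLOCK; ++i)
        for (size_t j = sj; j < sj + BLOCK; ++j) {
            double cij = C[i * n + j];
            for (size_t k = sk; k < sk + BLOCK; ++k)
                cij += A[i * n + k] * B[k * n + j];
            C[i * n + j] = cij;
        }
}

/* Blocked version: same arithmetic, but the loops walk the matrices tile by
   tile so that the data touched by do_block can stay in the cache.
   This sketch assumes n is a multiple of BLOCK. */
void dgemm_blocked(size_t n, const double *A, const double *B, double *C)
{
    assert(n % BLOCK == 0);
    for (size_t si = 0; si < n; si += BLOCK)
        for (size_t sj = 0; sj < n; sj += BLOCK)
            for (size_t sk = 0; sk < n; sk += BLOCK)
                do_block(n, si, sj, sk, A, B, C);
}
```

Both functions compute the same result; only the order in which memory is visited changes, which is why an optimization of this kind costs just a few extra lines of C.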

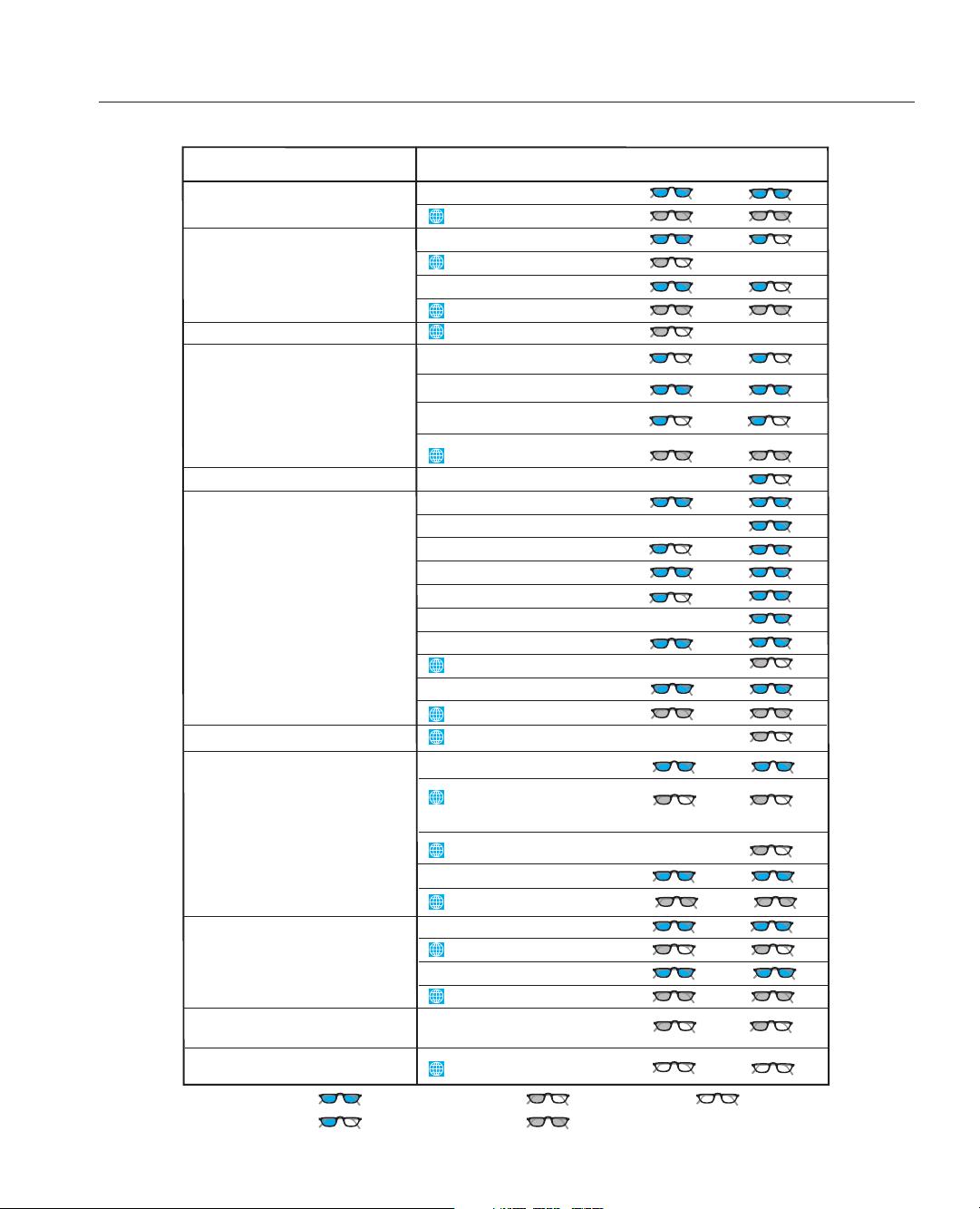

The second goal was to help readers separate the forest from the trees by

identifying eight great ideas of computer architecture early and then pointing out

all the places they occur throughout the rest of the book. We use (hopefully) easy-to-remember

margin icons and highlight the corresponding word in the text to

remind readers of these eight themes. There are nearly 100 citations in the book.

No chapter has fewer than seven examples of great ideas, and no idea is cited fewer than

five times. Performance via parallelism, pipelining, and prediction are the three

most popular great ideas, followed closely by Moore’s Law. The processor chapter

(4) is the one with the most examples, which is not a surprise since it probably

received the most attention from computer architects. The one great idea found in

every chapter is performance via parallelism, which is a pleasant observation given

the recent emphasis on parallelism in the field and in editions of this book.

The third goal was to recognize the generation change in computing from the

PC era to the PostPC era in this edition's examples and material. Thus,

Chapter 1 dives into the guts of a tablet computer rather than a PC, and Chapter 6

describes the computing infrastructure of the cloud. We also feature the ARM,

which is the instruction set of choice in the personal mobile devices of the PostPC

era, as well as the x86 instruction set that dominated the PC era and (so far)

dominates cloud computing.

The fourth goal was to spread the I/O material throughout the book rather

than have it in its own chapter, much as we spread parallelism throughout all the

chapters in the fourth edition. Hence, I/O material in this edition can be found in