Parallel Programming with OmniThreadLib

"Parallel Programming and Using OmniThreadLib"

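When developing parallel programs in Delphi XE, OmniThreadLibrary is a very useful open-source library. Its author designed it to simplify multithreaded programming and to improve application performance and responsiveness. This article covers the basics of multithreaded programming and then shows how to use OmniThreadLibrary to achieve those goals.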

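First, let's look at what multithreaded programming is. Multithreading means running several threads inside one process at the same time (truly in parallel on a multi-core CPU, interleaved on a single core). Each thread has its own CPU state, stack, and local variables. This lets a program work on different tasks concurrently and make better use of the machine's resources. When a program starts, the operating system creates a process that holds the program's basic resources, such as allocated memory, open files, and window handles. CPU state, however, belongs to threads, and initially only one thread, the main thread, is created together with the process.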
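To make the "one main thread per process, plus any threads you create" idea concrete, here is a minimal sketch that uses only the Delphi RTL (`TThread` from `System.Classes`, not OmniThreadLibrary). It assumes Delphi XE2 or later because of the namespaced unit name (on Delphi XE, write `Classes` instead). The function names `OnMainThread` and `WorkerRanOnOtherThread` are illustrative, not part of any library.

```delphi
program ThreadBasics;

{$APPTYPE CONSOLE}

uses
  System.Classes; // TThread

// True when the caller runs on the thread created together with the process.
function OnMainThread: Boolean;
begin
  Result := TThread.CurrentThread.ThreadID = MainThreadID;
end;

// Starts one extra thread, waits for it to finish, and reports whether the
// code inside it ran on a thread other than the main thread.
function WorkerRanOnOtherThread: Boolean;
var
  Worker: TThread;
  WorkerID: TThreadID;
begin
  WorkerID := 0;
  Worker := TThread.CreateAnonymousThread(
    procedure
    begin
      // Executes on the new thread, which has its own stack and CPU state
      WorkerID := TThread.CurrentThread.ThreadID;
    end);
  Worker.FreeOnTerminate := False; // keep the object alive so WaitFor/Free are safe
  Worker.Start;
  Worker.WaitFor;
  Worker.Free;
  Result := (WorkerID <> 0) and (WorkerID <> MainThreadID);
end;

begin
  Writeln('Running on main thread: ', OnMainThread);
  Writeln('Worker used another thread: ', WorkerRanOnOtherThread);
end.
```

`CreateAnonymousThread` returns a suspended thread with `FreeOnTerminate` set to `True`; switching it off before `Start` is what makes the later `WaitFor` and `Free` calls safe.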

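Modern operating systems allow multiple threads of execution within the same process, and a program takes advantage of this by creating additional background threads in its code. This lets the program handle work in parallel, for example processing data while the user interface keeps updating, which gives a better user experience.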
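The pattern described above (slow work on a background thread, results handed back to the main thread) can be sketched with the plain RTL as follows. This is an illustration under stated assumptions: Delphi XE2 or later, a console program standing in for a GUI, and a hypothetical `SlowSum` function representing the expensive work. `TThread.Queue` schedules a procedure to run on the main thread; in a console program the main thread must call `CheckSynchronize` to execute it, whereas a VCL application does this in its message loop.

```delphi
program BackgroundWork;

{$APPTYPE CONSOLE}

uses
  System.Classes; // TThread, CheckSynchronize

// Hypothetical stand-in for slow work that should not block the main thread.
function SlowSum(N: Integer): Int64;
var
  I: Integer;
begin
  Result := 0;
  for I := 1 to N do
    Inc(Result, I);
end;

// Runs SlowSum on a background thread and hands the result back through
// TThread.Queue, so the assignment to Value executes on the main thread.
function SumInBackground(N: Integer): Int64;
var
  Done: Boolean;
  Value: Int64;
begin
  Done := False;
  Value := 0;
  TThread.CreateAnonymousThread(
    procedure
    var
      Local: Int64;
    begin
      Local := SlowSum(N);           // runs on the background thread
      TThread.Queue(nil,
        procedure
        begin
          Value := Local;            // runs on the main thread
          Done := True;
        end);
    end).Start;                      // FreeOnTerminate is True by default here
  // The main thread stays free to do other things; here it just keeps
  // executing queued calls until the result has arrived.
  while not Done do
    CheckSynchronize(10);
  Result := Value;
end;

begin
  Writeln(SumInBackground(1000000));
end.
```

Passing `nil` as the first argument of `TThread.Queue` means the queued call is not tied to the worker object, so it still runs even though the worker frees itself right after queuing it.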

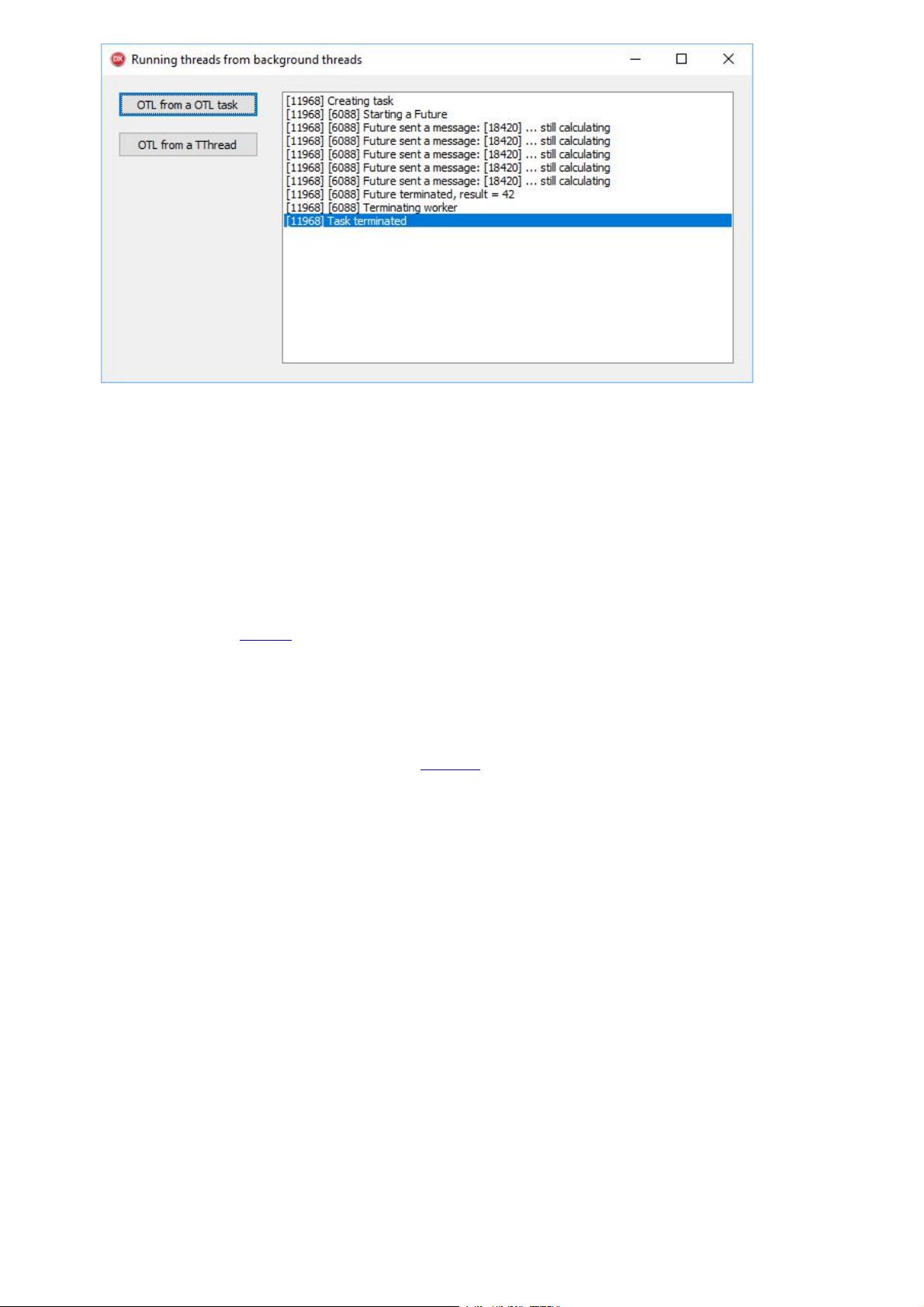

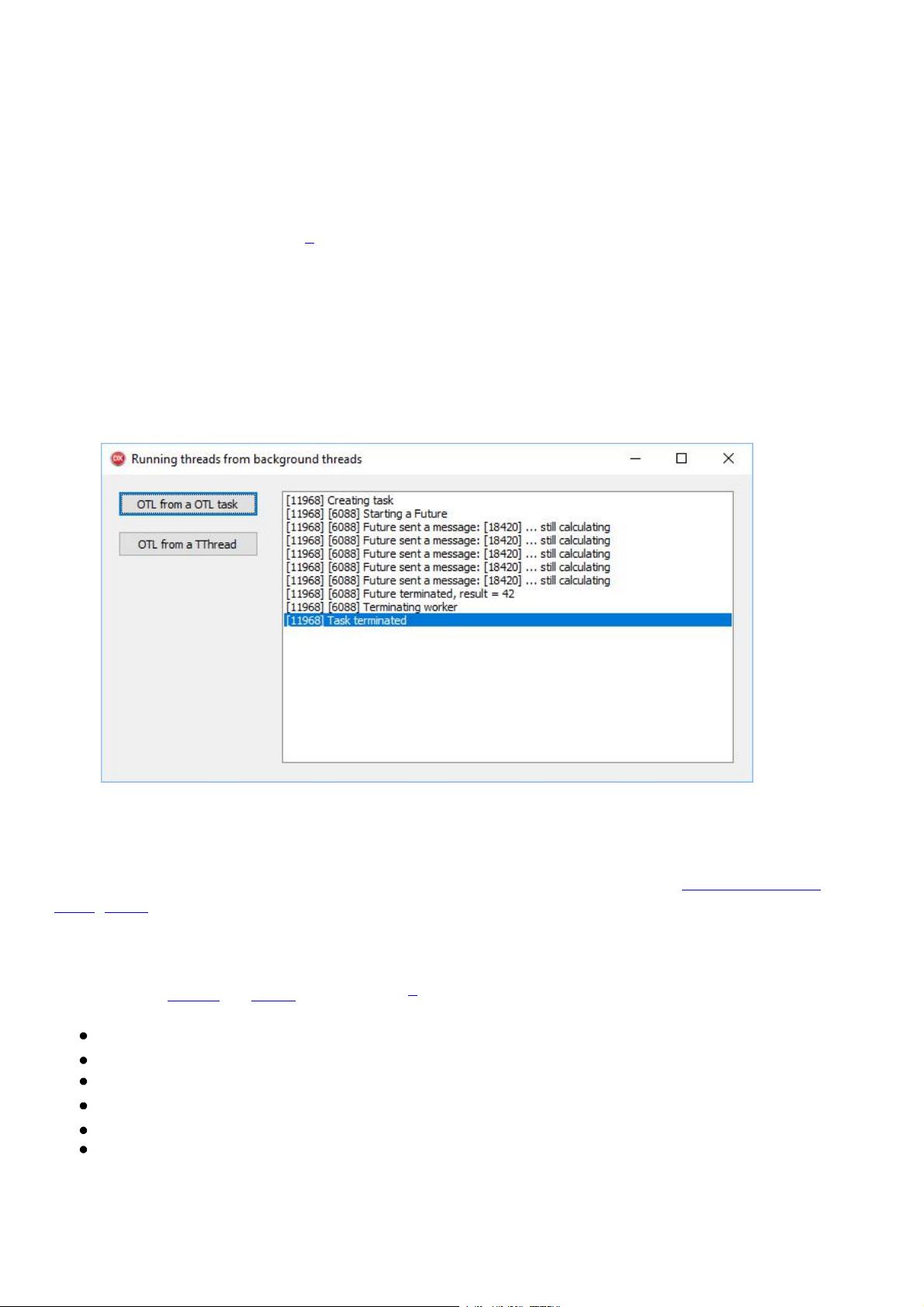

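OmniThreadLibrary was created to make this kind of multithreaded programming easier. It gives Delphi developers a simple API for creating, managing, and synchronizing threads. Its classes and components help you create thread pools, schedule tasks, communicate and synchronize between threads, and handle exceptions and errors raised in threads.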
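As an illustration of thread pools and task scheduling, the sketch below schedules several small tasks on an OmniThreadLibrary pool that runs at most two of them at a time. It assumes a recent OmniThreadLibrary (3.x) with the units `OtlTask`, `OtlTaskControl`, and `OtlThreadPool`, and Delphi XE2 or later for `Winapi.Windows`. `CreateThreadPool`, `MaxExecuting`, `SetParameter`, `Schedule`, `task.Param`, and `WaitFor` are library members as I understand them; check them against your OTL version. `SumOfSquaresOnPool` is a hypothetical name.

```delphi
program PoolDemo;

{$APPTYPE CONSOLE}

uses
  Winapi.Windows,  // INFINITE
  OtlTask,         // IOmniTask
  OtlTaskControl,  // CreateTask, IOmniTaskControl
  OtlThreadPool;   // CreateThreadPool, IOmniThreadPool

// Schedules one task per work item on a pool limited to 2 concurrent tasks,
// waits for all of them, and returns the sum of the squares they computed.
function SumOfSquaresOnPool(Count: Integer): Int64;
var
  Pool: IOmniThreadPool;
  Tasks: array of IOmniTaskControl;
  Results: array of Int64;
  I: Integer;
begin
  Pool := CreateThreadPool('Demo pool');
  Pool.MaxExecuting := 2;                   // further tasks wait in the pool's queue
  SetLength(Tasks, Count);
  SetLength(Results, Count);                // sized up front; tasks never resize it
  for I := 0 to Count - 1 do
    Tasks[I] := CreateTask(
      procedure (const task: IOmniTask)
      var
        Index: Integer;
      begin
        Index := task.Param['Index'].AsInteger;
        Results[Index] := Int64(Index) * Index; // each task writes only its own slot
      end)
      .SetParameter('Index', I)             // pass I by value instead of capturing it
      .Schedule(Pool);                      // run on the pool, not on a new thread
  for I := 0 to Count - 1 do
    Tasks[I].WaitFor(INFINITE);
  Result := 0;
  for I := 0 to Count - 1 do
    Inc(Result, Results[I]);
end;

begin
  Writeln(SumOfSquaresOnPool(10));
end.
```

The loop index goes through `SetParameter` because a Delphi anonymous method captures the variable `I` itself, not its current value, so every task would otherwise see whatever `I` holds when it runs.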

For example, OmniThreadLibrary makes it easy to run code in a new background task:

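```delphi
// Fragment of a VCL form unit
uses
  System.SysUtils, Vcl.Forms,
  OtlCommon,      // TOmniValue
  OtlComm,        // TOmniMessage
  OtlTask,        // IOmniTask
  OtlTaskControl; // CreateTask, IOmniTaskControl

const
  MSG_RESULT = 1; // message ID used to send the result back to the owner

type
  TfrmMain = class(TForm)
  private
    FWorker: IOmniTaskControl; // keeps the task alive while it runs
    procedure WorkerMessage(const task: IOmniTaskControl; const msg: TOmniMessage);
    procedure WorkerTerminated(const task: IOmniTaskControl);
  public
    procedure StartWorker;
  end;

procedure TfrmMain.StartWorker;
begin
  FWorker := CreateTask(
    procedure (const task: IOmniTask)
    var
      Data: Integer;
    begin
      // Runs in the background thread; ComputeExpensiveResult is your own function
      Data := ComputeExpensiveResult();
      // Send the result to the owner (main) thread as a message
      task.Comm.Send(MSG_RESULT, Data);
    end,
    'Worker')
    .OnMessage(WorkerMessage)       // handler runs in the main thread
    .OnTerminated(WorkerTerminated) // handler runs in the main thread
    .Run;                           // start the task in a new thread
end;

procedure TfrmMain.WorkerMessage(const task: IOmniTaskControl; const msg: TOmniMessage);
begin
  if msg.MsgID = MSG_RESULT then
    Caption := IntToStr(msg.MsgData.AsInteger); // safe: we are in the main thread
end;

procedure TfrmMain.WorkerTerminated(const task: IOmniTaskControl);
begin
  // Handle logic that should run after the task has finished
  FWorker := nil;
end;
```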

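In this example, `CreateTask` wraps the anonymous method in an OmniThreadLibrary task and returns an `IOmniTaskControl` interface, and `Run` starts the task in a new thread. `ComputeExpensiveResult()` stands for the computation you want to run in that thread. The task sends its result back with `task.Comm.Send`, and the `OnMessage` and `OnTerminated` handlers run in the main thread, so they can safely update the user interface and handle what should happen after the task finishes. Because these events are delivered through Windows messages, the owning thread must run a message loop, as the main thread of a VCL application does.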

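OmniThreadLibrary also provides classes such as `TOmniThreadPool`, which manages a set of reusable worker threads so that large numbers of concurrent tasks can be processed efficiently. There is also `TOmniBlockingCollection` for passing data between threads safely, and the task abstraction (`TOmniTask` together with the `IOmniTask` and `IOmniTaskControl` interfaces) for asynchronous work and callbacks.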
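To show what thread-safe data passing with `TOmniBlockingCollection` can look like, here is a minimal producer/consumer sketch. It assumes OmniThreadLibrary 3.x (units `OtlCommon`, `OtlCollections`, `OtlTask`, `OtlTaskControl`) and Delphi XE2 or later; `SumViaBlockingCollection` is a hypothetical name. A producer task adds values and then calls `CompleteAdding`; the calling thread consumes them with a `for..in` loop, which ends once the collection is marked complete and has been drained.

```delphi
program BlockingCollectionDemo;

{$APPTYPE CONSOLE}

uses
  Winapi.Windows,  // INFINITE
  OtlCommon,       // TOmniValue
  OtlCollections,  // IOmniBlockingCollection, TOmniBlockingCollection
  OtlTask,         // IOmniTask
  OtlTaskControl;  // CreateTask, IOmniTaskControl

// A producer task adds 1..Count to the collection; the calling thread
// consumes and sums the values until the producer signals completion.
function SumViaBlockingCollection(Count: Integer): Int64;
var
  Channel: IOmniBlockingCollection;
  Producer: IOmniTaskControl;
  Value: TOmniValue;
begin
  Channel := TOmniBlockingCollection.Create;
  Producer := CreateTask(
    procedure (const task: IOmniTask)
    var
      I: Integer;
    begin
      for I := 1 to Count do
        Channel.Add(I);        // thread-safe insert
      Channel.CompleteAdding;  // no more data will arrive
    end)
    .Run;
  Result := 0;
  // Blocks while the collection is empty; exits after CompleteAdding
  // once every value has been taken.
  for Value in Channel do
    Inc(Result, Value.AsInteger);
  Producer.WaitFor(INFINITE);
end;

begin
  Writeln(SumViaBlockingCollection(1000));
end.
```

Calling `CompleteAdding` is what lets the consumer loop terminate; without it, the `for..in` loop would keep waiting for more data.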

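Mastering multithreaded programming and OmniThreadLibrary helps you write more efficient, more responsive Delphi applications. With study and practice you can make full use of modern multi-core processors and improve your software's performance while keeping program complexity and maintenance costs down.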


