没有合适的资源?快使用搜索试试~ 我知道了~

首页Statistical Pattern Recognition: A Review

Statistical Pattern Recognition: A Review

经典的统计模式识别综述,英文版

Statistical Pattern Recognition: A Review

IEEE TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE

Statistical Pattern Recognition: A Review

Anil K. Jain, Fellow, IEEE, Robert P.W. Duin, and Jianchang Mao, Senior Member, IEEE

AbstractÐThe primary goal of pattern recognition is supervised or unsupervised classification. Among the various frameworks in

which pattern recognition has been traditionally formulated, the statistical approach has been most intensively studied and used in

practice. More recently, neural network techniques and methods imported from statistical learning theory have been receiving

increasing attention. The design of a recognition system requires careful attention to the following issues: definition of pattern classes,

sensing environment, pattern representation, feature extraction and selection, cluster analysis, classifier design and learning, selection

of training and test samples, and performance evaluation. In spite of almost 50 years of research and development in this field, the

general problem of recognizing complex patterns with arbitrary orientation, location, and scale remains unsolved. New and emerging

applications, such as data mining, web searching, retrieval of multimedia data, face recognition, and cursive handwriting recognition,

require robust and efficient pattern recognition techniques. The objective of this review paper is to summarize and compare some of

the well-known methods used in various stages of a pattern recognition system and identify research topics and applications which are

at the forefront of this exciting and challenging field.

Index TermsÐStatistical pattern recognition, classification, clustering, feature extraction, feature selection, error estimation, classifier

combination, neural networks.

æ

1INTRODUCTION

B

Y the time they are five years old, most children can

recognize digits and letters. Small characters, large

characters, handwritten, machine printed, or rotatedÐall

are easily recognized by the young. The characters may be

written on a cluttered background, on crumpled paper or

may even be partially occluded. We take this ability for

granted until we face the task of teaching a machine how to

do the same. Pattern recognition is the study of how

machines can observe the environment, learn to distinguish

patterns of interest from their background, and make sound

and reasonable decisions about the categories of the

patterns. In spite of almost 50 years of research, design of

a general purpose machine pattern recognizer remains an

elusive goal.

The best pattern recognizers in most instances are

humans, yet we do not understand how humans recognize

patterns. Ross [140] emphasizes the work of Nobel Laureate

Herbert Simon whose central finding was that pattern

recognition is critical in most human decision making tasks:

ªThe more relevant patterns at your disposal, the better

your decisions will be. This is hopeful news to proponents

of artificial intelligence, since computers can surely be

taught to recognize patterns. Indeed, successful computer

programs that help banks score credit applicants, help

doctors diagnose disease and help pilots land airplanes

depend in some way on pattern recognition... We need to

pay much more explicit attention to teaching pattern

recognition.º Our goal here is to introduce pattern recogni-

tion as the best possible way of utilizing available sensors,

processors, and domain knowledge to make decisions

automatically.

1.1 What is Pattern Recognition?

Automatic (machine) recognition, description, classifica-

tion, and grouping of patterns are important problems in a

variety of engineering and scientific disciplines such as

biology, psychology, medicine, marketing, computer vision,

artificial intelligence, and remote sensing. But what is a

pattern? Watanabe [163] defines a pattern ªas opposite of a

chaos; it is an entity, vaguely defined, that could be given a

name.º For example, a pattern could be a fingerprint image,

a handwritten cursive word, a human face, or a speech

signal. Given a pattern, its recognition/classification may

consist of one of the following two tasks [163]: 1) supervised

classification (e.g., discriminant analysis) in which the input

pattern is identified as a member of a predefined class,

2) unsupervised classification (e.g., clustering) in which the

pattern is assigned to a hitherto unknown class. Note that

the recognition problem here is being posed as a classifica-

tion or categorization task, where the classes are either

defined by the system designer (in supervised classifica-

tion) or are learned based on the similarity of patterns (in

unsupervised classification).

Interest in the area of pattern recognition has been

renewed recently due to emerging applications which are

not only challenging but also computationally more

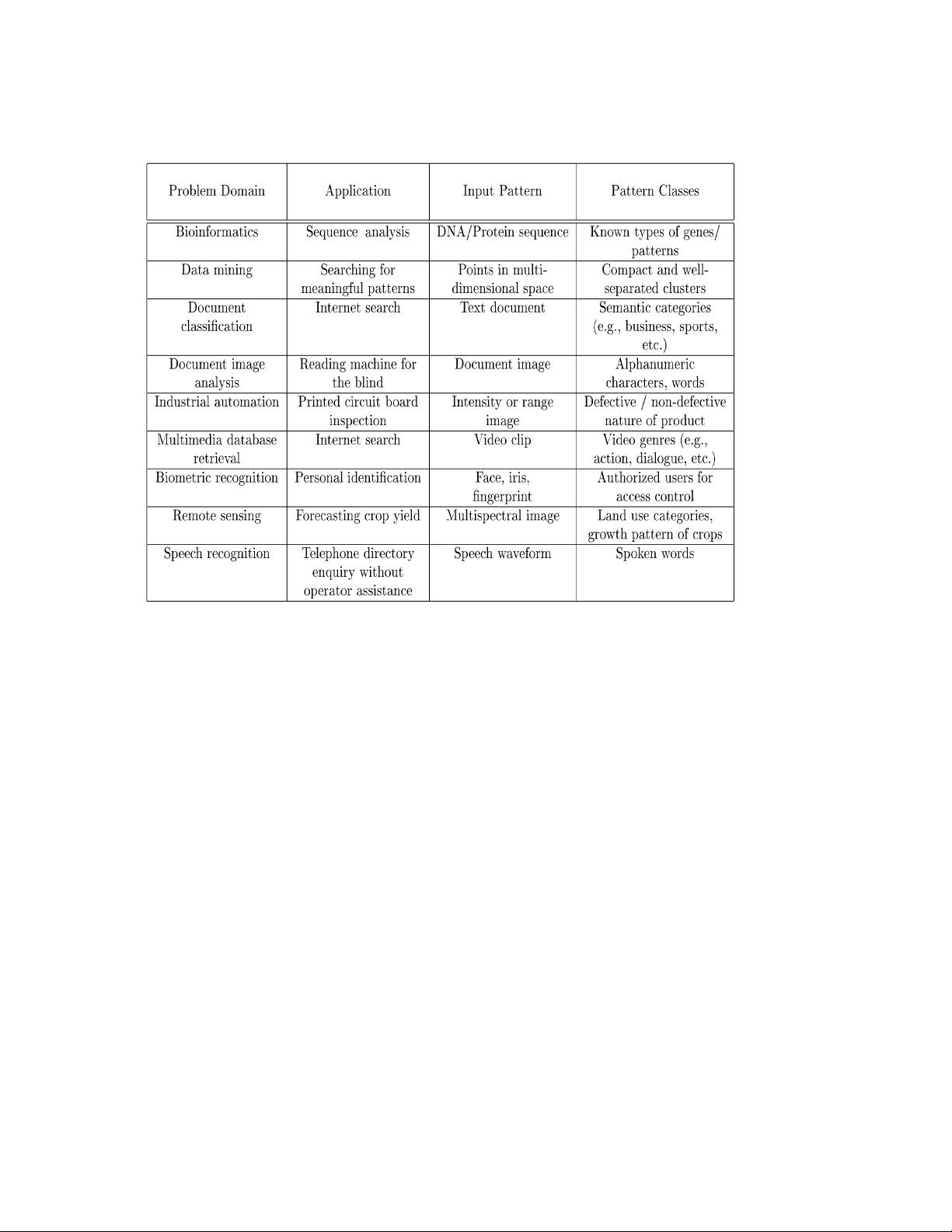

demanding (see Table 1). These applications include data

mining (identifying a ªpattern,º e.g., correlation, or an

outlier in millions of multidimensional patterns), document

classification (efficiently searching text documents), finan-

cial forecasting, organization and retrieval of multimedia

databases, and biometrics (personal identification based on

4 IEEE TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE, VOL. 22, NO. 1, JANUARY 2000

. A.K. Jain is with the Department of Computer Science and Engineering,

Michigan State University, East Lansing, MI 48824.

E-mail: jain@cse.msu.edu.

. R.P.W. Duin is with the Department of Applied Physics, Delft University

of Technology, 2600 GA Delft, the Netherlands.

E-mail: duin@ph.tn.tudelft.nl.

. J. Mao is with the IBM Almaden Research Center, 650 Harry Road, San

Jose, CA 95120. E-mail: mao@almaden.ibm.com.

Manuscript received 23 July 1999; accepted 12 Oct. 1999.

Recommended for acceptance by K. Bowyer.

For information on obtaining reprints of this article, please send e-mail to:

tpami@computer.org, and reference IEEECS Log Number 110296.

0162-8828/00/$10.00 ß 2000 IEEE

various physical attributes such as face and fingerprints).

Picard [125] has identified a novel application of pattern

recognition, called affective computing which will give a

computer the ability to recognize and express emotions, to

respond intelligently to human emotion, and to employ

mechanisms of emotion that contribute to rational decision

making. A common characteristic of a number of these

applications is that the available features (typically, in the

thousands) are not usually suggested by domain experts,

but must be extracted and optimized by data-driven

procedures.

The rapidly growing and available computing power,

while enabling faster processing of huge data sets, has also

facilitated the use of elaborate and diverse methods for data

analysis and classification. At the same time, demands on

automatic pattern recognition systems are rising enor-

mously due to the availability of large databases and

stringent performance requirements (speed, accuracy, and

cost). In many of the emerging applications, it is clear that

no single approach for classification is ªoptimalº and that

multiple methods and approaches have to be used.

Consequently, combining several sensing modalities and

classifiers is now a commonly used practice in pattern

recognition.

The design of a pattern recognition system essentially

involves the following three aspects: 1) data acquisition and

preprocessing, 2) data representation, and 3) decision

making. The problem domain dictates the choice of

sensor(s), preprocessing technique, representation scheme,

and the decision making model. It is generally agreed that a

well-defined and sufficiently constrained recognition pro-

blem (small intraclass variations and large interclass

variations) will lead to a compact pattern representation

and a simple decision making strategy. Learning from a set

of examples (training set) is an important and desired

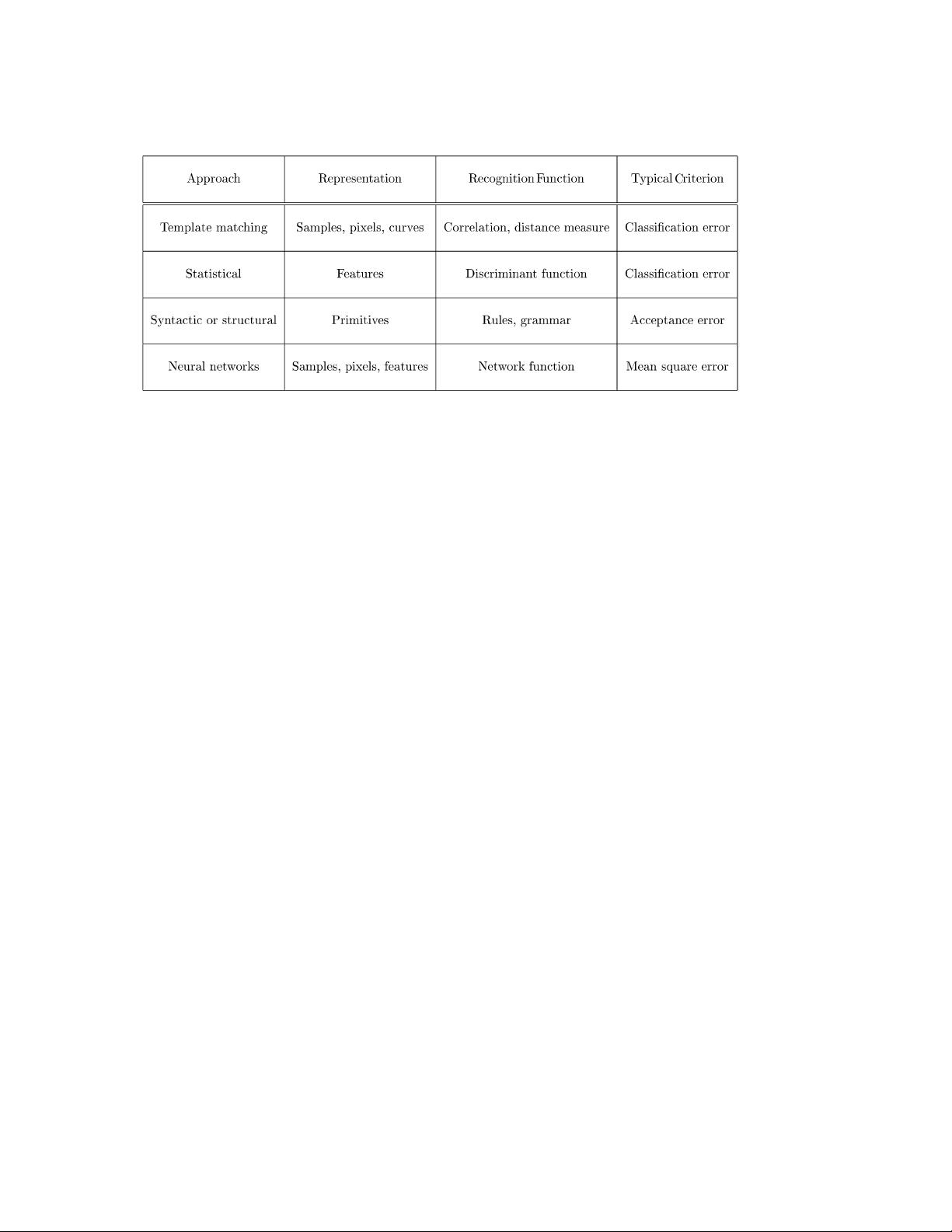

attribute of most pattern recognition systems. The four best

known approaches for pattern recognition are: 1) template

matching, 2) statistical classification, 3) syntactic or struc-

tural matching, and 4) neural networks. These models are

not necessarily independent and sometimes the same

pattern recognition method exists with different interpreta-

tions. Attempts have been made to design hybrid systems

involving multiple models [57]. A brief description and

comparison of these approaches is given below and

summarized in Table 2.

1.2 Template Matching

One of the simplest and earliest approaches to pattern

recognition is based on template matching. Matching is a

generic operation in pattern recognition which is used to

determine the similarity between two entities (points,

curves, or shapes) of the same type. In template matching,

a template (typically, a 2D shape) or a prototype of the

pattern to be recognized is available. The pattern to be

recognized is matched against the stored template while

taking into account all allowable pose (translation and

rotation) and scale changes. The similarity measure, often a

correlation, may be optimized based on the available

training set. Often, the template itself is learned from the

training set. Template matching is computationally de-

manding, but the availability of faster processors has now

JAIN ET AL.: STATISTICAL PATTERN RECOGNITION: A REVIEW 5

TABLE 1

Examples of Pattern Recognition Applications

made this approach more feasible. The rigid template

matching mentioned above, while effective in some

application domains, has a number of disadvantages. For

instance, it would fail if the patterns are distorted due to the

imaging process, viewpoint change, or large intraclass

variations among the patterns. Deformable template models

[69] or rubber sheet deformations [9] can be used to match

patterns when the deformation cannot be easily explained

or modeled directly.

1.3 Statistical Approach

In the statistical approach, each pattern is represented in

terms of d features or measurements and is viewed as a

point in a d-dimensional space. The goal is to choose those

features that allow pattern vectors belonging to different

categories to occupy compact and disjoint regions in a

d-dimensional feature space. The effectiveness of the

representation space (feature set) is determined by how

well patterns from different classes can be separated. Given

a set of training patterns from each class, the objective is to

establish decision boundaries in the feature space which

separate patterns belonging to different classes. In the

statistical decision theoretic approach, the decision bound-

aries are determined by the probability distributions of the

patterns belonging to each class, which must either be

specified or learned [41], [44].

One can also take a discriminant analysis-based ap-

proach to classification: First a parametric form of the

decision boundary (e.g., linear or quadratic) is specified;

then the ªbestº decision boundary of the specified form is

found based on the classification of training patterns. Such

boundaries can be constructed using, for example, a mean

squared error criterion. The direct boundary construction

approaches are supported by Vapnik's philosophy [162]: ªIf

you possess a restricted amount of information for solving

some problem, try to solve the problem directly and never

solve a more general problem as an intermediate step. It is

possible that the available information is sufficient for a

direct solution but is insufficient for solving a more general

intermediate problem.º

1.4 Syntactic Approach

In many recognition problems involving complex patterns,

it is more appropriate to adopt a hierarchical perspective

where a pattern is viewed as being composed of simple

subpatterns which are themselves built from yet simpler

subpatterns [56], [121]. The simplest/elementary subpat-

terns to be recognized are called primitives and the given

complex pattern is represented in terms of the interrelation-

ships between these primitives. In syntactic pattern recog-

nition, a formal analogy is drawn between the structure of

patterns and the syntax of a language. The patterns are

viewed as sentences belonging to a language, primitives are

viewed as the alphabet of the language, and the sentences

are generated according to a grammar. Thus, a large

collection of complex patterns can be described by a small

number of primitives and grammatical rules. The grammar

for each pattern class must be inferred from the available

training samples.

Structural pattern recognition is intuitively appealing

because, in addition to classification, this approach also

provides a description of how the given pattern is

constructed from the primitives. This paradigm has been

used in situations where the patterns have a definite

structure which can be captured in terms of a set of rules,

such as EKG waveforms, textured images, and shape

analysis of contours [56]. The implementation of a syntactic

approach, however, leads to many difficulties which

primarily have to do with the segmentation of noisy

patterns (to detect the primitives) and the inference of the

grammar from training data. Fu [56] introduced the notion

of attributed grammars which unifies syntactic and statis-

tical pattern recognition. The syntactic approach may yield

a combinatorial explosion of possibilities to be investigated,

demanding large training sets and very large computational

efforts [122].

1.5 Neural Networks

Neural networks can be viewed as massively parallel

computing systems consisting of an extremely large

number of simple processors with many interconnections.

Neural network models attempt to use some organiza-

tional principles (such as learning, generalization, adap-

tivity, fault tolerance and distributed representation, and

6 IEEE TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE, VOL. 22, NO. 1, JANUARY 2000

TABLE 2

Pattern Recognition Models

computation) in a network of weighted directed graphs

in which the nodes are artificial neurons and directed

edges (with weights) are connections between neuron

outputs and neuron inputs. The main characteristics of

neural networks are that they have the ability to learn

complex nonlinear input-output relationships, use se-

quential training procedures, and adapt themselves to

the data.

The most commonly used family of neural networks for

pattern classification tasks [83] is the feed-forward network,

which includes multilayer perceptron and Radial-Basis

Function (RBF) networks. These networks are organized

into layers and have unidirectional connections between the

layers. Another popular network is the Self-Organizing

Map (SOM), or Kohonen-Network [92], which is mainly

used for data clustering and feature mapping. The learning

process involves updating network architecture and con-

nection weights so that a network can efficiently perform a

specific classification/clustering task. The increasing popu-

larity of neural network models to solve pattern recognition

problems has been primarily due to their seemingly low

dependence on domain-specific knowledge (relative to

model-based and rule-based approaches) and due to the

availability of efficient learning algorithms for practitioners

to use.

Neural networks provide a new suite of nonlinear

algorithms for feature extraction (using hidden layers)

and classification (e.g., multilayer perceptrons). In addition,

existing feature extraction and classification algorithms can

also be mapped on neural network architectures for

efficient (hardware) implementation. In spite of the see-

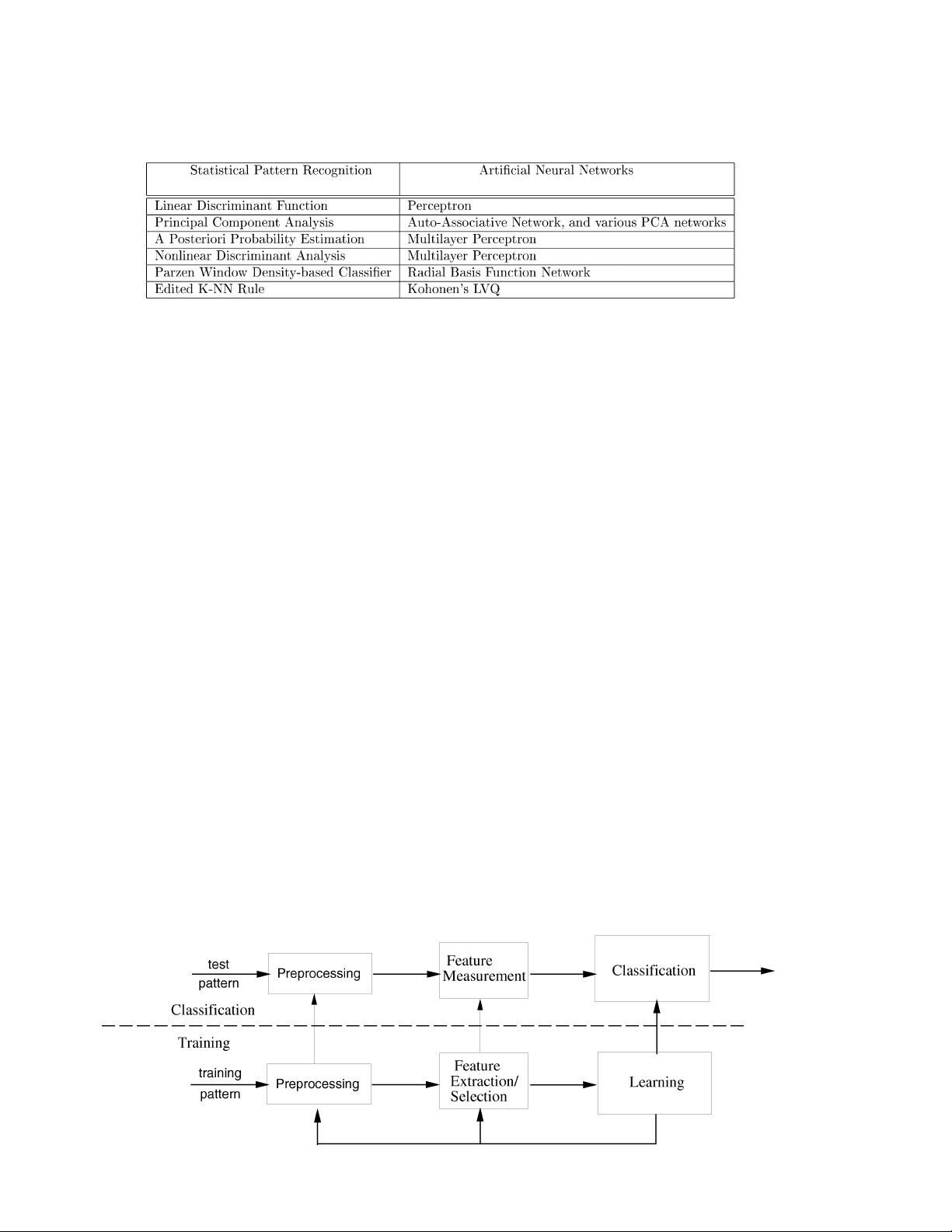

mingly different underlying principles, most of the well-

known neural network models are implicitly equivalent or

similar to classical statistical pattern recognition methods

(see Table 3). Ripley [136] and Anderson et al. [5] also

discuss this relationship between neural networks and

statistical pattern recognition. Anderson et al. point out that

ªneural networks are statistics for amateurs... Most NNs

conceal the statistics from the user.º Despite these simila-

rities, neural networks do offer several advantages such as,

unified approaches for feature extraction and classification

and flexible procedures for finding good, moderately

nonlinear solutions.

1.6 Scope and Organization

In the remainder of this paper we will primarily review

statistical methods for pattern representation and classifica-

tion, emphasizing recent developments. Whenever appro-

priate, we will also discuss closely related algorithms from

the neural networks literature. We omit the whole body of

literature on fuzzy classification and fuzzy clustering which

are in our opinion beyond the scope of this paper.

Interested readers can refer to the well-written books on

fuzzy pattern recognition by Bezdek [15] and [16]. In most

of the sections, the various approaches and methods are

summarized in tables as an easy and quick reference for the

reader. Due to space constraints, we are not able to provide

many details and we have to omit some of the approaches

and the associated references. Our goal is to emphasize

those approaches which have been extensively evaluated

and demonstrated to be useful in practical applications,

along with the new trends and ideas.

The literature on pattern recognition is vast and

scattered in numerous journals in several disciplines

(e.g., applied statistics, machine learning, neural net-

works, and signal and image processing). A quick scan of

the table of contents of all the issues of the IEEE

Transactions on Pattern Analysis and Machine Intelligence,

since its first publication in January 1979, reveals that

approximately 350 papers deal with pattern recognition.

Approximately 300 of these papers covered the statistical

approach and can be broadly categorized into the

following subtopics: curse of dimensionality (15), dimen-

sionality reduction (50), classifier design (175), classifier

combination (10), error estimation (25) and unsupervised

classification (50). In addition to the excellent textbooks

by Duda and Hart [44],

1

Fukunaga [58], Devijver and

Kittler [39], Devroye et al. [41], Bishop [18], Ripley [137],

Schurmann [147], and McLachlan [105], we should also

point out two excellent survey papers written by Nagy

[111] in 1968 and by Kanal [89] in 1974. Nagy described

the early roots of pattern recognition, which at that time

was shared with researchers in artificial intelligence and

perception. A large part of Nagy's paper introduced a

number of potential applications of pattern recognition

and the interplay between feature definition and the

application domain knowledge. He also emphasized the

linear classification methods; nonlinear techniques were

based on polynomial discriminant functions as well as on

potential functions (similar to what are now called the

kernel functions). By the time Kanal wrote his survey

paper, more than 500 papers and about half a dozen

books on pattern recognition were already published.

Kanal placed less emphasis on applications, but more on

modeling and design of pattern recognition systems. The

discussion on automatic feature extraction in [89] was

based on various distance measures between class-

conditional probability density functions and the result-

ing error bounds. Kanal's review also contained a large

section on structural methods and pattern grammars.

In comparison to the state of the pattern recognition field

as described by Nagy and Kanal in the 1960s and 1970s,

today a number of commercial pattern recognition systems

are available which even individuals can buy for personal

use (e.g., machine printed character recognition and

isolated spoken word recognition). This has been made

possible by various technological developments resulting in

the availability of inexpensive sensors and powerful desk-

top computers. The field of pattern recognition has become

so large that in this review we had to skip detailed

descriptions of various applications, as well as almost all

the procedures which model domain-specific knowledge

(e.g., structural pattern recognition, and rule-based sys-

tems). The starting point of our review (Section 2) is the

basic elements of statistical methods for pattern recognition.

It should be apparent that a feature vector is a representa-

tion of real world objects; the choice of the representation

strongly influences the classification results.

JAIN ET AL.: STATISTICAL PATTERN RECOGNITION: A REVIEW 7

1. Its second edition by Duda, Hart, and Stork [45] is in press.

The topic of probabilistic distance measures is cur-

rently not as important as 20 years ago, since it is very

difficult to estimate density functions in high dimensional

feature spaces. Instead, the complexity of classification

procedures and the resulting accuracy have gained a

large interest. The curse of dimensionality (Section 3) as

well as the danger of overtraining are some of the

consequences of a complex classifier. It is now under-

stood that these problems can, to some extent, be

circumvented using regularization, or can even be

completely resolved by a proper design of classification

procedures. The study of support vector machines

(SVMs), discussed in Section 5, has largely contributed

to this understanding. In many real world problems,

patterns are scattered in high-dimensional (often) non-

linear subspaces. As a consequence, nonlinear procedures

and subspace approaches have become popular, both for

dimensionality reduction (Section 4) and for building

classifiers (Section 5). Neural networks offer powerful

tools for these purposes. It is now widely accepted that

no single procedure will completely solve a complex

classification problem. There are many admissible ap-

proaches, each capable of discriminating patterns in

certain portions of the feature space. The combination of

classifiers has, therefore, become a heavily studied topic

(Section 6). Various approaches to estimating the error

rate of a classifier are presented in Section 7. The topic of

unsupervised classification or clustering is covered in

Section 8. Finally, Section 9 identifies the frontiers of

pattern recognition.

It is our goal that most parts of the paper can be

appreciated by a newcomer to the field of pattern

recognition. To this purpose, we have included a number

of examples to illustrate the performance of various

algorithms. Nevertheless, we realize that, due to space

limitations, we have not been able to introduce all the

concepts completely. At these places, we have to rely on

the background knowledge which may be available only

to the more experienced readers.

2STATISTICAL PATTERN RECOGNITION

Statistical pattern recognition has been used successfully to

design a number of commercial recognition systems. In

statistical pattern recognition, a pattern is represented by a

set of d features, or attributes, viewed as a d-dimensional

feature vector. Well-known concepts from statistical

decision theory are utilized to establish decision boundaries

between pattern classes. The recognition system is operated

in two modes: training (learning) and classification (testing)

(see Fig. 1). The role of the preprocessing module is to

segment the pattern of interest from the background,

remove noise, normalize the pattern, and any other

operation which will contribute in defining a compact

representation of the pattern. In the training mode, the

feature extraction/selection module finds the appropriate

features for representing the input patterns and the

classifier is trained to partition the feature space. The

feedback path allows a designer to optimize the preproces-

sing and feature extraction/selection strategies. In the

classification mode, the trained classifier assigns the input

pattern to one of the pattern classes under consideration

based on the measured features.

8 IEEE TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE, VOL. 22, NO. 1, JANUARY 2000

TABLE 3

Links Between Statistical and Neural Network Methods

Fig. 1. Model for statistical pattern recognition.

剩余33页未读,继续阅读

点击了解资源详情

点击了解资源详情

点击了解资源详情

2010-09-25 上传

2015-03-03 上传

2008-11-28 上传

2015-06-26 上传

2023-06-07 上传

wangyx

- 粉丝: 3

- 资源: 4

上传资源 快速赚钱

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

最新资源

- MATLAB实现小波阈值去噪:Visushrink硬软算法对比

- 易语言实现画板图像缩放功能教程

- 大模型推荐系统: 优化算法与模型压缩技术

- Stancy: 静态文件驱动的简单RESTful API与前端框架集成

- 掌握Java全文搜索:深入Apache Lucene开源系统

- 19计应19田超的Python7-1试题整理

- 易语言实现多线程网络时间同步源码解析

- 人工智能大模型学习与实践指南

- 掌握Markdown:从基础到高级技巧解析

- JS-PizzaStore: JS应用程序模拟披萨递送服务

- CAMV开源XML编辑器:编辑、验证、设计及架构工具集

- 医学免疫学情景化自动生成考题系统

- 易语言实现多语言界面编程教程

- MATLAB实现16种回归算法在数据挖掘中的应用

- ***内容构建指南:深入HTML与LaTeX

- Python实现维基百科“历史上的今天”数据抓取教程

安全验证

文档复制为VIP权益,开通VIP直接复制

信息提交成功

信息提交成功