J Sign Process Syst (2016) 84:139–150 141

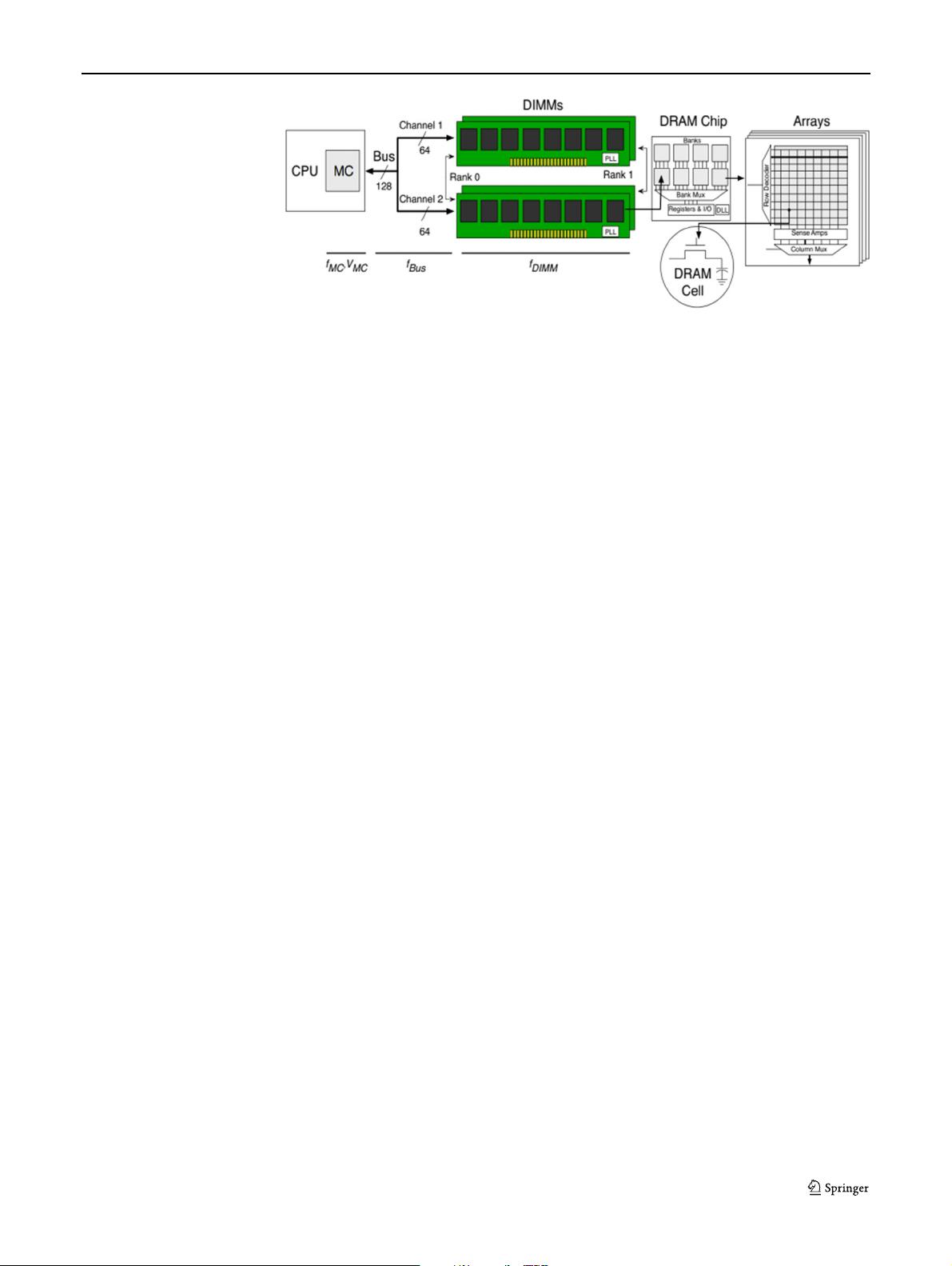

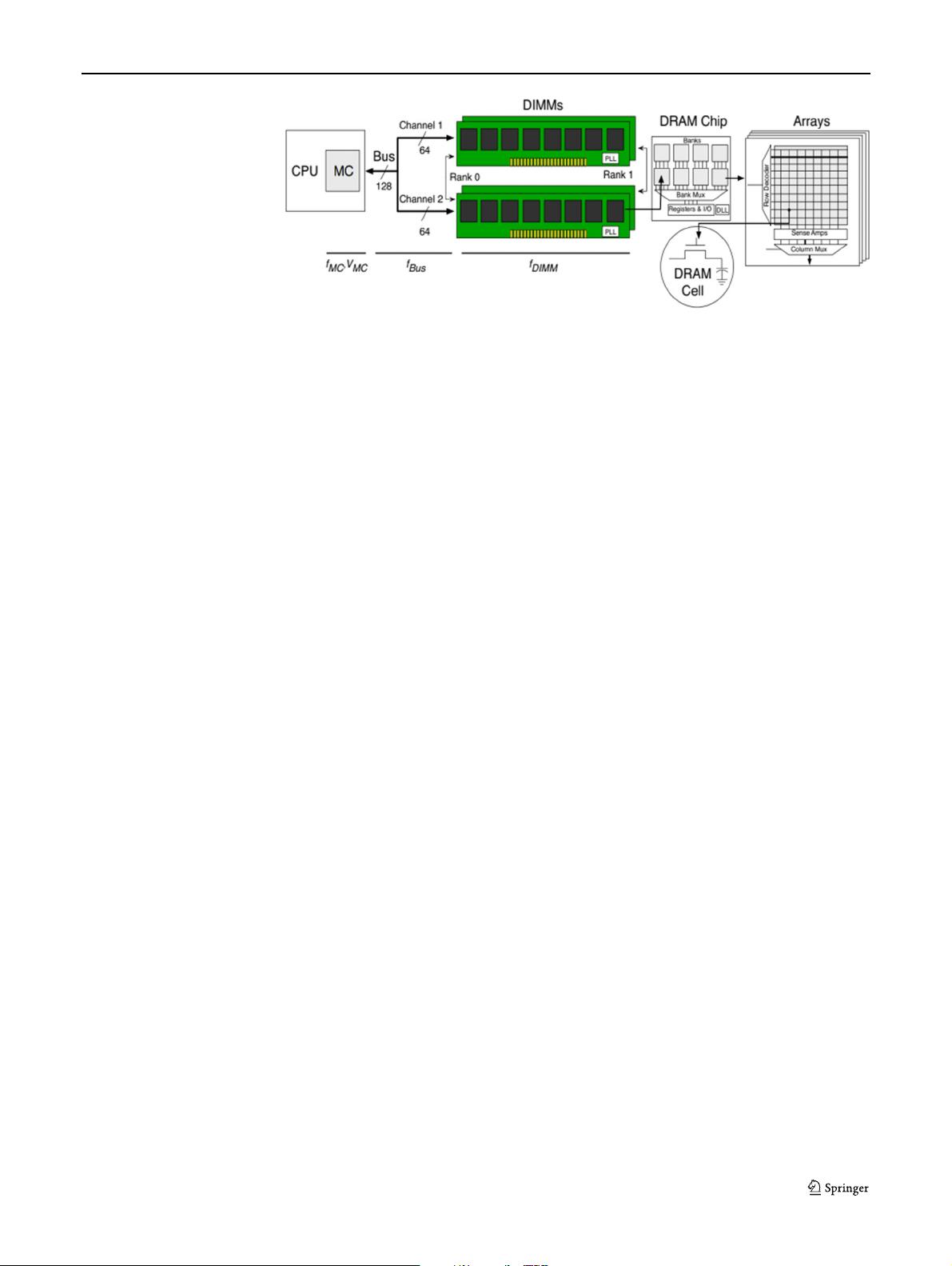

Figure 3 Organization of a

modern memory subsystem.

behalf of the CPU’s last-level cache across a memory bus.

As shown, recent processors have integrated the MC into

the same package as the CPU. To enable greater parallelism,

the width of the memory bus is split into multiple channels.

These channels act independently and can access disjoint

regions of the physical address space in parallel [11].

Multiple DIMMs may be connected to the same channel.

Each DIMM comprises a printed circuit board with register

devices, a Phase Lock Loop device, and multiple DRAM

chips. The DRAM chips are the ultimate destination of the

MC commands. The subset of DRAM chips that participate

in each access is called a rank. The number of chips in a rank

depends on how many bits each chip produces/consumes at

a time. Each DIMM can have up to 16 chips, organized into

1-4 ranks.

Each DRAM chip contains multiple banks (typically 8

banks nowadays), each of which contains multiple two-

dimensional memory arrays. The basic unit of storage in an

array is a simple capacitor representing a bit??the DRAM

cell. Thus, in a x8 DRAM chip, each bank has 8 arrays, each

of which produces/consumes one bit at a time. However,

each time an array is accessed, an entire multi-KB row is

transferred to a row buffer. This operation is called an “acti-

vation” or a “row opening”. Then, any column of the row

can be read/written over the channel in one burst. Because

the activation is destructive, the corresponding row eventu-

ally needs to be “pre-charged”, that is, written back to the

array.

2.2 OS Memory Management

Nowadays, Linux kernel’s memory management system

uses a buddy system to manage physical memory pages.

In the buddy system, the continuous 2order pages (called a

block) are organized in the free list with the corresponding

order, which ranges from 0 to a specific upper limit. When

a program accesses an unmapped virtual address, a page

fault occurs and OS kernel takes over the following execu-

tion wherein the buddy system identifies the right order free

list and allocates on block (2order physical pages) for that

program. Usually the first block of a free list is selected but

the corresponding physical pages are undetermined [12].

2.3 Related Work

There are a number of related studies.

Thread Scheduling Scheduling algorithms aimed to dis-

tribute threads to get an even distribution of miss rate among

multiple caches are proposed in [13], which avoid severe

contention on shared resource of cache, memory controller,

memory bus and prefetching hardware. Similar mechanisms

are also proposed in [14, 15]. Although these methods

can alleviate contention, they hardly eliminate the bank

interference among threads.

Channel Partition Data of different threads are mapped

into different channels according to their memory access

behavior in [1], which can eliminate the interference

between threads at channel level. However, channel parti-

tion cannot be applied to system with cache line interleaving

policy between channels [1], which limit its applicable

scope. Furthermore, there are usually more threads than

channels in a system, so some threads have to be assigned to

the same channel, which still interference with each other.

Besides, channel partition actually partitions the bandwidth

of memory system into several portions. Since the total

number of portions is limited by channel amount, which

is usually small, it is challenging to seek a balance among

channels so as to ensure no bandwidth wasted.

Thread-aware Memory Scheduling Memory controllers

are designed to distinguish the memory access behavior at

thread-level in [1, 6, 10, 16, 17], so that scheduling modules

can adjust their scheduling policy at the running time. TCM

[1], which dynamically groups threads into two clusters

(memory intensive and CPU intensive), and assign different

scheduling policy to different group, is the best scheduling

policy, which aim to address fairness and throughput at the