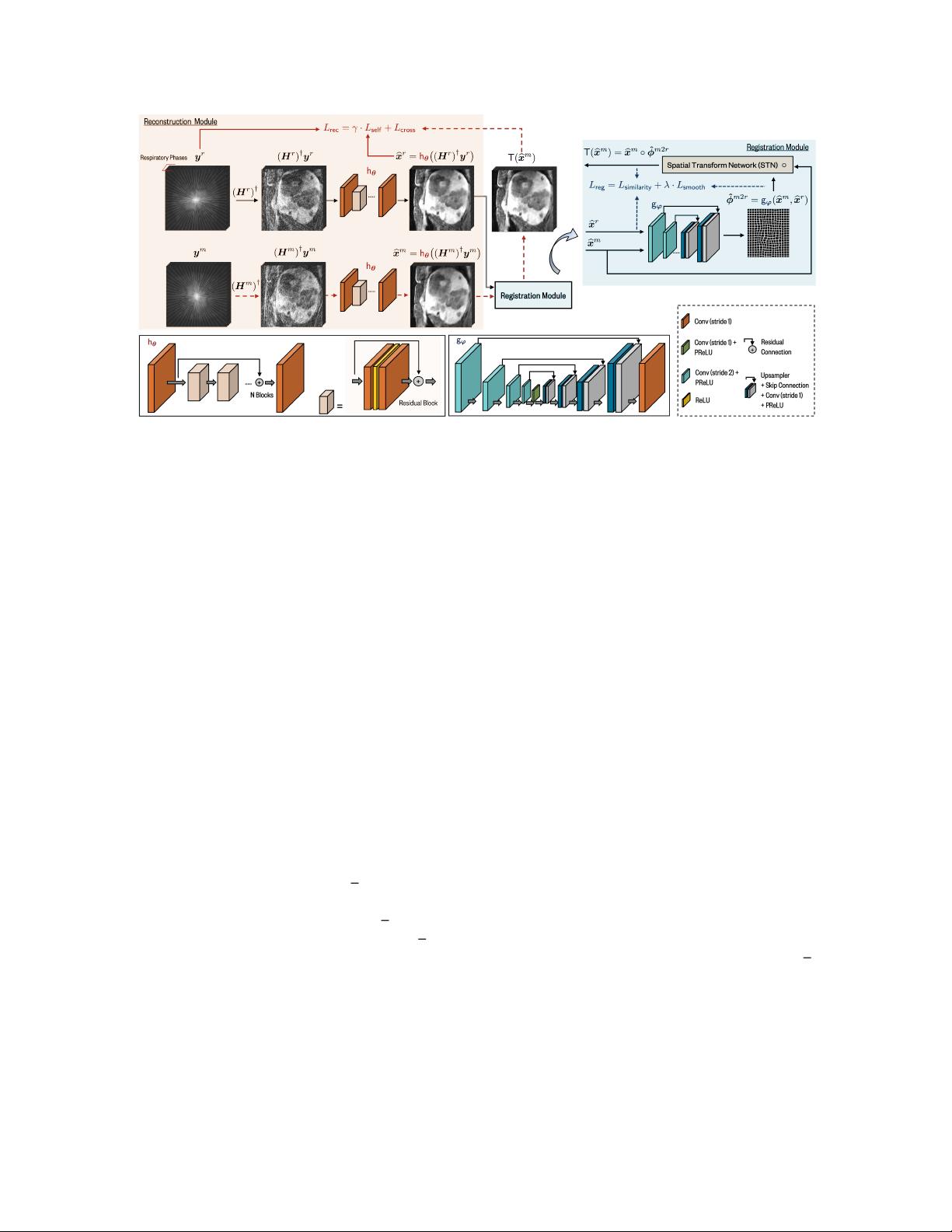

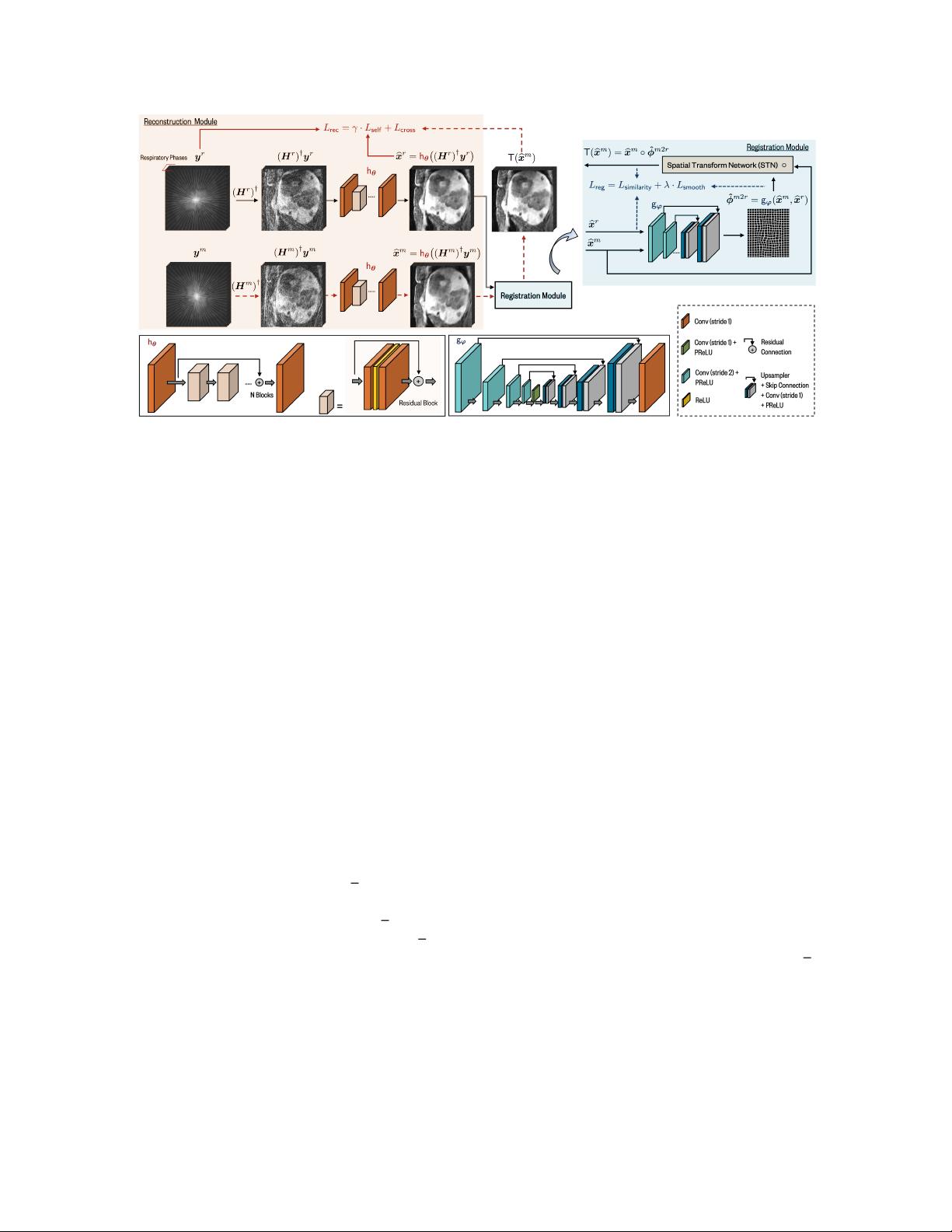

Figure 2: The proposed method jointly trains two CNN modules: $h_\theta$ for image reconstruction and $g_\phi$ for image registration. The inputs are pairs of measurements of the same object at different motion states. The zero-filled images are passed through $h_\theta$ to remove artifacts due to noise and undersampling. The output images are then fed to $g_\phi$ to obtain the motion field characterizing the directional mapping between their coordinates. We implement the warping operator as a Spatial Transformer Network (STN) to register one of the reconstructed images to the other. We train the whole network end-to-end without any ground-truth images or transformations.

mapping an input image pair $\{m, r\}$ to a deformation field $\hat{\varphi}^{m \to r} = g_\phi(m, r)$ that can be used for registration [25]. The CNN is trained on a set of image pairs $\{m_i, r_i\}$ by minimizing the following loss function

$$\arg\min_{\phi} \sum_i \mathcal{L}_d\big(m_i \circ \hat{\varphi}_i^{m \to r},\, r_i\big) + \mathcal{L}_r\big(\hat{\varphi}_i^{m \to r}\big), \tag{7}$$

where $\circ$ is the warping operator that transforms the coordinates of $m_i$ based on the registration field $\hat{\varphi}_i^{m \to r}$. The term $\mathcal{L}_d$ penalizes the discrepancy between $m_i$ after transformation and its reference $r_i$, while $\mathcal{L}_r$ regularizes the local spatial variations in the estimated registration field. To minimize this loss function with standard gradient-based methods, the warping operator must be differentiable; it is often implemented as a Spatial Transformer Network (STN) [60].
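To make the structure of the loss in (7) concrete, the following is a minimal pure-Python sketch for a single image pair. It is an illustration under stated assumptions, not the implementation used in this work: the field is assumed to be a per-pixel displacement, so that the warped image at pixel $p$ is $m(p + \hat{\varphi}(p))$; $\mathcal{L}_d$ is assumed to be the mean squared error; $\mathcal{L}_r$ is assumed to be the mean squared finite difference of the field; and the weight `lam` is a hypothetical parameter that does not appear in (7), whose default of 1.0 reproduces the unweighted sum. All function names are illustrative. The bilinear sampler is the ingredient that makes the warp piecewise differentiable with respect to the field, which is the property the STN provides.

```python
import math

def bilinear_sample(img, y, x):
    """Sample img (a list of rows) at real-valued (y, x), clamping to the border."""
    h, w = len(img), len(img[0])
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    wy, wx = y - y0, x - x0
    top = (1 - wx) * img[y0][x0] + wx * img[y0][x1]
    bot = (1 - wx) * img[y1][x0] + wx * img[y1][x1]
    return (1 - wy) * top + wy * bot

def warp(m, field):
    """Warp m by a displacement field (assumed convention):
    output[i][j] = m sampled at (i + dy, j + dx), with field[i][j] = (dy, dx)."""
    h, w = len(m), len(m[0])
    return [[bilinear_sample(m, i + field[i][j][0], j + field[i][j][1])
             for j in range(w)] for i in range(h)]

def dissimilarity(a, b):
    """Assumed choice for L_d: mean squared error between two images."""
    h, w = len(a), len(a[0])
    return sum((a[i][j] - b[i][j]) ** 2
               for i in range(h) for j in range(w)) / (h * w)

def smoothness(field):
    """Assumed choice for L_r: mean squared forward difference of the field."""
    h, w = len(field), len(field[0])
    total, count = 0.0, 0
    for i in range(h):
        for j in range(w):
            for c in range(2):  # dy and dx components
                if i + 1 < h:
                    total += (field[i + 1][j][c] - field[i][j][c]) ** 2
                    count += 1
                if j + 1 < w:
                    total += (field[i][j + 1][c] - field[i][j][c]) ** 2
                    count += 1
    return total / count if count else 0.0

def registration_loss(m, r, field, lam=1.0):
    """One summand of (7): L_d(m warped by field, r) + lam * L_r(field)."""
    return dissimilarity(warp(m, field), r) + lam * smoothness(field)

# Toy pair: r is m shifted one pixel to the left.
m = [[0.0, 0.0, 1.0, 0.0] for _ in range(4)]
r = [[0.0, 1.0, 0.0, 0.0] for _ in range(4)]
shift = [[(0.0, 1.0) for _ in range(4)] for _ in range(4)]
identity = [[(0.0, 0.0) for _ in range(4)] for _ in range(4)]
print(registration_loss(m, r, shift), registration_loss(m, r, identity))
```

In this toy pair, the constant one-pixel displacement aligns $m$ with $r$ and incurs no smoothness penalty, whereas the zero field leaves the mismatch in place. In practice the field would be produced by $g_\phi$ and the loss minimized over $\phi$ with automatic differentiation, which this sketch does not include.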

Our work seeks to leverage the recent progress in deep image registration to enable a novel methodology for training deep reconstruction networks on deformation-affected datasets.

2.4 Motion-Compensated Reconstruction

Motion-compensated (MoCo) reconstruction refers to a class of methods for reconstructing dynamic objects from their noisy measurements [61–71]. MoCo methods seek to leverage data redundancy over the motion dimension during reconstruction. For example, traditional model-based MoCo methods include an additional regularizer in the motion dimension [61–63] or enforce spatial smoothness in the images at different motion phases using motion vector fields (MVFs) [64–66]. MVFs can be obtained by registering images of the reconstructed object at different motion states or via joint multi-task optimization [67–69]. Recent methods have also used DL to estimate MVFs by training a self-supervised network on reconstructed images [70] or by jointly updating both MVFs and images in a supervised fashion [71].

DeCoLearn is complementary to traditional MoCo image reconstruction. Its primary focus is to enable learning from pairs of measurements of objects undergoing deformations. Thus, unlike MoCo methods, DeCoLearn does not specifically target sequential data. DeCoLearn can be used
