Point Feature Extraction on 3D Range Scans Taking into Account Object Boundaries

Bastian Steder, Radu Bogdan Rusu, Kurt Konolige, Wolfram Burgard

Abstract: In this paper we address the topic of feature extraction in 3D point cloud data for object recognition and pose identification. We present a novel interest keypoint extraction method that operates on range images generated from arbitrary 3D point clouds and explicitly considers the borders of objects, identified by transitions from foreground to background. We furthermore present a feature descriptor that takes the same information into account. We have implemented our approach and present rigorous experiments in which we analyze the individual components with respect to their repeatability and matching capabilities and evaluate their usefulness for point-feature-based object detection methods.

I. INTRODUCTION

In object recognition or mapping applications, finding similar parts in different sets of sensor readings is a highly relevant problem. A popular method is to estimate features that best describe a chunk of data in a compressed representation and that can be used to efficiently perform comparisons between different data regions. In 2D or 3D perception, such features are usually local around a point in the sense that, for a given point in the scene, its vicinity is used to determine the corresponding feature. The entire task is typically subdivided into two subtasks, namely the identification of appropriate points, often referred to as interest points or keypoints, and the way in which the information in the vicinity of that point is encoded in a descriptor or description vector.

Important advantages of interest points are that they substantially reduce the search space and computation time required for finding correspondences between two scenes and that they focus the computation on areas that are more likely to be relevant for the matching process. There has been surprisingly little research on interest point extraction in raw 3D data, compared to vision, where this is a well-researched area. Most papers about 3D features target only the descriptor.

In this paper we focus on single range scans, as obtained with 3D laser range finders or stereo cameras, where the data is incomplete and depends on the viewpoint. We chose range images as the way to represent the data since they reflect this situation and enable us to borrow ideas from the vision sector.
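To illustrate the range image representation, the following minimal sketch projects a point cloud onto a spherical range image as seen from a sensor at the origin. The function name `to_range_image`, the pixel resolution, and the default full-sphere field of view are assumptions made for this example; it is an illustrative sketch, not a description of our implementation.

```python
import math


def to_range_image(points, width, height, h_fov=2 * math.pi, v_fov=math.pi):
    """Project 3D points (sensor at the origin) onto a spherical range image.

    Each pixel keeps the smallest range that falls into it, so only the
    surface visible from the viewpoint survives. Pixels that receive no
    point stay at math.inf (no measurement).
    """
    image = [[math.inf] * width for _ in range(height)]
    for x, y, z in points:
        r = math.sqrt(x * x + y * y + z * z)
        if r == 0.0:
            continue  # a point at the sensor origin has no viewing direction
        azimuth = math.atan2(y, x)    # horizontal angle in [-pi, pi]
        elevation = math.asin(z / r)  # vertical angle in [-pi/2, pi/2]
        # Normalised image coordinates in [0, 1] inside the field of view.
        u = (azimuth + h_fov / 2) / h_fov
        v = (v_fov / 2 - elevation) / v_fov
        if not (0.0 <= u <= 1.0 and 0.0 <= v <= 1.0):
            continue  # outside the assumed field of view
        col = min(int(u * width), width - 1)
        row = min(int(v * height), height - 1)
        image[row][col] = min(image[row][col], r)
    return image
```

Keeping only the closest range per pixel is what makes the representation viewpoint dependent: a point hidden behind a closer surface in the same viewing direction is discarded.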

We present the normal aligned radial feature (NARF), a

novel interest point extraction method together with a feature

descriptor for points in 3D range data. The interest point

B. Steder and W. Burgard are with the Dept. of Computer Science

of the University of Freiburg, Germany. {steder,burgard}@informatik.uni-

freiburg.de; R. Rusu and K. Konolige are with Willow Garage Inc., USA.

{rusu,konolige}@willowgarage.com

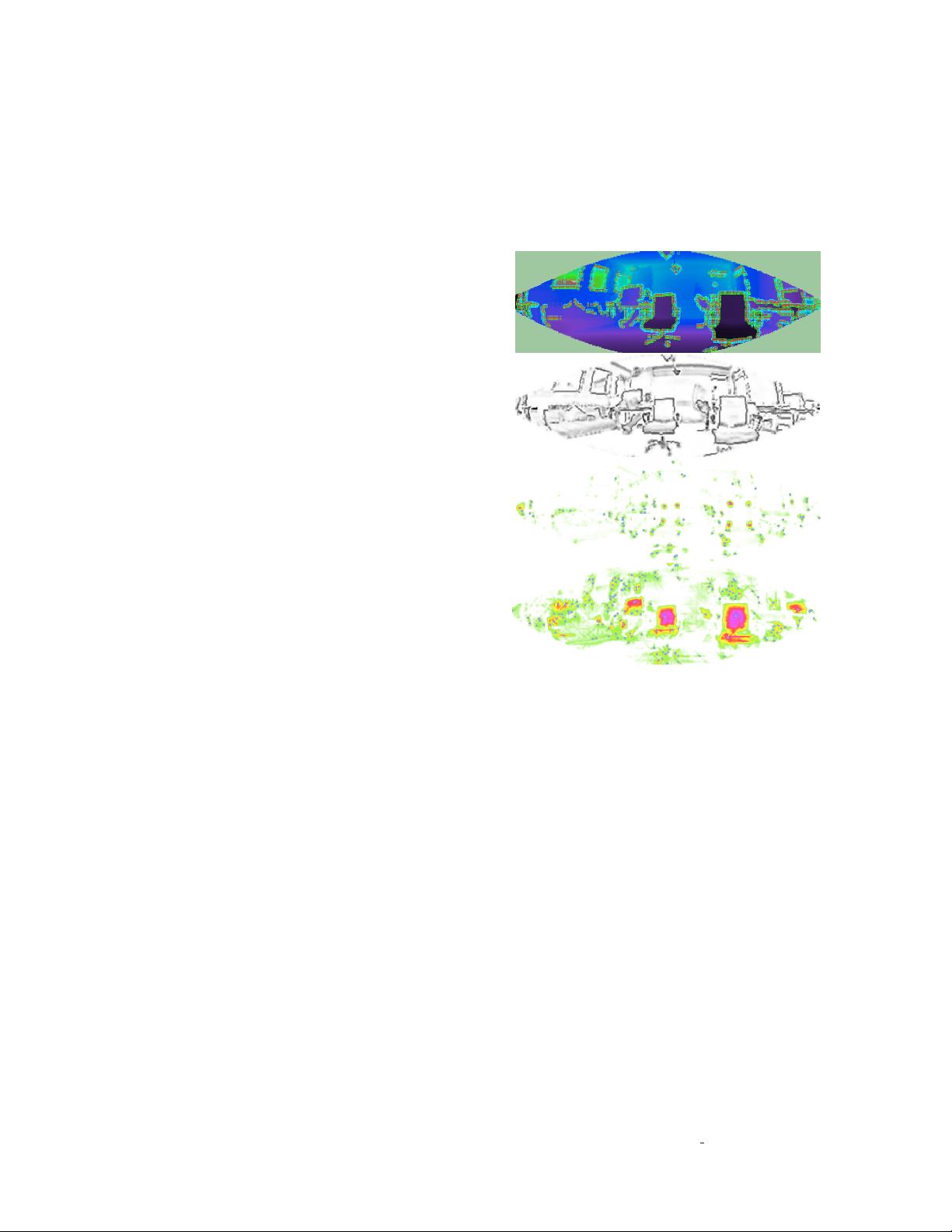

(a)

(b)

(c)

(d)

Fig. 1. The interest point extraction procedure. (a) Range image of an

office scene with two chairs in the front with the extracted borders marked.

(b) Surface change scores according to borders and principle curvature. (c)

Interest values with marked interest points for a support size of 20cm. Note

how the corners of the chairs are detected as interest points at this scale. (d)

Interest values with marked interest points for a support size of 1m. Note

how, compared to (c), the whole surface of the chair’s backrests contain one

interest point at this scale.

extraction method has been designed with two specific goals

in mind: i) the selected points are supposed to be in positions

where the surface is stable (to ensure a robust estimation

of the normal) and where there are sufficient changes in

the immediate vicinity; ii) since we focus on partial views,

we want to make use of object borders, meaning the outer

shapes of objects seen from a certain perspective. The outer

forms are often rather unique so that their explicit use in the

interest point extraction and the descriptor calculation can be

expected to make the overall process more robust. For this

purpose, we also present a method to extract those borders.

To the best of our knowledge, no existing method for feature

extraction tackles all of these issues.
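The idea of an object border as a transition from foreground to background can be illustrated with a deliberately simple range-jump test on a range image. The sketch below is an assumption-laden stand-in, not the border extraction method presented in this paper: the function name `find_foreground_borders`, the fixed threshold `min_jump`, and the 4-neighbourhood are all hypothetical choices made for the example.

```python
import math


def find_foreground_borders(range_image, min_jump):
    """Flag pixels that lie on the foreground side of a range jump.

    A pixel is flagged when at least one of its 4-neighbours is farther
    away by more than min_jump, or has no measurement at all (math.inf).
    Pixels without a measurement are never flagged.
    """
    height = len(range_image)
    width = len(range_image[0]) if height else 0
    borders = [[False] * width for _ in range(height)]
    for row in range(height):
        for col in range(width):
            r = range_image[row][col]
            if math.isinf(r):
                continue  # nothing was measured here
            for d_row, d_col in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                n_row, n_col = row + d_row, col + d_col
                if 0 <= n_row < height and 0 <= n_col < width:
                    # Positive difference: the neighbour is behind this pixel.
                    if range_image[n_row][n_col] - r > min_jump:
                        borders[row][col] = True
                        break
    return borders


# One image row: an object at 1 m in front of a wall at 3 m.
print(find_foreground_borders([[1.0, 1.0, 3.0, 3.0]], min_jump=0.5))
# prints [[False, True, False, False]]
```

Only the last foreground pixel before the jump is flagged; the wall pixel next to it belongs to the background and is left unmarked. A fixed threshold ignores how the expected distance between neighbouring pixels grows with range, which is one reason a real border extraction needs more care than this sketch.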

Figure 1 shows an outline of our interest point extraction procedure. The implementation of our method is available under an open-source license.¹

¹ The complete source code, including instructions for running the experiments presented in this paper, can be found at http://www.ros.org/wiki/Papers/ICRA2011_Steder