X. Ye et al.: Efficient Stereo Matching Leveraging Deep Local and Context Information

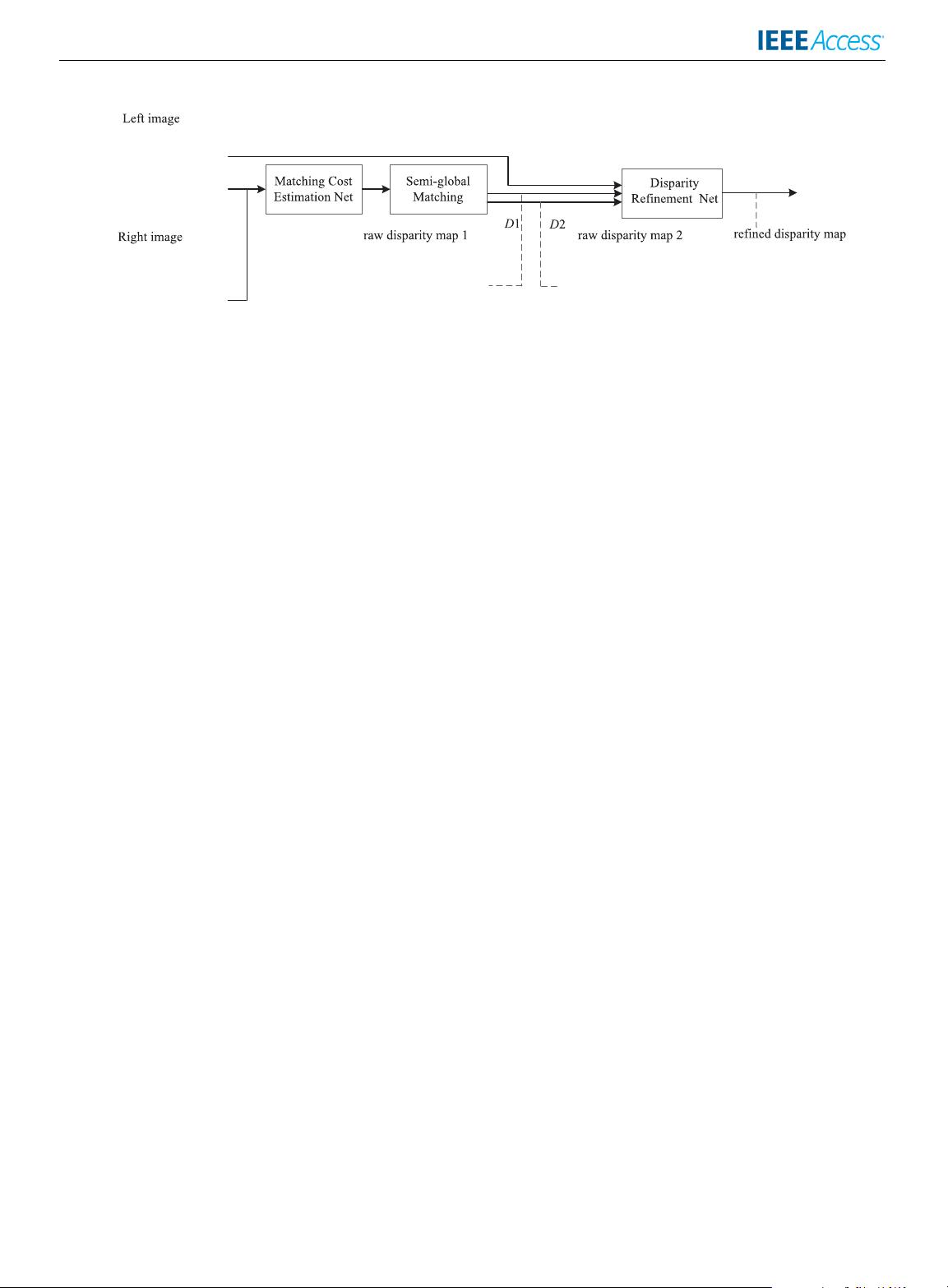

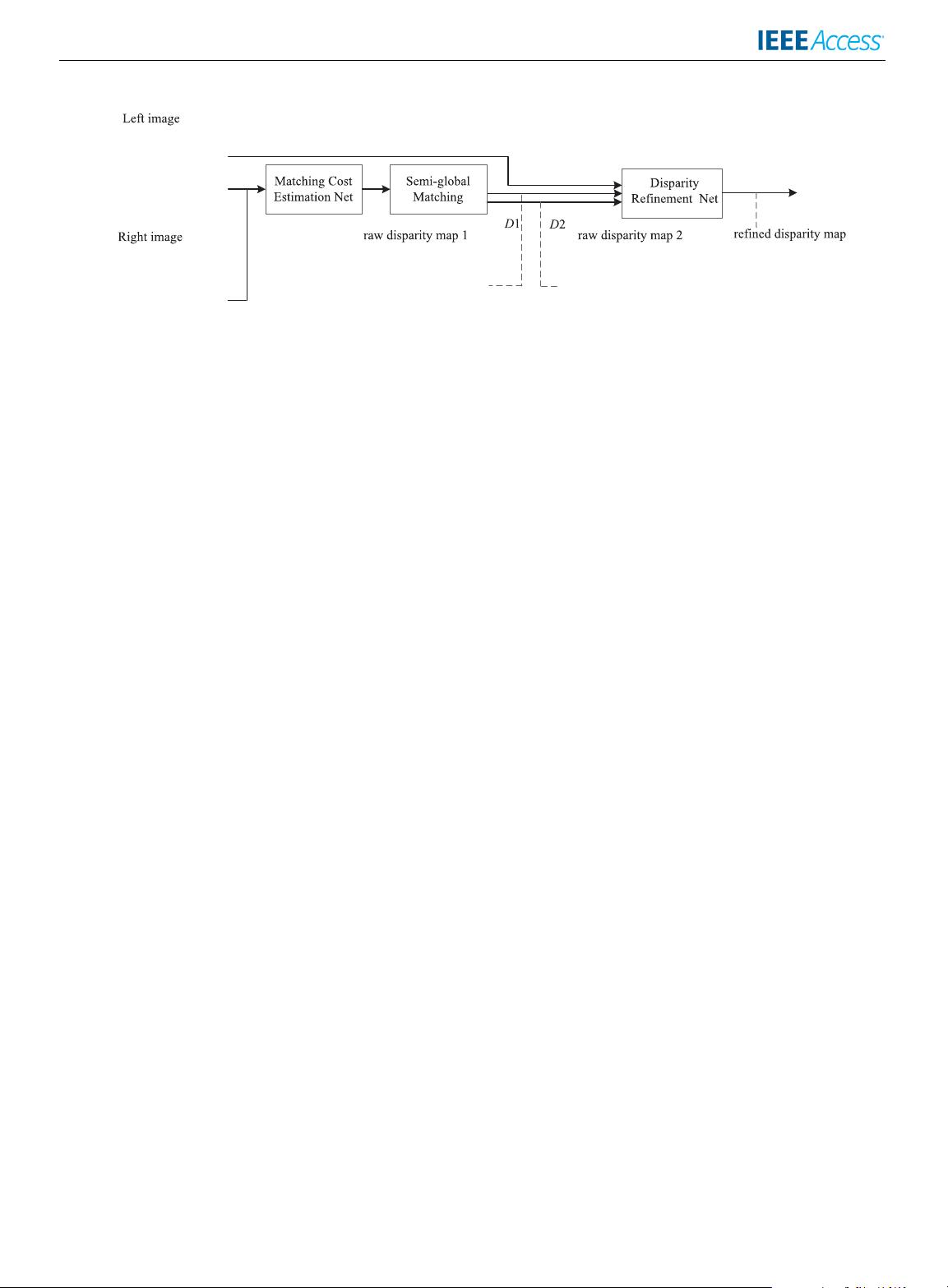

FIGURE 1. Overview of the proposed approach. The framework consists of two key parts: (1) matching cost estimation net and (2) disparity refinement net. $D_1$ and $D_2$ denote the raw disparity maps related to the minimum and second minimum aggregated cost.

B. DISPARITY REFINEMENT

Despite the previous steps, the raw disparity map obtained directly by WTA still contains many outliers, especially in occlusions, textureless regions and at disparity discontinuities. To achieve higher accuracy, various refinement approaches are used to identify and correct outliers. The left-right consistency check [1] is widely used to detect outliers. Weighted median filtering [24] based on the guided image [25] and bilateral weights [26] is employed to refine aggregated results. Segmentation-based and plane-fitting methods [15], [27] handle weakly textured regions well but are time-consuming and depend on the quality of the segmentation. Instead of treating all outliers uniformly, multi-step processing [13], [28]–[30] achieves more competitive results. Hirschmuller [15] distinguished occlusions from mismatches using the epipolar line; however, this fails in large mismatched areas with weak texture, where a pixel can still be marked as correct even if it has wrong disparities in both maps. Moreover, it produces threadlike outliers due to its discrete nature. In methods based on support-region voting [11] or scanline interpolation [31], mismatched points were assigned the nearest valid neighboring disparity and occluded points the minimal valid neighboring disparity. Zhan et al. [13] performed four-direction propagation to correct inner outliers and took into account the variation of the surrounding disparities when handling the leftmost outliers. Concerning occlusions, Huq et al. [32] studied occlusion filling both theoretically and experimentally. Yamaguchi et al. [33] proposed a slanted-plane model for jointly recovering an image segmentation, a dense depth estimate and occlusion boundaries. Very recently, Gidaris et al. [10] introduced a deep structured model that decomposes the task into three sub-blocks. It uses deep CNNs to identify and correct outliers based on the left color image and the initial left disparity map.
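As a rough illustration of the left-right consistency check [1] and of the scanline filling heuristics surveyed above, the sketch below marks a left-view pixel as valid when the right-view disparity at its match agrees within a tolerance, then fills each invalid pixel with the smaller of the nearest valid disparities on the same row. It assumes NumPy, dense float disparity maps in pixels, and a hypothetical tolerance parameter `tol`; it treats every invalid pixel like an occlusion (it does not separate occlusions from mismatches) and is not the refinement proposed in this work.

```python
import numpy as np

def left_right_check(disp_left, disp_right, tol=1.0):
    """True where the left disparity is confirmed by the right disparity map.

    Left pixel (y, x) with disparity d is matched to right pixel (y, x - d);
    it passes if that match lies inside the image and |d_L - d_R| <= tol.
    """
    h, w = disp_left.shape
    ys, xs = np.indices((h, w))
    xr = np.rint(xs - disp_left).astype(int)        # matched column in the right view
    inside = (xr >= 0) & (xr < w)                   # match must fall inside the image
    d_right = disp_right[ys, np.clip(xr, 0, w - 1)]
    return inside & (np.abs(disp_left - d_right) <= tol)

def fill_invalid_scanline(disp, valid):
    """Replace each invalid pixel by the smaller of the nearest valid disparities
    to its left and right on the same row (background-favouring fill)."""
    out = np.asarray(disp, dtype=float).copy()
    for y in range(out.shape[0]):
        cols = np.flatnonzero(valid[y])
        if cols.size == 0:
            continue                                # nothing to propagate on this row
        for x in np.flatnonzero(~valid[y]):
            left, right = cols[cols < x], cols[cols > x]
            candidates = []
            if left.size:
                candidates.append(out[y, left[-1]])
            if right.size:
                candidates.append(out[y, right[0]])
            out[y, x] = min(candidates)             # only valid pixels are read
    return out
```

Choosing the smaller disparity reflects the common assumption that occluded pixels belong to the background, which lies farther away and therefore has the smaller disparity.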

In contrast to previous works that apply refinement algorithms only to the initial disparity map obtained by WTA, we also exploit the sub-optimal disparity map corresponding to the second minimum cost, since it contains useful information that is not reflected in the WTA disparity map. Inspired by [10], rather than constructing a black-box architecture, we decompose the problem into a sequence of subtasks guided by our understanding of the problem.
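A minimal sketch of how the two disparity maps $D_1$ and $D_2$ could be read off an aggregated cost volume, assuming a NumPy array of shape (H, W, D) in which a lower cost means a better match. Here "second minimum" is taken literally as the second-smallest cost at each pixel; the function name `wta_two_best` is hypothetical, and the exact selection rule used in this work may differ.

```python
import numpy as np

def wta_two_best(cost):
    """Return the disparities with the smallest and second-smallest cost.

    cost: aggregated cost volume of shape (H, W, D); lower is better.
    Ties are broken toward the smaller disparity (stable sort).
    """
    order = np.argsort(cost, axis=-1, kind="stable")
    d1 = order[..., 0]   # winner-takes-all disparity map (D_1)
    d2 = order[..., 1]   # sub-optimal, second minimum disparity map (D_2)
    return d1, d2
```

For a single pixel with costs [3, 1, 2, 5] over four disparities, this returns $D_1 = 1$ and $D_2 = 2$.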

III. MATCHING COST COMPUTATION ARCHITECTURE

The proposed approach focuses mainly on the matching cost computation and disparity refinement steps. We address them with two independent networks rather than a blind end-to-end network, because we believe that the typical pipeline for solving stereo problems can explicitly guide the process. An overview of our approach is illustrated in Fig. 1. The output of the first network initializes the matching cost, which is then aggregated by semi-global matching. Next, the disparity labels associated with the two smallest costs are selected and fed into the subsequent refinement network.
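Semi-global matching aggregates the matching cost along several 1-D paths. As a sketch of one such path, the code below applies Hirschmuller's recurrence $L_r(p,d) = C(p,d) + \min\big(L_r(p-r,d),\; L_r(p-r,d-1)+P_1,\; L_r(p-r,d+1)+P_1,\; \min_k L_r(p-r,k)+P_2\big) - \min_k L_r(p-r,k)$ from left to right along each row, plus its right-to-left mirror. The penalty values `p1` and `p2`, the (H, W, D) layout and the function names are assumptions for illustration; full SGM sums more paths (typically 8 or 16) and may adapt $P_2$ to the local intensity gradient, neither of which is shown.

```python
import numpy as np

def sgm_path_left_to_right(cost, p1=1.0, p2=8.0):
    """Aggregate a (H, W, D) cost volume along the left-to-right SGM path."""
    cost = np.asarray(cost, dtype=float)
    agg = np.empty_like(cost)
    agg[:, 0, :] = cost[:, 0, :]                       # path starts at the image border
    for x in range(1, cost.shape[1]):
        prev = agg[:, x - 1, :]                        # L_r(p - r, .) for every row, (H, D)
        prev_min = prev.min(axis=1, keepdims=True)
        # L_r(p - r, d - 1) and L_r(p - r, d + 1), +inf outside the disparity range
        minus = np.full_like(prev, np.inf)
        minus[:, 1:] = prev[:, :-1]
        plus = np.full_like(prev, np.inf)
        plus[:, :-1] = prev[:, 1:]
        best = np.minimum(prev, np.minimum(minus, plus) + p1)  # small disparity change
        best = np.minimum(best, prev_min + p2)                 # jump to any disparity
        agg[:, x, :] = cost[:, x, :] + best - prev_min  # subtracting the min bounds values
    return agg

def sgm_horizontal(cost, p1=1.0, p2=8.0):
    """Sum of the left-to-right and right-to-left paths (2 of the usual 8 or more)."""
    cost = np.asarray(cost, dtype=float)
    l2r = sgm_path_left_to_right(cost, p1, p2)
    r2l = sgm_path_left_to_right(cost[:, ::-1, :], p1, p2)[:, ::-1, :]
    return l2r + r2l
```

Taking the per-pixel minimum of the summed volume then gives the WTA disparity on the aggregated cost.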

The baseline work [3] adopts a Siamese network that computes the similarity with either a simple dot product or fully connected layers, corresponding to its fast and accurate networks, respectively. Each of the two sub-networks takes a small patch extracted from the left or right image and outputs a feature vector after passing it through several convolutional layers with shared weights. An overview of the baseline Siamese network is depicted in Fig. 2(a). Note that the patch size in [3] is constrained to 11 × 11 and no pooling unit is used. This is because conventional strided pooling reduces resolution and may lose fine details, which is unsuitable for dense correspondence estimation. As a result, the baseline work [3] learns only relatively local information. To enlarge the receptive field without losing resolution, [16] appends a pyramid pooling module to the end of the fully connected layers to learn multi-scale information (see Fig. 2(b)).
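To make the no-pooling constraint concrete: with stride-one 3 × 3 convolutions and no padding, each layer shrinks a patch by two pixels, so five layers reduce an 11 × 11 patch to a single feature vector (11 − 5 · 2 = 1). The sketch below uses this arithmetic to build a toy shared-weight Siamese tower and scores a patch pair by the dot product of L2-normalised features, in the spirit of the fast network of [3]. The layer count, channel width, random weights (standing in for trained ones) and all function names are assumptions for illustration, not the configuration of [3].

```python
import numpy as np

def conv3x3_valid(x, w, b):
    """'Valid' 3x3 convolution (cross-correlation, as in CNN libraries).
    x: (C_in, H, W); w: (C_out, C_in, 3, 3); b: (C_out,). Output: (C_out, H-2, W-2)."""
    h, wd = x.shape[1] - 2, x.shape[2] - 2
    out = np.empty((w.shape[0], h, wd))
    for i in range(h):
        for j in range(wd):
            window = x[:, i:i + 3, j:j + 3]
            out[:, i, j] = np.tensordot(w, window, axes=([1, 2, 3], [0, 1, 2])) + b
    return out

def make_tower(num_layers=5, channels=16, seed=0):
    """Hypothetical random weights for a stack of 3x3 conv layers."""
    rng = np.random.default_rng(seed)
    params, c_in = [], 1
    for _ in range(num_layers):
        w = rng.normal(0.0, np.sqrt(2.0 / (9 * c_in)), size=(channels, c_in, 3, 3))
        params.append((w, np.zeros(channels)))
        c_in = channels
    return params

def tower(patch, params):
    """Shared-weight feature extractor: ReLU after every layer except the last."""
    x = patch[None, :, :]                       # add a channel axis: (1, H, W)
    for k, (w, b) in enumerate(params):
        x = conv3x3_valid(x, w, b)
        if k < len(params) - 1:
            x = np.maximum(x, 0.0)
    return x.reshape(-1)                        # 11x11 input -> (channels,) after 5 layers

def similarity(left_patch, right_patch, params):
    """Fast-style score: dot product of L2-normalised feature vectors."""
    fl, fr = tower(left_patch, params), tower(right_patch, params)  # same weights
    fl = fl / (np.linalg.norm(fl) + 1e-12)
    fr = fr / (np.linalg.norm(fr) + 1e-12)
    return float(fl @ fr)
```

Because both branches reuse `params`, the two patches are mapped into the same feature space, and the score is a cosine similarity in [−1, 1].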

To obtain the similarity score, both the pooling module and the fully connected layers in [16] have to be recomputed for each possible disparity, which multiplies this part of the computation by $D_{\max}$, where $D_{\max}$ is the maximum disparity level. The training procedure takes as long as several weeks, and the testing process is more than four times slower than that of [3].
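The cost of placing modules after the matching step can be seen from how a dot-product network builds its similarity volume. In the sketch below (NumPy, with a hypothetical function name and an assumed (C, H, W) layout for unit-normalised feature maps), the feature maps are computed once per image and only a cheap per-pixel dot product is repeated for each candidate disparity; any pooling or fully connected module that acts on each left-right pair would instead have to run inside this loop, once per disparity.

```python
import numpy as np

def dot_product_similarity_volume(feat_left, feat_right, max_disp):
    """Similarity volume S[y, x, d] = <f_L(y, x), f_R(y, x - d)>.

    feat_left, feat_right: (C, H, W) feature maps, computed once per image.
    Entries with x - d < 0 have no match and stay at -inf.
    """
    _, h, w = feat_left.shape
    vol = np.full((h, w, max_disp), -np.inf)
    for d in range(min(max_disp, w)):          # the only per-disparity work
        vol[:, d:, d] = np.sum(feat_left[:, :, d:] * feat_right[:, :, :w - d], axis=0)
    return vol
```

Negating this volume turns it into a matching cost that can be aggregated and passed to WTA.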

In contrast, we introduce a multi-size, multi-layer pooling module with a stride of one and insert this module before the fully connected layers, which only needs to be

VOLUME 5, 2017 18747