382 IEEE TRANSACTIONS ON EVOLUTIONARY COMPUTATION, VOL. 24, NO. 2, APRIL 2020

(a) (b)

(c) (d)

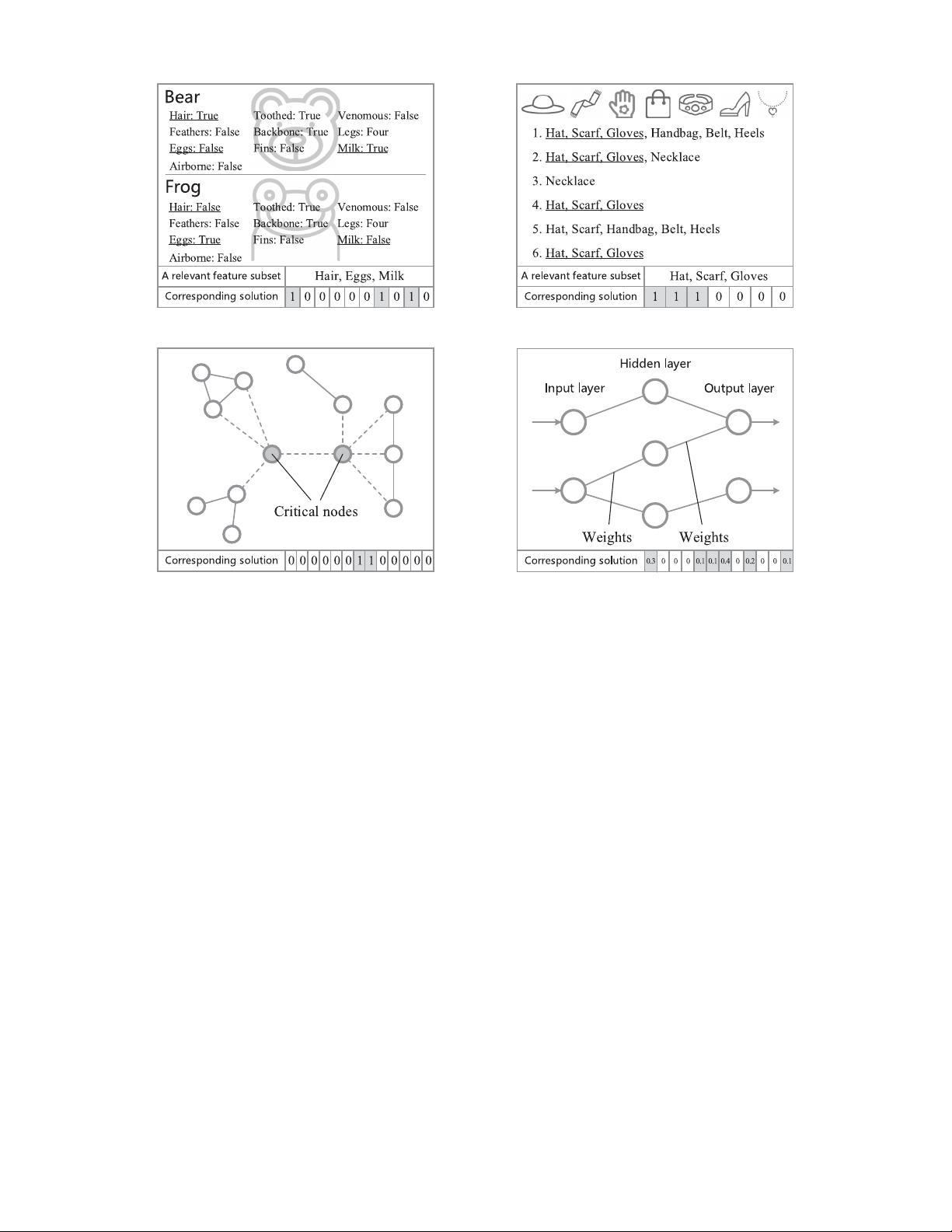

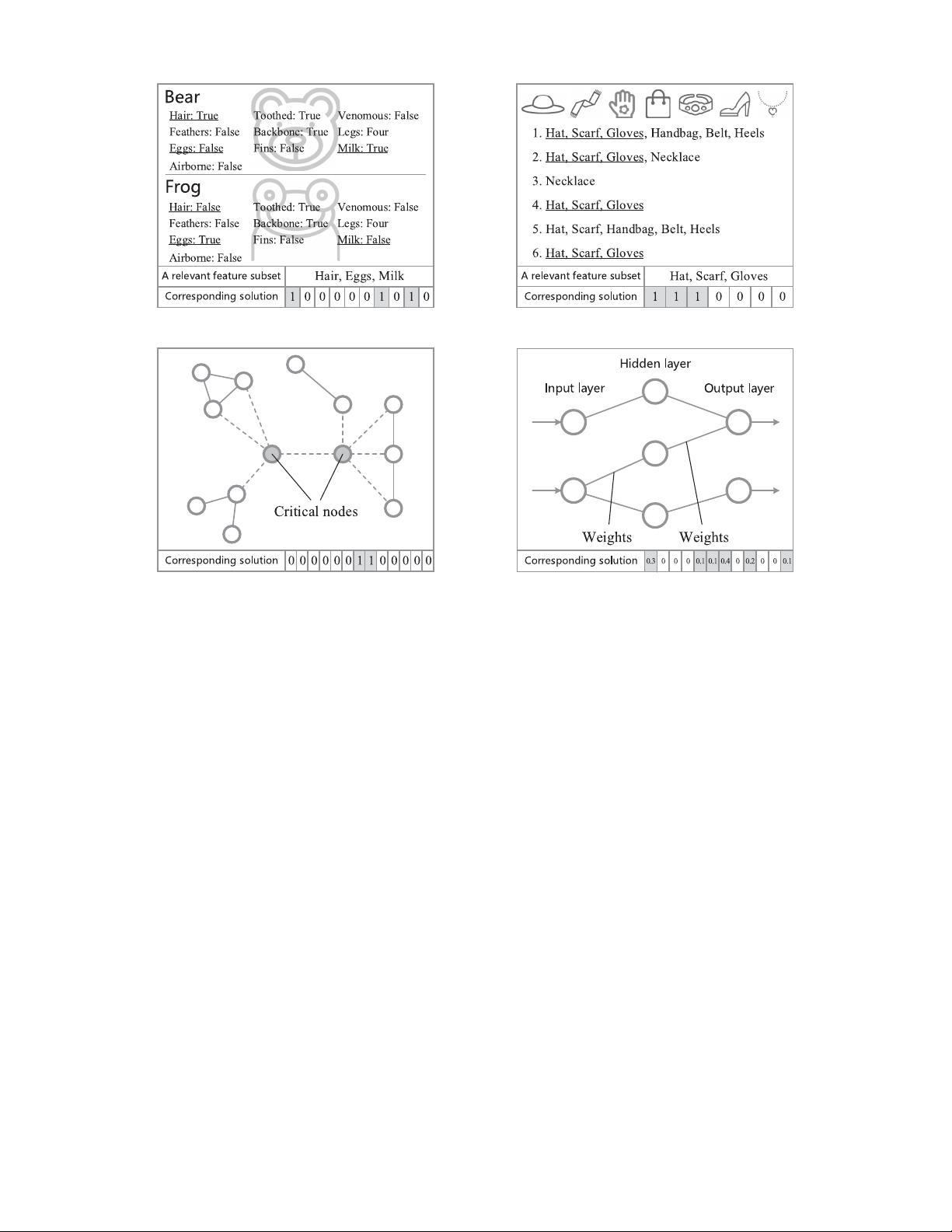

Fig. 2. Examples of four sparse MOPs in applications. (a) Feature selection problem. The features Hair, Eggs,andMilk are relevant features since they can

be used to distinguish the two animals. (b) Pattern mining problem. The items Hat, Scarf,andGloves constitute a recommended pattern since this pattern

occurs in four shopping lists. (c) Critical node detection problem. The two filled circles are critical nodes since the graph will be split into four disconnected

components if they are deleted. (d) Neural network training problem. It aims to optimize the weights of a neural network for minimizing the complexity

and error.

them due to the following two reasons. First, many sparse

SOPs embed the sparsity of solutions in the objective func-

tion [15], [39], which can actually be regarded as the second

objective to be optimized; that is, sparse SOPs can be con-

verted into sparse MOPs. Second, the optimal solutions of

some problems are sparse only if multiple conflicting objec-

tives are considered [2], [10].

B. Four Sparse MOPs in Applications

Four popular sparse MOPs in applications are introduced

in the following, namely, feature selection [8], pattern min-

ing [10], critical node detection [11], and neural network

training [18]. These four problems are described in Fig. 2,

and the mathematical definition of them can be found in the

supplementary material.

1) Feature Selection Problem: For classification tasks, a

large number of features are usually introduced to the dataset,

where many features are irrelevant or redundant. The aim of

feature selection is to choose only relevant features for clas-

sification, which can not only reduce the number of features,

but also improve the classification performance [7]. Therefore,

feature selection is inherently a bi-objective MOP, and some

MOEAs have been employed to solve it [8], [19].

2) Pattern Mining Problem: This problem seeks to find the

most frequent and complete pattern (i.e., a set of items) from a

transaction dataset, where a transaction dataset contains a set

of transactions and each transaction contains a set of items.

For example, when a customer is purchasing one item on a

website, the task is to recommend the matching items to the

customer, by means of mining the best pattern from all the

historical shopping lists. In [21], the pattern mining problem

is regarded as an MOP for maximizing both the frequency

and completeness, where the frequency of a pattern indicates

the ratio of transactions it occurs in, and the completeness

of a pattern indicates its occupancy rate in the transactions it

occurs in.

3) Critical Node Detection Problem: Given a graph G =

(V, E), the critical node detection problem [11] is to select

a point set x ⊆ V, the deletion of which minimizes pairwise

connectivity in the remaining graph G[V\x]. In order to select

fewer points for larger destruction to the graph, both the num-

ber of selected points and the pairwise connectivity in the

remaining graph should be minimized. As a result, the critical

node detection problem is also a subset selection MOP, and

has been tackled by some MOEAs [40], [41].

4) Neural Network Training Problem: Evolutionary algo-

rithms have been deeply studied for training artificial neural

Authorized licensed use limited to: Anhui University. Downloaded on April 22,2020 at 03:26:44 UTC from IEEE Xplore. Restrictions apply.