
this naturally leads to the Rayleigh, chi-squared, noncentral chi-squared, and Rice density functions that you will meet in the problems in Chapters 4, 5, 7, and 9.

Variability in electronic circuits. Although circuit manufacturing processes attempt to ensure that all items have nominal parameter values, there is always some variation among items. How can we estimate the average values in a batch of items without testing all of them? How good is our estimate? You will learn how to do this in Chapter 6 when you study parameter estimation and confidence intervals. Incidentally, the same concepts apply to the prediction of presidential elections by surveying only a few voters.

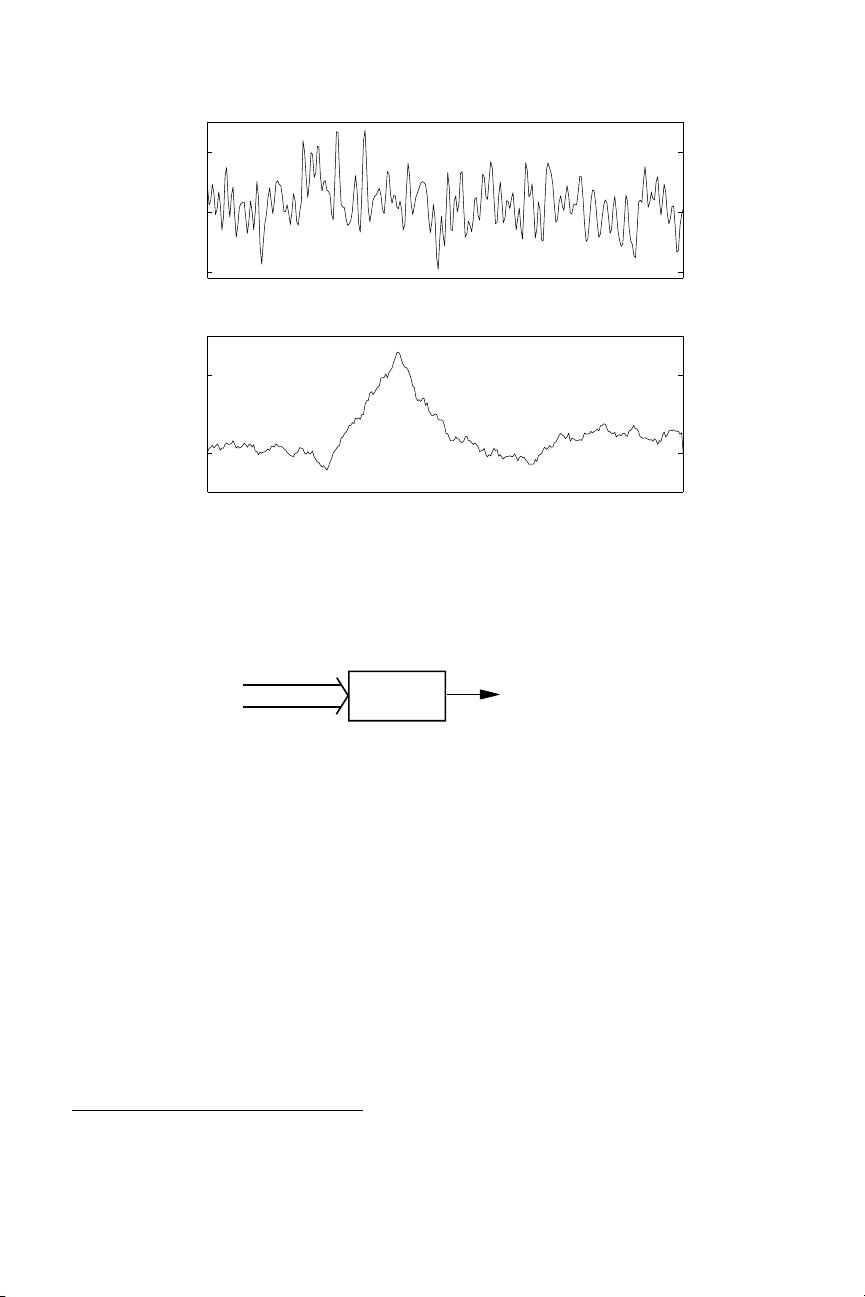

Computer network traffic. Prior to the 1990s, network analysis and design was carried out using long-established Markovian models [41, p. 1]. You will study Markov chains in Chapter 12. As self similarity was observed in the traffic of local-area networks [35], wide-area networks [43], and in World Wide Web traffic [13], a great research effort began to examine the impact of self similarity on network analysis and design. This research has yielded some surprising insights into questions about buffer size vs. bandwidth, multiple-time-scale congestion control, connection duration prediction, and other issues [41, pp. 9–11]. In Chapter 15 you will be introduced to self similarity and related concepts.

In spite of the foregoing applications, probability was not originally developed to handle problems in electrical and computer engineering. The first applications of probability were to questions about gambling posed to Pascal in 1654 by the Chevalier de Méré. Later, probability theory was applied to the determination of life expectancies and life-insurance premiums, to the theory of measurement errors, and to statistical mechanics. Today, the theory of probability and statistics is used in many other fields, such as economics, finance, medical treatment and drug studies, manufacturing quality control, and public opinion surveys.

Relative frequency

Consider an experiment that can result in M possible outcomes, O

1

,...,O

M

.Forex-

ample, in tossing a die, one of the six sides will land facing up. We could let O

i

denote

the outcome that the ith side faces up, i = 1,...,6. Alternatively, we might have a computer

with six processors, and O

i

could denote the outcome that a program or thread is assigned to

the ith processor. As another example, there are M = 52 possible outcomes if we draw one

card from a deck of playing cards. Similarly, there are M = 52 outcomes if we ask which

week during the next year the stock market will go up the most. The simplest example we

consider is the flipping of a coin. In this case there are two possible outcomes, “heads” and

“tails.” Similarly, there are two outcomes when we ask whether or not a bit was correctly

received over a digital communication system. No matter what the experiment, suppose

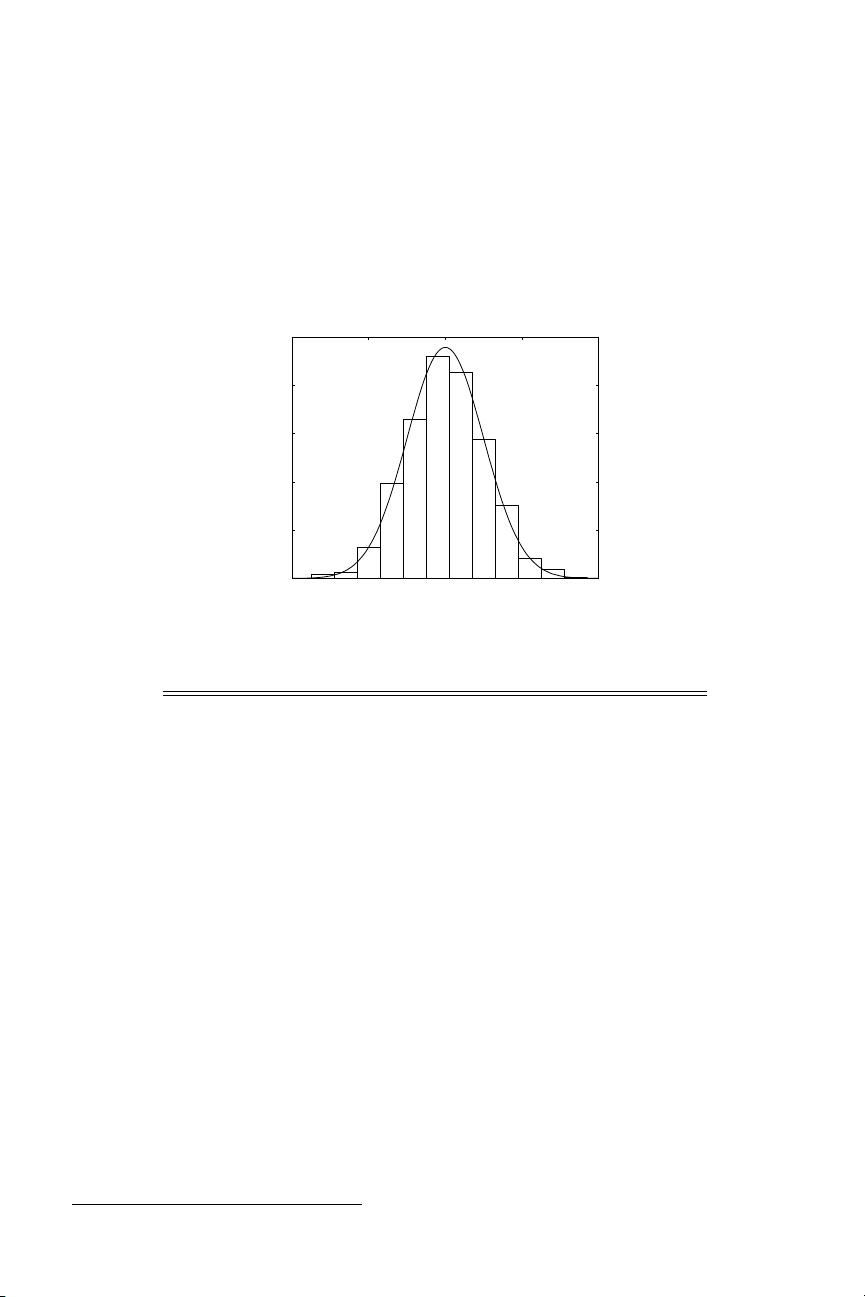

we perform it n times and make a note of how many times each outcome occurred. Each

performance of the experiment is called a trial.

b

Let $N_n(O_i)$ denote the number of times $O_i$ occurred in $n$ trials. The relative frequency of outcome $O_i$,

$$\frac{N_n(O_i)}{n},$$

is the fraction of times $O_i$ occurred.
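To make the definition concrete, here is a minimal Python sketch that computes $N_n(O_i)/n$ from a record of trial results. The function name `relative_frequencies` is hypothetical, and the die tosses are simulated with Python's standard `random` module under the assumption of a fair die and an arbitrary seed; the tally itself works the same way for results recorded from any real experiment.

```python
import random
from collections import Counter


def relative_frequencies(results, outcomes):
    """Return the relative frequency N_n(O_i)/n of every outcome O_i.

    `results` holds the observed outcome of each of the n trials, and
    `outcomes` lists all M possible outcomes O_1, ..., O_M.
    """
    n = len(results)
    if n == 0:
        raise ValueError("at least one trial is required")
    counts = Counter(results)  # counts[o] is N_n(o); an outcome never seen counts as 0
    return {o: counts[o] / n for o in outcomes}


# Coin flipping: n = 4 trials with outcomes "heads" and "tails".
coin = relative_frequencies(["heads", "tails", "heads", "heads"], ["heads", "tails"])
print(coin)  # {'heads': 0.75, 'tails': 0.25}

# Die tossing: O_i is "side i faces up", i = 1, ..., 6.
# ASSUMPTION: the tosses are simulated with a fair die and an arbitrary seed,
# purely to produce trial results for the tally.
die_faces = [1, 2, 3, 4, 5, 6]
rng = random.Random(2024)
tosses = [rng.choice(die_faces) for _ in range(600)]
die = relative_frequencies(tosses, die_faces)
for face in die_faces:
    print(f"side {face}: N_n(O_{face})/n = {die[face]:.3f}")
```

Passing the list of all $M$ outcomes separately, rather than reading them off the results, means that an outcome which never occurred in the $n$ trials is still reported, with relative frequency 0.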

[b] When there are only two outcomes, the repeated experiments are called Bernoulli trials.