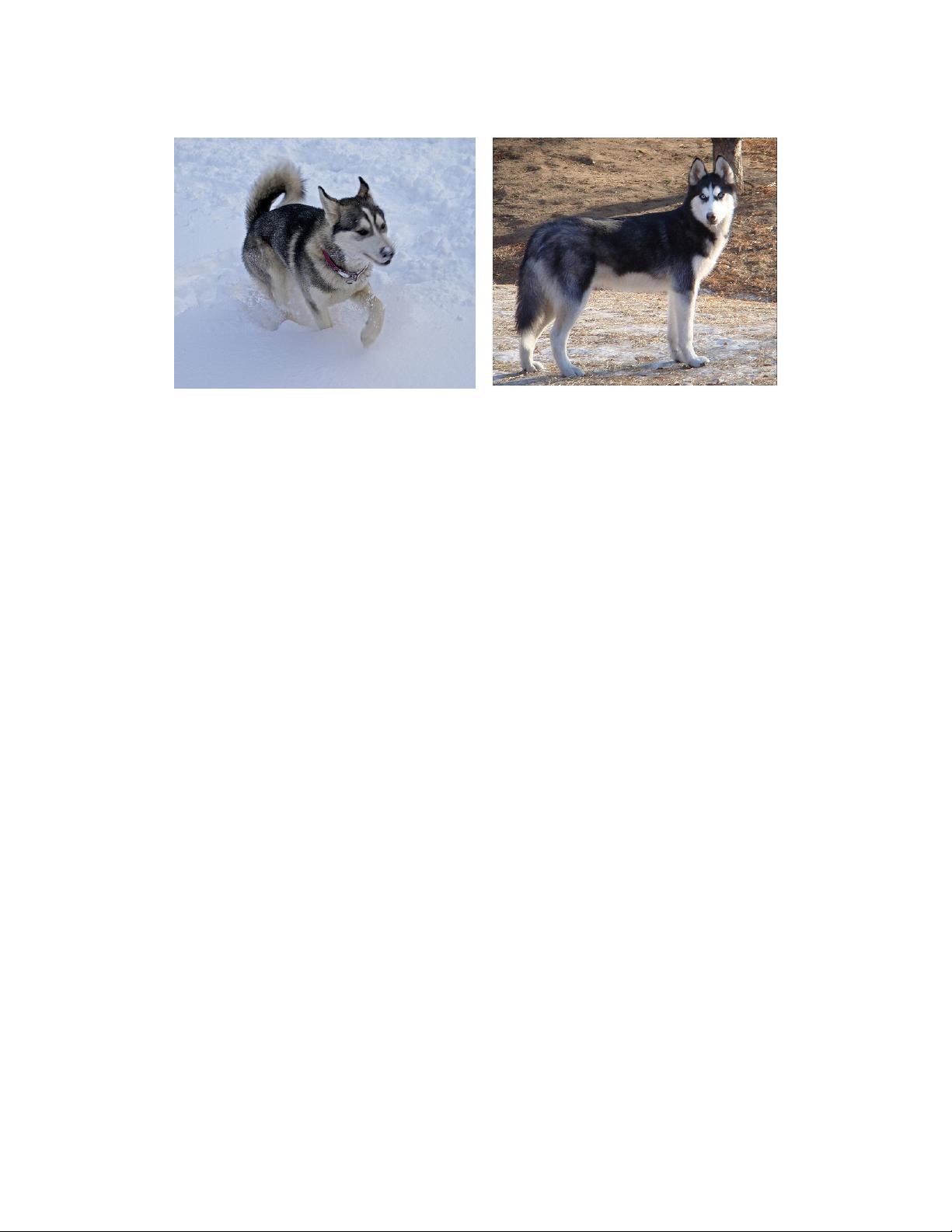

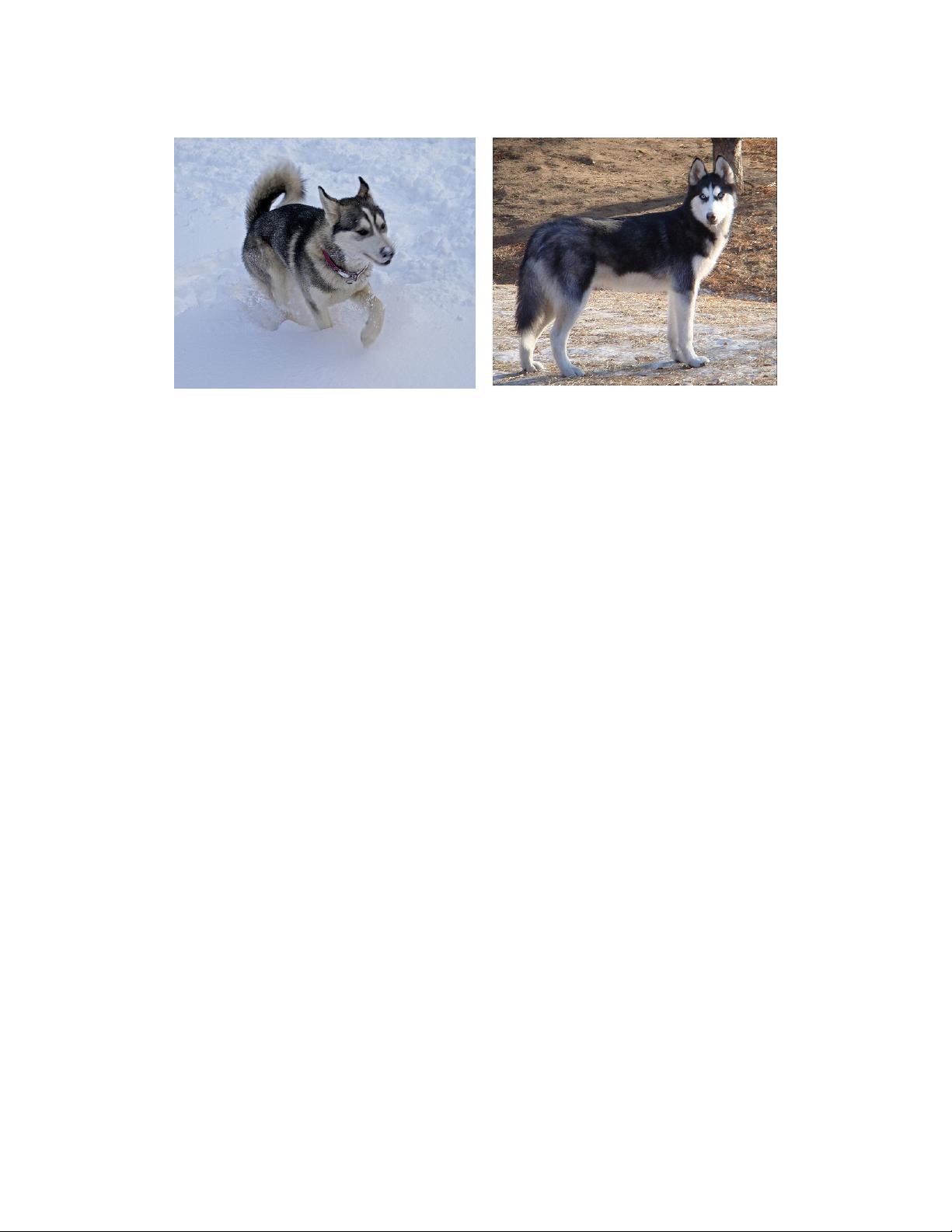

(a) Siberian husky (b) Eskimo dog

Figure 1: Two distinct classes from the 1000 classes of the ILSVRC 2014 classification challenge.

and expensive, especially if expert human raters are necessary to distinguish between fine-grained

visual categories like those in ImageNet (even in the 1000-class ILSVRC subset) as demonstrated

by Figure 1.

Another drawback of uniformly increased network size is the dramatically increased use of compu-

tational resources. For example, in a deep vision network, if two convolutional layers are chained,

any uniform increase in the number of their filters results in a quadratic increase of computation. If

the added capacity is used inefficiently (for example, if most weights end up close to zero),

then a lot of computation is wasted. Since in practice the computational budget is always finite, an

efficient distribution of computing resources is preferred to an indiscriminate increase of size, even

when the main objective is to increase the quality of results.
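
The quadratic growth mentioned above can be checked with a short multiply-accumulate count. The following is a minimal sketch, not taken from the paper: the helper names `conv_macs` and `chained_macs`, the 56×56 feature map, the 3×3 kernels, and the filter counts are all illustrative assumptions.

```python
def conv_macs(height, width, kernel, in_channels, out_channels):
    """Multiply-accumulates for one stride-1, same-padded convolution."""
    return height * width * kernel * kernel * in_channels * out_channels


def chained_macs(filters_1, filters_2, in_channels=3, height=56, width=56, kernel=3):
    """Costs of two chained conv layers; layer 2 consumes layer 1's output."""
    first = conv_macs(height, width, kernel, in_channels, filters_1)
    second = conv_macs(height, width, kernel, filters_1, filters_2)
    return first, second


# Hypothetical baseline and a uniformly widened variant (both filter counts doubled).
base_first, base_second = chained_macs(64, 64)
wide_first, wide_second = chained_macs(128, 128)

first_ratio = wide_first / base_first      # input channels are fixed: linear growth
second_ratio = wide_second / base_second   # inputs and outputs both doubled: quadratic growth
print(first_ratio, second_ratio)
```

Doubling both filter counts doubles the cost of the first layer but quadruples the cost of the second, because the second layer's input channels and output filters grow together.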

The fundamental way of solving both issues would be to move ultimately from fully connected

to sparsely connected architectures, even inside the convolutions. Besides mimicking biological

systems, this would also have the advantage of firmer theoretical underpinnings due to the ground-

breaking work of Arora et al. [2]. Their main result states that if the probability distribution of

the dataset is representable by a large, very sparse deep neural network, then the optimal network

topology can be constructed layer by layer by analyzing the correlation statistics of the activations

of the last layer and clustering neurons with highly correlated outputs. Although the strict

mathematical proof requires very strong conditions, the fact that this statement resonates with the

well-known Hebbian principle – neurons that fire together, wire together – suggests that the underlying

idea is applicable in practice even under less strict conditions.
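
To make the idea of clustering neurons with highly correlated outputs concrete, here is a small sketch. It is not the construction of Arora et al. [2]. It only illustrates grouping units by the correlation statistics of their activations. The function names, the 0.8 threshold, and the synthetic activations are assumptions made for this example.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def cluster_units(activations, threshold=0.8):
    """Group units whose activations correlate above `threshold`.

    `activations[u]` holds the responses of unit `u` over a set of inputs.
    Clusters are the connected components of the thresholded correlation graph.
    """
    parent = list(range(len(activations)))

    def find(u):
        # Union-find root lookup with path halving.
        while parent[u] != u:
            parent[u] = parent[parent[u]]
            u = parent[u]
        return u

    for i in range(len(activations)):
        for j in range(i + 1, len(activations)):
            if pearson(activations[i], activations[j]) > threshold:
                parent[find(j)] = find(i)

    clusters = {}
    for u in range(len(activations)):
        clusters.setdefault(find(u), []).append(u)
    return sorted(clusters.values())


# Synthetic activations: units 0-2 follow one latent signal, units 3-5 another.
rng = random.Random(0)
latent_a = [rng.gauss(0, 1) for _ in range(500)]
latent_b = [rng.gauss(0, 1) for _ in range(500)]
activations = [[v + rng.gauss(0, 0.1) for v in latent_a] for _ in range(3)]
activations += [[v + rng.gauss(0, 0.1) for v in latent_b] for _ in range(3)]

clusters = cluster_units(activations)
print(clusters)
```

In this toy setting the units that share a latent signal end up in the same cluster, which is the kind of grouping a next layer could then connect to sparsely.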

On the downside, today's computing infrastructures are very inefficient when it comes to numerical

calculation on non-uniform sparse data structures. Even if the number of arithmetic operations is

reduced by 100×, the overhead of lookups and cache misses is so dominant that switching to sparse

matrices would not pay off. The gap is widened even further by the use of steadily improving,

highly tuned, numerical libraries that allow for extremely fast dense matrix multiplication, exploit-

ing the minute details of the underlying CPU or GPU hardware [16, 9]. Also, non-uniform sparse

models require more sophisticated engineering and computing infrastructure. Most current vision-oriented

machine learning systems utilize sparsity in the spatial domain merely by virtue of employing

convolutions. However, convolutions are implemented as collections of dense connections

to the patches in the earlier layer. ConvNets have traditionally used random and sparse connection

tables in the feature dimensions since [11] in order to break the symmetry and improve learning, but the

trend changed back to full connections with [9] in order to better optimize parallel computing. The

uniformity of the structure, a large number of filters, and a greater batch size allow for

efficient dense computation.
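
The remark that convolutions are implemented as dense connections to patches can be illustrated with a small sketch. The patch-flattening approach shown here (often called im2col) is one common way to do this. It is an assumption for illustration and is not named in the text above. All function names are hypothetical.

```python
def conv2d_direct(image, kernel):
    """Valid, stride-1 2D cross-correlation computed with nested loops."""
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(k) for j in range(k))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def im2col(image, k):
    """Flatten every k-by-k patch of the image into one row of a dense matrix."""
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    return [
        [image[r + i][c + j] for i in range(k) for j in range(k)]
        for r in range(out_h)
        for c in range(out_w)
    ]


def conv2d_as_matmul(image, kernel):
    """Same result as conv2d_direct, computed as patch matrix times flattened kernel."""
    k = len(kernel)
    out_w = len(image[0]) - k + 1
    flat_kernel = [w for row in kernel for w in row]
    patches = im2col(image, k)
    flat_out = [sum(p * w for p, w in zip(patch, flat_kernel)) for patch in patches]
    # Reshape the flat result back into rows of the output feature map.
    return [flat_out[r:r + out_w] for r in range(0, len(flat_out), out_w)]


image = [[1, 2, 3, 0], [4, 5, 6, 1], [7, 8, 9, 2], [0, 1, 2, 3]]
kernel = [[1, 0], [0, -1]]

direct = conv2d_direct(image, kernel)
dense = conv2d_as_matmul(image, kernel)
print(direct == dense)
```

Every output position is a dense dot product between one patch and the full kernel, so the sparsity is only spatial: within a patch, each input is connected to each filter weight.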

This raises the question of whether there is any hope for a next, intermediate step: an architecture

that makes use of the extra sparsity, even at filter level, as suggested by the theory, but exploits our
