226 IEEE TRANSACTIONS ON COMMUNICATIONS, VOL. 54, NO. 2, FEBRUARY 2006

Mathematical Analysis of the Impact of Timing Synchronization Errors on the Performance of an OFDM System

Yasamin Mostofi, Member, IEEE, and Donald C. Cox, Fellow, IEEE

Abstract—This letter addresses the effect of timing synchronization errors that are introduced by an erroneous detection of the start of an orthogonal frequency-division multiplexing (OFDM) symbol.¹ Such errors degrade the performance of an OFDM receiver by introducing intercarrier interference (ICI) and intersymbol interference (ISI). They can occur due to either an erroneous initial frame synchronization or a change in the power delay profile of the channel. In this letter, we provide a mathematical analysis of the effect of timing errors on the performance of an OFDM receiver in a frequency-selective fading environment.² We find exact formulas for the power of interference terms and the resulting average signal-to-interference ratio. We further extend the analysis to the subsample level. Our results show the nonsymmetric effect of timing errors on the performance of an OFDM system. Finally, simulation results confirm the analysis.³

Index Terms—Orthogonal frequency-division multiplexing (OFDM), signal-to-interference ratio (SIR), timing-synchronization errors.

I. INTRODUCTION

Orthogonal frequency-division multiplexing (OFDM) divides the given bandwidth into narrow subchannels. It handles delay spread by transmitting low-rate data streams in parallel on these subchannels [1]. By adding a guard interval to the beginning of each OFDM symbol, the effect of delay spread appears as a multiplication in the frequency domain, provided that synchronization is perfect and the guard interval is at least as long as the channel delay spread. The guard interval also prevents intersymbol interference (ISI).⁴
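A minimal numerical sketch of the guard-interval argument above, written in Python with NumPy (the letter itself contains no code). With perfect timing and a cyclic prefix at least as long as the channel memory, discarding the prefix and taking an FFT should give $Y_k = H_k X_k$ on every subcarrier. The parameters ($N = 64$ subcarriers, a $G = 16$-sample prefix, a channel with $L + 1 = 6$ taps), the QPSK data, the omission of noise, and the helper name `ofdm_cp_demo` are all illustrative assumptions.

```python
import numpy as np

def ofdm_cp_demo(N=64, G=16, L=5, seed=0):
    """Noise-free check of the guard-interval argument: with perfect timing
    and G >= L, the receiver FFT output satisfies Y_k = H_k * X_k."""
    rng = np.random.default_rng(seed)
    # Unit-power QPSK symbols on the N subcarriers (illustrative choice)
    X = (rng.choice([-1.0, 1.0], N) + 1j * rng.choice([-1.0, 1.0], N)) / np.sqrt(2)
    x = np.fft.ifft(X)                       # time-domain OFDM symbol (1/N-scaled IDFT)
    x_cp = np.concatenate([x[N - G:], x])    # prepend the G-sample cyclic prefix
    # L + 1 complex Gaussian taps (Rayleigh amplitude, uniform phase), unit average total power
    h = (rng.standard_normal(L + 1) + 1j * rng.standard_normal(L + 1)) / np.sqrt(2 * (L + 1))
    r = np.convolve(x_cp, h)                 # linear convolution with the channel
    y = r[G:G + N]                           # perfect timing: discard the CP, keep N samples
    Y = np.fft.fft(y)                        # receiver FFT
    H = np.fft.fft(h, N)                     # channel frequency response on the N bins
    return X, Y, H
```

After `X, Y, H = ofdm_cp_demo()`, the check `np.allclose(Y, H * X)` should hold. If `L` is made larger than `G`, the equality no longer holds, because the prefix can no longer absorb the channel memory and the linear convolution stops looking circular to the FFT.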

Timing-synchronization errors, however, degrade the performance of an OFDM receiver by introducing intercarrier interference (ICI) and ISI. Several methods have been proposed for timing synchronization in OFDM receivers [2]–[6]. To evaluate and improve the performance of these methods, a comprehensive mathematical analysis of the effect of timing errors and the underlying interference terms is necessary.
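The interference described above, and the nonsymmetric behavior mentioned in the abstract, can be probed with a short Monte Carlo sketch in Python with NumPy. This is not the authors' analytical method. It sends three back-to-back OFDM symbols through a fixed random channel with $L + 1$ taps (delays of 0 to $L$ samples), opens the FFT window $d$ samples late ($d > 0$) or early ($d < 0$), and reports an SIR in which the useful part of $Y_k$ is its projection onto $X_k$, so a pure per-subcarrier phase rotation counts as signal. That SIR definition, the noise-free setting, the QPSK data, the parameter values, and the helper name `sir_vs_timing_offset` are assumptions made for illustration.

```python
import numpy as np

def sir_vs_timing_offset(d, N=64, G=16, L=4, trials=1000, seed=1):
    """Monte Carlo SIR (linear scale, noise-free) when the receiver FFT window
    starts d samples late (d > 0) or |d| samples early (d < 0)."""
    if abs(d) > G:
        raise ValueError("this sketch only supports |d| <= G")
    rng = np.random.default_rng(seed)
    # Fixed channel: L + 1 complex Gaussian taps with unit average total power
    h = (rng.standard_normal(L + 1) + 1j * rng.standard_normal(L + 1)) / np.sqrt(2 * (L + 1))

    def qpsk(shape):
        return (rng.choice([-1.0, 1.0], shape) + 1j * rng.choice([-1.0, 1.0], shape)) / np.sqrt(2)

    sent, received = [], []
    for _ in range(trials):
        X3 = qpsk((3, N))                       # previous, current, next symbol
        x3 = np.fft.ifft(X3, axis=1)            # 1/N-scaled IDFT of each row
        tx = np.concatenate([np.concatenate([x[N - G:], x]) for x in x3])
        r = np.convolve(tx, h)                  # linear convolution with the channel
        start = (N + G) + G + d                 # ideal window start of the current symbol, shifted by d
        received.append(np.fft.fft(r[start:start + N]))
        sent.append(X3[1])
    X, Y = np.array(sent), np.array(received)
    # Useful part: per-subcarrier projection of Y_k onto X_k (absorbs any pure phase rotation)
    alpha = np.mean(Y * X.conj(), axis=0) / np.mean(np.abs(X) ** 2, axis=0)
    interference = Y - alpha * X                # ICI + ISI left after removing the useful part
    p_int = np.mean(np.abs(interference) ** 2)
    if p_int < 1e-20:                           # numerically zero: interference-free
        return np.inf
    return np.mean(np.abs(alpha * X) ** 2) / p_int
```

With the defaults ($N = 64$, $G = 16$, $L = 4$), starting early by up to $G - L = 12$ samples should return `inf`, since the window then contains only samples of the current symbol and the offset shows up as a phase rotation on each subcarrier. A late start by even one sample, or an early start by 13 samples, returns a finite value.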

Paper approved by R. De Gaudenzi, the Editor for Synchronization and CDMA of the IEEE Communications Society. Manuscript received July 30, 2003; revised July 27, 2005. This paper was presented in part at the 37th Asilomar Conference on Signals, Systems, and Computers, Monterey, CA, November 2003.

Y. Mostofi is with the California Institute of Technology, Pasadena, CA 91125 USA (e-mail: yasi@cds.caltech.edu).

D. C. Cox is with the Department of Electrical Engineering, Stanford University, Stanford, CA 94305 USA (e-mail: dcox@spark.stanford.edu).

Digital Object Identifier 10.1109/TCOMM.2005.861675

¹ Throughout this letter, the term “timing error” refers to this type of error.

² The analysis presented in this letter is for the case in which no equalization technique is used to mitigate the introduced ICI and ISI.

³ The results of this letter can be easily extended to address the effect of such errors on discrete multitone (DMT) modems.

⁴ Note that intersymbol interference here refers to inter-OFDM-symbol interference.

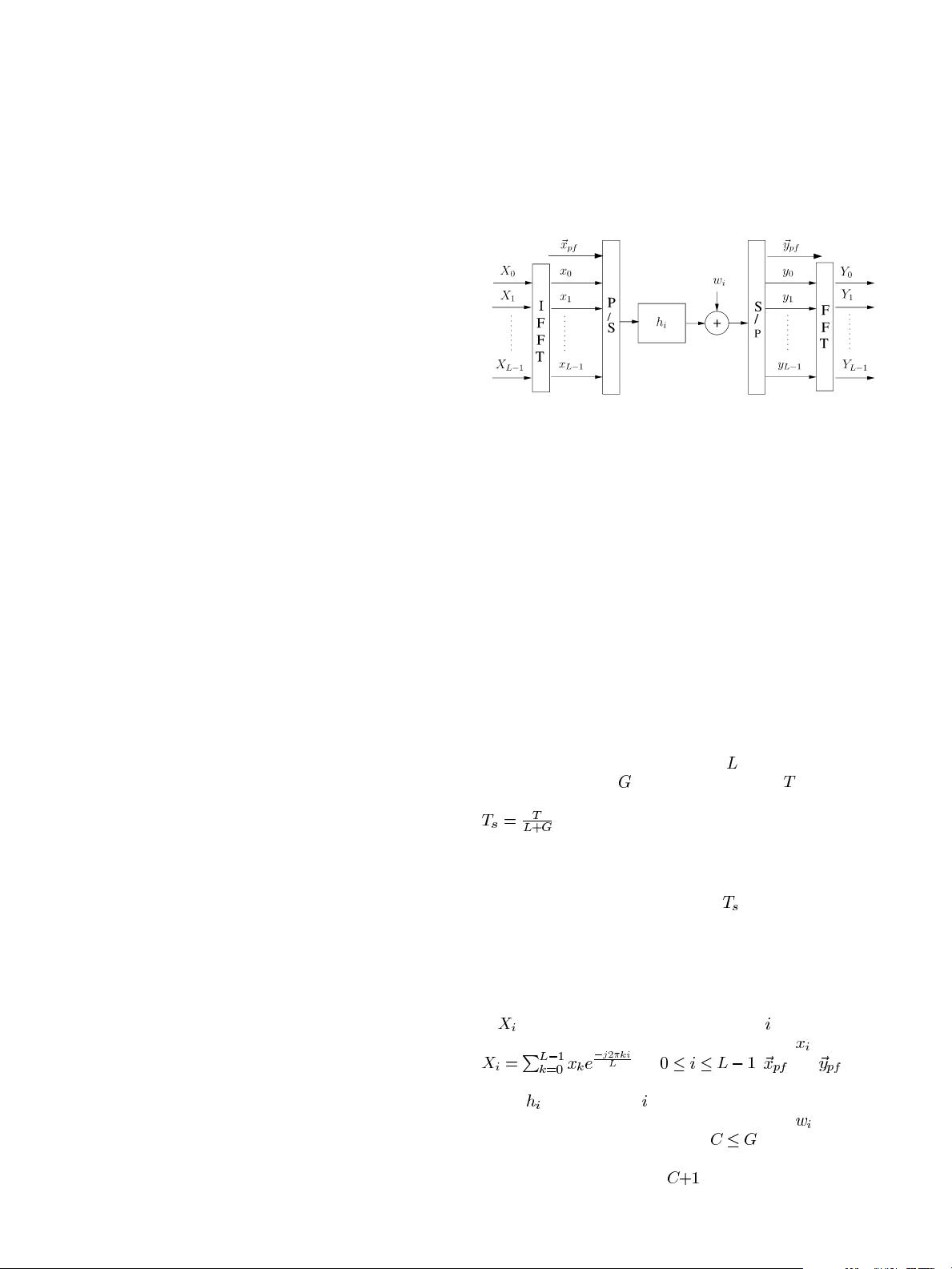

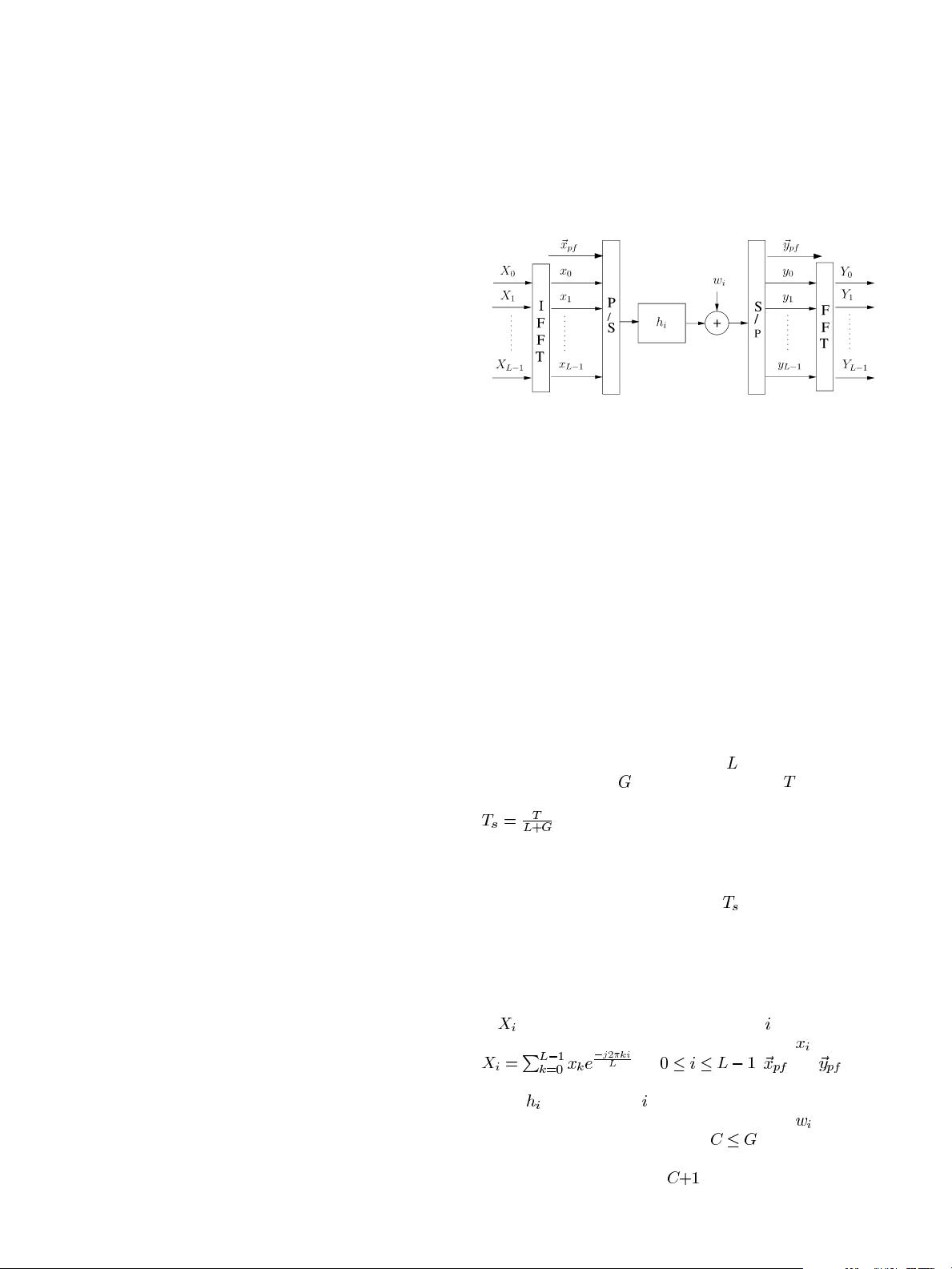

Fig. 1. Discrete baseband equivalent model.

The authors of [5] have provided an approximate formula, of limited applicability, for the interference caused by timing errors. The goal of this letter is to provide an exact mathematical analysis of the effect of timing errors, which can serve as a basis for evaluating the performance of different synchronization methods. Furthermore, while most work on timing synchronization uses sampling-period-level modeling, we also show how to analyze and evaluate the performance at the subsample level.

II. EFFECT OF TIMING SYNCHRONIZATION ERRORS (SAMPLING-PERIOD LEVEL)

Consider an OFDM system, shown in Fig. 1, in which the available bandwidth is divided into $N$ subchannels and the guard interval spans $G$ sampling periods. Let $T$ represent the length of an OFDM symbol (including the guard interval). Then $T_s = T/(N+G)$ is the sampling period. In this section, we keep the analysis at the sampling-period level, which translates to the following assumptions:

1) no oversampling is done in the receiver;

2) the timing error is a multiple of $T_s$.

We relax these conditions in Section III, where we extend the analysis to include oversampling.
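As a small worked example of the sampling-period-level bookkeeping, the Python sketch below computes the sampling period $T_s = T/(N+G)$ for $N$ subchannels, a $G$-sample guard interval, and symbol length $T$, and converts a timing error into an integer number of samples, rejecting errors that violate assumption 2). The numbers used ($N = 64$, $G = 16$, $T = 4\ \mu$s, giving $T_s = 50$ ns) are IEEE 802.11a-style values chosen only for illustration, and both function names are hypothetical.

```python
def sampling_period(T, N, G):
    """T_s = T / (N + G): one OFDM symbol of duration T spans N + G samples."""
    return T / (N + G)


def timing_error_in_samples(tau, Ts, tol=1e-9):
    """Express a timing error tau (in seconds) as an integer number of samples,
    as the sampling-period-level model requires; raise if tau is not a
    multiple of Ts (within a small relative tolerance)."""
    m = round(tau / Ts)
    if abs(tau / Ts - m) > tol:
        raise ValueError("timing error is not a multiple of the sampling period")
    return m
```

For instance, with `Ts = sampling_period(4e-6, 64, 16)`, a timing error of -150 ns maps to -3 samples, whereas 25 ns (half a sample) raises `ValueError`; that subsample case falls outside this section's model and is what the oversampling extension addresses.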

A. System Model

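$X_{i,k}$ represents the transmitted data in the $k$th frequency subband of the $i$th OFDM symbol and is related to the time-domain sequence $x_{i,n}$ as follows:

$$x_{i,n} = \frac{1}{N}\sum_{k=0}^{N-1} X_{i,k}\, e^{j2\pi nk/N}$$

for $0 \le n \le N-1$. $x_{i,\mathrm{cp}}$ and $y_{i,\mathrm{cp}}$ represent the transmitted and received cyclic prefixes (CPs), respectively.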
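A direct transcription of the modulation step as a Python/NumPy sketch, assuming the time-domain samples are the $1/N$-scaled $N$-point IDFT of the subcarrier data $X_{i,k}$ and that the cyclic prefix copies the last $G$ samples of each symbol (the usual construction, assumed here). Writing the IDFT as an explicit matrix keeps each term of the sum visible; `ofdm_modulate` is a hypothetical name.

```python
import numpy as np

def ofdm_modulate(X, G):
    """Map one block of N subcarrier symbols X[k] to
    x[n] = (1/N) * sum_k X[k] * exp(j 2 pi n k / N), n = 0..N-1,
    then prepend the G-sample cyclic prefix x[N-G..N-1]."""
    X = np.asarray(X, dtype=complex)
    N = len(X)
    n = np.arange(N)
    # Explicit IDFT matrix: entry (n, k) is exp(j 2 pi n k / N)
    W = np.exp(2j * np.pi * np.outer(n, n) / N)
    x = W @ X / N
    return np.concatenate([x[N - G:], x])   # CP followed by the N data samples
```

The explicit matrix costs $O(N^2)$ operations; `np.fft.ifft` computes the same $1/N$-scaled transform in $O(N \log N)$, and the two agree to floating-point precision, which makes the matrix form useful mainly as a readable reference.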

$h_l$ represents the $l$th channel tap with Rayleigh fading amplitude and uniformly distributed phase, and $w_{i,n}$ is additive white Gaussian noise (AWGN). Let $L$ represent the length of the channel delay spread normalized by the sampling period. Then the channel has $L+1$ taps. In the absence of timing

0090-6778/$20.00 © 2006 IEEE