"PyTorch Deep Learning: From Artificial Neural Networks to Infinite Possibilities"


Updated 2024-03-21


Chapter 1 of "Advancements in Deep Learning Technologies Based on PyTorch" delves into the fundamentals of artificial neural networks. The chapter begins by exploring the origins of human curiosity and our quest to understand complex concepts such as the universe, singularity, and the meaning of life. As our brains evolved to become more efficient, we began to ponder deep questions and seek out answers.

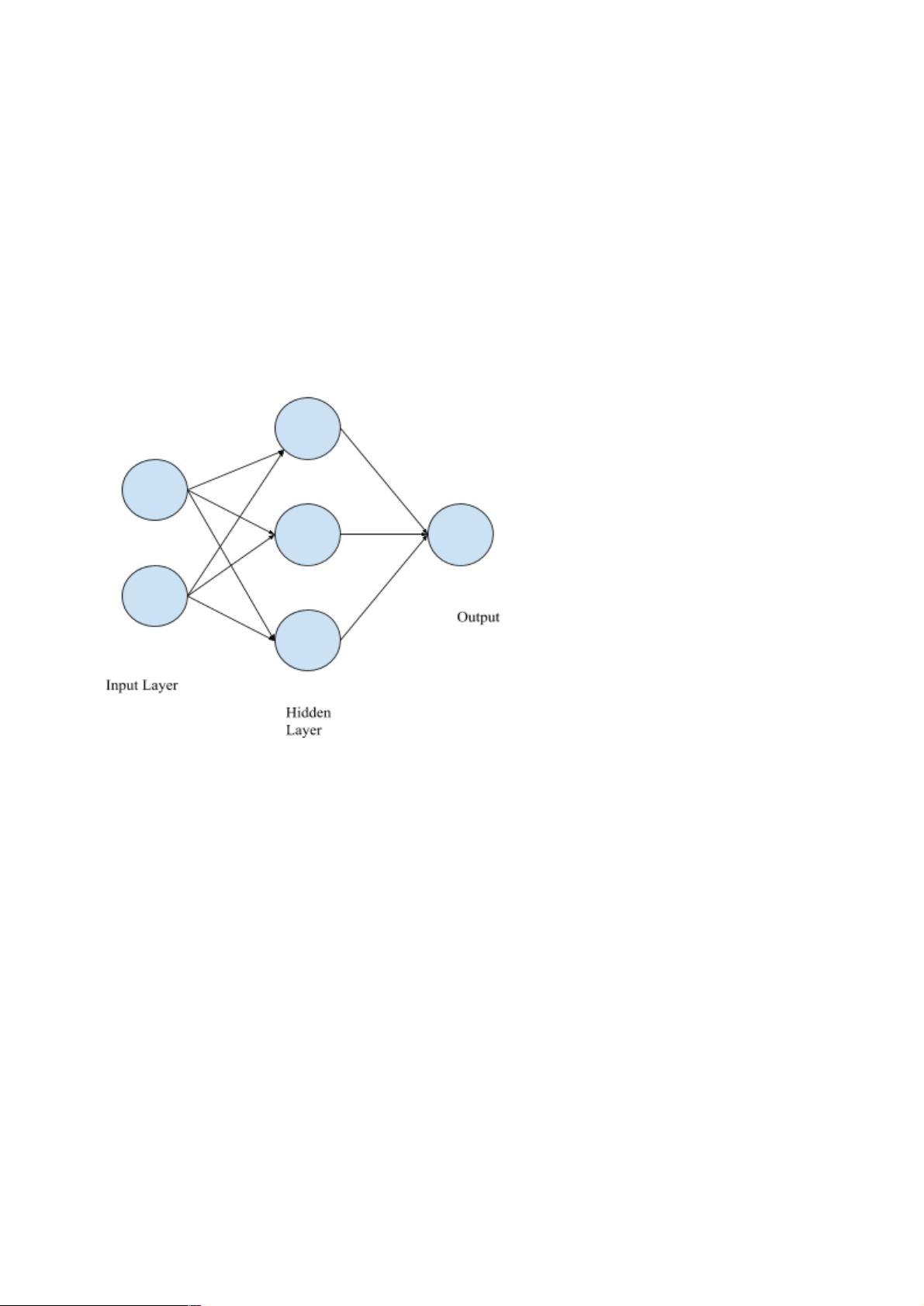

Artificial neural networks are inspired by the intricate workings of the human brain, with the goal of mimicking its capabilities in processing information and making decisions. The components of artificial neural networks include neurons, which are the basic processing units, layers that organize and connect neurons, weights that determine the strength of connections between neurons, biases that help adjust the output of neurons, activation functions that introduce non-linearity, and loss functions that evaluate the performance of the network.
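The components listed above can be sketched in a few lines of code. This is a minimal illustration in plain Python rather than PyTorch, so the arithmetic stays visible. The network shape, the parameter values, and all function names (`sigmoid`, `neuron`, `layer`, `mse_loss`, `forward`) are assumptions made for this example and are not taken from the book.

```python
import math


def sigmoid(z):
    """Activation function: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """One neuron: weighted sum of the inputs plus a bias, then the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


def layer(inputs, weight_rows, biases):
    """A layer is a group of neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


def mse_loss(predicted, target):
    """Loss function: mean squared error between prediction and target."""
    return sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(target)


# Hypothetical network: 2 inputs -> 2 hidden neurons -> 1 output neuron.
# The weights and biases are made-up values, not trained ones.
hidden_weights = [[0.5, -0.5], [0.25, 0.75]]
hidden_biases = [0.0, 0.1]
output_weights = [[1.0, -1.0]]
output_biases = [0.0]


def forward(inputs):
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = layer(inputs, hidden_weights, hidden_biases)
    return layer(hidden, output_weights, output_biases)


prediction = forward([1.0, 2.0])
loss = mse_loss(prediction, [1.0])
print("prediction:", prediction, "loss:", loss)
```

In PyTorch the same roles are typically filled by `torch.nn.Linear` (weights and biases of a layer), an activation module such as `torch.nn.Sigmoid`, and a loss such as `torch.nn.MSELoss`.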

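Neural networks are trained with algorithms that iteratively adjust the weights and biases to minimize the difference between the predicted output and the actual output, as measured by the loss function. Backpropagation computes the gradient of the loss with respect to each weight and bias, and an optimizer such as gradient descent uses those gradients to update the parameters. Repeating this cycle is what allows the network to learn from data and improve its performance over time.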
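The training loop can be illustrated with the smallest possible case: a single linear neuron fitted by gradient descent. This is a sketch under stated assumptions, not the book's code. The toy data, the learning rate, the epoch count, and the `train` function are all invented for illustration, and the gradients are written out by hand with the chain rule, which is the step that backpropagation automates in larger networks.

```python
# Toy data drawn from y = 2x + 1 (invented for this illustration).
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]


def train(samples, learning_rate=0.05, epochs=2000):
    """Fit pred = w * x + b to the samples by minimizing mean squared error."""
    w, b = 0.0, 0.0
    losses = []
    n = len(samples)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        loss = 0.0
        for x, y in samples:
            pred = w * x + b              # forward pass
            error = pred - y
            loss += error ** 2 / n        # mean squared error
            # Backward pass via the chain rule:
            # d(loss)/d(pred) = 2 * error / n, d(pred)/dw = x, d(pred)/db = 1
            grad_w += 2 * error * x / n
            grad_b += 2 * error / n
        # Gradient descent step: move each parameter against its gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        losses.append(loss)
    return w, b, losses


w, b, losses = train(data)
print("w:", w, "b:", b, "final loss:", losses[-1])
```

With these settings the learned `w` and `b` approach 2 and 1, the values used to generate the data. In PyTorch, `loss.backward()` computes the gradients and an optimizer such as `torch.optim.SGD` applies the update step.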

Overall, artificial neural networks are a core tool in deep learning, enabling computers to perform complex tasks such as image recognition, natural language processing, and autonomous decision-making. Understanding their components and training principles gives researchers the basis for extending AI technology and applying it across industries. "Advancements in Deep Learning Technologies Based on PyTorch" gives an overview of these concepts and their applications.
