Accelerating SLIDE Deep Learning on Modern CPUs: Vectorization, Quantizations, Memory Optimizations, and More

3

2

1

4

5

6

Feed-forward Pass

!

"

"

… !

#

"

00 … 00

00 … 01

00 … 10

… … … …

11 … 11

!

"

$

… !

#

$

00 … 00

00 … 01

00 … 10

… … … …

11 … 11

…

Thread 1 %

&

3

2

1

4

5

6

Hash Tables

Parameters

'

(

&

) '

(

*

) '

(

+

) '

(

,

) '

(

-

) '

(

.

…

Thread 2 %

*

1 4

6

Active Set

1

2

3

4

Query

Shared Memory

2 6

Active Set

1

2

3

4

Query

Activation

Activation

Retrieval

Retrieval

3

2

1

4

5

6

Backpropagation

!

"

"

… !

#

"

00 … 00

00 … 01

00 … 10

… … … …

11 … 11

!

"

$

…

!

#

$

00 … 00

00 … 01

00 … 10

… … … …

11 … 11

…

Thread 1 %

&

3

2

1

4

5

6

Hash Tables

Parameters

'

(

&

) '

(

*

) '

(

+

) '

(

,

) '

(

-

) '

(

.

…

Thread 2 %

*

1 4

6

Active Set

1

2

3

4

Shared Memory

2 6

Active Set

1

2

3

4

Activation

Activation

Update

Gradient

Gradient

Update

Update

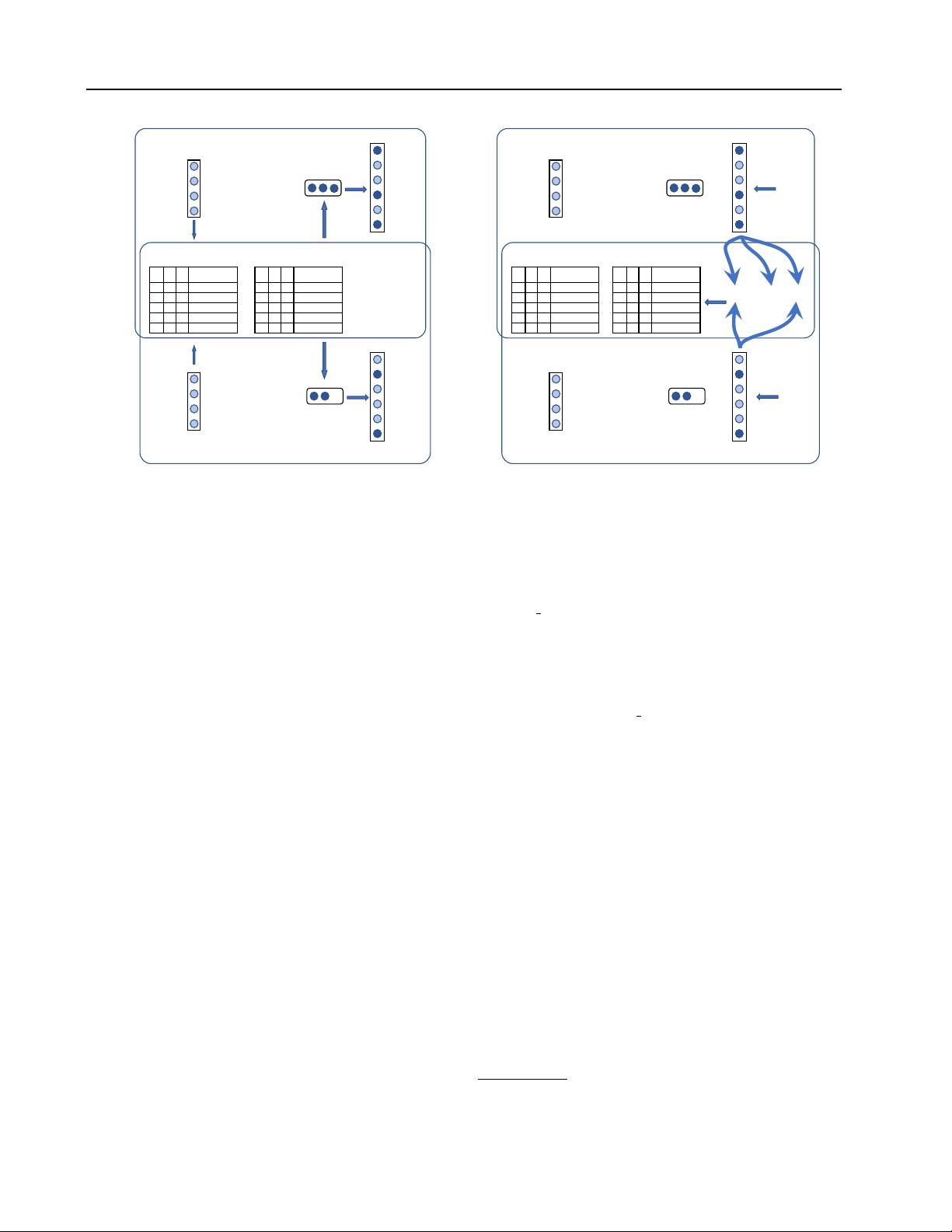

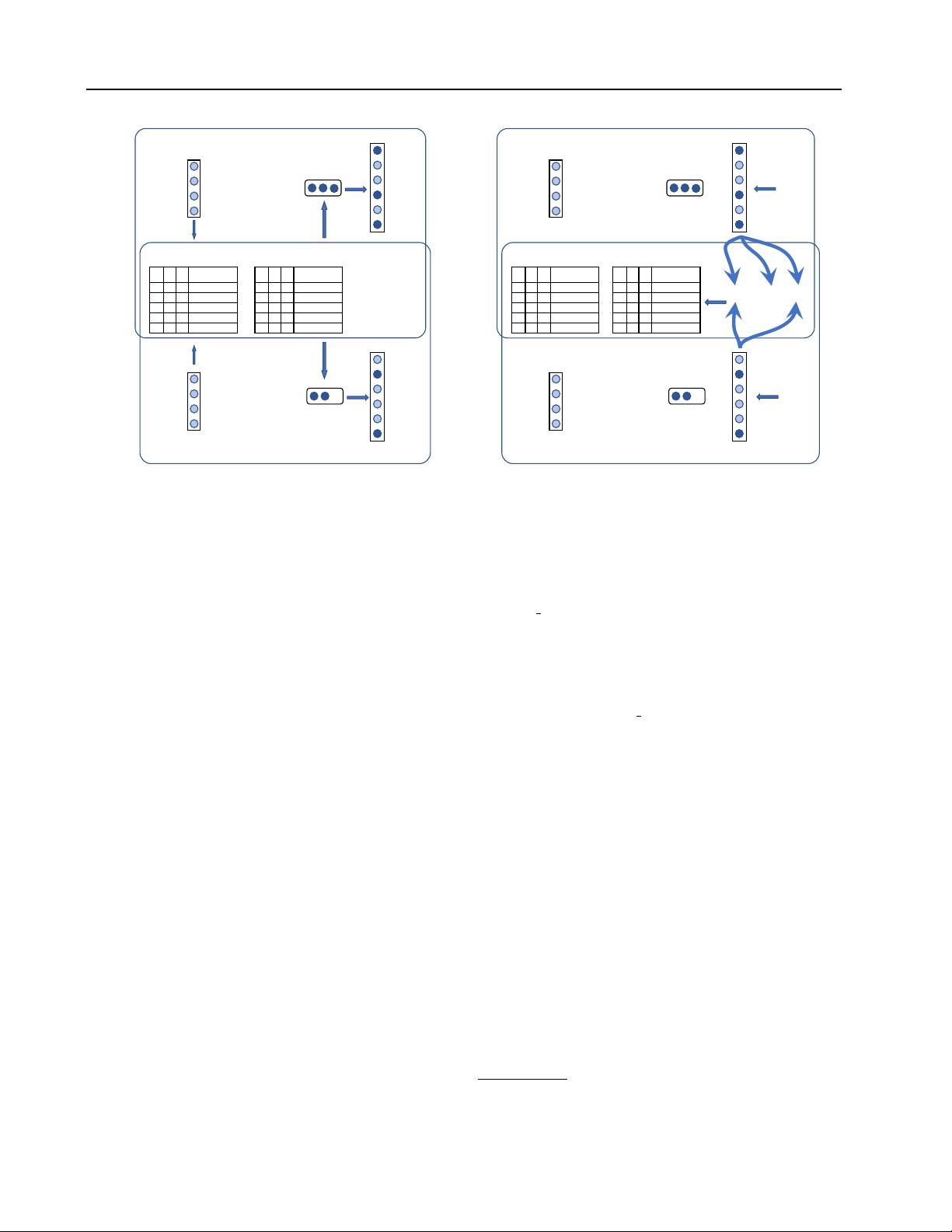

Figure 1.

Illustration of feed-forward and backward pass. Two threads are processing the two data instances

x

1

and

x

2

in parallel with

LSH hash tables and parameters in shared memory space.

updates. For example, on the right-hand side of Fig. 1, only the weights of neurons 1, 4, and 6 for data $x_1$ and the weights of neurons 2 and 6 for data $x_2$ are updated. If a fraction $p$ of neurons in one layer is active, the fraction of weights that would be updated is only $p^2$, which is a significant reduction of computation when $p \ll 1$. Afterward, the hash tables are updated according to the new weights' values. If a neuron's buckets in the hash tables need to be updated, the neuron is deleted from its current bucket in the hash table and re-added to the new one. We refer interested readers to (Chen et al., 2019) for a detailed review of the algorithm.
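The sparse update and the bucket move described above can be sketched in a few lines of C++. This is a minimal illustration, not the SLIDE code: the `Layer` struct, the row-major weight layout, the plain SGD step, and the names `sparse_update` and `rebucket` are all assumptions made for this example.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical dense layer, stored row-major: weights[out * n_in + in].
struct Layer {
    std::size_t n_in;
    std::size_t n_out;
    std::vector<float> weights;
};

// Update only the weights that connect an active input to an active output.
// Returns the number of weights touched (|active_in| * |active_out|).
std::size_t sparse_update(Layer& layer,
                          const std::vector<std::size_t>& active_in,
                          const std::vector<float>& act_in,    // activation per active input
                          const std::vector<std::size_t>& active_out,
                          const std::vector<float>& grad_out,  // gradient per active output
                          float lr) {
    assert(active_in.size() == act_in.size());
    assert(active_out.size() == grad_out.size());
    std::size_t touched = 0;
    for (std::size_t o = 0; o < active_out.size(); ++o) {
        float* row = &layer.weights[active_out[o] * layer.n_in];
        for (std::size_t i = 0; i < active_in.size(); ++i) {
            row[active_in[i]] -= lr * grad_out[o] * act_in[i];
            ++touched;
        }
    }
    return touched;
}

// One hash table as a list of buckets, each holding neuron ids (assumed layout).
using HashTable = std::vector<std::vector<std::size_t>>;

// Move a neuron whose hash changed: delete it from the old bucket, then re-add it.
void rebucket(HashTable& table, std::size_t neuron,
              std::size_t old_bucket, std::size_t new_bucket) {
    if (old_bucket == new_bucket) return;  // hash unchanged, nothing to move
    std::vector<std::size_t>& from = table[old_bucket];
    from.erase(std::remove(from.begin(), from.end(), neuron), from.end());
    table[new_bucket].push_back(neuron);
}
```

With a 10-by-10 layer and two active neurons on each side ($p = 0.2$), `sparse_update` touches 4 of the 100 weights, which is the $p^2 = 0.04$ fraction from the text.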

HOGWILD Style Parallelism

One unique advantage of SLIDE is that every data instance in a batch selects a very sparse and small set of neurons to process and update. These random and sparse updates open room for HOGWILD (Recht et al., 2011) style updates, where it is possible to process the whole batch in parallel. SLIDE leverages multi-core functionality by performing asynchronous data-parallel gradient descent.
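A rough sketch of this style of lock-free, asynchronous update is shown below. It is an illustration under stated assumptions rather than SLIDE's implementation: one thread per data instance, a flat shared weight vector, and the names `SharedWeights`, `Instance`, `sparse_step`, and `hogwild_batch` are all hypothetical. Relaxed atomic loads and stores are used only to keep the unsynchronized access well-defined in standard C++; no locks are taken, so two threads writing the same weight may overwrite each other, which HOGWILD-style training tolerates because such collisions are rare when updates are sparse.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <thread>
#include <vector>

// Parameters shared by all threads (assumed flat layout for illustration).
using SharedWeights = std::vector<std::atomic<float>>;

// Hypothetical per-instance work item: the active neurons and their gradients.
struct Instance {
    std::vector<std::size_t> active;
    std::vector<float> grad;
};

// Apply one instance's sparse gradient without any locking.
void sparse_step(SharedWeights& w, const std::vector<std::size_t>& active,
                 const std::vector<float>& grad, float lr) {
    assert(active.size() == grad.size());
    for (std::size_t k = 0; k < active.size(); ++k) {
        std::atomic<float>& wk = w[active[k]];
        float old_value = wk.load(std::memory_order_relaxed);
        // A concurrent write between the load and the store can be lost.
        wk.store(old_value - lr * grad[k], std::memory_order_relaxed);
    }
}

// Process every instance of the batch in its own thread, then wait for all.
void hogwild_batch(SharedWeights& w, const std::vector<Instance>& batch, float lr) {
    std::vector<std::thread> workers;
    workers.reserve(batch.size());
    for (const Instance& inst : batch) {
        workers.emplace_back([&w, &inst, lr] {
            sparse_step(w, inst.active, inst.grad, lr);
        });
    }
    for (std::thread& t : workers) t.join();
}
```

Using the active sets from Fig. 1, a batch of two instances with active neurons {1, 4, 6} and {2, 6} only contends on neuron 6; the weights of neurons 1, 2, and 4 are each written by a single thread.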

3 TARGET CPU PLATFORMS: INTEL CLX AND INTEL CPX

In this paper, we target two different, recently introduced Intel server CPUs to evaluate the SLIDE system and its effectiveness: Cooper Lake server (CPX) (Intel, b) and Cascade Lake server (CLX) (Intel, a). Both of these processors support AVX-512 instructions. However, only the CPX machine supports bfloat16.
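For readers unfamiliar with the format, bfloat16 keeps the sign bit, the 8 exponent bits, and the top 7 mantissa bits of an IEEE 754 float32, so it is the upper 16 bits of the 32-bit pattern. The sketch below emulates the conversion in portable C++ as an illustration only; it does not use the AVX512-BF16 instructions, and the function names and the choice of round-to-nearest-even are assumptions of this example.

```cpp
#include <cstdint>
#include <cstring>

// Convert a float32 to bfloat16 bits in software, rounding to nearest even.
std::uint16_t float_to_bf16(float f) {
    std::uint32_t bits;
    std::memcpy(&bits, &f, sizeof bits);
    if ((bits & 0x7FFFFFFFu) > 0x7F800000u) {
        // NaN: keep the top bits and force a quiet-NaN mantissa bit.
        return static_cast<std::uint16_t>((bits >> 16) | 0x0040u);
    }
    // Add just under half of the dropped range, plus one if the kept LSB is odd.
    bits += 0x7FFFu + ((bits >> 16) & 1u);
    return static_cast<std::uint16_t>(bits >> 16);
}

// Widen bfloat16 bits back to float32 by zero-filling the low 16 bits.
float bf16_to_float(std::uint16_t h) {
    std::uint32_t bits = static_cast<std::uint32_t>(h) << 16;
    float f;
    std::memcpy(&f, &bits, sizeof f);
    return f;
}
```

Values such as 1.0 or -2.5 survive the round trip exactly, while values that need more than 7 mantissa bits are rounded, which is the precision traded for halving memory traffic.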

CPX is the new 3rd generation Intel Xeon Scalable processor, which supports AVX512-based BF16 instructions. It has the Intel x86_64 architecture with four 28-core CPUs totaling 112 cores, capable of running 224 threads in parallel with hyper-threading. The L3 cache size is around 39MB, and the L2 cache is about 1MB.

CLX is an older generation that does not support BF16 instructions. It has the Intel x86_64 architecture with dual 24-core processors (Intel Xeon Platinum 8260L CPU @ 2.40GHz) totaling 48 cores. With hyper-threading, the maximum number of threads that can run in parallel reaches 96. The L3 cache size is 36MB, and the L2 cache is about 1MB.

4 OPPORTUNITIES FOR OPTIMIZATION IN SLIDE

The SLIDE C++ implementation is an open-source codebase,² which allowed us to delve deeper into its inefficiencies. In this section, we describe a series of our efforts to exploit the unique opportunities available on CPUs to scale up SLIDE.

4.1 Memory Coalescing and Cache Utilization

Most machine learning jobs are memory bound. They require reading continuous data in batches and then updating a large number of parameters stored in the main memory.

² https://github.com/keroro824/HashingDeepLearning