Convolutional Neural Networks with Alternately Updated Clique

Yibo Yang^{1,2}, Zhisheng Zhong^{2}, Tiancheng Shen^{1,2}, Zhouchen Lin^{2,3,∗}

^1 Academy for Advanced Interdisciplinary Studies, Peking University

^2 Key Laboratory of Machine Perception (MOE), School of EECS, Peking University

^3 Cooperative Medianet Innovation Center, Shanghai Jiao Tong University

{ibo,zszhong,tianchengShen,zlin}@pku.edu.cn

Abstract

Improving information flow in deep networks helps to ease training difficulties and use parameters more efficiently. Here we propose a new convolutional neural network architecture with alternately updated clique (CliqueNet). In contrast to prior networks, there are both forward and backward connections between any two layers in the same block. The layers are constructed as a loop and are updated alternately. CliqueNet has some unique properties. Each layer is both the input and the output of every other layer in the same block, so that the information flow among layers is maximized. During propagation, the newly updated layers are concatenated to re-update previously updated layers, and parameters are reused multiple times. This recurrent feedback structure is able to bring higher-level visual information back to refine low-level filters and achieve spatial attention. We analyze the features generated at different stages and observe that using refined features leads to better results. We adopt a multi-scale feature strategy that effectively avoids the progressive growth of parameters. Experiments on image recognition datasets including CIFAR-10, CIFAR-100, SVHN and ImageNet show that our proposed models achieve state-of-the-art performance with fewer parameters.^1
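The alternate update described above can be illustrated with a toy sketch. This is not the authors' implementation; it rests on several assumptions that this excerpt does not spell out: every convolution is replaced by a dense weight matrix acting on a plain feature vector (no spatial dimensions, batch normalization or pooling), Stage I feeds the block input (node 0) to each layer together with the layers already updated, and Stage II omits the block input and re-updates each layer from the newest version of every other layer. The helper names `clique_block`, `matvec`, `add` and `relu` are hypothetical.

```python
# Toy sketch of an alternately updated clique block (hypothetical helper names).
# Assumption: each "convolution" is a dense weight matrix acting on a feature
# vector; spatial dimensions, batch norm and pooling are omitted.

def relu(v):
    return [max(0.0, x) for x in v]

def matvec(w, v):
    # w is a list of rows; returns the matrix-vector product w @ v
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]

def add(a, b):
    return [x + y for x, y in zip(a, b)]

def clique_block(x0, w0, w):
    """Run Stage I and Stage II of a clique block.

    x0: block input features (node 0)
    w0[i]: weights from the block input to layer i
    w[(l, i)]: weights from layer l to layer i (l != i), shared by both stages
    Summing per-source products is equivalent to applying one weight matrix
    to the concatenation of the source features.
    """
    n = len(w0)
    out_channels = len(w0[0])
    # Stage I: layer i sees the block input and the layers l < i updated before it.
    stage1 = []
    for i in range(n):
        s = matvec(w0[i], x0)
        for l in range(i):
            s = add(s, matvec(w[(l, i)], stage1[l]))
        stage1.append(relu(s))
    # Stage II (assumed to omit x0): layer i is re-updated from the newest
    # version of every other layer, i.e. Stage-II values for l < i and
    # Stage-I values for m > i.
    stage2 = []
    for i in range(n):
        s = [0.0] * out_channels
        for l in range(i):
            s = add(s, matvec(w[(l, i)], stage2[l]))  # same weights as in Stage I
        for m in range(i + 1, n):
            s = add(s, matvec(w[(m, i)], stage1[m]))  # backward (feedback) weights
        stage2.append(relu(s))
    return stage1, stage2

# Usage: 3 layers with 1 channel each, so the results can be checked by hand.
n = 3
w0 = [[[1.0]] for _ in range(n)]
w = {(l, i): [[0.5]] for l in range(n) for i in range(n) if l != i}
stage1, stage2 = clique_block([1.0], w0, w)
print(stage1)  # [[1.0], [1.5], [2.25]]
print(stage2)  # [[1.875], [2.0625], [1.96875]]
```

In this sketch the forward weights `w[(l, i)]` with `l < i` are applied in both stages, which is one concrete reading of "parameters are reused multiple times"; the backward weights only come into play once the loop wraps around in Stage II.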

1. Introduction

In recent years, the structure and topology of deep neural

networks have attracted significant research interests, since

the convolutional neural network (CNN) based models have

achieved huge success in a wide range of tasks of computer

vision. A notable trend of those CNN architectures is that

the layers are going deeper, from AlexNet [23] with 5 con-

volutional layers, the VGG network and GoogleLeNet with

19 and 22 layers, respectively [32, 36], to recent ResNets

[13] whose deepest model has more than one thousand

layers. However, inappropriately designed deep networks

∗

Corresponding author

1

Code address: http://github.com/iboing/CliqueNet

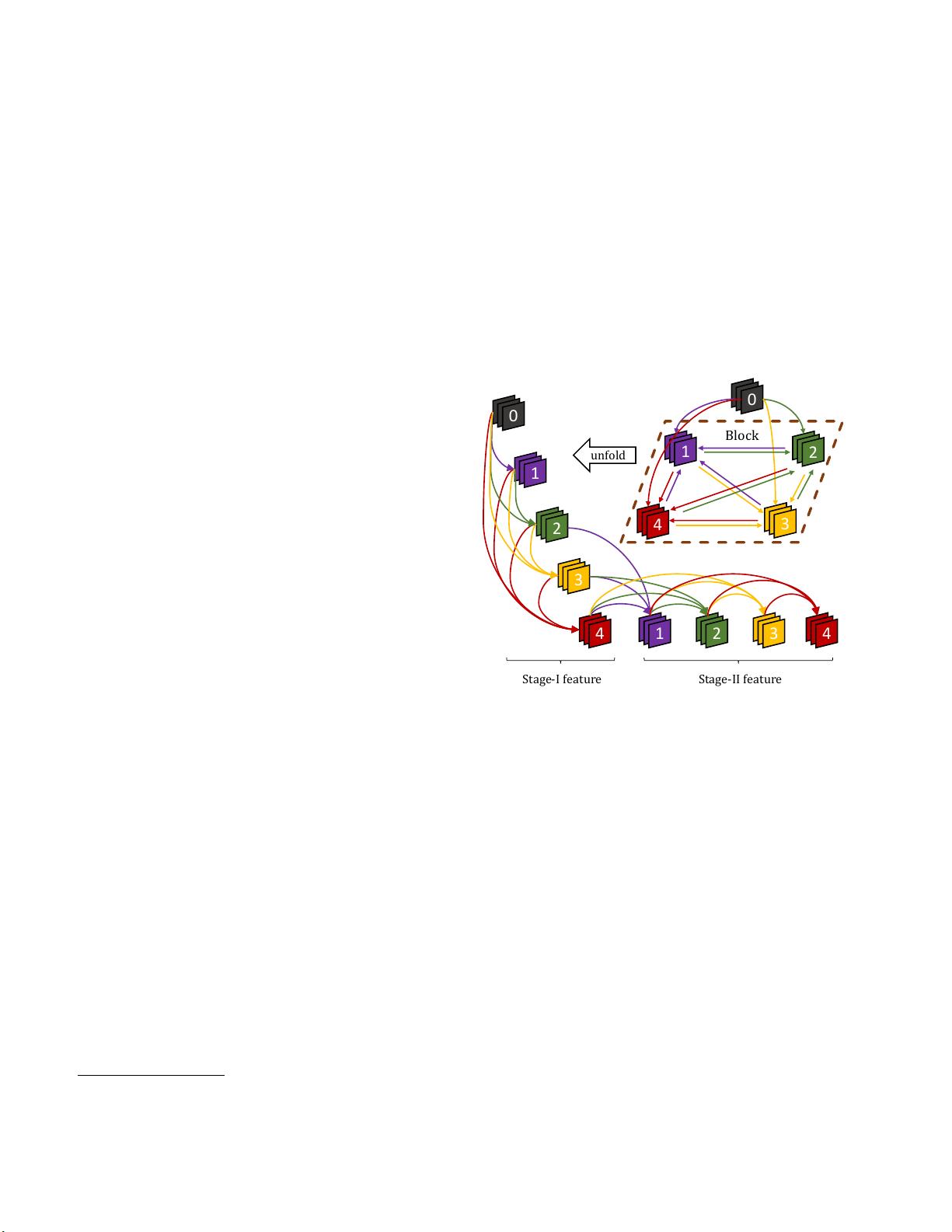

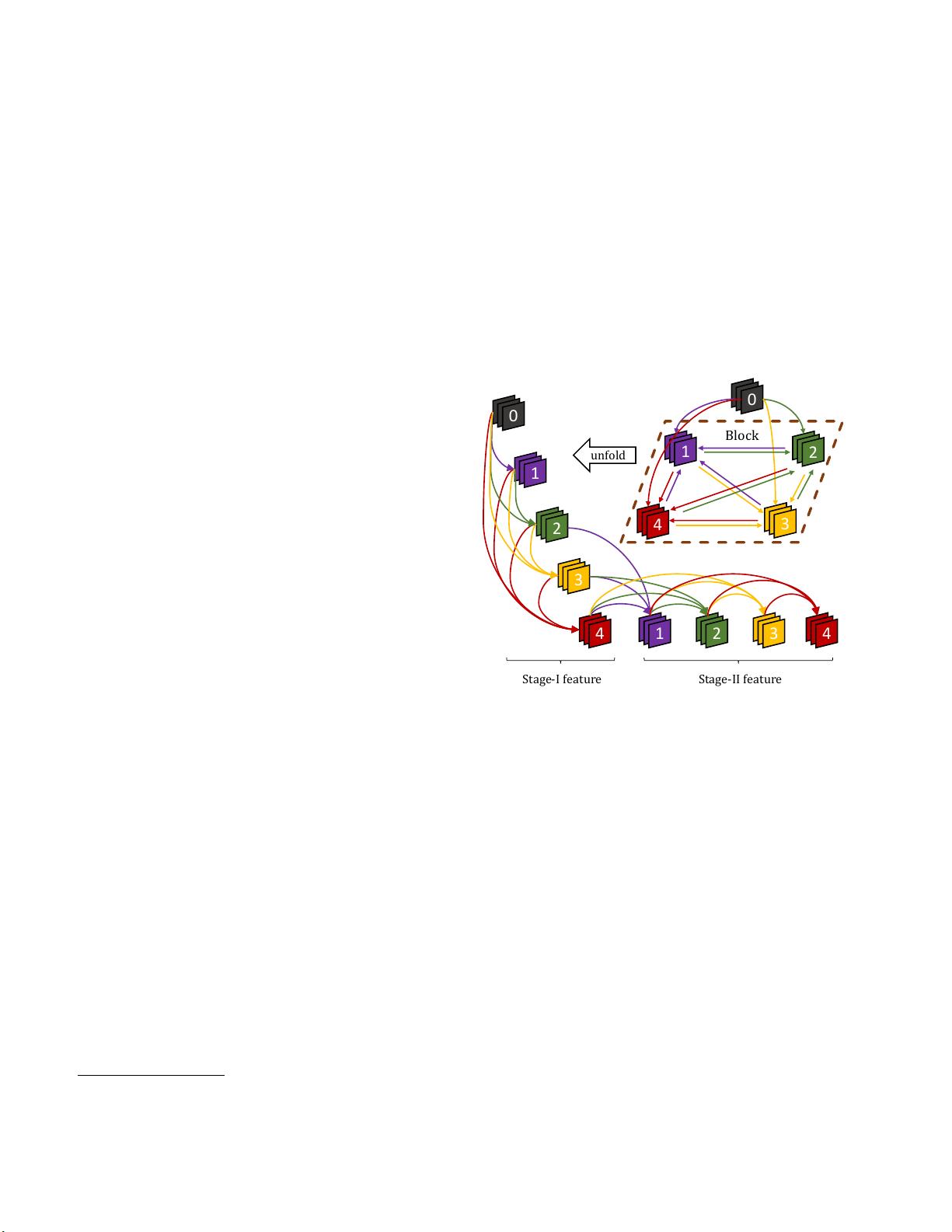

0

1

2

3

4 1

2 3 4

Stage-I feature

Stage-II feature

unfold

1

2

3

4

0

Block

Figure 1. An illustration of a block with 4 layers. Any layer is

both the input and output of another one. Node 0 denotes the input

layer of this block.

would make it hard for latter layer to access the gradient in-

formation from previous layers, which may cause gradient

vanishing and parameter redundancy problems [17, 18].

Successfully adopted in ResNet [13] and Highway Network [34], skip connections are an efficient way to give top layers access to information from bottom layers while also easing network training, since they relieve the gradient vanishing problem. The residual block structure in ResNet [13] has also inspired a series of ResNet variants, including ResNeXt [40], WRN [41], PolyNet [44], etc. To further strengthen gradient and information flow in networks, DenseNet [17] was recently proposed, in which each layer in a block receives the outputs of all preceding layers as input and passes its own output to all subsequent layers.
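The two connectivity patterns above can be contrasted in a short sketch. It is illustrative only: a "layer" is assumed to be any function from a flat list of channel values to a list, and the toy layers in the usage lines are hypothetical stand-ins for convolutions. The residual unit adds an identity skip to a learned branch, while the dense block concatenates the block input with every preceding layer output.

```python
# Sketch contrasting residual (additive) and dense (concatenative) connectivity.
# Assumption: a "layer" is any function mapping a flat list of channel values
# to a list; real networks would use convolutions on feature maps.

def residual_unit(x, f):
    # ResNet-style skip connection: output = x + F(x), so F must preserve the shape of x.
    fx = f(x)
    assert len(fx) == len(x), "residual branch must preserve the number of channels"
    return [a + b for a, b in zip(x, fx)]

def dense_block(x0, layers):
    # DenseNet-style connectivity: each layer receives the channel-wise
    # concatenation of the block input and all preceding layer outputs.
    features = [x0]
    for layer in layers:
        inp = [v for feat in features for v in feat]
        features.append(layer(inp))
    # The block output concatenates the input and every layer's output.
    return [v for feat in features for v in feat]

# Usage with hypothetical toy layers: doubling for the residual branch,
# and a 1-channel "sum" layer for the dense block.
print(residual_unit([1.0, 2.0], lambda v: [2 * a for a in v]))  # [3.0, 6.0]
print(dense_block([1.0, 2.0], [lambda v: [sum(v)]] * 2))        # [1.0, 2.0, 3.0, 6.0]
```

Each dense layer appends its outputs to the running concatenation, so the input width grows linearly with depth, whereas the residual unit keeps the width fixed and merges information by addition.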

Recent studies show that the skip connection mechanism can be interpreted as a recurrent neural network (RNN) or LSTM [14] when weights are shared among different layers [27, 5, 21]. In this way, the deep residual network