Robust Visual Object Tracking with Two-Stream Residual Convolutional Networks

Ning Zhang∗, Jingen Liu∗, Ke Wang†, Dan Zeng‡ and Tao Mei§

∗JD AI Research, Mountain View, USA
†Migu Culture & Technology, Beijing, China
‡Shanghai University, Shanghai, China
§JD AI Research, Beijing, China

∗{ning.zhang,jingen.liu}@jd.com, †wangke@migu.cn, ‡dzeng@shu.edu.cn, §tmei@live.com

Abstract—Current deep-learning-based visual tracking approaches have been very successful by learning the target classification and/or estimation models offline from large amounts of supervised training data. However, most of them can still fail to track objects under more challenging conditions such as dense distractor objects, confusing backgrounds, and motion blur. Inspired by the human "visual tracking" capability, which leverages motion cues to distinguish the target from the background, we propose a Two-Stream Residual Convolutional Network (TS-RCN) for visual tracking, which exploits both appearance and motion features for model update. TS-RCN can be integrated with existing deep-learning-based visual trackers. To further improve tracking performance, we adopt a "wider" residual network, ResNeXt, as its feature extraction backbone. To the best of our knowledge, TS-RCN is the first end-to-end trainable two-stream visual tracking system that makes full use of both the appearance and motion features of the target. We have extensively evaluated TS-RCN on widely used benchmark datasets, including VOT2018, VOT2019, and GOT-10K. The experimental results demonstrate that our two-stream model greatly outperforms the appearance-based tracker and achieves state-of-the-art performance. The tracking system runs at up to 38.1 FPS.
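The abstract does not specify how the appearance and motion streams are combined, so the following is only a hypothetical sketch of one simple option, late fusion of per-location score maps, to illustrate why a motion cue can break a tie that appearance alone cannot. The names `fuse_score_maps`, `peak`, and `motion_weight` are illustrative assumptions, not part of TS-RCN.

```python
from typing import List, Tuple

ScoreMap = List[List[float]]


def fuse_score_maps(appearance: ScoreMap, motion: ScoreMap,
                    motion_weight: float = 0.5) -> ScoreMap:
    """Hypothetical late fusion: per-location convex combination of two score maps."""
    if not 0.0 <= motion_weight <= 1.0:
        raise ValueError("motion_weight must lie in [0, 1]")
    if len(appearance) != len(motion) or any(
            len(a) != len(m) for a, m in zip(appearance, motion)):
        raise ValueError("score maps must have the same shape")
    return [[(1.0 - motion_weight) * a + motion_weight * m
             for a, m in zip(row_a, row_m)]
            for row_a, row_m in zip(appearance, motion)]


def peak(score_map: ScoreMap) -> Tuple[int, int]:
    """Return (row, col) of the highest score; ties go to the first cell in row-major order."""
    cells = ((r, c) for r in range(len(score_map)) for c in range(len(score_map[0])))
    return max(cells, key=lambda rc: score_map[rc[0]][rc[1]])


# Toy example: the appearance stream scores a look-alike distractor (column 0)
# as high as the target (column 2); the hypothetical motion stream mainly
# responds to the moving target.
appearance = [[0.9, 0.1, 0.9]]
motion = [[0.1, 0.0, 0.8]]
print(peak(appearance))                           # (0, 0): the distractor
print(peak(fuse_score_maps(appearance, motion)))  # (0, 2): the target
```

Fusing at the feature level inside the network, rather than at the score level, is an equally plausible design; this sketch only makes the intuition concrete.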

I. INTRODUCTION

Generic visual object tracking predicts the location of a class-agnostic object in every frame of a video sequence. It is a highly challenging task due to its class-agnostic nature, background distraction, illumination variation, motion blur, and other factors [1], [2]. In general, a visual tracking system needs to perform two tasks simultaneously: target classification and bounding-box estimation [3]. The former coarsely separates the target object region in the current frame from the background, while the latter estimates the precise bounding box (i.e., the tracker state) of the target object.
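The two tasks above can be pictured as a coarse-to-fine pipeline. The sketch below is a minimal illustration under stated assumptions: classification is reduced to taking the peak of a score map whose cells each cover a `stride` x `stride` image patch, and estimation is a hypothetical refinement step that applies offsets `(dx, dy, dw, dh)` in a common box-regression parameterization. It is not the estimation module of any specific tracker, and `classify_coarse`, `estimate_box`, and `BoxState` are illustrative names.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

ScoreMap = List[List[float]]


@dataclass
class BoxState:
    """Tracker state as a center-size box (cx, cy, w, h) in image pixels."""
    cx: float
    cy: float
    w: float
    h: float

    def corners(self) -> Tuple[float, float, float, float]:
        """Return (x1, y1, x2, y2)."""
        return (self.cx - self.w / 2, self.cy - self.h / 2,
                self.cx + self.w / 2, self.cy + self.h / 2)


def classify_coarse(score_map: ScoreMap, stride: int) -> Tuple[float, float]:
    """Target classification: map the score-map peak to image coordinates.

    Assumes cell (r, c) covers the stride x stride patch whose top-left
    pixel is (c * stride, r * stride); returns that patch's center (x, y).
    """
    rows, cols = len(score_map), len(score_map[0])
    r, c = max(((r, c) for r in range(rows) for c in range(cols)),
               key=lambda rc: score_map[rc[0]][rc[1]])
    return (c * stride + stride / 2, r * stride + stride / 2)


def estimate_box(center: Tuple[float, float], prev_size: Tuple[float, float],
                 deltas: Tuple[float, float, float, float]) -> BoxState:
    """Hypothetical bounding-box estimation: refine the coarse estimate.

    deltas = (dx, dy, dw, dh) would come from an estimation head; dw and dh
    are log-scale size changes relative to the previous box size.
    """
    dx, dy, dw, dh = deltas
    w, h = prev_size
    return BoxState(center[0] + dx, center[1] + dy, w * math.exp(dw), h * math.exp(dh))


score_map = [[0.1, 0.2, 0.1],
             [0.2, 0.3, 0.9],
             [0.1, 0.2, 0.1]]
center = classify_coarse(score_map, stride=8)  # (20.0, 12.0)
box = estimate_box(center, prev_size=(10.0, 6.0), deltas=(0.0, 0.0, 0.0, 0.0))
print(box.corners())  # (15.0, 9.0, 25.0, 15.0)
```

The split mirrors the text: classification only needs to be robust enough to land near the target, while estimation is responsible for precise box geometry.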

Recently, researchers have made great progress in visual object tracking by exploiting the representational power of deep convolutional networks. Siamese tracking approaches [4] leverage a large amount of supervised data to learn a general region-similarity measure offline, so that tracking can be performed by searching for the image regions most similar to the target template. However, because they do not model background appearance (e.g., distractor objects), Siamese approaches have difficulty handling unseen objects and distractors. To address these limitations, Bhat et al. [5] proposed a discriminative learning architecture (DiMP) that fully exploits both target and background appearance.
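The Siamese search described above typically amounts to sliding the template over the search region and scoring each offset by cross-correlation. In practice both inputs first pass through a shared learned embedding network and the correlation is computed on feature maps; in this minimal sketch the small grids simply stand in for those feature maps, and the helper names are illustrative assumptions.

```python
from typing import List, Tuple

Grid = List[List[float]]


def cross_correlate(search: Grid, template: Grid) -> Grid:
    """Valid-mode 2-D cross-correlation: slide the template over the search region."""
    th, tw = len(template), len(template[0])
    sh, sw = len(search), len(search[0])
    if th > sh or tw > sw:
        raise ValueError("template must fit inside the search region")
    return [[sum(search[r + i][c + j] * template[i][j]
                 for i in range(th) for j in range(tw))
             for c in range(sw - tw + 1)]
            for r in range(sh - th + 1)]


def best_match(search: Grid, template: Grid) -> Tuple[int, int]:
    """Return the (row, col) offset with the highest similarity response."""
    response = cross_correlate(search, template)
    cells = ((r, c) for r in range(len(response)) for c in range(len(response[0])))
    return max(cells, key=lambda rc: response[rc[0]][rc[1]])


template = [[1, 0],
            [0, 1]]
search = [[0, 0, 0, 0],
          [0, 1, 0, 0],
          [0, 0, 1, 0],
          [0, 0, 0, 0]]
print(best_match(search, template))  # (1, 1)

# A look-alike distractor produces an equally strong peak:
print(cross_correlate([[1, 0, 0, 1, 0],
                       [0, 1, 0, 0, 1]], template))  # [[2, 0, 0, 2]]
```

The second call shows the limitation discussed in the text: a similarity measure learned only from the target template cannot tell two identical-looking regions apart, which is what motivates modeling background appearance as well.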

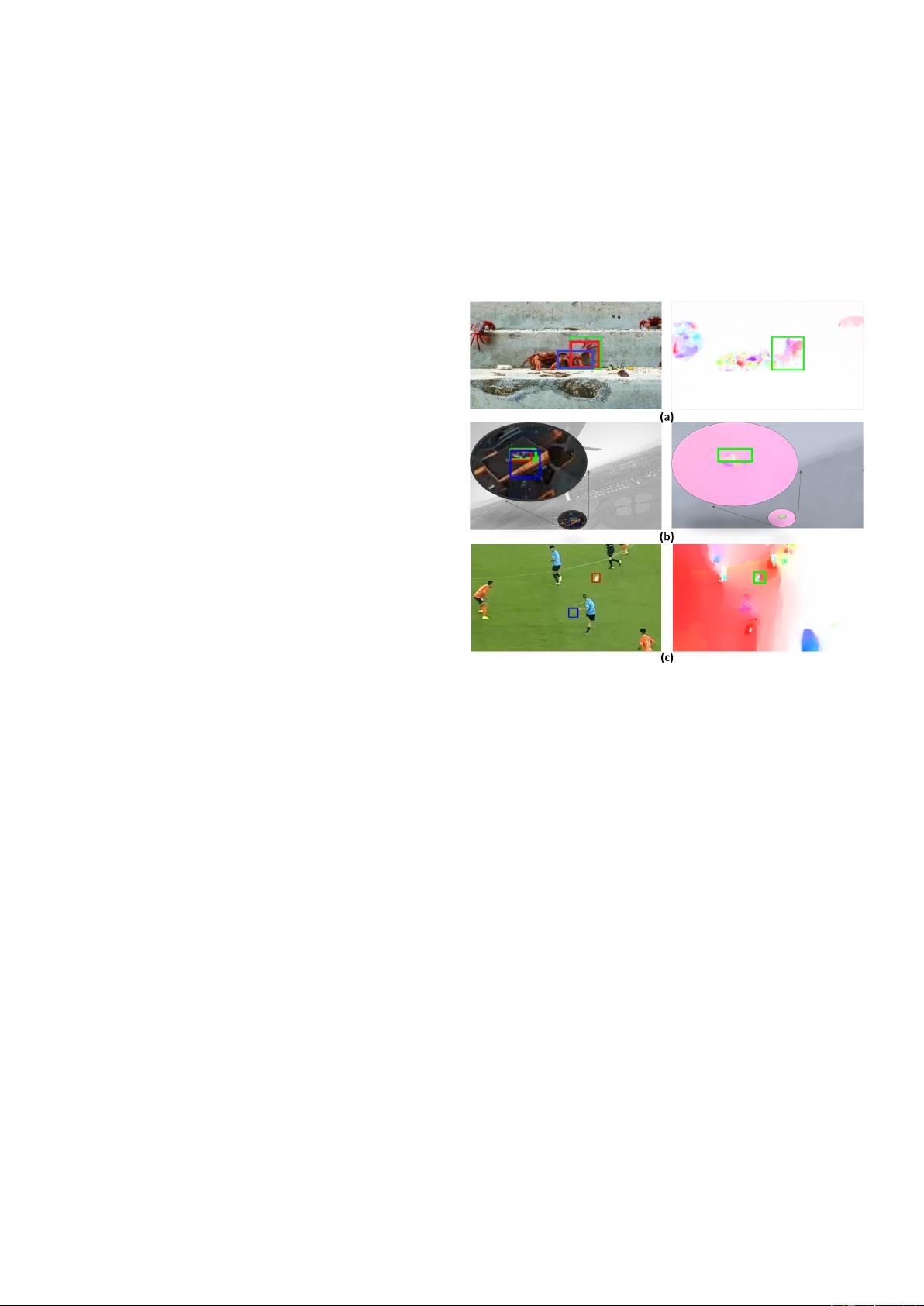

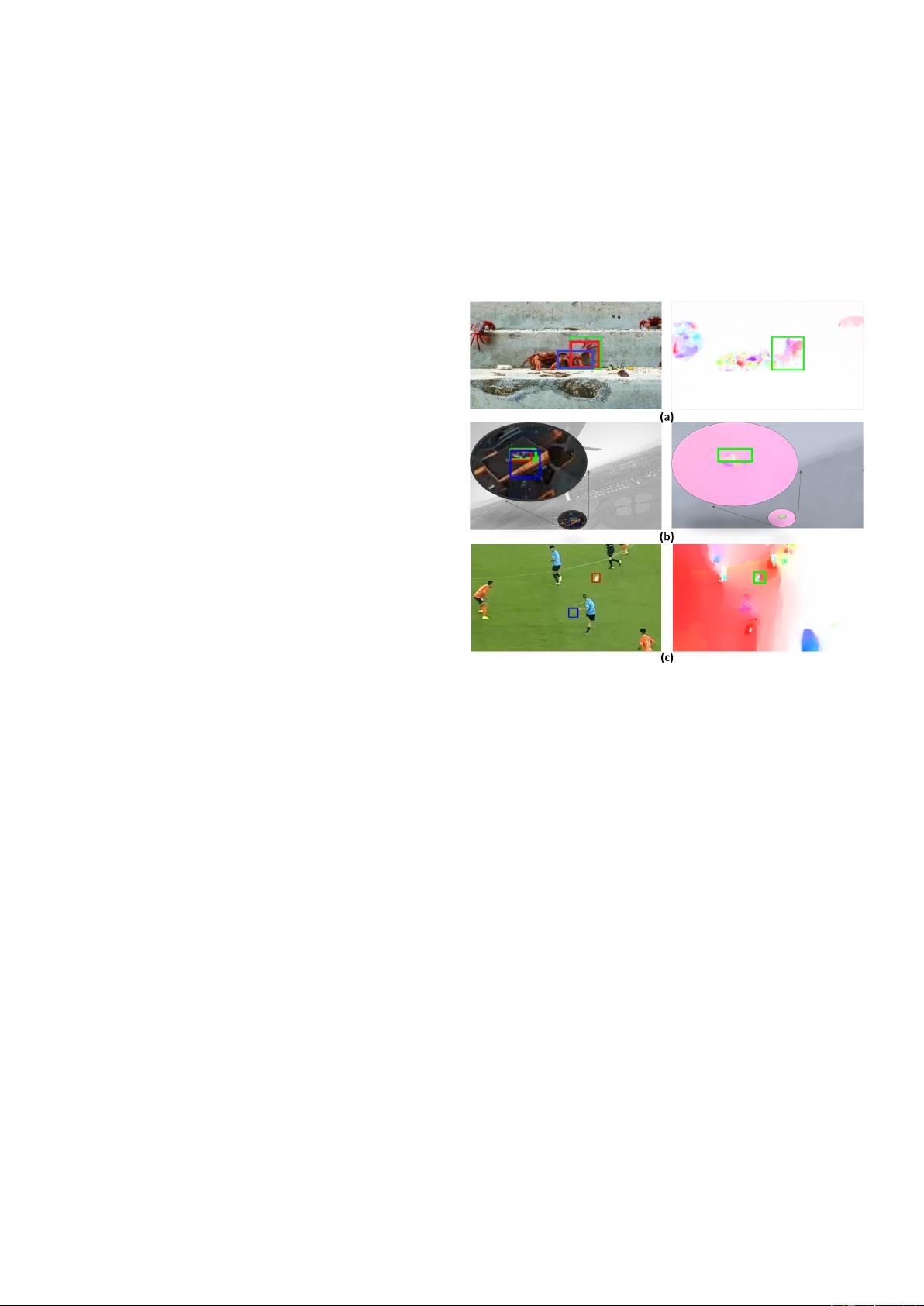

Fig. 1: Illustration of the limitations of appearance-based visual tracking. The first column shows the tracking results, where the green, blue, and red bounding boxes represent the ground truth, the DiMP tracker, and our TS-RCN tracker, respectively. The second column shows an HSV color visualization of the optical flow. In all three cases, the optical flow of the target has a different pattern from that of its local background. Row (a) shows dense similar objects (i.e., crabs) as distractors; Row (b) shows confusing background textures as distractors (i.e., the flying drone blends in with the background buildings); Row (c) shows a target (i.e., a soccer ball) with motion blur. This figure is best viewed in PDF format.
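The HSV visualization mentioned in the caption is commonly produced by mapping each flow vector's direction to hue and its magnitude to brightness. The exact color coding used for Fig. 1 is not stated, so the following is a minimal sketch of that common convention, assuming a dense flow field given as per-pixel `(u, v)` displacements and normalizing magnitude by the field's maximum; `flow_to_rgb` is an illustrative name.

```python
import colorsys
import math
from typing import List, Tuple

Flow = List[List[Tuple[float, float]]]  # per-pixel (u, v) displacement


def flow_to_rgb(flow: Flow) -> List[List[Tuple[int, int, int]]]:
    """Color-code a dense flow field: hue = direction, value = normalized magnitude."""
    max_mag = max((math.hypot(u, v) for row in flow for (u, v) in row), default=0.0)
    image = []
    for row in flow:
        rgb_row = []
        for u, v in row:
            angle = math.atan2(v, u) % (2 * math.pi)  # direction in [0, 2*pi)
            hue = angle / (2 * math.pi)               # colorsys expects hue in [0, 1]
            value = math.hypot(u, v) / max_mag if max_mag > 0 else 0.0
            r, g, b = colorsys.hsv_to_rgb(hue, 1.0, value)  # full saturation
            rgb_row.append((round(r * 255), round(g * 255), round(b * 255)))
        image.append(rgb_row)
    return image


flow = [[(1.0, 0.0), (0.0, 0.0)],
        [(-2.0, 0.0), (0.0, 2.0)]]
for row in flow_to_rgb(flow):
    print(row)
```

Under this coding, a target moving differently from its surroundings shows up as a region of distinct hue or brightness, which is the cue the caption points out in all three rows. Static pixels map to black.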

Although DiMP is trained to separate the target from the background, it may still fail when the background becomes more confusing. As shown in Fig. 1 (a) and (b), DiMP fails to track the targets (blue bounding boxes) due to same-class distractors (i.e., similar crabs) and confusing background texture, respectively. Additionally, tracking can be lost when the target is motion-blurred in the current frame. For example, the soccer ball in Fig. 1 (c) is blurred due to its high speed. All the aforementioned issues can happen in most deep-learning-based tracking approaches

arXiv:2005.06536v1 [cs.CV] 13 May 2020