
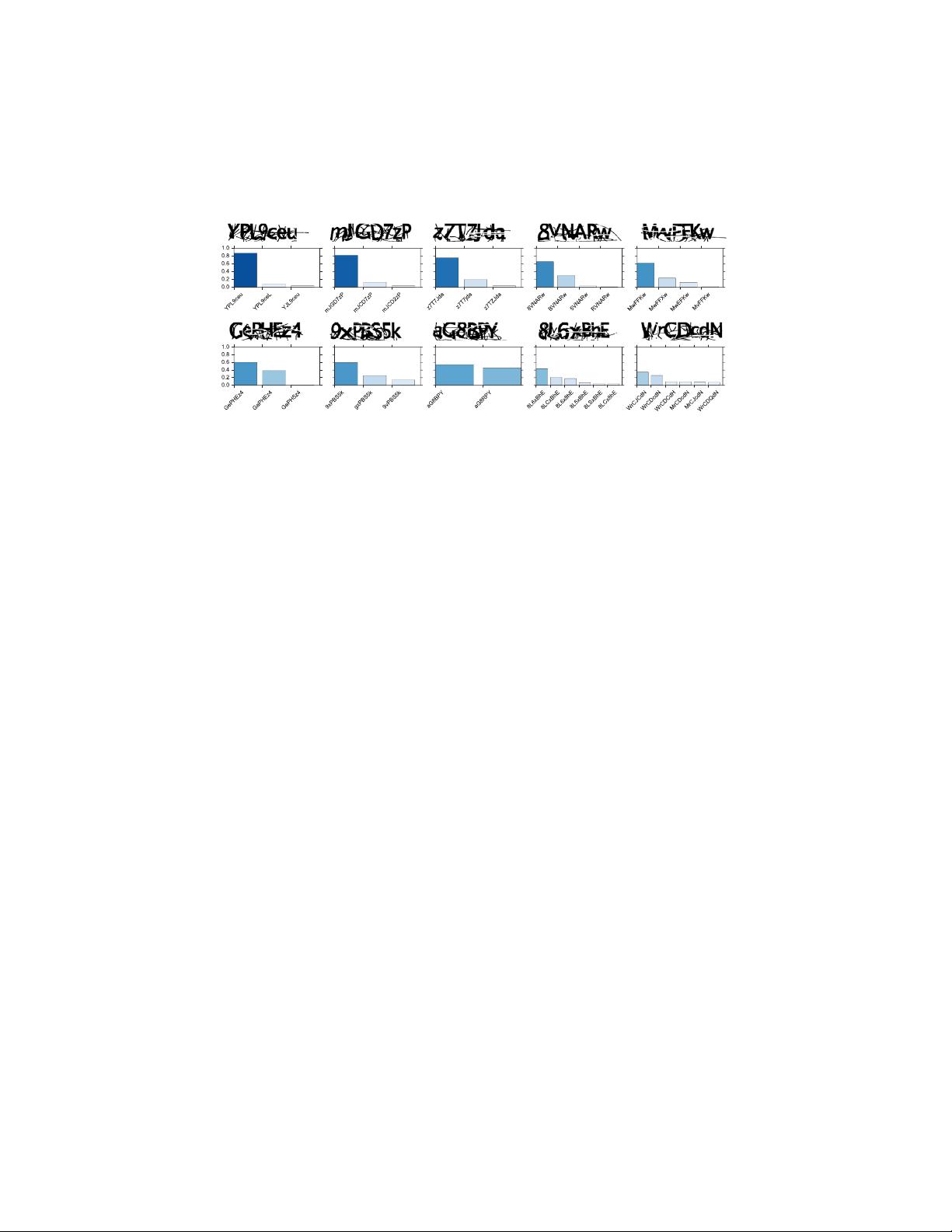

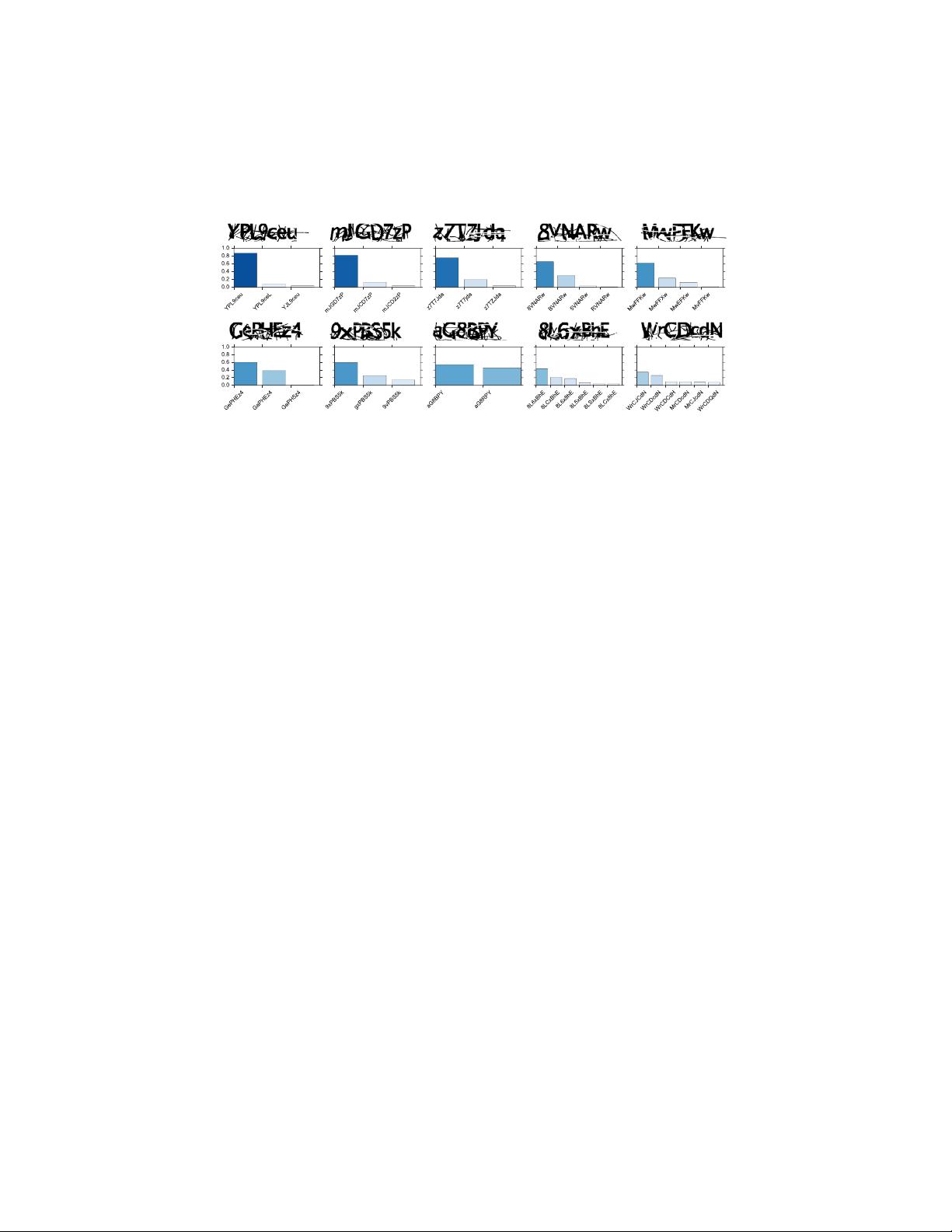

Fig. 4. Posteriors of real Facebook and Wikipedia Captchas. Conditioning on each Captcha, we show an approximate posterior produced by a set of weighted importance sampling particles $\{(w_m, x^{(m)})\}_{m=1}^{M=100}$.


Figure 1.1: Posterior uncertainties after inference in a probabilistic programming language model of 2017 Facebook Captchas (reproduced from Le et al. (2017a))

$P(X \mid Y)$ arrived at by conditioning reflects this uncertainty.

By this simple example, whose source code appears in Chapter 5 in a simplified form, we aim only to liberate your thinking with regard to what a model is (a joint distribution, potentially over richly structured objects, produced by adding stochastic choice to normal computer programs like Captcha generators) and what the output of a conditioning computation can be like. What probabilistic programming languages do is allow the denotation of any such model. What this tutorial covers in great detail is how to develop inference algorithms that allow computational characterization of the posterior distribution of interest and, increasingly, do so very rapidly (see Chapter 7).
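To make these two ideas concrete, the following is a minimal sketch, assuming a toy stand-in for a Captcha generator; it is not the Chapter 5 source code. The alphabet, string length, noise level, and all function names are hypothetical choices made for illustration. The "model" is an ordinary program that makes random choices to produce a latent string, and the "output of conditioning" is a set of weighted particles obtained by plain importance sampling from the prior.

```python
import random
from collections import defaultdict

ALPHABET = "abc"  # hypothetical toy alphabet
LENGTH = 3        # hypothetical fixed string length
NOISE = 0.2       # hypothetical probability that a rendered character is corrupted


def sample_prior(rng):
    """Generative program: make stochastic choices to produce a latent string x."""
    return "".join(rng.choice(ALPHABET) for _ in range(LENGTH))


def likelihood(y, x):
    """p(y | x): each character of x is rendered correctly with probability 1 - NOISE."""
    p = 1.0
    for y_i, x_i in zip(y, x):
        p *= (1.0 - NOISE) if y_i == x_i else NOISE / (len(ALPHABET) - 1)
    return p


def importance_sample(y, num_particles=1000, seed=0):
    """Return normalized weighted particles [(w_m, x_m)] approximating p(x | y)."""
    rng = random.Random(seed)
    xs = [sample_prior(rng) for _ in range(num_particles)]
    ws = [likelihood(y, x) for x in xs]
    total = sum(ws)
    return [(w / total, x) for w, x in zip(ws, xs)]


def posterior_table(particles):
    """Collapse particles into an estimated posterior probability per distinct string."""
    table = defaultdict(float)
    for w, x in particles:
        table[x] += w
    return dict(table)


particles = importance_sample("abc")
posterior = posterior_table(particles)
best = max(posterior, key=posterior.get)
print(best, round(posterior[best], 3))
```

Sampling from the prior is the simplest possible proposal and scales poorly to realistic Captchas; it is used here only because it keeps the correspondence between the generative program and the weighted particle set easy to see.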

1.1.2 Conditioning

Returning to our simple coin-flip statistics example, let us continue and write out the joint probability density for the distribution on $X$ and $Y$. The reason to do this is to paint a picture, by this simple example, of what the mathematical operations involved in conditioning are like and why the problem of conditioning is, in general, hard.

Assume that the symbol $Y$ denotes the observed outcome of the coin flip and that we encode the event “comes up heads” using the integer 1 and the converse using 0. We will denote the bias of the coin, i.e., the probability it comes up heads, using