NOTE: This position paper has been coordinated among the software specialists of certification authorities from North and South America, and Europe. However, it does not constitute official policy or guidance from any of the authorities. This document is provided for educational and informational purposes only and should be discussed with the appropriate certification authority when considering it for use on actual projects.

2

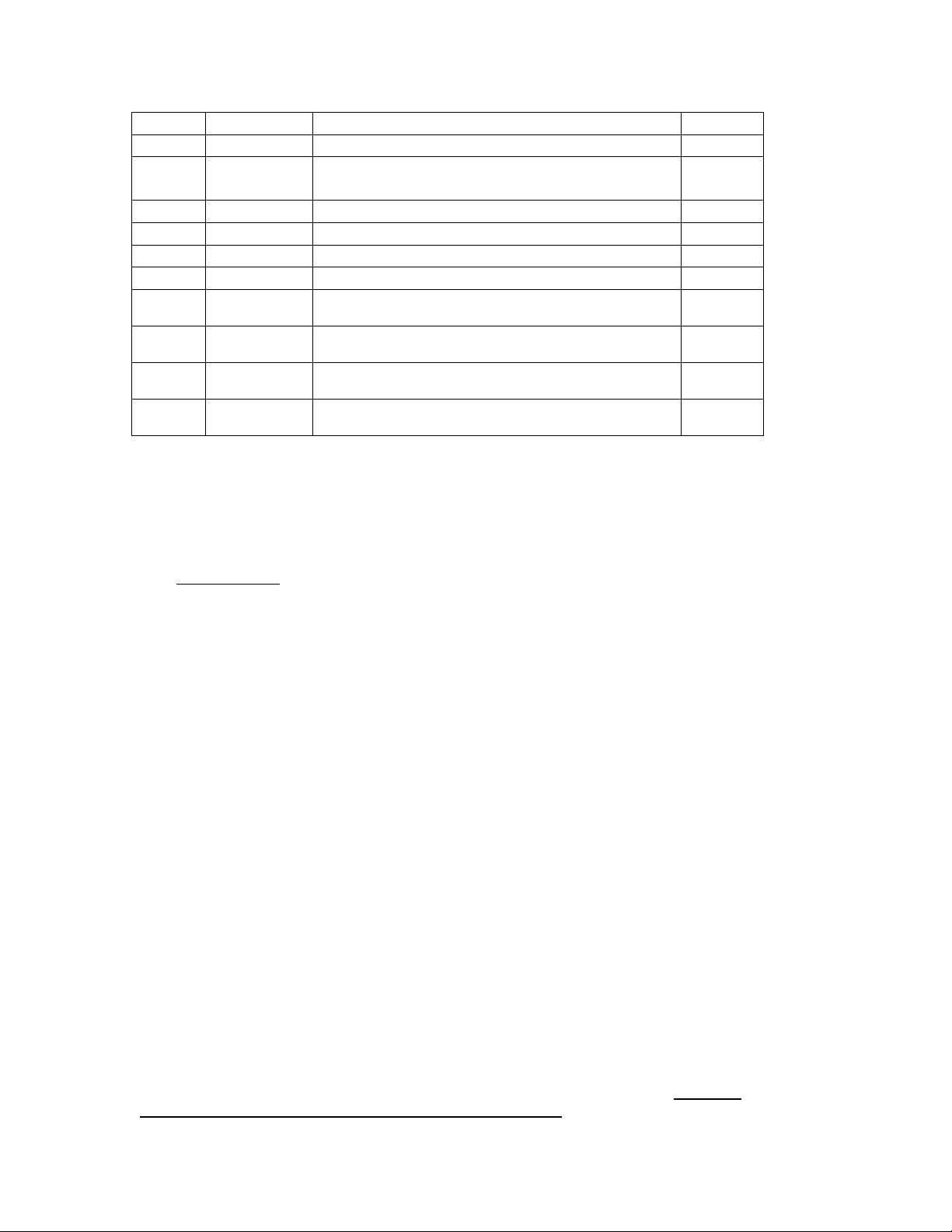

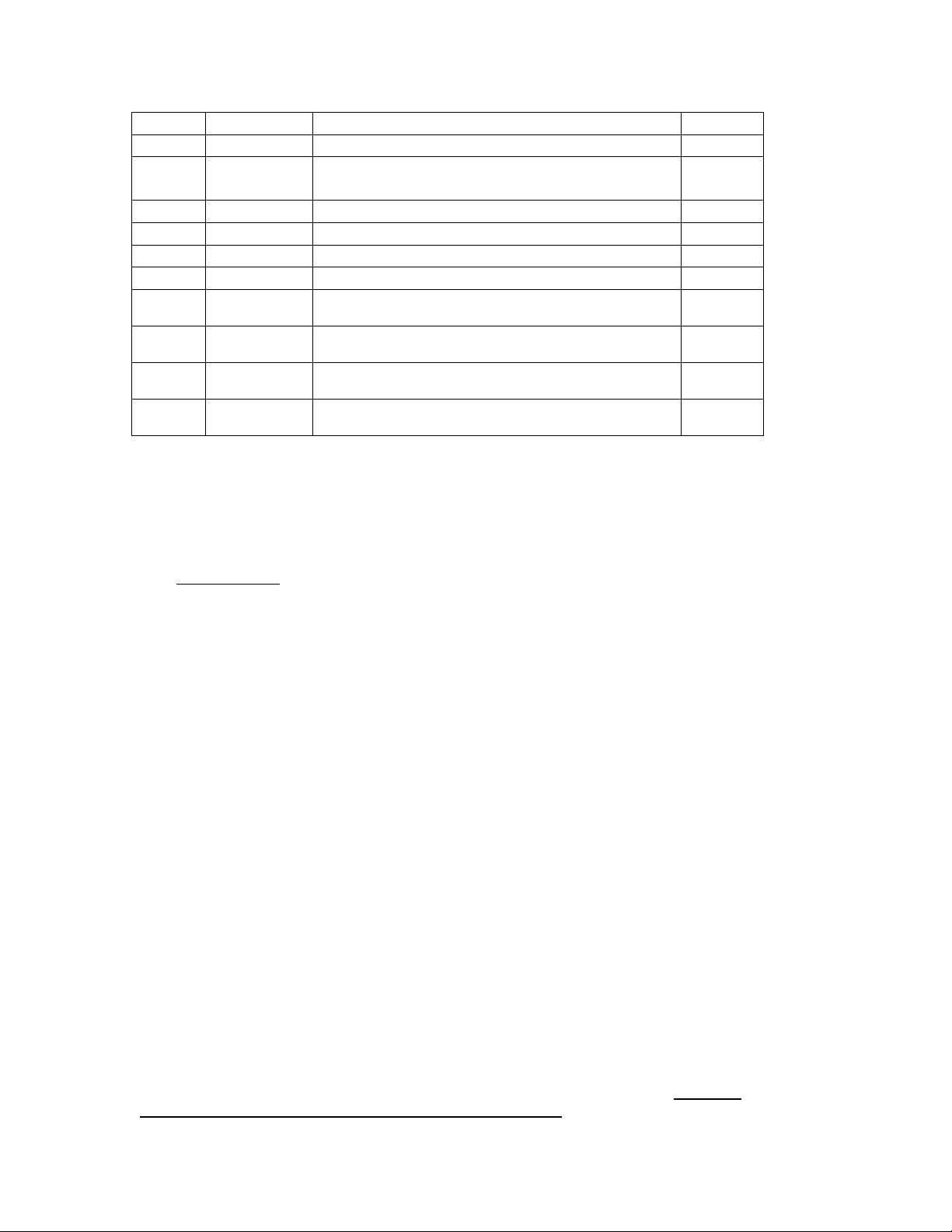

| Table | Reference | Summary | Level(s) |
|---|---|---|---|
| | 6.4.3 | | |
| A-6 | 6.4.2.2, 6.4.3 | “Executable object code is robust with low-level requirements.” | A |
| A-7 | 6.3.6b | “Test procedures are correct.” | A |
| A-7 | 6.3.6c | “Test results are correct and discrepancies explained.” | A |
| A-7 | 6.4.4.1 | “Test coverage of high-level requirements is achieved.” | A |
| A-7 | 6.4.4.1 | “Test coverage of low-level requirements is achieved.” | A |
| A-7 | 6.4.4.2 | “Test coverage of software structure (modified condition/decision) is achieved.” | A |
| A-7 | 6.4.4.2a, b | “Test coverage of software structure (decision) is achieved.” | A and B |
| A-7 | 6.4.4.2a, b | “Test coverage of software structure (statement coverage) is achieved.” | A and B |
| A-7 | 6.4.4.2c | “Test coverage of software structure (data coupling and control coupling) is achieved.” | A and B |

Note: The text for each objective in the DO-178B/ED-12B Annex A tables is a summarized form of the actual objective’s wording, and the referenced sections should be read for the full text of each objective.
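The structural coverage objectives in Table A-7 differ in strength, which matters for what a coverage analysis tool has to check. The following is a minimal, illustrative Python sketch (not taken from DO-178B/ED-12B or from any particular tool) that checks decision coverage and the unique-cause form of MC/DC for one hypothetical decision, `a and (b or c)`. The function names and test vectors are assumptions for illustration only. Other accepted forms of MC/DC, such as masking MC/DC, exist, and the sketch checks only the "independent effect" part of MC/DC; it does not model entry/exit points, statement coverage, or data and control coupling.

```python
from typing import Callable, Sequence, Tuple

# A test vector assigns a truth value to each condition (a, b, c).
Vector = Tuple[bool, ...]


def decision(a: bool, b: bool, c: bool) -> bool:
    """Hypothetical decision under test, with three conditions."""
    return a and (b or c)


def decision_coverage(f: Callable[..., bool], tests: Sequence[Vector]) -> bool:
    """Decision coverage: the decision evaluates to both True and False."""
    outcomes = {f(*t) for t in tests}
    return outcomes == {True, False}


def mcdc_unique_cause(f: Callable[..., bool], n: int, tests: Sequence[Vector]) -> bool:
    """Unique-cause MC/DC: for every condition i, the test set contains a pair
    of vectors that differ only in condition i and produce different decision
    outcomes, showing that the condition independently affects the decision."""
    test_set = set(tests)
    for i in range(n):
        shown = False
        for t in test_set:
            # The same vector with only condition i toggled.
            flipped = t[:i] + (not t[i],) + t[i + 1:]
            if flipped in test_set and f(*t) != f(*flipped):
                shown = True
                break
        if not shown:
            return False
    return True


# Two tests achieve decision coverage but show the effect of condition a only.
dc_tests = [(True, True, False), (False, True, False)]
# Two more vectors (four tests in total) also show the effect of b and c.
mcdc_tests = dc_tests + [(True, False, False), (True, False, True)]

if __name__ == "__main__":
    print(decision_coverage(decision, dc_tests))       # True
    print(mcdc_unique_cause(decision, 3, dc_tests))    # False
    print(mcdc_unique_cause(decision, 3, mcdc_tests))  # True
```

Here the two-test set satisfies decision coverage but not MC/DC, illustrating why MC/DC generally needs more test cases than decision coverage for the same decision.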

Furthermore, the DO-178B/ED-12B glossary (Annex B) provides the following definition of independence:

“Independence – Separation of responsibilities which ensures the accomplishment of objective evaluation. (1) For software verification process activities, independence is achieved when the verification activity is performed by a person(s) other than the developer of the item being verified, and a tool(s) may be used to achieve an equivalence to the human verification activity. …”

Typically, compliance with the referenced objectives is achieved by humans manually reviewing the subject data against a standard and/or checklist, which is a very labor-intensive activity. The results of these reviews are usually captured by completing review checklists, keeping review notes of the errors, deficiencies, or questions found, marking up (red-lining) the data to note corrections to be made, or by other means. Implementation of the corrections is then confirmed in a subsequent version of the data artifact, tracked through either a formal problem reporting and resolution process or an informal action list. Software quality assurance (SQA) personnel usually attend at least some of these reviews to ensure that the engineers are applying the relevant plans, standards, checklists, etc. Other terms used for review include inspection, evaluation, walkthrough, and audit.
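The review bookkeeping described above, combined with the glossary definition of independence, can be illustrated with a small record-keeping sketch of the kind of checks a tool might enforce. This is a hypothetical Python sketch, not a prescribed or required format: the `ReviewRecord` and `Finding` classes, their fields, and the names used are assumptions. Its independence check tests only that at least one reviewer is someone other than the developer of the item, which is one simple reading of the definition.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Finding:
    """One error, deficiency, or question raised in a review (hypothetical)."""
    description: str
    tracked_in: str = "action list"  # or "problem report" for formal tracking
    closed: bool = False             # set once the correction is confirmed


@dataclass
class ReviewRecord:
    """Hypothetical record of one review of one version of a data artifact."""
    artifact: str
    version: str
    author: str                      # developer of the item being verified
    reviewers: List[str]
    findings: List[Finding] = field(default_factory=list)

    def is_independent(self) -> bool:
        # At least one reviewer is a person other than the developer.
        return any(r != self.author for r in self.reviewers)

    def open_findings(self) -> List[Finding]:
        return [f for f in self.findings if not f.closed]

    def can_close(self) -> bool:
        # In this sketch, a review closes only if it was independent and
        # every finding has been marked as corrected.
        return self.is_independent() and not self.open_findings()


if __name__ == "__main__":
    record = ReviewRecord("LLR document", "1.0", author="alice", reviewers=["alice"])
    print(record.is_independent())  # False: the author reviewed their own work
    record.reviewers.append("bob")
    record.findings.append(Finding("Ambiguous requirement wording", "problem report"))
    print(record.can_close())       # False: one finding is still open
    record.findings[0].closed = True
    print(record.can_close())       # True
```

A real process would also record the checklist, standard, and artifact version actually used; the sketch keeps only the fields needed to show independence and closure of findings.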

More recently, applicants have proposed using tools to automate certain aspects of these reviews or to enforce their criteria. Probably the most common tools proposed are “structural coverage” analysis tools which make