16 S. Holmbacka et al. / Microprocessors and Microsystems 43 (2016) 14–25

on single devices with a fixed deadline and on real-time systems.

In our approach, we focus on many-core processors and static schedules, and we consider different deadlines for each schedule.

Zhuravlev et al. [32] give a survey of energy-cognizant scheduling techniques. They present many scheduling algorithms and explain the underlying techniques, distinguishing between DVFS- and DPM-based solutions, thermal management solutions and asymmetry-aware scheduling. Unlike our approach, none of the described scheduling algorithms takes the switching or shutdown/wakeup overhead into account.

While acknowledging that DVFS and DPM are possible energy savers in data centers [5,14,19], our work focuses on core-level granularity at a smaller time scale, and our measurements are based on the per-core sleep state mechanism rather than suspend-to-RAM or CPU hibernation. Aside from these differences, none of the previous work deals with the latency overhead of both DVFS and DPM on a practical level from the operating system's point of view. Without this information, it is difficult to determine the additional energy and performance penalty of using power management on modern multi-core hardware with an off-the-shelf OS such as Linux.

3. Power distribution and latency of power-saving mechanisms

Power-saving techniques in microprocessors are hardware-software coordinated mechanisms used to scale parts of the CPU up or down dynamically at runtime. We outline their functionality and current implementation in the Linux kernel to illustrate the practical obstacles of using power management.

3.1. Dynamic Voltage and Frequency Scaling (DVFS)

The DVFS functionality was integrated into microprocessors to lower the dynamic power dissipation of a CPU by scaling the clock frequency and the chip voltage. Eq. (1) shows the simple relation between these quantities and the dynamic power:

$$P_{\mathrm{dynamic}} = C \cdot f \cdot V^{2} \qquad (1)$$

where C is the effective charging capacitance of the CPU, f is the clock frequency and V is the CPU supply voltage. Since DVFS reduces both the frequency and the voltage of the chip, and the voltage enters the equation squared, the power savings are more significant at high clock frequencies [28,31].
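As a worked illustration of Eq. (1), the C sketch below computes the dynamic power and the ratio between two operating points. The function names are illustrative, and the operating points used in the note afterwards (1.6 GHz at 1.2 V and 0.8 GHz at 0.9 V) are hypothetical values chosen for easy arithmetic, not measurements from this work.

```c
#include <assert.h>

/* Dynamic power from Eq. (1): P = C * f * V^2.
 * With C in farads, f in hertz and V in volts the result is in watts. */
double dynamic_power(double c_eff, double freq, double volt)
{
    assert(c_eff >= 0.0 && freq >= 0.0 && volt >= 0.0);
    return c_eff * freq * volt * volt;
}

/* Ratio P_new / P_old between two operating points (f, V).
 * The capacitance C cancels out, so it is not a parameter. */
double dynamic_power_ratio(double f_old, double v_old,
                           double f_new, double v_new)
{
    assert(f_old > 0.0 && v_old > 0.0);
    double v_ratio = v_new / v_old;
    return (f_new / f_old) * v_ratio * v_ratio;
}
```

With these hypothetical points, dynamic_power_ratio(1.6e9, 1.2, 0.8e9, 0.9) evaluates to 0.5 × 0.75² ≈ 0.281, roughly a 72% reduction in dynamic power, whereas halving the frequency at constant voltage would only give 50%. The extra saving comes entirely from the squared voltage term.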

The relation between frequency and voltage is usually stored in a hardware-specific look-up table, from which the OS retrieves the values when DVFS is used. Since switching the clock frequency involves both hardware and software actions, we investigated the procedure in more detail to pinpoint the source of the latency. In a Linux-based system, the clock frequency is scaled according to the following core procedure:

(1) A change in frequency is requested by the user.

(2) A mutex is taken to prevent other threads from changing the frequency.

(3) Platform-specific routines are called from the generic interface.

(4) The CPU clock is switched from the PLL to a temporary MPLL source.

(5) A safe voltage level for the new clock frequency is selected.

(6) New values for the clock divider and the PLL are written to the registers.

(7) The mutex is released and the system returns to normal operation.
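The seven steps above can be modeled in user space as follows. This is a sketch under stated assumptions, not the kernel's cpufreq code: the operating-point table, the struct platform_ops hooks and the tracing backend are hypothetical stand-ins for the hardware-specific look-up table and the platform driver routines, and a pthread mutex plays the role of the mutex from step (2).

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

/* Hypothetical look-up table: frequency (kHz) -> voltage (uV). */
struct opp { unsigned freq_khz; unsigned volt_uv; };

static const struct opp opp_table[] = {
    {  800000,  900000 },
    { 1200000, 1000000 },
    { 1600000, 1200000 },
};

/* Hypothetical platform hooks for steps (4)-(6). */
struct platform_ops {
    void (*switch_to_temp_source)(void *ctx);              /* (4) PLL -> MPLL */
    void (*set_voltage)(void *ctx, unsigned volt_uv);       /* (5) */
    void (*write_pll_and_divider)(void *ctx, unsigned khz); /* (6) */
    void *ctx;
};

static pthread_mutex_t freq_lock = PTHREAD_MUTEX_INITIALIZER;

/* Voltage for an exactly matching table frequency, or 0 if unsupported. */
unsigned lookup_voltage(unsigned freq_khz)
{
    for (size_t i = 0; i < sizeof opp_table / sizeof opp_table[0]; i++)
        if (opp_table[i].freq_khz == freq_khz)
            return opp_table[i].volt_uv;
    return 0;
}

/* Steps (2)-(7) for a request made in step (1). Returns 0 on success. */
int change_frequency(const struct platform_ops *ops, unsigned freq_khz)
{
    unsigned volt_uv = lookup_voltage(freq_khz);
    if (volt_uv == 0)
        return -1;                                   /* not in the table */

    pthread_mutex_lock(&freq_lock);                  /* (2) serialize changes */
    /* (3) generic code dispatches to the platform-specific routines */
    ops->switch_to_temp_source(ops->ctx);            /* (4) */
    ops->set_voltage(ops->ctx, volt_uv);             /* (5) safe voltage */
    ops->write_pll_and_divider(ops->ctx, freq_khz);  /* (6) */
    pthread_mutex_unlock(&freq_lock);                /* (7) back to normal */
    return 0;
}

/* Hypothetical tracing backend: records the order in which hooks run. */
struct trace { int steps[8]; int n; unsigned volt_uv, freq_khz; };

static void trace_push(struct trace *t, int step)
{
    if (t->n < 8)
        t->steps[t->n++] = step;
}
static void trace_temp(void *c) { trace_push(c, 4); }
static void trace_volt(void *c, unsigned v)
{
    struct trace *t = c;
    trace_push(t, 5);
    t->volt_uv = v;
}
static void trace_pll(void *c, unsigned khz)
{
    struct trace *t = c;
    trace_push(t, 6);
    t->freq_khz = khz;
}
```

Routing steps (4) to (6) through function pointers mirrors step (3), where generic code calls platform-specific routines; the tracing backend records the order in which they run, so the model can be checked without real hardware.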

3.1.1. DVFS implementations

To adjust the DVFS settings, the Linux kernel uses a frequency

governor [15] to select, during run-time, the most appropriate fre-

quency based on a set of policies. In order to not be affected by the

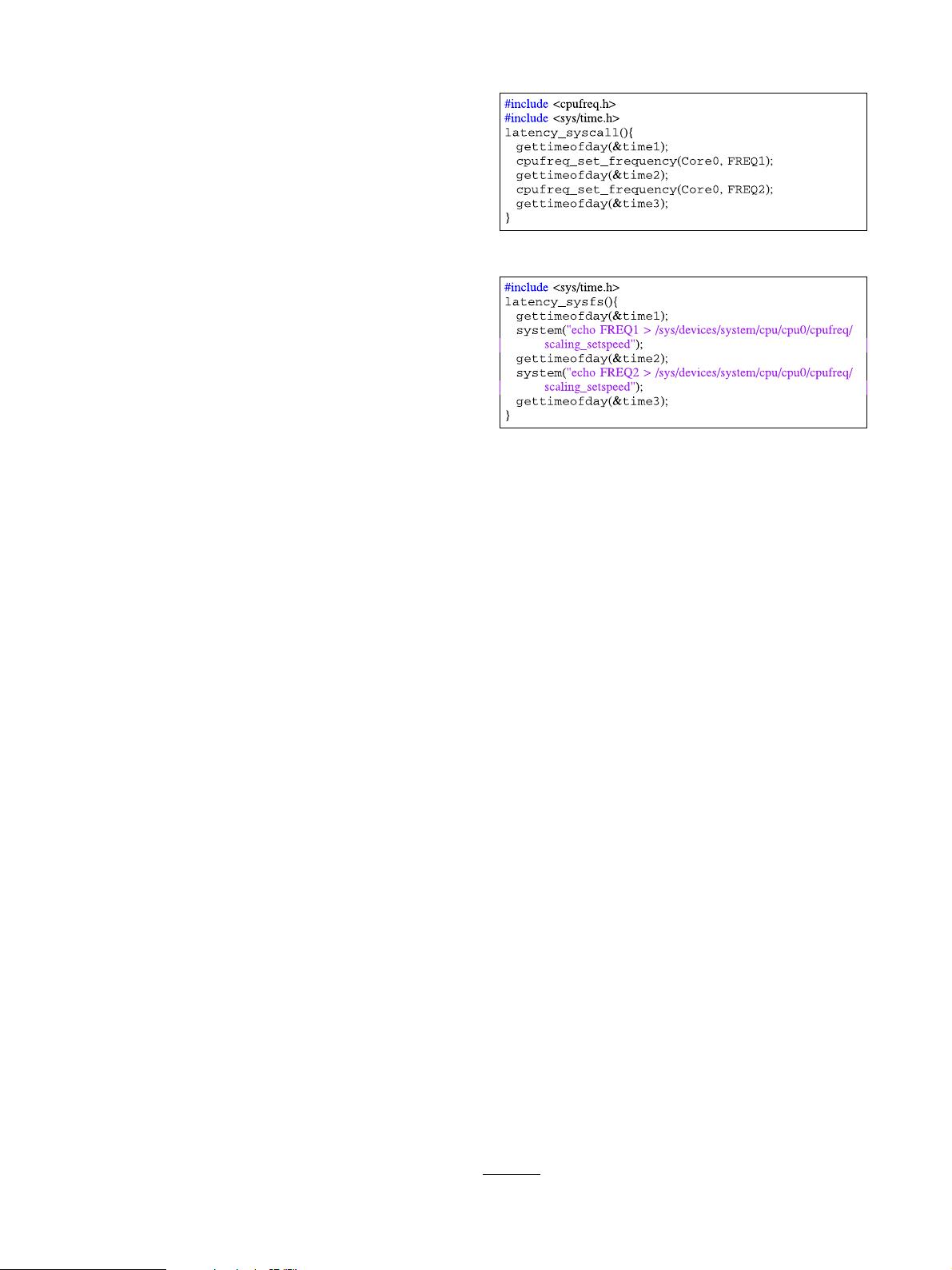

Listing 1. Pseudo code for measuring DVFS latency using system calls.

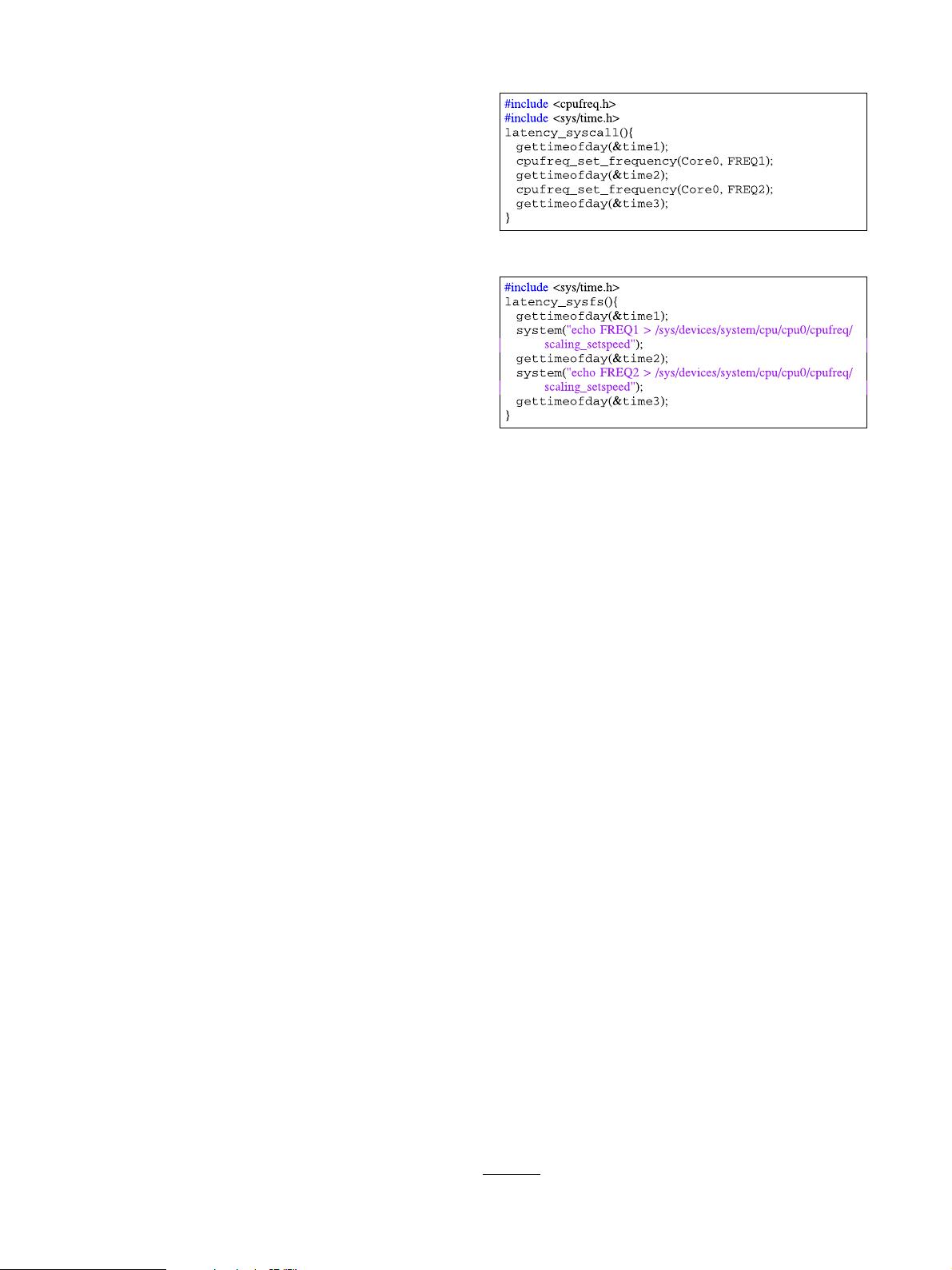

Listing 2. Pseudo code for measuring DVFS latency using sysfs .

governors, we selected the userspace governor for application-

controlled DVFS. The DVFS functionality can be accessed either by

directly writing to the sysfs interface or by using the system

calls. By using the sysfs , the DVFS procedure includes file man-

agement which is expected to introduce more overhead than call-

ing the kernel headers directly from the application. We studied,

however, both options in order to validate the latency differences

between the user space interface and the system call.
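On a standard Linux system, the governor of each CPU is exposed as the sysfs attribute /sys/devices/system/cpu/cpuN/cpufreq/scaling_governor. The sketch below, assuming root privileges and a kernel in which the userspace governor is available (it is then listed in scaling_available_governors), selects that governor for one CPU. The helper names are hypothetical.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Build the path of a cpufreq sysfs attribute for one CPU, e.g.
 * /sys/devices/system/cpu/cpu0/cpufreq/scaling_governor.
 * Returns 0 on success, -1 if the buffer is too small. */
int cpufreq_attr_path(char *buf, size_t len, int cpu, const char *attr)
{
    assert(buf != NULL && attr != NULL && cpu >= 0);
    int n = snprintf(buf, len, "/sys/devices/system/cpu/cpu%d/cpufreq/%s",
                     cpu, attr);
    return (n < 0 || (size_t)n >= len) ? -1 : 0;
}

/* Write a string to a (sysfs) file; returns 0 on success, -1 on error.
 * fclose() flushes the buffered data, so an error reported by the kernel
 * on the actual write surfaces there. */
int write_attr(const char *path, const char *value)
{
    FILE *f = fopen(path, "w");
    if (f == NULL)
        return -1;
    size_t len = strlen(value);
    int ok = fwrite(value, 1, len, f) == len;
    if (fclose(f) != 0)
        ok = 0;
    return ok ? 0 : -1;
}

/* Hand frequency selection to the application by selecting the
 * userspace governor for one CPU (requires root on a real system). */
int select_userspace_governor(int cpu)
{
    char path[128];
    if (cpufreq_attr_path(path, sizeof path, cpu, "scaling_governor") != 0)
        return -1;
    return write_attr(path, "userspace");
}
```

Once select_userspace_governor(0) succeeds, dynamic governors no longer change the frequency of CPU 0, which is what allows the latency of each explicit request to be measured in isolation.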

3.1.1.1. System call interface. The system call interface for DVFS under Linux is provided directly by the Linux kernel. We measured the elapsed time between issuing the DVFS system call and the return of the call, which indicates that the clock frequency has changed. Listing 1 outlines the pseudo code for accessing the DVFS functionality through the system call interface.
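The latency measurement described above can be sketched as a small timing harness around the call. The text does not name the call, so the dvfs_op type is a hypothetical placeholder for a function that issues the frequency change and returns once the call has completed. Timestamps come from clock_gettime with CLOCK_MONOTONIC, whose resolution is more than sufficient for microsecond-level results.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <stddef.h>
#include <time.h>

/* Hypothetical signature of the operation under test: issues the DVFS
 * request and returns 0 once the call has completed. */
typedef int (*dvfs_op)(void *arg);

/* Microseconds between two CLOCK_MONOTONIC timestamps. */
double elapsed_us(const struct timespec *start, const struct timespec *end)
{
    return (double)(end->tv_sec - start->tv_sec) * 1e6
         + (double)(end->tv_nsec - start->tv_nsec) / 1e3;
}

/* Time each of `iterations` calls of op(arg) individually and return the
 * mean latency in microseconds, or -1.0 if any call reports an error. */
double mean_latency_us(dvfs_op op, void *arg, int iterations)
{
    assert(op != NULL && iterations > 0);
    double total = 0.0;
    for (int i = 0; i < iterations; i++) {
        struct timespec t0, t1;
        clock_gettime(CLOCK_MONOTONIC, &t0);   /* just before issuing */
        int rc = op(arg);
        clock_gettime(CLOCK_MONOTONIC, &t1);   /* right after return */
        if (rc != 0)
            return -1.0;
        total += elapsed_us(&t0, &t1);
    }
    return total / iterations;
}

/* No-op operation: timing it estimates the overhead of the harness itself. */
int noop_op(void *arg)
{
    (void)arg;
    return 0;
}
```

Timing noop_op first gives a baseline that can be subtracted from the DVFS latencies, so the reported numbers reflect the frequency change rather than the cost of reading the clock.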

3.1.1.2. User space interface. The second option is to use the sysfs interface for accessing the DVFS functionality from user space. The CPU clock frequency is changed by writing the new frequency into a sysfs file, which the kernel reads and then uses to change the frequency. The kernel functionality is not called directly from the C program; instead, file system I/O is required for both reads and writes to the sysfs filesystem. Listing 2 outlines an example of the DVFS call via the sysfs interface.
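A corresponding sketch of the sysfs path writes the target frequency, in kHz as cpufreq expects, to scaling_setspeed (writable only while the userspace governor is active) and reads a frequency back, for example from scaling_cur_freq. It uses plain open/write/close to make the file I/O explicit. The function names are hypothetical, and on real sysfs files the write requires root privileges.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <stdio.h>
#include <stdlib.h>
#include <unistd.h>

/* Write a frequency in kHz to a file such as
 * /sys/devices/system/cpu/cpu0/cpufreq/scaling_setspeed.
 * Uses the same open flags as the shell's '>' redirection.
 * Returns 0 on success, -1 on error. */
int sysfs_set_khz(const char *path, unsigned long freq_khz)
{
    char buf[32];
    int len = snprintf(buf, sizeof buf, "%lu\n", freq_khz);
    assert(len > 0 && (size_t)len < sizeof buf);

    int fd = open(path, O_WRONLY | O_CREAT | O_TRUNC, 0644);
    if (fd < 0)
        return -1;
    int rc = (write(fd, buf, (size_t)len) == (ssize_t)len) ? 0 : -1;
    if (close(fd) != 0)
        rc = -1;
    return rc;
}

/* Read a frequency in kHz, e.g. from scaling_cur_freq; 0 on error. */
unsigned long sysfs_get_khz(const char *path)
{
    char buf[32];
    int fd = open(path, O_RDONLY);
    if (fd < 0)
        return 0;
    ssize_t n = read(fd, buf, sizeof buf - 1);
    close(fd);
    if (n <= 0)
        return 0;
    buf[n] = '\0';
    return strtoul(buf, NULL, 10);
}
```

For example, sysfs_set_khz("/sys/devices/system/cpu/cpu0/cpufreq/scaling_setspeed", 1200000) would request 1.2 GHz on CPU 0, provided that frequency is supported. Each request costs an open, a write and a close, which is the file-management overhead that the system call interface avoids.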

3.1.2. DVFS measurement results

The user space and kernel space mechanisms were evaluated, and the results are presented in this section. Since the DVFS mechanism is ultimately executed on kernel- and user-space threads, the system should be stressed with different load levels to evaluate the impact on the response time. For this purpose, we used spurg-bench [23], a benchmark capable of generating a defined set of load levels on a set of threads executing, for example, floating-point multiplications. We generated load levels from 0% to 90% using spurg-bench. Furthermore, we generated a load level of 100% using stress¹, since this benchmark is designed to represent the maximum-case CPU utilization. All experiments were repeated 100 times with different frequency hops, using a timing granularity of microseconds.
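To illustrate what a defined load level means, the sketch below uses a common duty-cycle technique: within each control period, a thread performs floating-point multiplications for load_pct percent of the time and sleeps for the remainder. This is an assumption for illustration only; it is not the implementation of spurg-bench, and the period length and function names are hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <time.h>

/* Split one control period into busy and idle time for a load level in
 * percent, e.g. 40% of 10 ms gives 4 ms busy and 6 ms idle. */
void duty_cycle_split(long long period_ns, int load_pct,
                      long long *busy_ns, long long *idle_ns)
{
    assert(period_ns > 0 && load_pct >= 0 && load_pct <= 100);
    *busy_ns = period_ns * load_pct / 100;
    *idle_ns = period_ns - *busy_ns;
}

static long long now_ns(void)
{
    struct timespec t;
    clock_gettime(CLOCK_MONOTONIC, &t);
    return (long long)t.tv_sec * 1000000000LL + t.tv_nsec;
}

/* Keep the calling thread at roughly load_pct percent utilization for
 * `periods` periods: floating-point multiplications while busy, sleep
 * while idle. Returns the final product so the work is not optimized out. */
double generate_load(long long period_ns, int load_pct, int periods)
{
    long long busy_ns, idle_ns;
    duty_cycle_split(period_ns, load_pct, &busy_ns, &idle_ns);
    volatile double x = 1.0;

    for (int p = 0; p < periods; p++) {
        long long end = now_ns() + busy_ns;
        while (now_ns() < end)
            x = x * 1.000001 * 0.999999;   /* product stays close to 1 */
        if (idle_ns > 0) {
            struct timespec nap = { .tv_sec = idle_ns / 1000000000LL,
                                    .tv_nsec = idle_ns % 1000000000LL };
            nanosleep(&nap, NULL);
        }
    }
    return x;
}
```

To load several cores, one would run generate_load on each of a set of threads (for instance created with pthread_create). A 100% level degenerates into a busy loop with no sleep, which is the maximum-utilization case that stress was used for in the experiments.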

¹ http://people.seas.harvard.edu/apw/stress/