
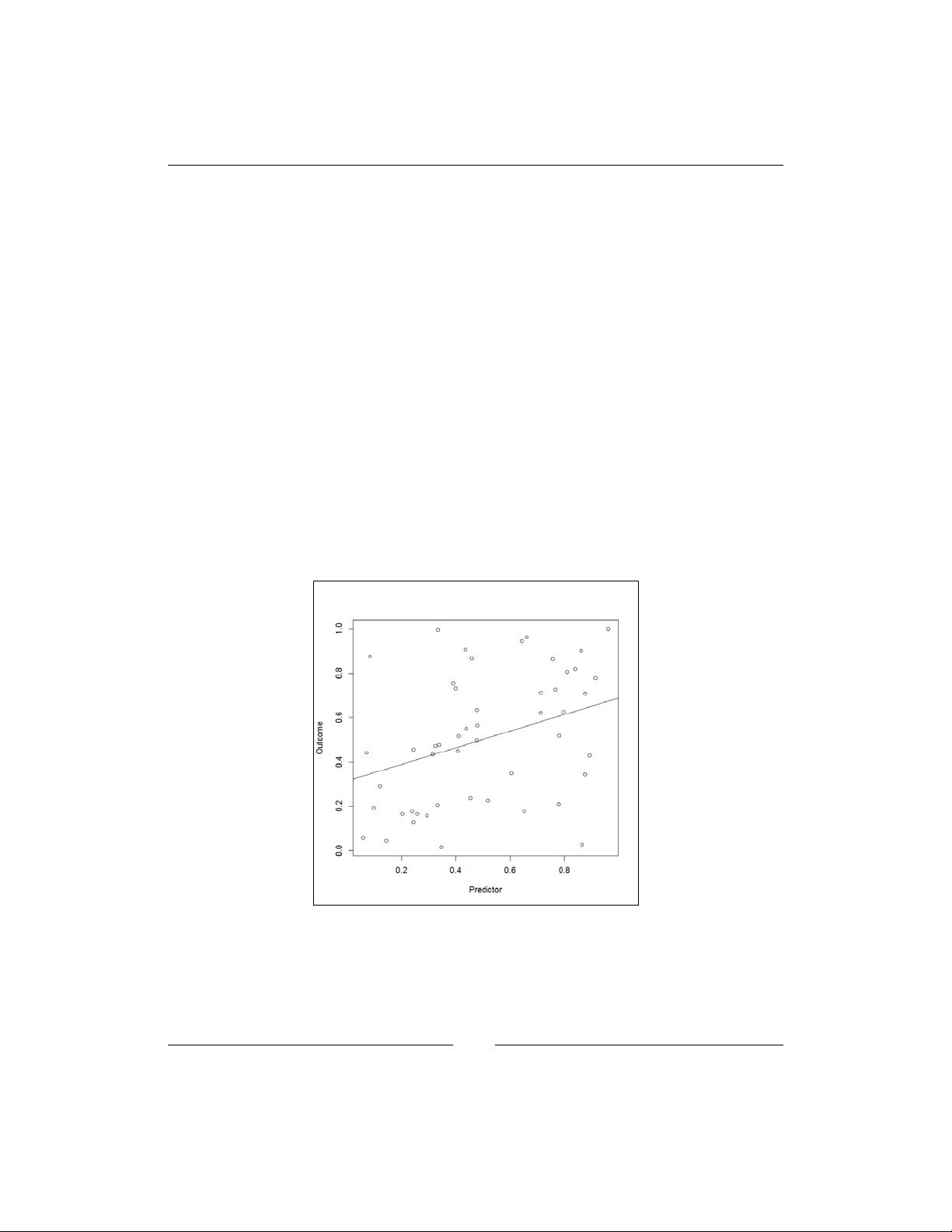

Believe it or not, the population these observations come from is that of randomly generated numbers. We generated a data frame of 50 columns, each holding 50 randomly generated numbers. We then examined all the correlations (manually) and generated a scatterplot of the two attributes with the largest correlation we found. The code is provided here, in case you want to check it yourself: line 1 sets the seed so that you find the same results as we did, line 2 generates the data frame, line 3 fills it with random numbers, column by column, line 4 generates the scatterplot, line 5 fits the regression line, and line 6 tests the significance of the correlation:

1 set.seed(1)

2 DF = data.frame(matrix(nrow=50,ncol=50))

3 for (i in 1:50) DF[,i] = runif(50)

4 plot(DF[[2]],DF[[16]], xlab = "Predictor", ylab = "Outcome")

5 abline(lm(DF[[16]]~DF[[2]]))

6 cor.test(DF[[2]], DF[[16]])
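The manual search for the largest correlation can also be done programmatically. The following is only a minimal sketch, not the procedure we used: it assumes that "largest" means largest in absolute value, and the names cm and strongest are introduced here purely for illustration.

```r
# Rebuild the same data frame as in the listing above
set.seed(1)
DF <- data.frame(matrix(nrow = 50, ncol = 50))
for (i in 1:50) DF[, i] <- runif(50)

# Correlation matrix of all 50 columns; blank out the diagonal,
# which only holds the correlation of each attribute with itself
cm <- cor(DF)
diag(cm) <- NA

# Row and column indices of the largest absolute correlation
# (assumption: "largest" is taken in absolute value).
# The matrix is symmetric, so each pair normally shows up twice.
strongest <- which(abs(cm) == max(abs(cm), na.rm = TRUE), arr.ind = TRUE)
print(strongest)
```

The indices printed by the last line can then be passed to plot() and cor.test() in the same way as columns 2 and 16 in the listing.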

How could this relationship happen given that the probability of it arising by chance was 2.4 in 1,000? Well, think of it: we correlated all 50 attributes two by two, which resulted in 2,450 tests (counting each pair in both orders, and not considering the correlation of each attribute with itself). Such a spurious correlation was to be expected. The usual threshold below which we consider a relationship significant is p = 0.05, as we will discuss in Chapter 8, Probability Distributions, Covariance, and Correlation. This means that, when no real relationship exists, we expect to be wrong about once in 20 tests. You would be right to suspect that there are other significant correlations in the generated data frame (there should be approximately 122 of them in total, that is, 0.05 x 2,450). This is the reason why we should always correct for the number of tests. In our example, as we performed 2,450 tests, our threshold for significance should be 0.0000204 (0.05 / 2450). This is called the Bonferroni correction.
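To see the effect of the correction on the generated data, one could count how many of the 2,450 tests fall below each threshold. This is a sketch under stated assumptions rather than a definitive implementation: the names tests, alpha, bonferroni_alpha, n_uncorrected, and n_bonferroni are ours, and the counts are not quoted here because they depend on the generated numbers.

```r
# Rebuild the same data frame as before
set.seed(1)
DF <- data.frame(matrix(nrow = 50, ncol = 50))
for (i in 1:50) DF[, i] <- runif(50)

# Every ordered pair of distinct columns: 50 * 49 = 2,450 tests
tests <- expand.grid(i = 1:50, j = 1:50)
tests <- tests[tests$i != tests$j, ]

# p-value of the correlation test for each pair
tests$p <- mapply(function(i, j) cor.test(DF[[i]], DF[[j]])$p.value,
                  tests$i, tests$j)

alpha <- 0.05
bonferroni_alpha <- alpha / nrow(tests)   # 0.05 / 2450

# Number of "significant" correlations before and after the correction
n_uncorrected <- sum(tests$p < alpha)
n_bonferroni  <- sum(tests$p < bonferroni_alpha)
print(c(uncorrected = n_uncorrected, bonferroni = n_bonferroni))
```

Base R also provides p.adjust(tests$p, method = "bonferroni"), which multiplies the p-values by the number of tests instead of dividing the threshold; comparing the adjusted values to 0.05 leads to the same decisions.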

Spurious correlations are always a possibility in data analysis and this should be kept in mind at all times. A related concept is that of overfitting. Overfitting happens, for instance, when a weak classifier bases its prediction on the noise in data. We will discuss overfitting in the book, particularly when discussing cross-validation in Chapter 14, Cross-validation and Bootstrapping Using Caret and Exporting Predictive Models Using PMML. All the chapters are listed in the following section.

We hope you enjoy reading the book and hope you learn a lot from us!
