3

It is worth mentioning that the residual r

k

of the gOMP is

orthogonal to the columns of Φ

Λ

k since

Φ

Λ

k , r

k

=

Φ

Λ

k , P

⊥

Λ

k

y

(4)

= Φ

′

Λ

k

P

⊥

Λ

k

y (5)

= Φ

′

Λ

k

P

⊥

Λ

k

′

y (6)

=

P

⊥

Λ

k

Φ

Λ

k

′

y = 0 (7)

where (6) follows from the symmetry of P

⊥

Λ

k

(P

⊥

Λ

k

=

P

⊥

Λ

k

′

)

and (7) is due to

P

⊥

Λ

k

Φ

Λ

k = (I − P

Λ

k ) Φ

Λ

k = Φ

Λ

k −Φ

Λ

k Φ

†

Λ

k

Φ

Λ

k = 0.

Here we note that this property is satisfied when Φ

Λ

k has

full column rank, which is true if k ≤ m/N in the gOMP

operation. It is clear from this observation that indices in

Λ

k

cannot be re-selected in the succeeding iterations and the

cardinality of Λ

k

becomes simply kN. When the iteration loop

of the gOMP is finished, therefore, it is possible that the final

support set Λ

s

contains indices not in T . Note that, even in

this situation, the final result is unaffected and the original

signal is recovered because

ˆ

x

Λ

s

= Φ

†

Λ

s

y (8)

= (Φ

′

Λ

s

Φ

Λ

s

)

−1

Φ

′

Λ

s

Φ

T

x

T

(9)

= (Φ

′

Λ

s

Φ

Λ

s

)

−1

Φ

′

Λ

s

(Φ

Λ

s

x

Λ

s

)

−(Φ

′

Λ

s

Φ

Λ

s

)

−1

Φ

′

Λ

s

Φ

Λ

s

−T

x

Λ

s

−T

(10)

= x

Λ

s

, (11)

where (10) follows from the fact that x

Λ

s

−T

= 0. From

this observation, we deduce that as long as at least one

correct index is found in each iteration of the gOMP, we can

ensure that the original signal is perfectly recovered within K

iterations. In practice, however, the number of correct indices

being selected is usually more than one so that the required

number of iterations is much smaller than K.

In order to observe the empirical performance of the gOMP

algorithm, we performed computer simulations. In our ex-

periment, we use the testing strategy in [16], [22] which

measures the effectiveness of recovery algorithms by checking

the empirical frequency of exact reconstruction in the noiseless

environment. By comparing the maximal sparsity level of

the underlying sparse signals at which the perfect recovery

is ensured (this point is often called critical sparsity [16]),

accuracy of the reconstruction algorithms can be compared

empirically. In our simulation, the following algorithms are

considered.

1) LP technique for solving ℓ

1

-minimization problem

(http://cvxr.com/cvx/).

2) OMP algorithm.

3) gOMP algorithm.

4) StOMP with false alarm control (FAC) based threshold-

ing (http://sparselab.stanford.edu/).

1

5) ROMP algorithm

(http://www.cmc.edu/pages/faculty/DNeedell).

1

Since FAC scheme outperforms false discovery control (FDC) scheme, we

exclusively use FAC scheme in our simulation.

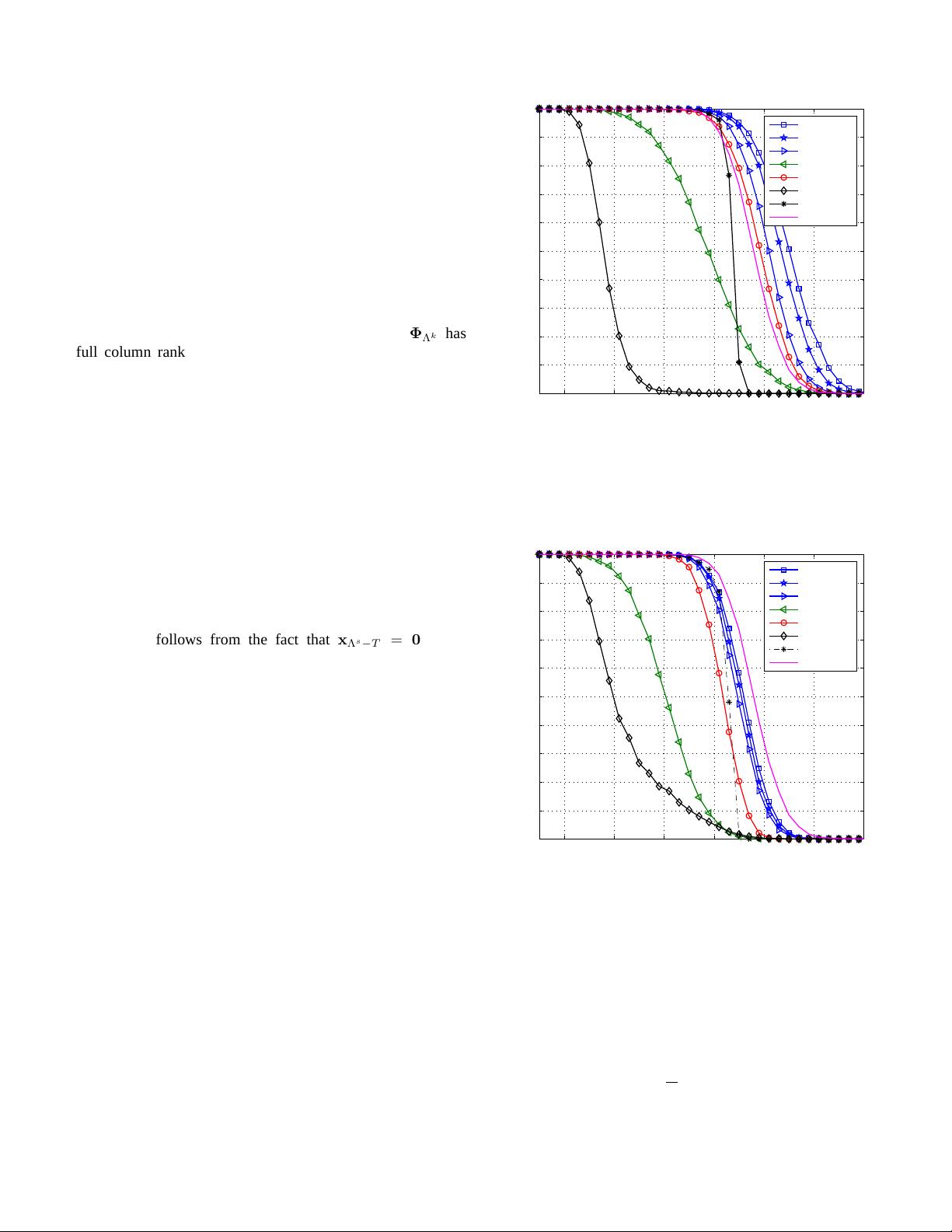

10 20 30 40 50 60 70

0

0.1

0.2

0.3

0.4

0.5

0.6

0.7

0.8

0.9

1

Sparsity

Frequency of Exact Reconstruction

gOMP (N=3)

gOMP (N=6)

gOMP (N=9)

OMP

StOMP

ROMP

CoSaMP

LP

Fig. 1. Reconstruction performance for K-sparse Gaussian signal vector as

a function of sparsity K.

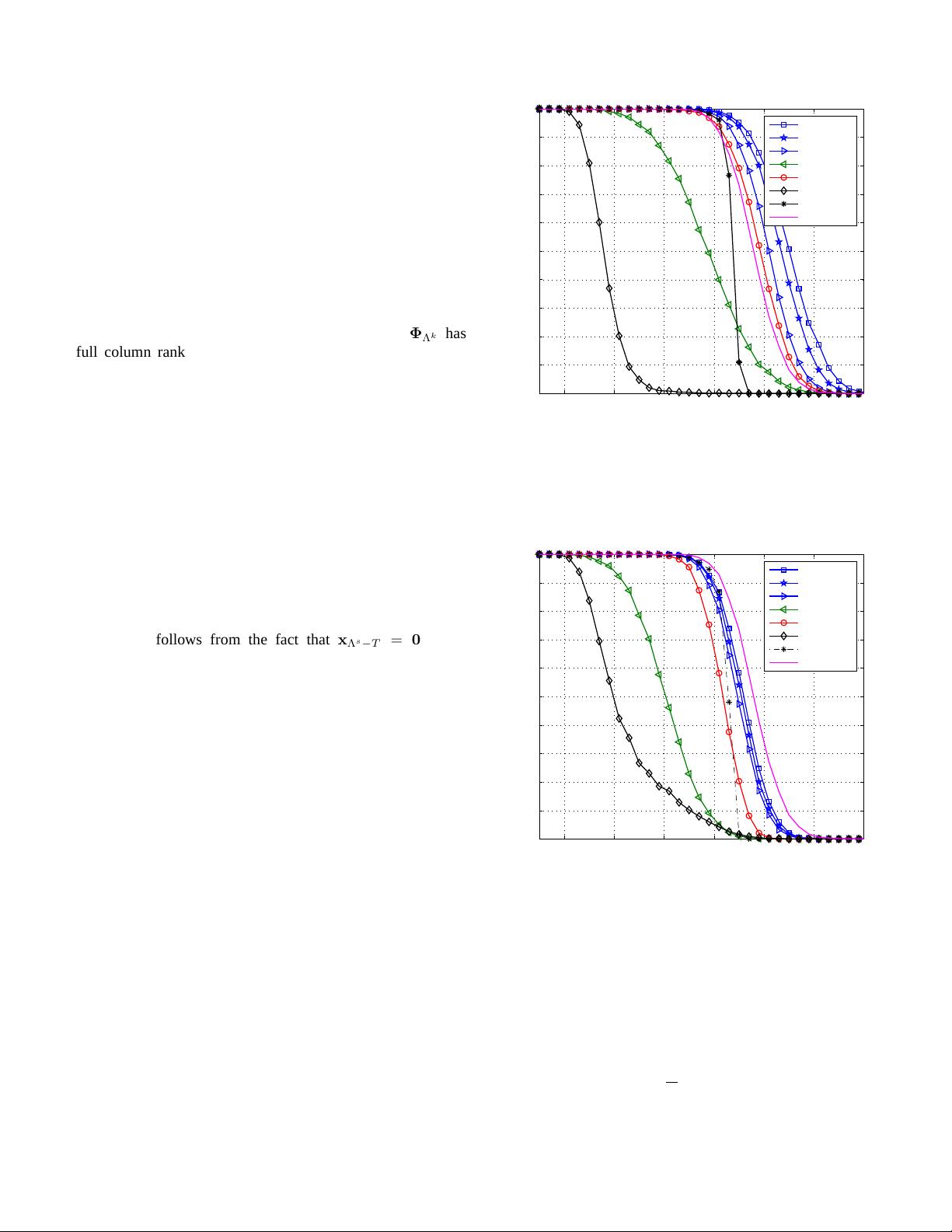

10 20 30 40 50 60 70

0

0.1

0.2

0.3

0.4

0.5

0.6

0.7

0.8

0.9

1

Sparsity

Frequency of Exact Reconstruction

gOMP (N=3)

gOMP (N=6)

gOMP (N=9)

OMP

StOMP

ROMP

CoSaMP

LP

Fig. 2. Reconstruction performance for K-sparse PAM signal vector as a

function of sparsity K.

6) CoSaMP algorithm

(http://www.cmc.edu/pages/faculty/DNeedell).

In each trial, we construct m ×n (m = 128 and n = 256)

sensing matrix Φ with entries drawn independently from

Gaussian distribution N(0,

1

m

). In addition, we generate a

K-sparse vector x whose support is chosen at random. We

consider two types of sparse signals; Gaussian signals and

pulse amplitude modulation (PAM) signals. Each nonzero

element of Gaussian signals is drawn from standard Gaussian