Correlated and Individual Multi-Modal Deep Learning for RGB-D Object Recognition

Ziyan Wang

Tsinghua University

zy-wang13@mails.tsinghua.edu.cn

Jiwen Lu

Tsinghua University

lujiwen@tsinghua.edu.cn

Ruogu Lin

Tsinghua University

lrg14@mails.tsinghua.edu.cn

Jianjiang Feng

Tsinghua University

jfeng@tsinghua.edu.cn

Jie Zhou

Tsinghua University

jzhou@tsinghua.edu.cn

Abstract

In this paper, we propose a correlated and individual

multi-modal deep learning (CIMDL) method for RGB-D

object recognition. Unlike most conventional RGB-D object

recognition methods which extract features from the RGB

and depth channels individually, our CIMDL jointly learns

feature representations from raw RGB-D data with a pair

of deep neural networks, so that the sharable and modal-

specific information can be simultaneously and explicitly

exploited. Specifically, we construct a pair of deep resid-

ual networks for the RGB and depth data, and concatenate

them at the top layer of the network with a loss function

which learns a new feature space where both the correlated

part and the individual part of the RGB-D information are

well modelled. The parameters of the whole network are

updated by back-propagation. Experimental

results on two widely used RGB-D object image

benchmark datasets clearly show that our method outper-

forms most of the state-of-the-art methods.

1. Introduction

Object recognition is one of the most challenging problems

in computer vision, and it has been catalysed by the swift

development of deep learning [16, 18, 24] in recent years.

Various works have achieved exciting results on several RGB

object recognition challenges [11, 14]. However, object

recognition using only RGB information has several limitations

in many real-world applications, because an RGB image projects

the 3-dimensional world into a 2-dimensional space, which

leads to inevitable data loss. To compensate for these shortcomings

of RGB images, using depth images as a complementary modality is

a plausible approach. The RGB image contains information of

color, shape and texture while the depth contains informa-

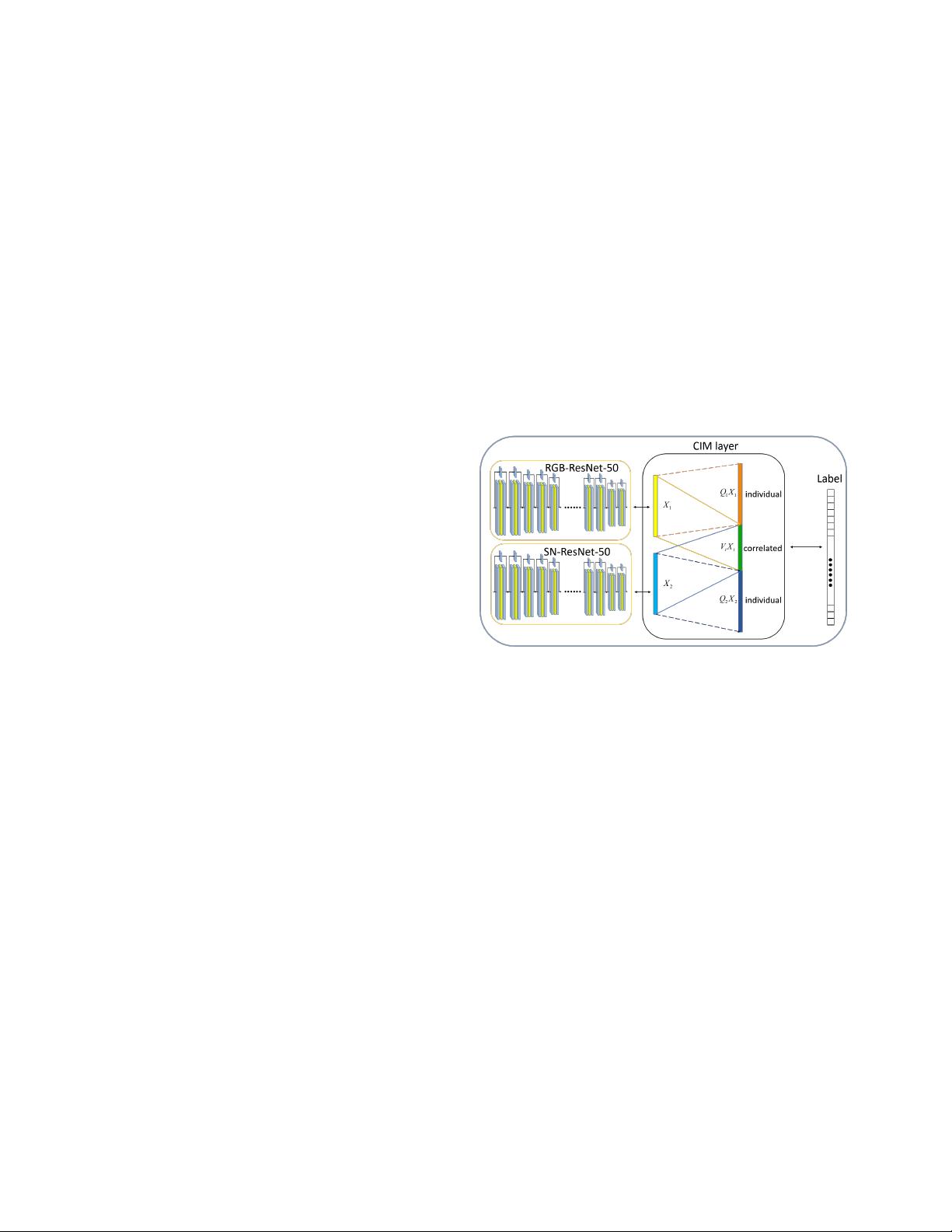

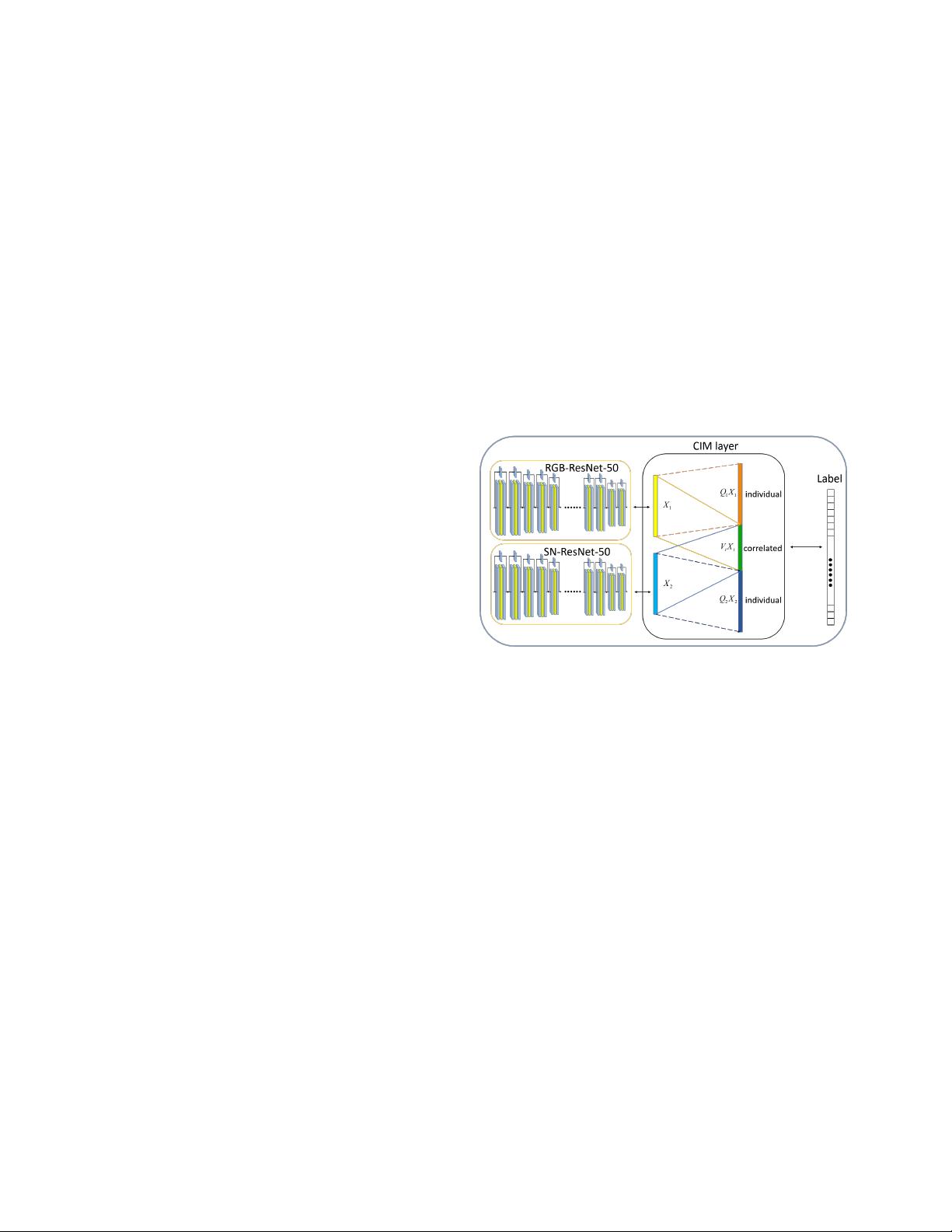

Figure 1. The pipeline of our proposed approach. We construct

a two-way ResNet for RGB images and surface normal depth im-

ages for feature extraction. We design a multi-modal learning layer

to learn the correlated part $V_i X_i$ and the individual parts $Q_i X_i$ of

the RGB and depth features, respectively. We project the RGB-D

features $X_1$, $X_2$ into a new feature space. The loss function

enforces the affinity of the correlated part from different modalities

and discriminative information of the modal-specific part, where

the combination weights are also automatically learned within the

same framework. (Best viewed in color.)
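To make the notation in Figure 1 concrete, the following is a minimal sketch of the multi-modal learning layer, not the authors' implementation. It assumes that the features $X_1$ (RGB) and $X_2$ (depth) are stored as matrices with one column per sample, and that $V_i$ and $Q_i$ are plain linear projections. The function names, the dimensions, the squared-distance affinity term and the fusion by simple concatenation are all illustrative assumptions. Random matrices stand in for the ResNet features and the learned projections, and the learned combination weights are omitted.

```python
import numpy as np

def split_modalities(X1, X2, V1, V2, Q1, Q2):
    """Project RGB (X1) and depth (X2) features into the two kinds of parts."""
    C1, C2 = V1 @ X1, V2 @ X2   # correlated parts V_i X_i
    S1, S2 = Q1 @ X1, Q2 @ X2   # individual (modal-specific) parts Q_i X_i
    return (C1, C2), (S1, S2)

def affinity_penalty(C1, C2):
    """Assumed affinity term: mean squared distance between correlated parts."""
    return float(np.mean(np.sum((C1 - C2) ** 2, axis=0)))

# Hypothetical sizes: feature dimension d, projected dimension k, n samples.
rng = np.random.default_rng(0)
d, k, n = 8, 4, 5
X1 = rng.standard_normal((d, n))   # stand-in for RGB ResNet features
X2 = rng.standard_normal((d, n))   # stand-in for depth ResNet features
V1, V2, Q1, Q2 = (rng.standard_normal((k, d)) for _ in range(4))

(C1, C2), (S1, S2) = split_modalities(X1, X2, V1, V2, Q1, Q2)

# Assumed fusion: stack all four parts into one descriptor per sample.
fused = np.concatenate([C1, C2, S1, S2], axis=0)
print(fused.shape, affinity_penalty(C1, C2))
```

In this sketch a small affinity penalty means that the correlated parts of the two modalities agree, while the individual parts are left free to carry modal-specific discriminative information.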

tion of shape and edge. Those basic features can serve both

as a strength and as a weakness in object recognition. For example,

we are able to tell the difference between an apple and

a table simply by the shape information from depth. However,

depth alone is ambiguous when it comes to deciding whether

an object is an apple or an orange. Likewise, when an orange

plastic ball and an orange are placed together, it is

equally difficult for us to tell the difference from the RGB

image alone. This means that a simple combination of features

from the two modalities sometimes jeopardizes the discriminability

of the features. Therefore, the shared and modality-specific

features should be chosen more carefully. Thus, we

believe a more elaborate combination of modality-specific


arXiv:1604.01655v3 [cs.CV] 9 Dec 2016