ESprint: QoS-Aware Management for Effective Computational Sprinting in Data Centers

Haoran Cai, Qiang Cao, Feng Sheng, Yang Yang, Changsheng Xie, Liang Xiao

Wuhan National Laboratory for Optoelectronics, Key Laboratory of Information Storage System of Ministry of Education,

School of Computer Science and Technology, Huazhong University of Science and Technology

Corresponding Author: caoqiang@hust.edu.cn

Abstract—In the era of 'dark silicon', modern data centers have to provision additional hardware resources to guarantee the Quality of Service (QoS) of applications under bursty workloads, which typically occur at low frequency but high intensity. Fortunately, Computational Sprinting has proven to be an effective approach to boosting the computing performance of many-core processor chips: it allows a chip to temporarily exceed its power and thermal limits by turning on all processor cores and absorbing the extra heat with novel phase-change materials. Consequently, it offers a promising way to handle these occasional workload bursts by unleashing the full potential of the hardware instead of deploying extra computing resources. In this work, we propose ESprint, a QoS-aware management system based on an effective feedback control mechanism for latency-critical applications in data centers. ESprint performs computational sprinting by precisely scheduling the core count, frequency levels, and sprinting duration, serving bursty workloads without QoS violations under the thermal constraint. Specifically, ESprint effectively predicts the load intensity in the next time interval and then dynamically allocates appropriate computing resources to minimize actual power consumption. Our prototype-based evaluation shows that, compared with the non-sprinting strategy, ESprint improves energy efficiency by up to 1.92x for typical workloads while ensuring QoS. We also explore the design space spanning energy efficiency, core count/frequency scaling techniques, workload characteristics, burst intensity, and QoS requirements, and draw several key insights to guide the effective use of computational sprinting in data centers.

Keywords: Computational Sprinting, Energy efficiency, Power management, Data center, Quality of Service
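To make the control loop outlined in the abstract more concrete (predict the load of the next interval, then provision just enough cores and frequency to meet it at minimal power), the following is a minimal, hypothetical Python sketch. It is not ESprint's actual controller. The EWMA load predictor, the linear capacity model, the cubic dynamic-power approximation, the 10% headroom, and all names and constants (`REQS_PER_CORE_GHZ`, `predict_next`, `choose_config`, etc.) are illustrative assumptions, and the sketch ignores thermal limits and sprint duration entirely.

```python
from dataclasses import dataclass
from itertools import product

# All constants below are hypothetical, chosen only for illustration.
REQS_PER_CORE_GHZ = 100.0  # assumed requests/s served by one core per GHz
STATIC_POWER_W = 20.0      # assumed static (idle) chip power in watts
DYN_COEFF_W = 1.5          # assumed dynamic watts per core at 1 GHz


@dataclass(frozen=True)
class Config:
    cores: int
    freq_ghz: float


def capacity(c: Config) -> float:
    """Assumed linear model: throughput scales with cores x frequency."""
    return c.cores * c.freq_ghz * REQS_PER_CORE_GHZ


def power(c: Config) -> float:
    """Assumed model: dynamic power per core grows roughly with f^3
    (voltage scaled along with frequency)."""
    return STATIC_POWER_W + c.cores * DYN_COEFF_W * c.freq_ghz ** 3


def predict_next(history, alpha=0.5):
    """Exponentially weighted moving average of observed load (req/s)."""
    est = history[0]
    for x in history[1:]:
        est = alpha * x + (1 - alpha) * est
    return est


def choose_config(predicted_load, core_counts, freqs, headroom=1.1):
    """Pick the lowest-power configuration whose capacity covers the
    predicted load plus headroom; if none does, run at full capacity."""
    candidates = [Config(n, f) for n, f in product(core_counts, freqs)]
    feasible = [c for c in candidates
                if capacity(c) >= headroom * predicted_load]
    if not feasible:
        return max(candidates, key=capacity)
    return min(feasible, key=power)


if __name__ == "__main__":
    load = predict_next([400.0, 400.0, 800.0])
    print(load, choose_config(load, [2, 4, 8], [1.0, 2.0, 3.0]))
```

With these assumed constants, a predicted load of 600 req/s selects 8 cores at 1.0 GHz (32 W) rather than 4 cores at 2.0 GHz (68 W), which hints at why the trade-off between core count scaling and frequency scaling matters for energy efficiency.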

I. INTRODUCTION

Modern processors cannot power on all cores at the nominal operating voltage and frequency due to thermal constraints, a phenomenon known as 'dark silicon' [11]. Recent studies have shown that computational sprinting, which activates idle cores and increases voltage and/or frequency for a short period of time by absorbing the extra heat with emerging materials (e.g., phase-change materials), provides a promising way to temporarily speed up application performance during workload bursts [28], [27], [34], [15].

On the other side, cloud data centers routinely endure

bursty workloads with low frequency but high intensity own

to interactive services (e.g., search, forum, news) [34], [21],

particularly under special events such as breaking news, online

shopping big sales (e.g., the Black Friday after Thanksgiving),

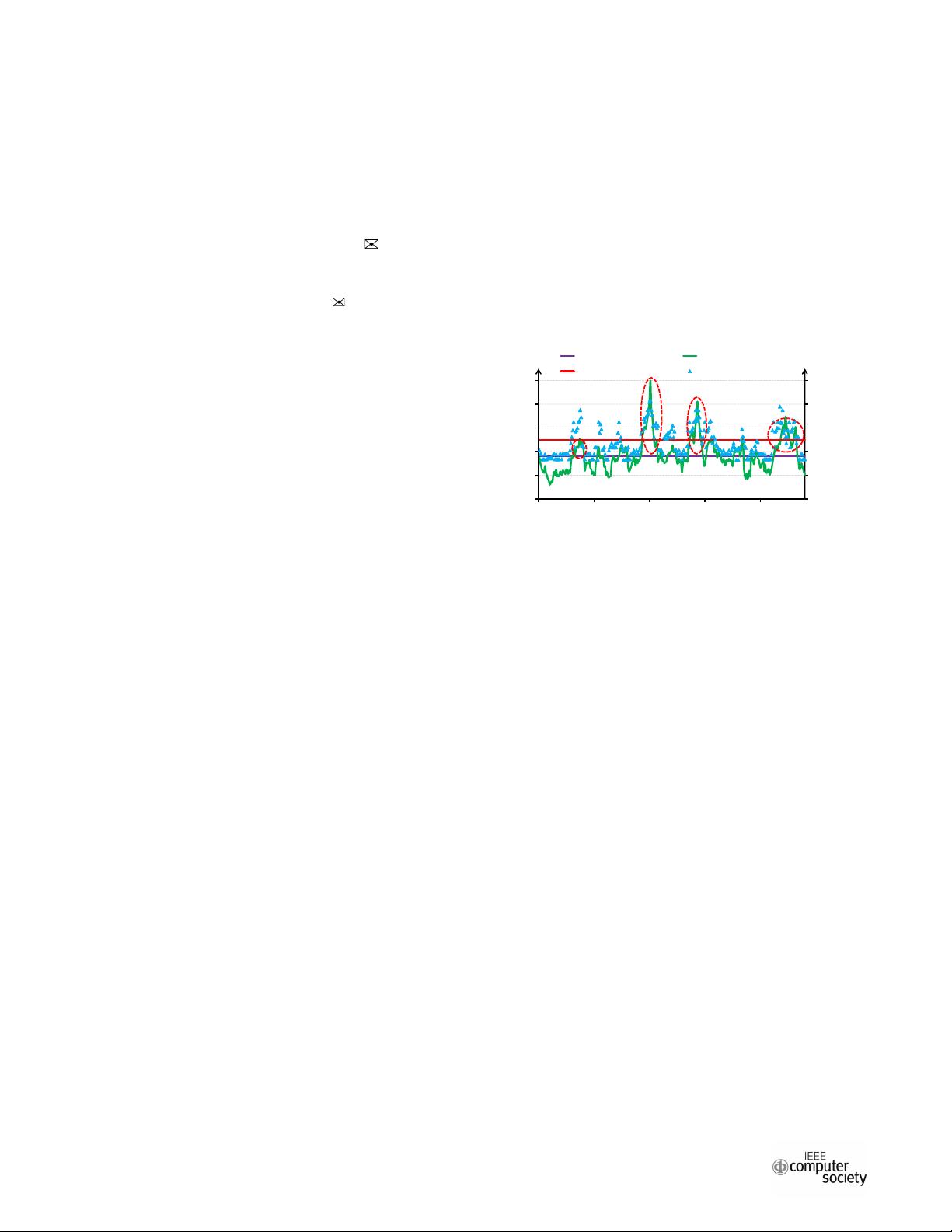

etc. As illustrated by Figure 1, the diurnal workload pattern

(green line) from a study of a Google data center [30] consists

0

0

0.2

0.4

0.6

0.8

1

0 5 10 15 20

Load

Time (Hour)

Figure 1: Workload pattern (normalized to the maximal load

intensity) for a Google data center [30] and measured latency

results under limited server processing capacity.

of several load spikes (indicated by the red ovals) during

a whole day with varying burst intensities and durations.

However, the actual processing capacity of modern servers in data centers, denoted by the purple line in Figure 1, is limited under a normal cooling environment. Therefore, as the figure shows, the measured latency can violate the quality of service (QoS) target (e.g., the 500 ms constraint for the Web-search workload, shown as the red line) during the load spikes. Because the QoS of latency-critical applications plays a pivotal role in the financial success of a business [2], [16], it is imperative to strictly ensure user satisfaction, even by deploying extra servers or high-density racks such as Scorpio [4] and the Open Compute Project [3], which leads to high investment cost and an over-provisioned power budget.

Fortunately, computational sprinting can temporarily unleash the full power of processors. Recent studies have applied this approach to improve the task throughput of data analytics workloads or to reduce the runtime of parallel workloads, avoiding the deployment of extra hardware and power budget [15], [27]. However, using computational sprinting to precisely serve workload bursts requires overcoming two critical challenges: a) Thermal constraints at the chip level and power limitations at the data center level on the supply side. During a sprint, the cooling system needs to effectively remove the extra heat dissipated by chip-level sprinting. In a data center, naively sprinting necessarily draws more power, potentially overloading facilities in the power infrastructure, such as the power distribution units (PDUs) and the on-site power substation. b) Workload fluctuation


2019 19th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing (CCGRID)

978-1-7281-0912-1/19/$31.00 ©2019 IEEE

DOI 10.1109/CCGRID.2019.00056