Oracle Solaris ZFS Features

The Oracle Solaris ZFS file system provides features and benefits not found in other file systems. The following table compares the features of the ZFS file system with traditional file systems.

Note - For a more detailed discussion of the differences between ZFS and historical file systems, see Oracle Solaris ZFS and Traditional File System Differences.

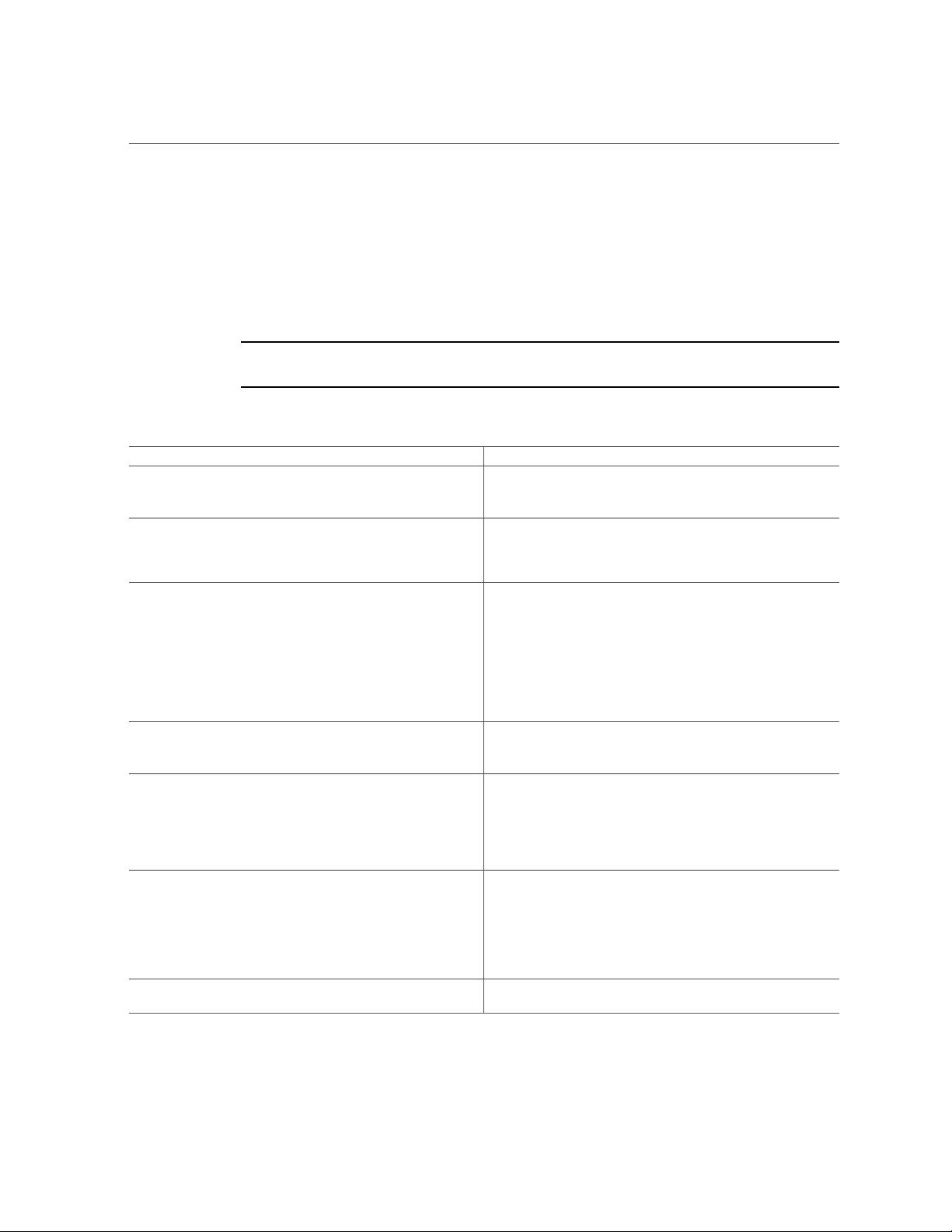

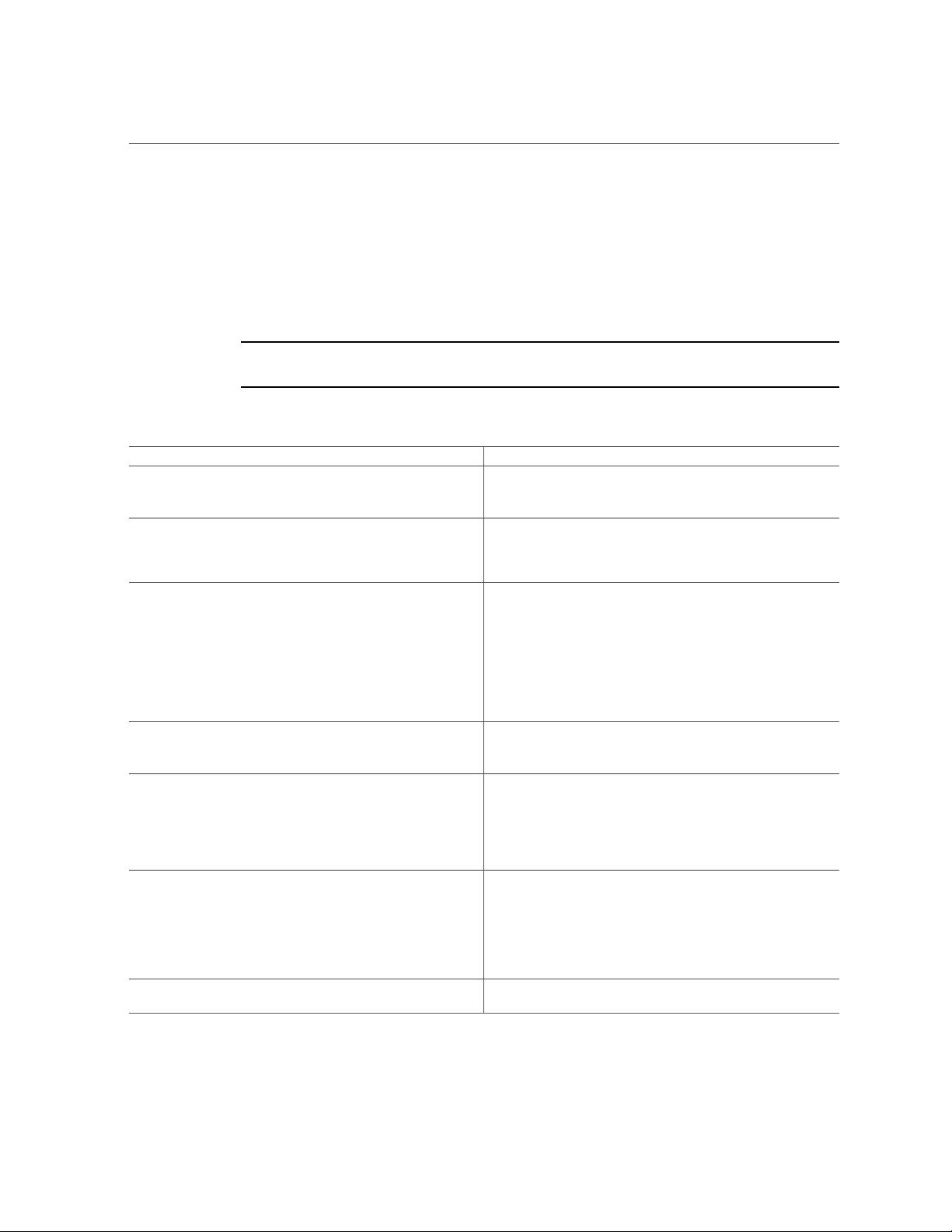

TABLE 1 Comparison of the ZFS File System and Traditional File Systems

| ZFS File System | Traditional File Systems |
| --- | --- |
| Uses the concept of storage pools created on devices. The pool size grows as more devices are added to the pool. The additional space is immediately available for use. | Constrained to one device and to the size of that device. |
| Volume manager unnecessary. Commands configure pools for data redundancy over multiple devices. | Requires volume manager to handle multiple devices to provide data redundancy, which adds to the complexity of administration. |
| Supports one file system per user or project for easier management. | Uses one file system to manage multiple subdirectories. |
| Set up and manage many file systems by issuing commands, and directly apply properties that can be inherited by the descendant file systems within the hierarchy. No need to edit the /etc/vfstab file. File system mounts or unmounts are automatic based on file system properties. You can create a snapshot, a read-only copy of a file system or volume, quickly and easily. Initially, snapshots consume no additional disk space within the pool. | Complex administration due to device and size constraints. For example, every time you add a new file system, you must edit the /etc/vfstab file. |
| Metadata is allocated dynamically. No pre-allocation or predetermined limits are set. The number of supported file systems is limited only by the available disk space. | Pre-allocation of metadata results in immediate space cost at the creation of the file system. Pre-allocation also predetermines the total number of file systems that can be supported. |
| Uses transactional semantics, where data management uses copy-on-write semantics, not data overwrite. Any sequence of operations is either entirely committed or entirely ignored. During accidental loss of power or a system crash, the most recently written pieces of data might be lost, but the file system always remains consistent and uncorrupted. | Overwrites data in place. File system vulnerable to getting into an inconsistent state, for example, if the system loses power between the time a data block is allocated and when it is linked into a directory. Tools such as the fsck command or journaling do not always guarantee a fix and can introduce unnecessary overhead. |
| All checksum verification and data recovery are performed at the file system layer, and are transparent to applications. All failures are detected and recovery can be performed. Supports self-healing data through its varying levels of data redundancy. A bad data block can be repaired by replacing it with correct data from another redundant copy. | Checksum verification, if provided, is performed on a per-block basis. Certain failures, such as writing a complete block to an incorrect location, can result in data that is incorrect but has no checksum errors. |
| ACL (Access Control List) model based on NFSv4 specifications to protect ZFS, similar to NT-style ACL. The model provides a much | In previous Oracle Solaris releases, ACL implementation was based on POSIX ACL specifications to protect UFS. |
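
The checksum and self-healing behavior in the comparison above can be illustrated with a small simulation. The sketch below is a toy model, not ZFS code: the `MirroredStore` class, its methods, and the use of SHA-256 are all assumptions made for illustration. It keeps the expected checksum for each block address apart from the block itself, so a complete block written to the wrong location fails verification, and it repairs the bad copy from the redundant one.

```python
import hashlib


def checksum(data: bytes) -> str:
    """Return a SHA-256 hex digest (a stand-in for a file system checksum)."""
    return hashlib.sha256(data).hexdigest()


class MirroredStore:
    """Hypothetical two-way mirror; not ZFS's actual on-disk layout."""

    def __init__(self, copies: int = 2) -> None:
        # Each "device" maps a block address to the bytes stored there.
        self.devices = [dict() for _ in range(copies)]
        # Expected checksums are indexed by address, apart from the data.
        self.expected = {}

    def write(self, addr: int, data: bytes) -> None:
        """Record the checksum, then store the data on every device."""
        self.expected[addr] = checksum(data)
        for dev in self.devices:
            dev[addr] = data

    def read(self, addr: int) -> bytes:
        """Return verified data, repairing bad copies from a good one."""
        want = self.expected[addr]
        good = None
        bad = []
        for dev in self.devices:
            data = dev.get(addr)
            if data is not None and checksum(data) == want:
                if good is None:
                    good = data
            else:
                bad.append(dev)
        if good is None:
            raise IOError(f"block {addr}: no copy matches its checksum")
        for dev in bad:
            dev[addr] = good  # self-heal: overwrite the bad copy
        return good


store = MirroredStore()
store.write(7, b"block seven")
store.write(8, b"block eight")

# Simulate a misdirected write: a complete, intact block lands at the
# wrong address on one device.
store.devices[0][7] = store.devices[0][8]

recovered = store.read(7)
print(recovered)                    # b'block seven'
print(store.devices[0][7])          # b'block seven' (repaired)
```

In this model the misdirected block is internally intact, so a checksum stored inside the block would still match. Verifying against a checksum kept per address is what exposes the error.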

16 Managing ZFS File Systems in Oracle Solaris 11.3 • May 2019
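
The transactional, copy-on-write semantics described in Table 1 can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how ZFS is implemented: the `CowStore` class, its `transaction` and `snapshot` methods, and the `crash_before_commit` flag are hypothetical names. Updates are written to new blocks, and the committed view changes only when the root reference is switched, so an interrupted sequence of operations is ignored as a whole.

```python
class CowStore:
    """Hypothetical copy-on-write store, for illustration only."""

    def __init__(self) -> None:
        self.blocks = {}   # block id -> contents, never overwritten
        self.next_id = 0
        self.root = {}     # committed view: file name -> block id

    def _alloc(self, data: bytes) -> int:
        """Store data in a fresh block and return its id."""
        block_id = self.next_id
        self.next_id += 1
        self.blocks[block_id] = data
        return block_id

    def transaction(self, updates: dict, crash_before_commit: bool = False) -> None:
        """Apply all updates or none of them."""
        new_root = dict(self.root)               # work on a copy of the root
        for name, data in updates.items():
            new_root[name] = self._alloc(data)   # old blocks stay untouched
        if crash_before_commit:
            raise RuntimeError("simulated power loss")
        self.root = new_root                     # single switch commits everything

    def snapshot(self) -> dict:
        """Return a read-only view; copies references, not data blocks."""
        return dict(self.root)

    def read(self, name: str, view: dict = None) -> bytes:
        """Read a file from the committed view or from a snapshot."""
        view = self.root if view is None else view
        return self.blocks[view[name]]


store = CowStore()
store.transaction({"a.txt": b"v1", "b.txt": b"v1"})

blocks_before = len(store.blocks)
snap = store.snapshot()
blocks_after = len(store.blocks)    # unchanged: the snapshot shares blocks

try:
    store.transaction({"a.txt": b"v2", "b.txt": b"v2"}, crash_before_commit=True)
except RuntimeError:
    pass
after_crash = (store.read("a.txt"), store.read("b.txt"))

store.transaction({"a.txt": b"v3"})
print(after_crash)                   # (b'v1', b'v1')
print(store.read("a.txt"))           # b'v3'
print(store.read("a.txt", snap))     # b'v1'
```

The interrupted transaction leaves unreferenced blocks behind in this toy model. It makes no attempt to reclaim them, which a real file system would have to do.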