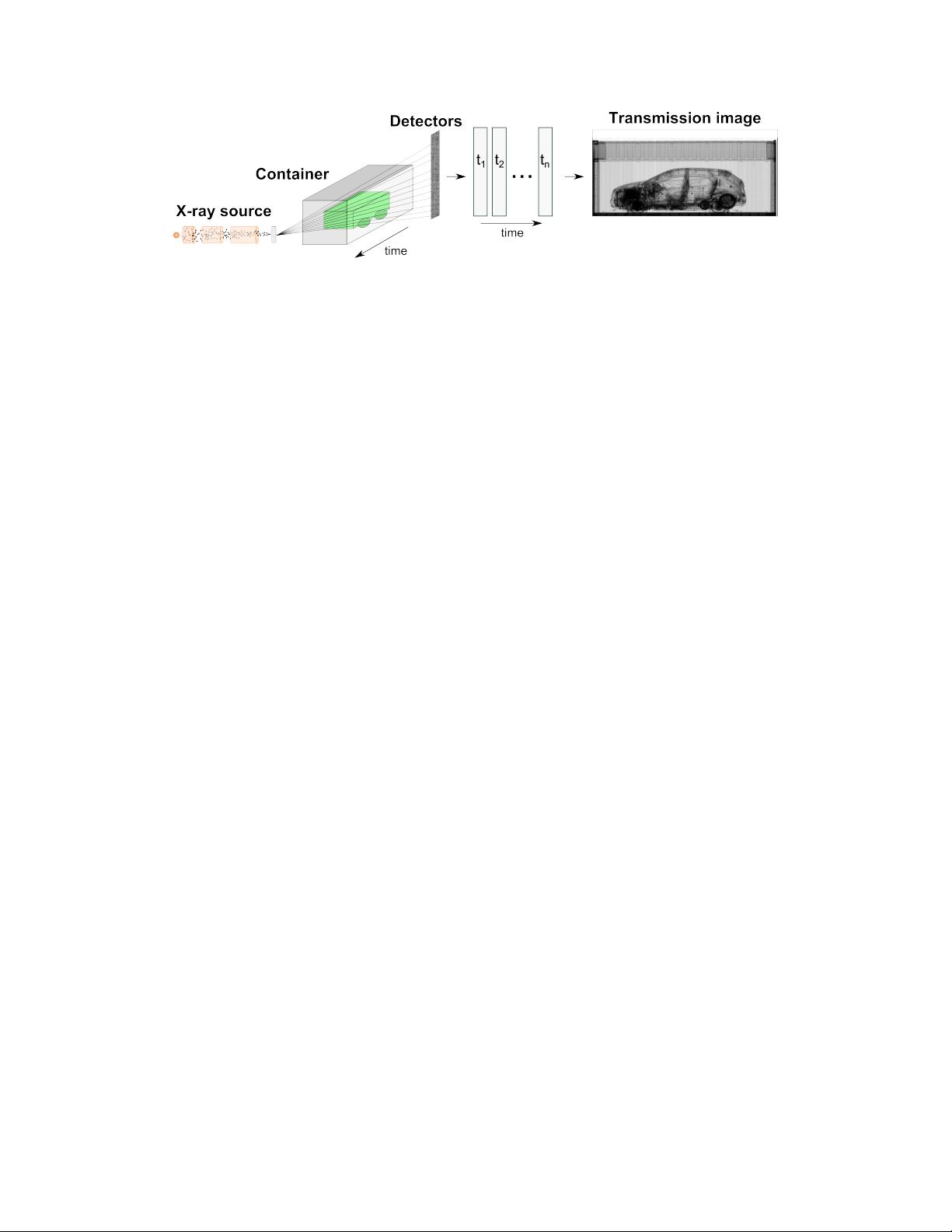

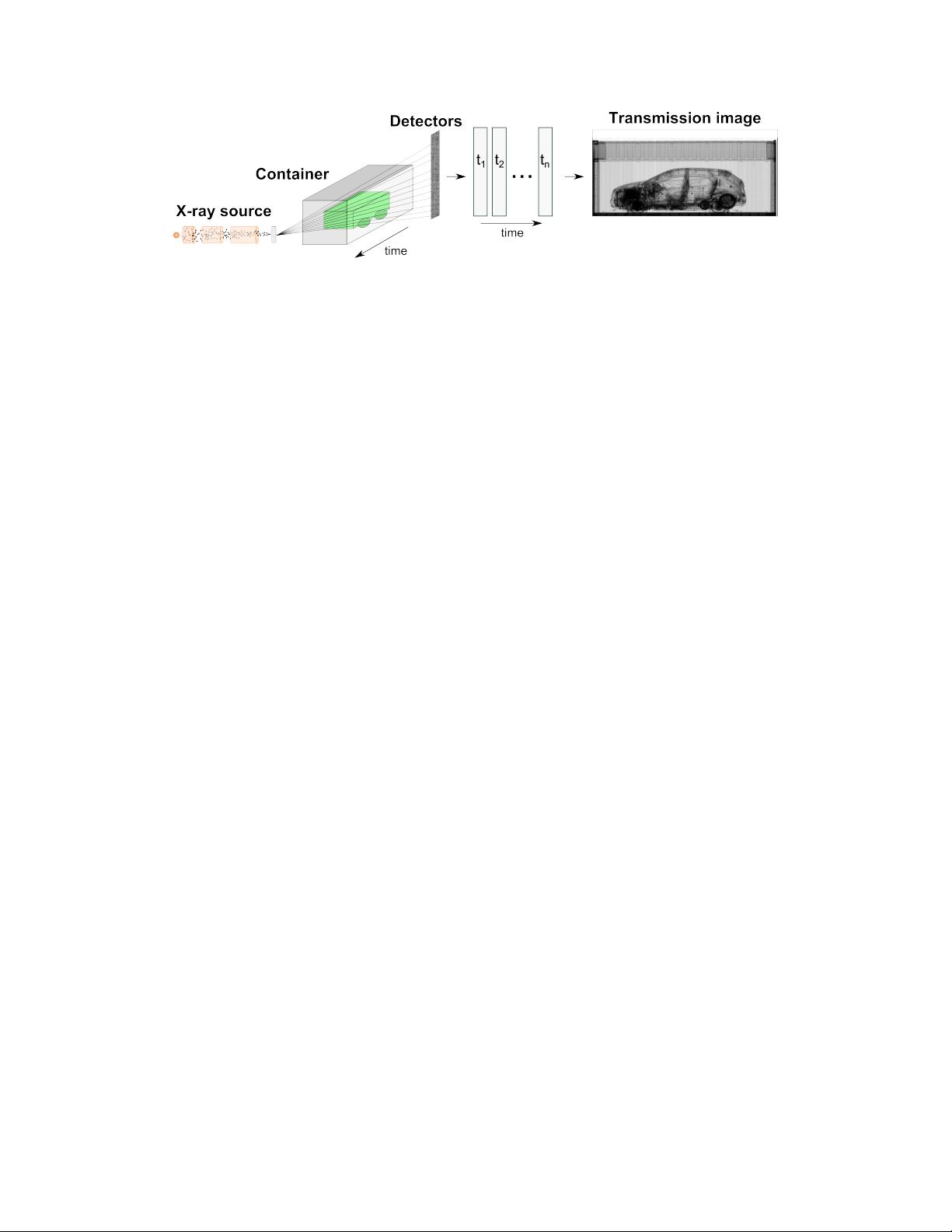

Figure 1: Illustration of the X-ray image formation and acquisition processes. Photons emitted by an X-ray source interact with a container and its content, leading to a signal attenuation measured by detectors placed behind the container. By moving the container or the detector, attenuations are determined spatially and mapped to pixel values to produce an X-ray transmission image.

is in part made possible by the relatively constrained process of baggage scanning: scene dimensions and complexity are both bounded by the small dimensions of a bag. Multi-view (potentially volumetric), multi-energy, and high-resolution imaging enables discrimination between threats and legitimate objects, the latter being largely the same from one bag to another.

In contrast, the detection of threats and anomalies in X-ray cargo imagery is significantly more challenging. Scenes tend to be very large and complex, with few constraints on the arrangement and packing of goods. Scanning is usually limited to a single view, and the spatial resolution is much lower than in baggage imaging, making it especially difficult to resolve and locate small anomalous objects. Moreover, a very high fraction of items packed in baggage are well-cataloged (e.g. clothing), whereas potentially anything can be transported in a container, making it impractical to learn the appearance of frequent legitimate objects to facilitate the detection of threats. For these reasons, the performance reported for cargo imagery is usually low.

Zhang et al. [15] built a so-called “joint shape and texture model” of X-ray cargo images based on BoW extracted from superpixel regions. Using this model, images were classified into 22 categories depending on their content (e.g. car parts, paper, plywood). The results highlighted the challenges associated with X-ray cargo image classification, with only 51% of images being assigned to the correct category. In another effort to develop an automated method for the verification of cargo content in X-ray images, Tuszynski et al. [5] developed models based on the log-intensity histograms of images categorized into 92 high-level HS-codes (Harmonized Commodity Description and Coding System). A city block (L1) distance was used to determine how much a new image deviates from the training examples for the declared HS-code. Using this approach, 31% of images were associated with the correct category, while in 65% of cases the correct category was amongst the five closest matching models.
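
The histogram-matching idea can be illustrated with a minimal NumPy sketch. It is not the implementation of [5]: it assumes transmission intensities normalised to (0, 1], a hypothetical 64-bin histogram, and a per-category model defined as the mean histogram of that category's training images. The category labels `code_A`/`code_B`, the synthetic images and the deviation threshold are likewise hypothetical.

```python
import numpy as np

EPS = 1e-6  # floor applied before taking the logarithm (assumption)

def log_intensity_histogram(image, bins=64):
    """Normalised histogram of log-intensities.

    Assumes intensities scaled to (0, 1], so log values lie in [log(EPS), 0].
    """
    log_img = np.log(np.clip(image, EPS, 1.0))
    hist, _ = np.histogram(log_img, bins=bins, range=(np.log(EPS), 0.0))
    return hist / hist.sum()

def build_models(images_by_code, bins=64):
    """One model per category: here, the mean histogram of its training images."""
    return {
        code: np.mean([log_intensity_histogram(im, bins) for im in images], axis=0)
        for code, images in images_by_code.items()
    }

def city_block(a, b):
    """City block (L1) distance between two histograms."""
    return float(np.abs(a - b).sum())

def rank_codes(image, models, bins=64):
    """Category labels sorted from closest to farthest model."""
    h = log_intensity_histogram(image, bins)
    return sorted(models, key=lambda code: city_block(h, models[code]))

def deviates_from_declared(image, declared, models, threshold, bins=64):
    """Flag an image whose histogram is far from its declared category's model."""
    h = log_intensity_histogram(image, bins)
    return city_block(h, models[declared]) > threshold

# Synthetic example with two hypothetical categories.
rng = np.random.default_rng(0)
train = {
    "code_A": [rng.uniform(0.5, 1.0, size=(32, 32)) for _ in range(5)],   # light goods
    "code_B": [rng.uniform(0.01, 0.1, size=(32, 32)) for _ in range(5)],  # dense goods
}
models = build_models(train)
test_img = rng.uniform(0.5, 1.0, size=(32, 32))
top5 = rank_codes(test_img, models)[:5]  # a "five closest models" shortlist
print(top5, deviates_from_declared(test_img, "code_B", models, threshold=1.0))
```

Top-1 accuracy corresponds to checking `rank_codes(...)[0]`, and the top-5 figure to checking membership in `rank_codes(...)[:5]`; with only two categories in this toy example the shortlist trivially contains both.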

Since around 20% of cargo containers are shipped empty, it would be of interest to automatically classify images as empty or non-empty in order to facilitate further processing (e.g. avoid processing empty images with object-specific detectors) and to prevent fraud. Rogers et al. [23] described a scheme where small non-overlapping windows were classified by a Random Forest (RF) based on multi-scale oriented Basic Image Features (oBIFs) and intensity moments. In addition, window coordinates were used as features so that the classifier would implicitly learn location-specific appearances. The authors reported that 99.3% of SoC non-empty containers were detected as such for a 0.7% false alarm rate, and that 90% of synthetic images (in which a single object equivalent to 1 L of water was placed) were correctly classified as non-empty for 0.51% false alarms. The same problem was tackled by Andrews and colleagues [24] using an anomaly detection approach: instead of treating empty container verification as a binary classification problem, a “normal” class is defined (either empty or non-empty containers) and new images are scored based on their distance from this “normal” class. Features of markedly down-sampled images (32 × 9 pixels) were extracted from the hidden layers of an auto-encoder and classified by a one-class SVM, achieving 99.2% accuracy when empty containers were chosen as the “normal” class and non-empty instances were considered anomalies.
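
The “normal class” formulation can be sketched in NumPy as follows. This is a deliberately simplified stand-in, not the method of [24]: instead of auto-encoder features and a one-class SVM, each image is block-averaged onto a coarse grid and scored by its standardised distance from the per-cell mean of the normal (here, empty) class. The 9 × 32 grid orientation, the synthetic container images and the threshold of 3.0 are assumptions for illustration only.

```python
import numpy as np

# Assumed coarse grid: 9 rows x 32 columns (orientation is an assumption).
GRID = (9, 32)

def downsample(image, grid=GRID):
    """Block-average a 2-D image onto a coarse grid.

    Assumes the image height and width are multiples of the grid size.
    """
    rows, cols = grid
    h, w = image.shape
    return image.reshape(rows, h // rows, cols, w // cols).mean(axis=(1, 3))

def fit_normal_model(normal_images):
    """Per-cell mean and spread of down-sampled images from the normal class."""
    feats = np.stack([downsample(im).ravel() for im in normal_images])
    return feats.mean(axis=0), feats.std(axis=0) + 1e-6

def anomaly_score(image, model):
    """Root-mean-square standardised distance from the normal-class mean."""
    mean, spread = model
    z = (downsample(image).ravel() - mean) / spread
    return float(np.sqrt(np.mean(z ** 2)))

def is_anomalous(image, model, threshold=3.0):
    # Hypothetical operating point; in practice it would be tuned on
    # validation data for a target false-alarm rate.
    return anomaly_score(image, model) > threshold

# Synthetic example: "empty" containers are uniformly bright with sensor noise.
rng = np.random.default_rng(0)

def synthetic_empty(shape=(90, 320)):
    return 0.9 + rng.normal(0.0, 0.02, size=shape)

model = fit_normal_model([synthetic_empty() for _ in range(20)])

empty_test = synthetic_empty()
loaded_test = synthetic_empty()
loaded_test[20:70, 100:200] = 0.3  # a dense block of cargo

print(is_anomalous(empty_test, model), is_anomalous(loaded_test, model))
```

Only normal examples are needed to fit the model, so no labelled anomalies are required; choosing non-empty containers as the normal class instead would only change which images are passed to `fit_normal_model`.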

Representation learning is an alternative to classification based on hand-designed features, whereby the image features that optimise classification are learned during training. CNNs, often referred to as deep learning, are representation-learning methods [25] that were recently shown to significantly
