Language Models are Unsupervised Multitask Learners

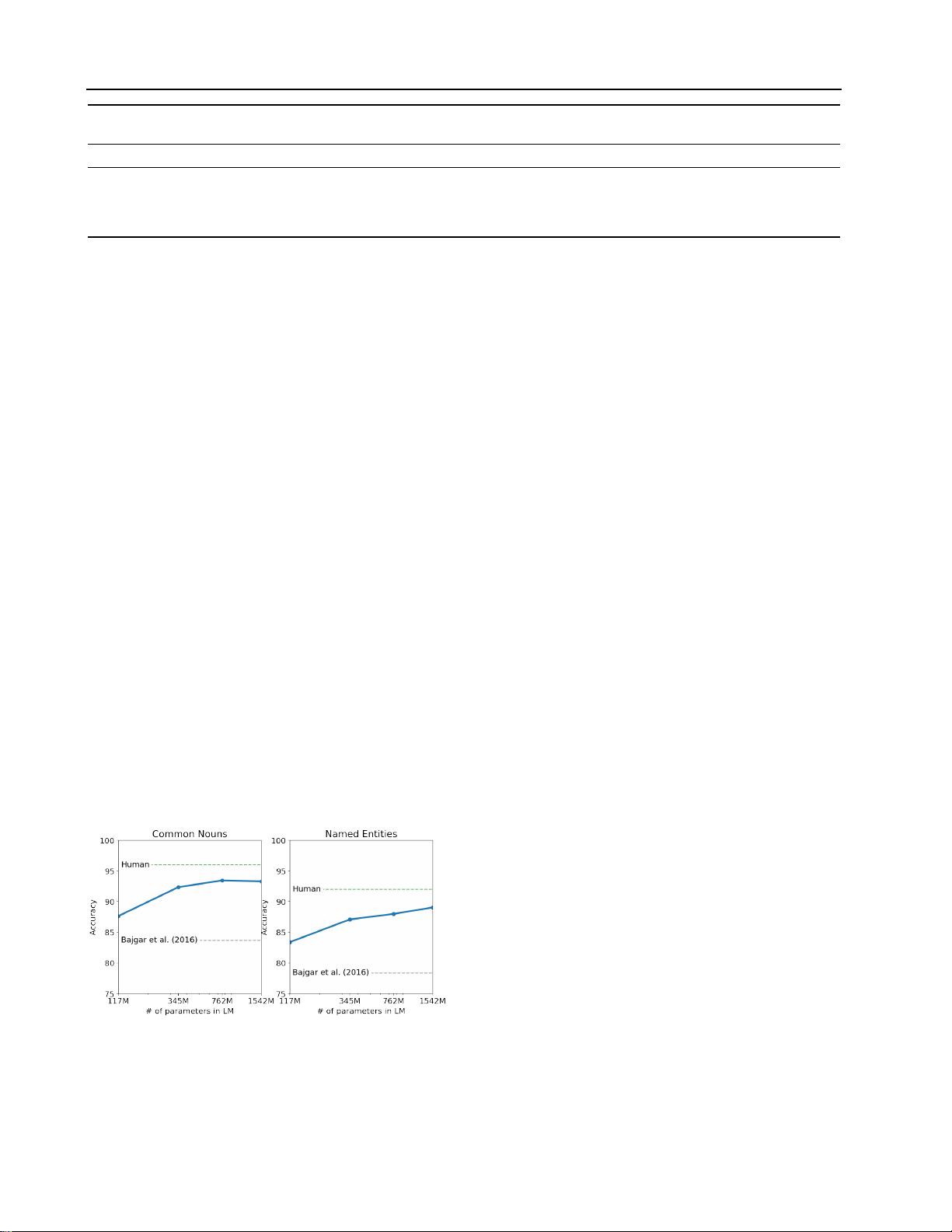

| | LAMBADA (PPL) | LAMBADA (ACC) | CBT-CN (ACC) | CBT-NE (ACC) | WikiText2 (PPL) | PTB (PPL) | enwik8 (BPB) | text8 (BPC) | WikiText103 (PPL) | 1BW (PPL) |
|---|---|---|---|---|---|---|---|---|---|---|
| SOTA | 99.8 | 59.23 | 85.7 | 82.3 | 39.14 | 46.54 | 0.99 | 1.08 | 18.3 | 21.8 |
| 117M | 35.13 | 45.99 | 87.65 | 83.4 | 29.41 | 65.85 | 1.16 | 1.17 | 37.50 | 75.20 |
| 345M | 15.60 | 55.48 | 92.35 | 87.1 | 22.76 | 47.33 | 1.01 | 1.06 | 26.37 | 55.72 |
| 762M | 10.87 | 60.12 | 93.45 | 88.0 | 19.93 | 40.31 | 0.97 | 1.02 | 22.05 | 44.575 |
| 1542M | 8.63 | 63.24 | 93.30 | 89.05 | 18.34 | 35.76 | 0.93 | 0.98 | 17.48 | 42.16 |

Table 3. Zero-shot results on many datasets. No training or fine-tuning was performed for any of these results. PTB and WikiText-2 results are from (Gong et al., 2018). CBT results are from (Bajgar et al., 2016). The LAMBADA accuracy result is from (Hoang et al., 2018) and the LAMBADA perplexity result is from (Grave et al., 2016). Other results are from (Dai et al., 2019).

<UNK> which is extremely rare in WebText, occurring only 26 times in 40 billion bytes. We report our main results in Table 3 using invertible de-tokenizers which remove as many of these tokenization / pre-processing artifacts as possible. Since these de-tokenizers are invertible, we can still calculate the log probability of a dataset, and they can be thought of as a simple form of domain adaptation. We observe gains of 2.5 to 5 perplexity for GPT-2 with these de-tokenizers.
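
The role of invertibility can be illustrated with a minimal sketch. The rewrite rules and the function names `detokenize`, `retokenize`, and `perplexity` below are hypothetical and are not the de-tokenizers used for the reported results. The sketch only shows that a reversible rewrite can be undone exactly, so the probability an LM assigns to the rewritten text can still be attributed to the original dataset. Normalizing by the original benchmark token count is an assumption made here so that the numbers stay comparable.

```python
import math

# Hypothetical rules for illustration only (not the paper's de-tokenizers).
# Each pair maps a tokenization artifact to its natural-text form.
RULES = [(" n't", "n't"), (" 's", "'s"), (" ,", ","), (" .", ".")]


def detokenize(text: str) -> str:
    """Rewrite benchmark-style tokenized text into more natural text."""
    for artifact, natural in RULES:
        text = text.replace(artifact, natural)
    return text


def retokenize(text: str) -> str:
    """Undo detokenize by applying the rules backwards, in reverse order.

    This is an exact inverse only for text that was consistently tokenized
    with these artifacts, which is an assumption of this sketch.
    """
    for artifact, natural in reversed(RULES):
        text = text.replace(natural, artifact)
    return text


def perplexity(token_log_probs, num_original_tokens: int) -> float:
    """exp(-total log-likelihood / number of ORIGINAL benchmark tokens)."""
    total_log_prob = sum(token_log_probs)
    return math.exp(-total_log_prob / num_original_tokens)


original = "they do n't know , she said ."
natural = detokenize(original)

# The round trip recovers the benchmark text exactly.
assert retokenize(natural) == original

# Normalize by the benchmark's own token count, not the LM's token count.
num_original_tokens = len(original.split())
```

A model would score `natural` with its own tokenization, and the summed natural-log probabilities of those tokens would be passed to `perplexity` together with `num_original_tokens`.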

WebText LMs transfer well across domains and datasets, improving the state of the art on 7 out of the 8 datasets in a zero-shot setting. Large improvements are noticed on small datasets such as Penn Treebank and WikiText-2 which have only 1 to 2 million training tokens. Large improvements are also noticed on datasets created to measure long-term dependencies like LAMBADA (Paperno et al., 2016) and the Children’s Book Test (Hill et al., 2015). Our model is still significantly worse than prior work on the One Billion Word Benchmark (Chelba et al., 2013). This is likely due to a combination of it being both the largest dataset and having some of the most destructive pre-processing: 1BW’s sentence-level shuffling removes all long-range structure.

3.2. Children’s Book Test

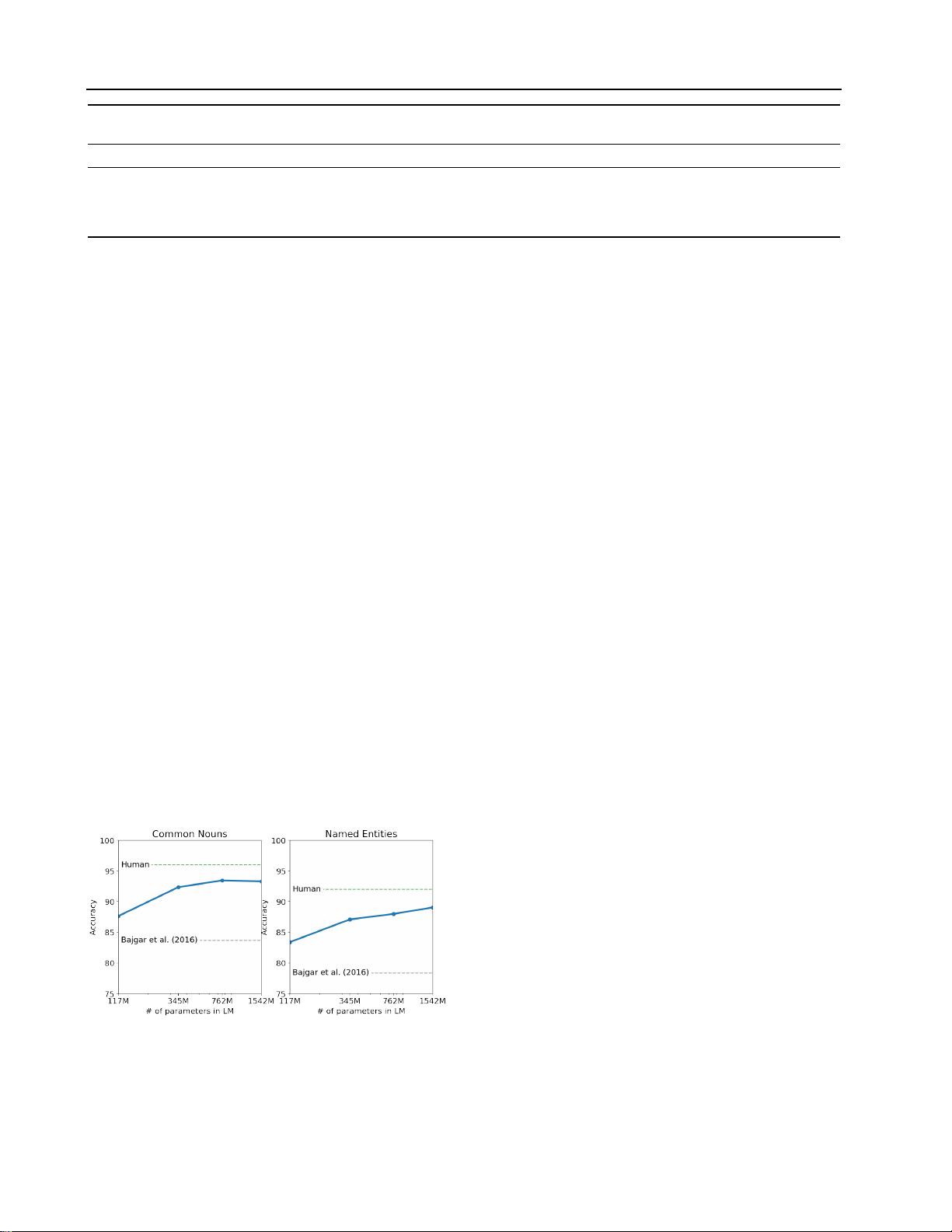

Figure 2. Performance on the Children’s Book Test as a function of model capacity. Human performance figures are from Bajgar et al. (2016), instead of the much lower estimates from the original paper.

The Children’s Book Test (CBT) (Hill et al., 2015) was created to examine the performance of LMs on different categories of words: named entities, nouns, verbs, and prepositions. Rather than reporting perplexity as an evaluation metric, CBT reports accuracy on an automatically constructed cloze test where the task is to predict which of 10 possible choices for an omitted word is correct. Following the LM approach introduced in the original paper, we compute the probability of each choice and the rest of the sentence conditioned on this choice according to the LM, and predict the one with the highest probability. As seen in Figure 2, performance steadily improves as model size is increased and closes the majority of the gap to human performance on this test. Data overlap analysis showed that one of the CBT test set books, The Jungle Book by Rudyard Kipling, is in WebText, so we report results on the validation set, which has no significant overlap. GPT-2 achieves new state of the art results of 93.3% on common nouns and 89.1% on named entities. A de-tokenizer was applied to remove PTB style tokenization artifacts from CBT.
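
The scoring rule described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the evaluation code behind the reported numbers: the `log_prob(prefix, continuation)` interface, the `XXXXX` blank marker, and the toy bigram scorer are all hypothetical stand-ins for a real LM and real CBT examples.

```python
import math
from typing import Callable, Sequence

# Assumed interface: returns log p(continuation | prefix) under some LM.
LogProbFn = Callable[[Sequence[str], Sequence[str]], float]


def predict_cloze(context: Sequence[str], query: Sequence[str],
                  choices: Sequence[str], log_prob: LogProbFn,
                  blank: str = "XXXXX") -> str:
    """Pick the choice maximizing p(choice, rest of sentence | prefix)."""
    i = list(query).index(blank)
    prefix = list(context) + list(query[:i])
    rest = list(query[i + 1:])
    # Score each candidate jointly with the remainder of the sentence.
    return max(choices, key=lambda c: log_prob(prefix, [c] + rest))


def toy_log_prob(prefix: Sequence[str], continuation: Sequence[str]) -> float:
    """Stand-in scorer with hand-picked bigram preferences; NOT a real LM."""
    liked = {("the", "wolf"), ("wolf", "howled"), ("the", "boy")}
    total = 0.0
    prev = prefix[-1] if prefix else "<s>"
    for tok in continuation:
        total += math.log(0.5 if (prev, tok) in liked else 0.05)
        prev = tok
    return total


context = "a sound came from the hills".split()
query = "then the XXXXX howled".split()
choices = ["boy", "wolf", "tree"]

prediction = predict_cloze(context, query, choices, toy_log_prob)
print(prediction)
```

In this toy setup "boy" and "wolf" are equally likely right after "the", and only the rest of the sentence ("howled") separates them, which is why the remainder is scored together with the candidate.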

3.3. LAMBADA

The LAMBADA dataset (Paperno et al., 2016) tests the ability of systems to model long-range dependencies in text. The task is to predict the final word of sentences which require at least 50 tokens of context for a human to successfully predict. GPT-2 improves the state of the art from 99.8 (Grave et al., 2016) to 8.6 perplexity and increases the accuracy of LMs on this test from 19% (Dehghani et al., 2018) to 52.66%. Investigating GPT-2’s errors showed that most predictions are valid continuations of the sentence, but are not valid final words. This suggests that the LM is not using the additional useful constraint that the word must be the final word of the sentence. Adding a stop-word filter as an approximation to this further increases accuracy to 63.24%, improving the overall state of the art on this task by 4%. The previous state of the art (Hoang et al., 2018) used a different restricted prediction setting where the outputs of the model were constrained to only words that appeared in the context. For GPT-2, this restriction is harmful rather than helpful
