A Category-Level 3-D Object Dataset: Putting the Kinect to Work

Allison Janoch, Sergey Karayev, Yangqing Jia, Jonathan T. Barron, Mario Fritz, Kate Saenko, Trevor Darrell

UC Berkeley and Max Planck Institute for Informatics

{allie, sergeyk, jiayq, barron, saenko, trevor}@eecs.berkeley.edu, mfritz@mpi-inf.mpg.de

Abstract

The recent proliferation of a cheap but high-quality depth sensor, the Microsoft Kinect, has brought the need for a challenging category-level 3D object detection dataset to the fore. We review current 3D datasets and find them lacking in variation of scenes, categories, instances, and viewpoints. Here we present our dataset of color and depth image pairs, gathered in real domestic and office environments. It currently includes over 50 classes, with more images added continuously by a crowd-sourced collection effort. We establish baseline performance in a PASCAL VOC-style detection task, and suggest two ways that the inferred world size of an object may be used to improve detection. The dataset and annotations can be downloaded at http://www.kinectdata.com.

1. Introduction

Recently, there has been a resurgence of interest in available 3-D sensing techniques due to advances in active depth sensing, including techniques based on LIDAR, time-of-flight (Canesta), and projected texture stereo (PR2). The Primesense sensor used on the Microsoft Kinect gaming interface offers a particularly attractive set of capabilities, and is quite likely the most common depth sensor available worldwide due to its rapid market acceptance (8 million Kinects were sold in just the first two months).

While there is a large literature on instance recogni-

tion using 3-D scans in the computer vision and robotics

literatures, there are surprisingly few existing datasets for

category-level 3-D recognition, or for recognition in clut-

tered indoor scenes, despite the obvious importance of this

application to both communities. As reviewed below, pub-

lished 3-D datasets have been limited to instance tasks, or

to a very small numbers of categories. We have collected

and describe here the initial bulk of the Berkeley 3-D Ob-

ject dataset (B3DO), an ongoing collection effort using the

Kinect sensor in domestic environments. The dataset al-

ready has an order of magnitude more variation than previ-

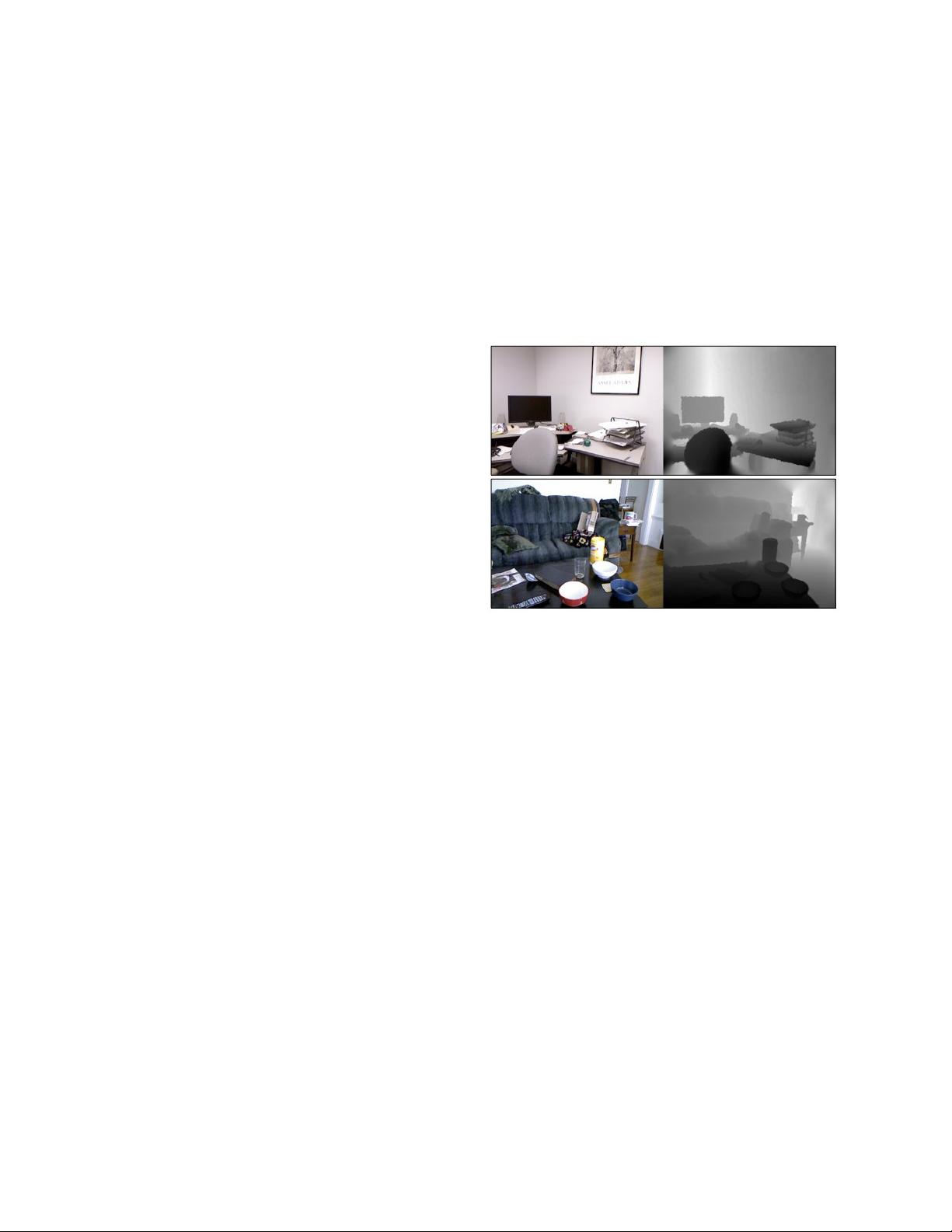

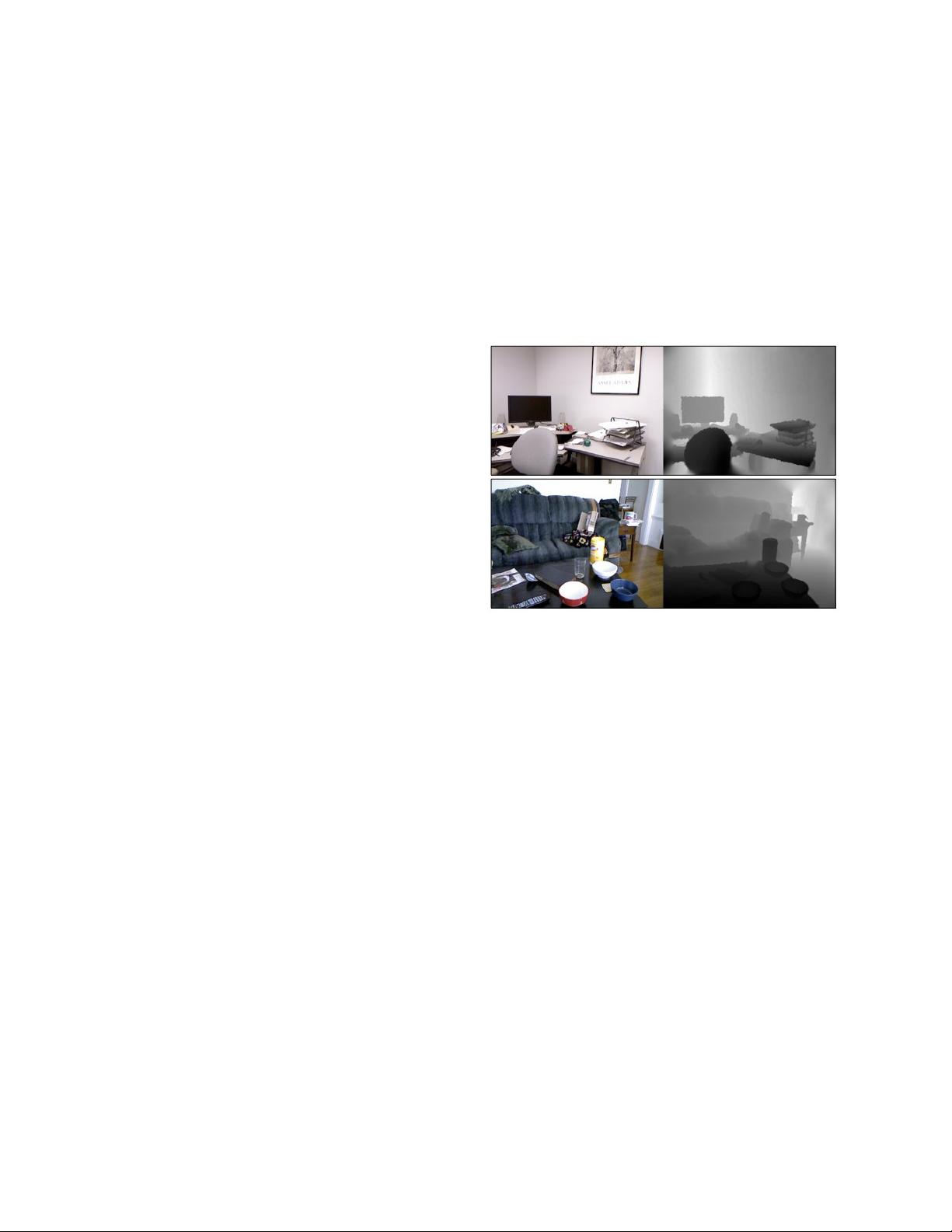

Figure 1. Two scenes typical of our dataset.

ously published datasets. The latest version of the dataset is

available at http://www.kinectdata.com

As with existing 2-D challenge datasets, our dataset has considerable variation in pose and object size. An important observation our dataset enables is that the actual world size distribution of objects has less variance than the image-projected, apparent size distribution. We report the statistics of these and other quantities for categories in our dataset.
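To illustrate the distinction between apparent and world size, the following is a minimal sketch of how a metric size could be inferred from a bounding box and its depth values under a simple pinhole camera model. It is not taken from the paper: the function name `world_size`, the use of the median depth inside the box, and the focal length value are all assumptions made for illustration.

```python
import numpy as np

def world_size(bbox, depth_m, focal_px):
    """Approximate metric (width, height) of a bounding box.

    Assumes a pinhole camera: size_m = size_px * depth / focal_length.
    bbox is (x0, y0, x1, y1) in pixels; depth_m is an HxW array in meters,
    where non-positive or non-finite values mark missing depth readings.
    """
    x0, y0, x1, y1 = bbox
    patch = depth_m[y0:y1, x0:x1]
    valid = patch[np.isfinite(patch) & (patch > 0)]
    if valid.size == 0:
        return None  # no usable depth inside the box
    z = float(np.median(valid))  # median is robust to background pixels
    return (x1 - x0) * z / focal_px, (y1 - y0) * z / focal_px

# Hypothetical example: a flat scene 2 m away and an assumed focal length.
depth = np.full((480, 640), 2.0)
size = world_size((100, 100, 200, 250), depth, focal_px=500.0)
```

Under this model the same physical object projects to a box three times smaller at 3 m than at 1 m, while its inferred world size stays the same. This is one way to see why world size can vary less than apparent size within a category.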

A key question is what value depth data offers for category-level recognition. It is conventional wisdom that ideal 3-D observations provide strong shape cues for recognition, but in practice even the cleanest 3-D scans may reveal less about an object than available 2-D intensity data. Numerous schemes for defining 3-D features analogous to popular 2-D features for category-level recognition have been proposed and can perform well in uncluttered domains. We apply HOG descriptors to 3-D data and evaluate the benefit of such a scheme on our dataset. We also use our observation about world size distribution to place a size prior on detections, and find that it improves detection as evaluated by average precision and offers a potential benefit for detection efficiency.
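As a rough illustration of what a size prior on detections might look like, the sketch below rescores detections with a Gaussian prior on the logarithm of the inferred world size, and also shows a pruning test that could skip implausible windows before running the detector. This is an assumed formulation, not necessarily the one used in the paper; the names `size_log_prior`, `rescore`, and `plausible`, the Gaussian form, and the `weight` and `k` parameters are hypothetical.

```python
import math

def size_log_prior(size_m, mean_log, std_log):
    """Log-density of a Gaussian over log(world size), per category."""
    z = (math.log(size_m) - mean_log) / std_log
    return -0.5 * z * z - math.log(std_log * math.sqrt(2.0 * math.pi))

def rescore(detections, mean_log, std_log, weight=1.0):
    """Add the weighted size log-prior to each detector score.

    detections is a list of (score, size_m) pairs; the result is sorted
    by the combined score, highest first.
    """
    combined = [
        (score + weight * size_log_prior(size_m, mean_log, std_log), size_m)
        for score, size_m in detections
    ]
    return sorted(combined, reverse=True)

def plausible(size_m, mean_log, std_log, k=3.0):
    """True if the size lies within k standard deviations in log space."""
    return abs(math.log(size_m) - mean_log) <= k * std_log

# Hypothetical category whose instances are typically about 0.3 m across.
mean_log, std_log = math.log(0.3), 0.25
ranked = rescore([(1.0, 0.3), (1.2, 3.0)], mean_log, std_log)
```

In this toy example the second detection has the higher raw score but an implausible 3 m size, so it drops below the first after rescoring. A test like `plausible` hints at the efficiency benefit: windows with unlikely world sizes could be discarded without evaluating the detector on them.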